Ho Ho Ho, Alex here! (a real human writing these words, this needs to be said in 2025)

Merry Christmas (to those who celebrate) and welcome to the very special yearly ThursdAI recap! This was an intense year in the world of AI, and after 51 weekly episodes (this is episode 52!) we have the ultimate record of all the major and most important AI releases of this year!

So instead of bringing you a weekly update (it’s been a slow week so far, most AI labs are taking a well-deserved break, and the Chinese AI labs haven’t yet surprised anyone), I’m dropping a comprehensive yearly AI review! Quarter by quarter, month by month, both in written form and as a pod/video!

Why do this? Who even needs this? Isn’t most of it obsolete? I asked myself this exact question while prepping for the show (it was quite a lot of prep, even with Opus’s help). I eventually landed on, hey, if nothing else, this will serve as a record of the insane year of AI progress we all witnessed. Can you imagine that the term Vibe Coding is less than 1 year old? That Claude Code was released at the start of THIS year?

We hedonically adapt to new AI goodies so quickly, and I figured this will serve as a point-in-time check that we can come back to and feel the acceleration!

With that, let’s dive in! P.S. The content below is mostly authored by my co-author for this, Opus 4.5 high, which at the end of 2025 I find to be the best creative writer, with the best long-context coherence, and one that can imitate my voice and tone (hey, I’m also on a break! 🎅)

“Open source AI has never been as hot as this quarter. We’re accelerating as f*ck, and it’s only just beginning—hold on to your butts.” — Alex Volkov, ThursdAI Q1 2025

🏆 The Big Picture — 2025 - The Year the AI Agents Became Real

Looking back at 51 episodes and 12 months of relentless AI progress, several mega-themes emerged:

1. 🧠 Reasoning Models Changed Everything

From DeepSeek R1 in January to GPT-5.2 in December, reasoning became the defining capability. Models now think for hours, call tools mid-thought, and post perfect scores on math olympiads.

2. 🤖 2025 Was Actually the Year of Agents

We said it in January, and it came true. Claude Code launched the CLI revolution, MCP became the universal protocol, and by December we had ChatGPT Apps, Atlas browser, and AgentKit.

3. 🇨🇳 Chinese Labs Dominated Open Source

DeepSeek, Qwen, MiniMax, Kimi, ByteDance — despite chip restrictions, Chinese labs released the best open weights models all year. Qwen 3, Kimi K2, DeepSeek V3.2 were defining releases.

4. 🎬 We Crossed the Uncanny Valley

VEO3’s native audio, Suno V5’s indistinguishable music, Sora 2’s social platform — 2025 was the year AI-generated media became indistinguishable from human-created content.

5. 💰 The Investment Scale Became Absurd

$500B Stargate, $1.4T compute obligations, $183B valuations, $100-300M researcher packages, LLMs training in space. The numbers stopped making sense.

6. 🏆 Google Made a Comeback

After years of “catching up,” Google delivered Gemini 3, Antigravity, Nano Banana Pro, VEO3, and took the #1 spot (briefly). Don’t bet against Google.

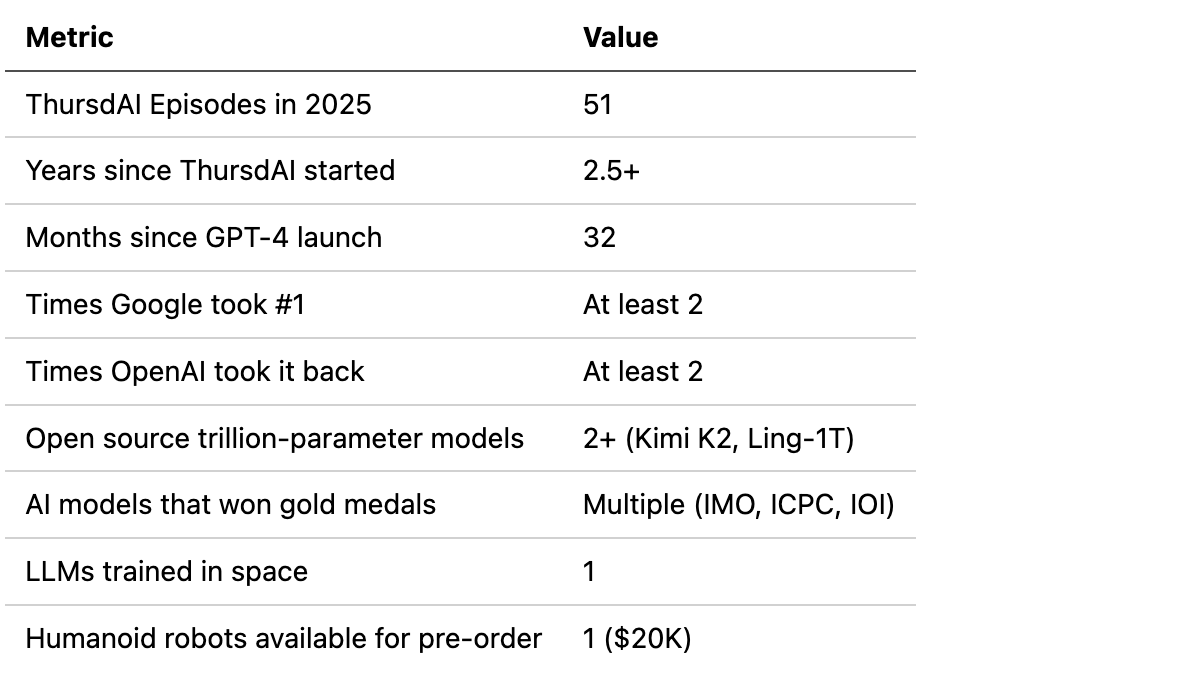

By the Numbers

Q1 2025 — The Quarter That Changed Everything

DeepSeek R1 crashed NVIDIA’s stock, reasoning models went mainstream, and Chinese labs took over open source. The quarter that proved AI isn’t slowing down—it’s just getting started.

Key Themes:

🧠 Reasoning models went mainstream (DeepSeek R1, o1, QwQ)

🇨🇳 Chinese labs dominated open source (DeepSeek, Alibaba, MiniMax, ByteDance)

🤖 2025 declared “The Year of Agents” (OpenAI Operator, MCP won)

🖼️ Image generation revolution (GPT-4o native image gen, Ghibli-mania)

💰 Massive infrastructure investment (Project Stargate $500B)

January — DeepSeek Shakes the World

(Jan 02 | Jan 10 | Jan 17 | Jan 24 | Jan 30)

The earthquake that shattered the AI bubble. DeepSeek R1 dropped on January 23rd and became the most impactful open source release ever:

Crashed NVIDIA stock 17% — $560B loss, largest single-company monetary loss in history

Hit #1 on the iOS App Store

Cost allegedly only $5.5M to train (sparking massive debate)

Matched OpenAI’s o1 on reasoning benchmarks at 50x cheaper pricing

The distilled 1.5B model beat GPT-4o and Claude 3.5 Sonnet on math benchmarks 🤯

“My mom knows about DeepSeek—your grandma probably knows about it, too” — Alex Volkov

Also this month:

OpenAI Operator — First agentic ChatGPT (browser control, booking, ordering)

Project Stargate — $500B AI infrastructure (Manhattan Project for AI)

NVIDIA Project Digits — $3,000 desktop that runs 200B parameter models

Kokoro TTS — 82M param model hit #1 on TTS Arena, Apache 2, runs in browser

MiniMax-01 — 4M context window from Hailuo

Gemini Flash Thinking — 1M token context with thinking traces

February — Reasoning Mania & The Birth of Vibe Coding

(Feb 07 | Feb 13 | Feb 20 | Feb 28)

The month that redefined how we work with AI.

OpenAI Deep Research (Feb 6) — An agentic research tool that scored 26.6% on Humanity’s Last Exam (vs 10% for o1/R1). Dr. Derya Unutmaz called it “a phenomenal 25-page patent application that would’ve cost $10,000+.”

Claude 3.7 Sonnet & Claude Code (Feb 24-27) — Anthropic’s coding beast hit 70% on SWE-Bench with 8x more output (64K tokens). Claude Code launched as Anthropic’s agentic coding tool — marking the start of the CLI agent revolution.

“Claude Code is just exactly in the right stack, right around the right location... You can do anything you want with a computer through the terminal.” — Yam Peleg

GPT-4.5 (Orion) (Feb 27) — OpenAI’s largest model ever (rumored 10T+ parameters). 62.5% on SimpleQA, foundation for future reasoning models.

Grok 3 (Feb 20) — xAI enters the arena with 1M token context and “free until GPUs melt.”

Andrej Karpathy coins “Vibe Coding” (Feb 2) — The 5.2M view tweet that captured a paradigm shift: developers describe what they want, AI handles implementation.

OpenAI Roadmap Revelation (Feb 13) — Sam Altman announced GPT-4.5 will be the last non-chain-of-thought model. GPT-5 will unify everything.

March — Google’s Revenge & The Ghibli Explosion

(Mar 06 | Mar 13 | Mar 20 | Mar 27)

Gemini 2.5 Pro Takes #1 (Mar 27) — Google reclaimed the LLM crown with AIME jumping nearly 20 points, 1M context, “thinking” integrated into the core model.

GPT-4o Native Image Gen — Ghibli-mania (Mar 27) — The internet lost its collective mind and turned everything into Studio Ghibli. Auto-regressive image gen with perfect text rendering, incredible prompt adherence.

“The internet lost its collective mind and turned everything into Studio Ghibli” — Alex Volkov

MCP Won (Mar 27) — OpenAI officially adopted Anthropic’s Model Context Protocol. No VHS vs Betamax situation. Tools work across Claude AND GPT.

DeepSeek V3 685B — AIME jumped from 39.6% → 59.4%, MIT licensed, best non-reasoning open model.

ThursdAI Turns 2! (Mar 13) — Two years since the first episode about GPT-4.

Open Source Highlights:

Gemma 3 (1B-27B) — 128K context, multimodal, 140+ languages, single GPU

QwQ-32B — Qwen’s reasoning model matches R1, runs on Mac

Mistral Small 3.1 — 24B, beats Gemma 3, Apache 2

Qwen2.5-Omni-7B — End-to-end multimodal with speech output

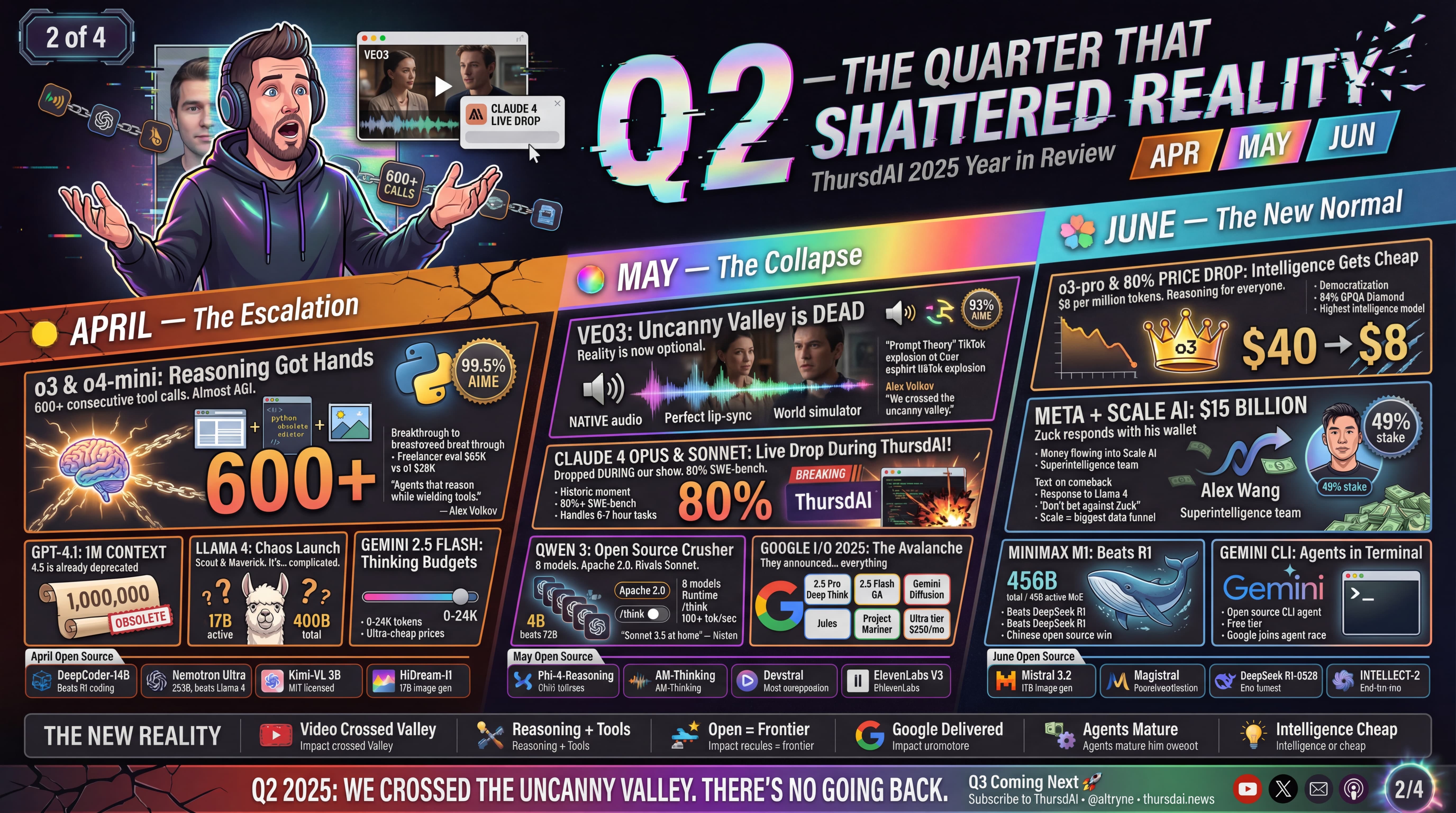

Q2 2025 — The Quarter That Shattered Reality

VEO3 crossed the uncanny valley, Claude 4 arrived with 80% SWE-bench, and Qwen 3 proved open source can match frontier models. The quarter we stopped being able to tell what’s real.

Key Themes:

🎬 Video AI crossed the uncanny valley (VEO3 with native audio)

🧠 Tool-using reasoning models emerged (o3 calling tools mid-thought)

🇨🇳 Open source matched frontier (Qwen 3, Claude 4)

📺 Google I/O delivered everything

💸 AI’s economic impact accelerated ($300B valuations, 80% price drops)

April — Tool-Using Reasoners & Llama Chaos

(Apr 03 | Apr 10 | Apr 17 | Apr 24)

OpenAI o3 & o4-mini (Apr 17) — The most important reasoning upgrade ever. For the first time, o-series models can use tools during reasoning: web search, Python, image gen. Chain 600+ consecutive tool calls. Manipulate images mid-thought.

“This is almost AGI territory — agents that reason while wielding tools” — Alex Volkov

GPT-4.1 Family (Apr 14) — 1 million token context across all models. Near-perfect recall. GPT-4.5 deprecated.

Meta Llama 4 (Apr 5) — Scout (17B active/109B total) & Maverick (17B active/400B total). LMArena drama (tested model ≠ released model). Community criticism. Behemoth teased but never released.
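To put those active/total figures in perspective, here is a minimal Python sketch that works out what share of each model's parameters is active per token. The helper name `active_fraction` is made up for illustration, and the only inputs are the parameter counts quoted above.

```python
def active_fraction(active_b: float, total_b: float) -> float:
    """Return active parameters as a fraction of total parameters (both in billions)."""
    if total_b <= 0:
        raise ValueError("total_b must be positive")
    return active_b / total_b


# Parameter counts as quoted in the recap: (active, total), in billions.
llama4_models = {
    "Scout": (17, 109),
    "Maverick": (17, 400),
}

for name, (active, total) in llama4_models.items():
    share = active_fraction(active, total)
    print(f"{name}: {share:.1%} of parameters active per token")
```

Both models run the same 17B active parameters, so Maverick's much larger total mostly buys capacity rather than per-token compute.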

Gemini 2.5 Flash (Apr 17) — Set “thinking budget” per API call. Ultra-cheap at $0.15/$0.60 per 1M tokens.

ThursdAI 100th Episode! 🎉

May — VEO3 Crosses the Uncanny Valley & Claude 4 Arrives

(May 01 | May 09 | May 16 | May 23 | May 29)

VEO3 — The Undisputed Star of Google I/O (May 20) — Native multimodal audio generation (speech, SFX, music synced perfectly). Perfect lip-sync. Characters understand who’s speaking. Spawned viral “Prompt Theory” phenomenon.

“VEO3 isn’t just video generation — it’s a world simulator. We crossed the uncanny valley this quarter.” — Alex Volkov

Claude 4 Opus & Sonnet — Live Drop During ThursdAI! (May 22) — Anthropic crashed the party mid-show. First models to cross 80% on SWE-bench. Handles 6-7 hour human tasks. Hybrid reasoning + instant response modes.

Qwen 3 (May 1) — The most comprehensive open source release ever: 8 models, Apache 2.0. Runtime /think toggle for chain-of-thought. 4B dense beats Qwen 2.5-72B on multiple benchmarks. 36T training tokens, 119 languages.

“The 30B MoE is ‘Sonnet 3.5 at home’ — 100+ tokens/sec on MacBooks” — Nisten

Google I/O Avalanche:

Gemini 2.5 Pro Deep Think (84% MMMU)

Jules (free async coding agent)

Project Mariner (browser control via API)

Gemini Ultra tier ($250/mo)

June — The New Normal

(Jun 06 | Jun 13 | Jun 20 | Jun 26)

o3 Price Drop 80% (Jun 12) — From $10/$40 → $2/$8 per million tokens (input/output). o3-pro launched at 87% cheaper than o1-pro.
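For anyone who wants to sanity-check a price cut like this one, a quick Python sketch follows. It takes the old and new per-million-token prices listed above and computes the percentage reduction for each pair. The function name `percent_drop` is just an illustrative helper.

```python
def percent_drop(old_price: float, new_price: float) -> float:
    """Percentage reduction going from old_price to new_price."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (old_price - new_price) * 100 / old_price


# (old, new) o3 prices in USD per million tokens, as listed in the recap.
o3_price_changes = [(10.0, 2.0), (40.0, 8.0)]

for old, new in o3_price_changes:
    print(f"${old:g} -> ${new:g}: {percent_drop(old, new):.0f}% cheaper")
```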

Meta’s $15B Scale AI Power Play (Jun 12) — 49% stake in Scale AI. Alex Wang leads new “Superintelligence team” at Meta. Seven-to-nine-figure comp packages for researchers.

MiniMax M1 — Reasoning MoE That Beats R1 (Jun 19) — 456B total / 45B active parameters. Full weights on Hugging Face.

Gemini CLI (Jun 26) — Google’s open source terminal agent brings Gemini 2.5 Pro to your command line.

Flux Kontext — SOTA image editing with character consistency.

Q3 2025 — The Quarter of GPT-5 & Trillion-Parameter Open Source

GPT-5 arrived after 32 months. Open source hit trillion-parameter scale. World models became playable. Chinese labs continued their dominance.

Key Themes:

👑 GPT-5 Era began (unified reasoning + chat)

🇨🇳 Open source hit trillion-scale (Kimi K2, Qwen3-Coder)

🌍 World models became playable (Google Genie-3)

🎥 Video reached “can’t tell” quality

💰 Unprecedented investment ($100B pledges, $183B valuations)

July — Trillion-Parameter Open Source Arrives

(Jul 03 | Jul 11 | Jul 17 | Jul 24)

Kimi K2 — The Trillion-Parameter King (Jul 17) — Moonshot dropped a 1 trillion parameter MoE model: 65.8% on SWE-bench Verified (beating Claude Sonnet without reasoning), 32B active parameters, 128K context, Modified MIT license.

“This isn’t just another model release. This is ‘Sonnet at home’ if you have the hardware.” — Alex Volkov

Grok-4 & Grok Heavy (Jul 10) — 50% on Humanity’s Last Exam with tools. 100% on AIME25. xAI finally became a serious contender.

ChatGPT Agent (Odyssey) (Jul 17) — Unified agentic AI: browser + terminal + research. 41.6% on HLE (double o3).

Chinese Open Source Explosion:

Baidu ERNIE 4.5 (10 models, Apache 2.0)

Tencent Hunyuan-A13B (80B MoE, 256K context)

Huawei Pangu Pro (trained entirely on Ascend NPUs — no Nvidia!)

Qwen3-Coder-480B (69.6% SWE-bench)

August — GPT-5 Month

(Aug 01 | Aug 07 | Aug 15 | Aug 21)

GPT-5 Launch (Aug 7) — 32 months after GPT-4:

400K context window

$1.25/$10 per million tokens (Opus is $15/$75)

Unified thinking + chat model

Router-based architecture (initially buggy)

Free tier access for back-to-school
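To get a feel for what that pricing gap means in practice, here is a rough Python sketch. It assumes the price pairs above are input/output prices in USD per million tokens, and the workload (2M input tokens, 500K output tokens) is a made-up example, not a figure from the show.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD (assumed to be input/output)."""
    input_per_mtok: float
    output_per_mtok: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Total USD cost for a given number of input and output tokens."""
        return (
            input_tokens * self.input_per_mtok
            + output_tokens * self.output_per_mtok
        ) / 1_000_000


# Prices as quoted in the recap.
GPT5 = Pricing(input_per_mtok=1.25, output_per_mtok=10.0)
OPUS = Pricing(input_per_mtok=15.0, output_per_mtok=75.0)

# Hypothetical workload, purely for illustration.
input_tokens, output_tokens = 2_000_000, 500_000

gpt5_cost = GPT5.cost(input_tokens, output_tokens)
opus_cost = OPUS.cost(input_tokens, output_tokens)
print(f"GPT-5: ${gpt5_cost:.2f}")
print(f"Opus:  ${opus_cost:.2f}")
print(f"Ratio: {opus_cost / gpt5_cost:.1f}x")
```

The ratio depends on the input/output mix, so swap in your own token counts before drawing conclusions.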

“32 months since GPT-4 release, 32 months of ThursdAI” — Alex Volkov

GPT-OSS (Aug 5) — OpenAI goes Apache 2.0 open source for the first time since GPT-2: 120B and 20B models, configurable reasoning, full chain-of-thought access.

Google Genie-3 (Aug 7) — DeepMind’s world model generates fully interactive 3D environments: real-time at 24fps, memory/consistency breakthrough, walk/fly/control in generated worlds.

DeepSeek V3.1 Hybrid (Aug 21) — Matches/beats R1 with fewer thinking tokens. 66% SWE-bench Verified. Tool calls inside thinking. MIT licensed.

September — Shiptember Delivers

(Sep 05 | Sep 12 | Sep 19 | Sep 26)

GPT-5-Codex (Sep 18) — Works 7+ hours independently. 93% fewer tokens on simple tasks. Reviews majority of OpenAI’s own PRs. Perfect 12/12 on 2025 ICPC.

Meta Connect 25 (Sep 18) — AI glasses with built-in display, neural band wristband, live translation with subtitles, $799 shipping immediately.

Qwen-mas Strikes Again (Sep 26):

Qwen3-VL-235B (vision reasoner, 1M context for video)

Qwen3-Omni-30B (end-to-end omni-modal)

Qwen-Max (over 1T parameters, roadmap to 100M token context)

NVIDIA $100B pledge to OpenAI — “Biggest infrastructure project in history”

Suno V5 — The music generation model where we officially can’t tell anymore.

“I can no longer tell which music is AI and which is human. This is it. We’ve passed the Rubicon.” — Alex Volkov

Q4 2025 — The Quarter of Agents, Gemini’s Crown & The Reasoning Wars

The densest quarter in AI history. Google took the throne with Gemini 3, OpenAI fired back with GPT-5.2, and agents became real products. Someone trained an LLM in space.

Key Themes:

🚀 Reasoning wars peaked (Gemini 3 → GPT-5.2 → DeepSeek gold medals)

🤖 Agents became products (Atlas, AgentKit, ChatGPT Apps)

👑 Google’s comeback (Gemini 3, Antigravity, Nano Banana)

🏃 ASI race accelerated ($1.4T compute, 2028 autonomous researchers)

🎬 Sora 2 launched AI-native social media

October — Sora Changes Social Media Forever

(Oct 03 | Oct 10 | Oct 17 | Oct 24 | Oct 30)

Sora 2 — AI Social Media is Born (Oct 2):

Shot to #3 on iOS App Store within days

Cameos: upload your face, star in any video

Sam Altman shared his Cameo publicly, becoming the internet’s most meme-able person

All content is AI-generated — no uploads, only creations

“This is the first social media with UGC where content can ONLY be generated” — Alex Volkov

OpenAI Dev Day (Oct 9):

ChatGPT Apps for 800M+ weekly active users

AgentKit: drag-and-drop agent builder

GPT-5-Pro in API

Sam revealed $1.4 trillion in compute obligations

AI Makes Novel Cancer Discovery (Oct 16) — A 27B Gemma-based model generated a novel hypothesis about cancer cells validated in a wet lab. First confirmed case of AI creating genuinely new scientific knowledge.

Claude Sonnet 4.5 — 61.4% OS World (computer use)

Claude Haiku 4.5 — 73.3% SWE-Bench, lightning fast

November — The Week That Changed Everything

(Nov 07 | Nov 13 | Nov 20 | Nov 27)

THE MOST INSANE WEEK IN AI HISTORY. In a single span of ~10 days:

Grok 4.1 — #1 LMArena (briefly)

Gemini 3 Pro — Took the throne with 45.14% on ARC-AGI-2 (Deep Think)

GPT-5.1-Codex-Max — 24+ hour autonomous coding

Nano Banana Pro — 4K image generation with perfect text rendering

Meta SAM 3 & SAM 3D — Open-vocabulary segmentation

Claude Opus 4.5 — 80.9% SWE-Bench Verified, beats GPT-5.1

“This week almost broke me as a person whose full-time job is to cover and follow AI releases.” — Alex Volkov

Gemini 3 Pro + Deep Think (Nov 20) — Google finally took the LLM throne: 45.14% on ARC-AGI-2, roughly double previous SOTA.

Google Antigravity IDE (Nov 20) — Free agent-first VS Code fork with browser integration, multiple parallel agents.

Nano Banana Pro (Nov 20) — Native 4K resolution with “thinking” traces, perfect text rendering.

Claude Opus 4.5 (Nov 27) — 80.9% SWE-Bench Verified. $5/$25 per MTok (1/3 previous cost). “Effort” parameter for reasoning control.

“Opus 4.5 is unbelievable. You can ship a full feature on a mature code base in one day, always. It’s just mind blowing.” — Ryan Carson

1X NEO (Oct 30) — First consumer humanoid robot, pre-orders at $20,000, delivery early 2026.

December — GPT-5.2 Fires Back

(Dec 02 | Dec 05 | Dec 12 | Dec 19)

GPT-5.2 — OpenAI’s Answer to Gemini 3 (Dec 11) — Dropped live during ThursdAI:

90.5% on ARC-AGI-1 (Pro X-High configuration)

54%+ on ARC-AGI-2 — reclaiming frontier from Gemini 3

100% on AIME 2025 — perfect math olympiad score

70% on GDPval (up from 47% in Sept!)

Reports of models thinking for 1-3 hours on hard problems

DeepSeek V3.2 & V3.2-Speciale — Gold Medal Reasoning (Dec 4):

96% on AIME (vs 94% for GPT-5 High)

Gold medals on IMO (35/42), CMO, ICPC (10/12), IOI (492/600)

$0.28/million tokens on OpenRouter
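The competition results above are quoted on different scales, so a small Python sketch can put them side by side as percentages. The helper `score_pct` is an illustrative name, and the numbers are taken straight from the list above (points or problems solved out of the maximum).

```python
def score_pct(earned: float, possible: float) -> float:
    """Score as a percentage of the maximum possible."""
    if possible <= 0:
        raise ValueError("possible must be positive")
    return 100 * earned / possible


# (earned, maximum) as quoted in the recap.
competition_results = {
    "IMO": (35, 42),
    "ICPC": (10, 12),
    "IOI": (492, 600),
}

for contest, (earned, possible) in competition_results.items():
    print(f"{contest}: {earned}/{possible} = {score_pct(earned, possible):.1f}%")
```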

MCP Donated to Linux Foundation (Dec 11) — Agentic AI Foundation launched under Linux Foundation. MCP, AGENTS.md, and goose donated to vendor-neutral governance.

Mistral 3 Returns to Apache 2.0 (Dec 4) — Mistral Large 3 (675B MoE), Ministral 3 (vision, edge-optimized).

Starcloud: LLM Training in Space (Dec 11) — An H100 satellite trained nanoGPT on Shakespeare. SSH into an H100… in space… with a US flag in the corner.

“Peak 2025 energy — the era of weird infra ideas has begun.” — Karpathy reacts

Gemini 3 Flash (Dec 18) — Fastest frontier model, pairs with Gemini 3 Pro for speed vs depth tradeoffs.

🙏 Thank You

This has been an incredible year of ThursdAI. 51 episodes, countless releases, and a community that keeps showing up every week to make sense of the madness together.

Huge thanks to our amazing co-hosts and friends of the pod:

Alex Volkov — AI Evangelist, Weights & Biases (@altryne)

Wolfram Ravenwolf (@WolframRvnwlf)

Yam Peleg (@yampeleg)

Nisten Tahiraj (@nisten)

LDJ (@ldjconfirmed)

Ryan Carson (@ryancarson)

Kwindla Hultman Kramer — CEO of Daily (@kwindla)

And to everyone who tunes in — whether you’re listening on your commute, doing dishes, or just trying to keep up with the insanity — thank you. You make this possible.

📢 Stay Connected

🎧 Subscribe: thursdai.news

🐦 Follow Alex: @altryne

💻 This recap is open source: github.com/altryne/thursdAI_yearly_recap

“We’re living through the early days of a technological revolution, and we get to be part of it. That’s something to be genuinely thankful for.” — Alex Volkov

Happy Holidays, and see you in 2026! 🚀

The best is yet to come. Hold on to your butts.