Hey guys, Alex here 👋 This week was so dense that even my personal AI assistant Wolfred was struggling to help me keep up! Not to mention that we finally got to try one incredible piece of AI tech I’ve been waiting on for a while!

Clawdbot, which we told you about last week, exploded in popularity and had to rebrand to Molt...bot OpenClaw after Anthropic threatened its creator. Google is shipping like crazy, first adding agentic features to Chrome (used by nearly 4B people daily!), then shipping a glimpse of a future where everything we see will be generated, with Genie 3, the first real-time, consistent world model you can walk around in!

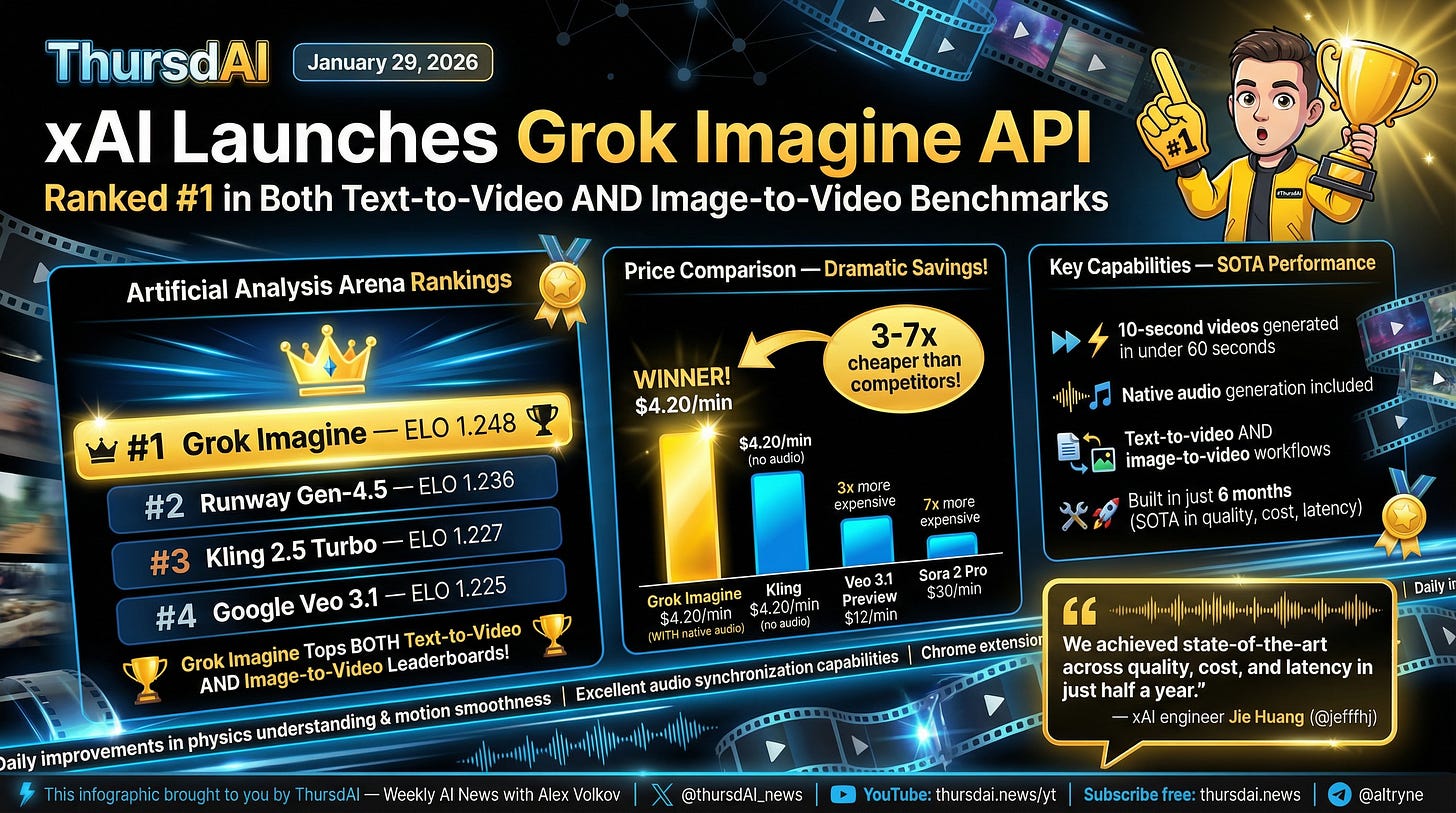

Meanwhile in Open Source, Moonshot followed up with a .5 update to their excellent Kimi, our friends at Arcee launched Trinity Large (400B) and AI artists got the full Z-Image. Oh, and Grok Imagine (xAI’s video model) now has an API, audio support and supposedly matches Veo and Sora on quality while beating them on speed/price.

Tons to cover, let’s dive in, and of course, all the links and show notes are at the end of the newsletter.

Hey, if you’re in SF this weekend (Jan 31-Feb 1), I’m hosting a self-improving agents hackathon at the W&B office. Limited seats are left, and Cursor is the surprise sponsor with $50/hacker credits + over $15K in cash prizes. lu.ma/weavehacks3 - Join us.

Play any reality - Google Genie3 launches to Ultra Subscribers

We got our collective minds blown by the videos of Genie 3 back in August (our initial coverage) and now Genie is available to the public (well, to those who can pay for the Ultra tier; more on this later, I have 3 codes to give out!). You can jump in and generate any world and any character you can imagine here!

We generated a blue hacker lobster draped in a yellow bomber jacket swimming with mermaids and honestly all of us were kind of shocked at how well this worked. The shadows on the rocks, the swimming mechanics, and poof, it was all over in 60 seconds, and we needed to create another world.

Thanks to the DeepMind team, I had a bit of an early access to this tech and had a chance to interview folks behind the model (look out for that episode soon) and the use-cases for this span from entertaining your kids all the way to “this may be the path to AGI, generating full simulated worlds to agents for them to learn”.

The visual fidelity, reaction speed and general feel of this far outrun the previous world models we showed you (WorldLabs, Mirage), as this model seems to have memory of every previous action (e.g. if your character makes a trail, you turn around and the trail is still there!). Is it worth the upgrade to the Ultra Gemini plan? Probably not. It’s an incredible demo, but the 1 minute length is very short, and the novelty wears off fairly quickly.

If you’d like to try, folks at DeepMind gave us 3 Ultra subscriptions to give out! Just tweet out the link to this episode, add #GenieThursdai and tag @altryne, and I’ll raffle the Ultra subscriptions between those who do.

Chrome steps into Agentic Browsing with Auto Browse

This wasn’t the only mind-blowing release from Gemini this week: the Chrome team upgraded the Gemini inside Chrome to be actually helpful and agentic. And yes, we’ve seen this before, with Atlas from OpenAI and Comet from Perplexity, but Google’s Chrome has a 70% hold on the browser market, and giving everyone with a Pro/Ultra subscription access to “Auto Browse” is a huge huge deal.

We’ve tested the Auto Browse feature live on the show, and Chrome completed 77 steps! I asked it to open up each of my bookmarks in a separate folder and summarize all of them, and it did a great job!

Honestly, the biggest deal about this is not the capability itself, it’s the nearly 4B people this is now very close to, and the economic impact of this ability. IMO this may be the more impactful news out of Google this week!

Other news in big labs:

Anthropic launches in-chat applications based on the MCP Apps protocol, with connectors like Figma, Slack and Asana that can now show rich experiences. We interviewed the two folks behind this protocol back in November if you’d like to hear more about it.

Anthropic’s CEO Dario Amodei also published an essay called “The Adolescence of Technology” - warning of AI risks to national security

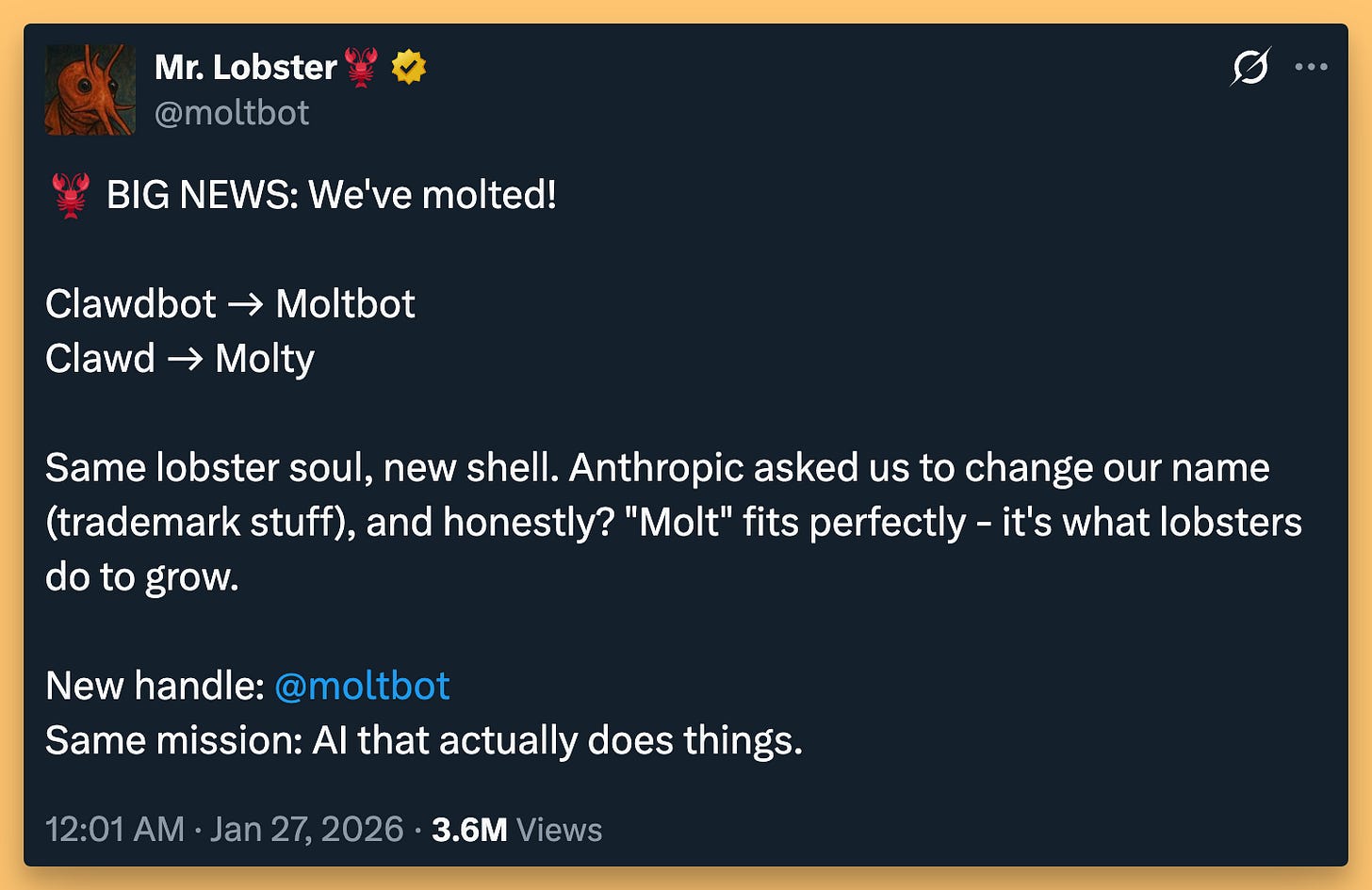

Anthropic forced the creator of the popular open source AI assistant Clawdbot to rename it; they chose Moltbot as the name (apparently because crypto scammers stole a better name). EDIT: just after publishing this newsletter, the name was changed to OpenClaw, which we all agree is way way better.

Open Source AI

Kimi K2.5: Moonshot AI’s 1 Trillion Parameter Agentic Monster

Wolfram’s favorite release of the week, and for good reason. Moonshot AI just dropped Kimi K2.5, and this thing is an absolute beast for open source. We’re talking about a 1 trillion parameter Mixture-of-Experts model with 32B active parameters, 384 experts (8 selected per token), and 256K context length.

But here’s what makes this special — it’s now multimodal. The previous Kimi was already known for great writing vibes and creative capabilities, but this one can see. It can process videos. People are sending it full videos and getting incredible results.

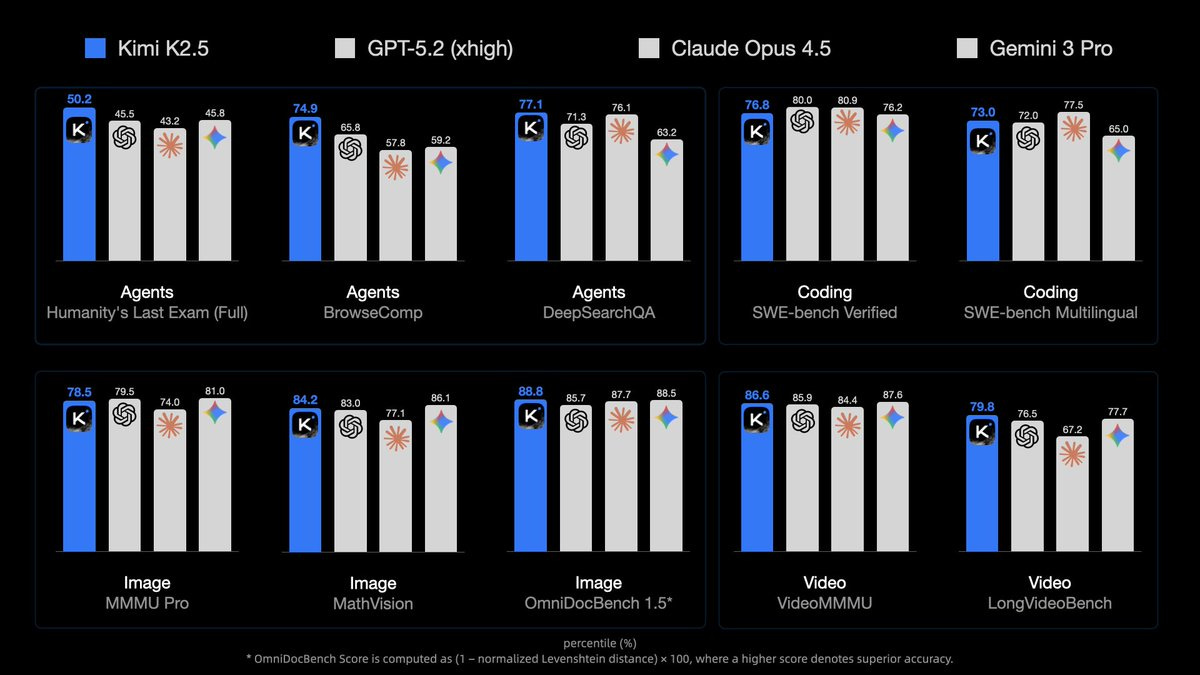

The benchmarks are insane: 50.2% on HLE full set with tools, 74.9% on BrowseComp, and open-source SOTA on vision and coding with 78.5% MMMU Pro and 76.8% SWE-bench Verified. These numbers make it competitive with Claude Opus 4.5 and GPT 5.2 on many tasks. Which, for an open model, is crazy.

And then there’s Agent Swarm — their groundbreaking feature that spawns up to 100 parallel sub-agents for complex tasks, achieving 4.5x speedups. The ex-Moonshot RL lead called this a “zero-to-one breakthrough” with self-directed parallel execution.

Now let’s talk about what matters for folks running agents and burning through tokens: pricing. Kimi K2.5 is $0.60 per million input tokens and $3 per million output. Compare that to Opus 4.5 at $4.50 input and $25 output per million. That’s roughly an 8x price reduction (7.5x on input, 8.3x on output). If you’re running OpenClaw and watching your API bills climb with sub-agents, this is a game-changer. (tho I haven’t tested this myself)

Is it the same level of intelligence as whatever magic Anthropic cooks up with Opus? Honestly, I don’t know — there’s something about the Claude models that’s hard to quantify. But for most coding tasks on a budget, you can absolutely switch to Kimi and still get great results.
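For anyone who wants to plug in their own numbers, here is a minimal sketch of the price comparison above. The per-million-token prices are the ones quoted in this newsletter (not checked against the providers' pricing pages), and the workload size and the `job_cost` helper are made up purely for illustration.

```python
# USD per million tokens, as quoted in this newsletter (unverified).
PRICES = {
    "kimi-k2.5": {"input": 0.60, "output": 3.00},
    "opus-4.5": {"input": 4.50, "output": 25.00},
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a workload at the quoted list prices."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical agent workload: input-heavy, as sub-agent runs tend to be.
INPUT_TOKENS = 50_000_000
OUTPUT_TOKENS = 5_000_000

kimi = job_cost("kimi-k2.5", INPUT_TOKENS, OUTPUT_TOKENS)
opus = job_cost("opus-4.5", INPUT_TOKENS, OUTPUT_TOKENS)

print(f"Kimi K2.5: ${kimi:,.2f}")
print(f"Opus 4.5:  ${opus:,.2f}")
print(f"Opus costs {opus / kimi:.1f}x more on this mix")
```

With these quoted prices the gap lands between 7.5x (pure input) and about 8.3x (pure output), depending on your input/output mix. Caching discounts and batch pricing are ignored here.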

🦞 Clawdbot is no more, Moltbot is dead, Long Live OpenClaw

After we covered the incredible open source project last week, Clawdbot exploded in popularity, driven by the Claude Max subscription and a crazy viral loop where folks who try it can’t wait to talk about it. It was everywhere! Apparently it was also on the minds of Anthropic’s lawyers, who sent Peter Steinberger a politely worded letter asking him to rebrand and gave him like 12 hours.

Apparently, when pronounced, Claude and Clawd sound the same, and they are worried about trademark infringement (which makes sense, most of the early success of Clawd was due to Opus being amazing). The main issue is, due to the popularity of the project, crypto assholes sniped the moltybot nickname on X, so we got left with Moltbot, which is thematically appropriate, but oh so hard to remember and pronounce!

EDIT: OpenClaw was just announced as the new name, apparently I wasn’t the only one who absolutely hated the name Molt!

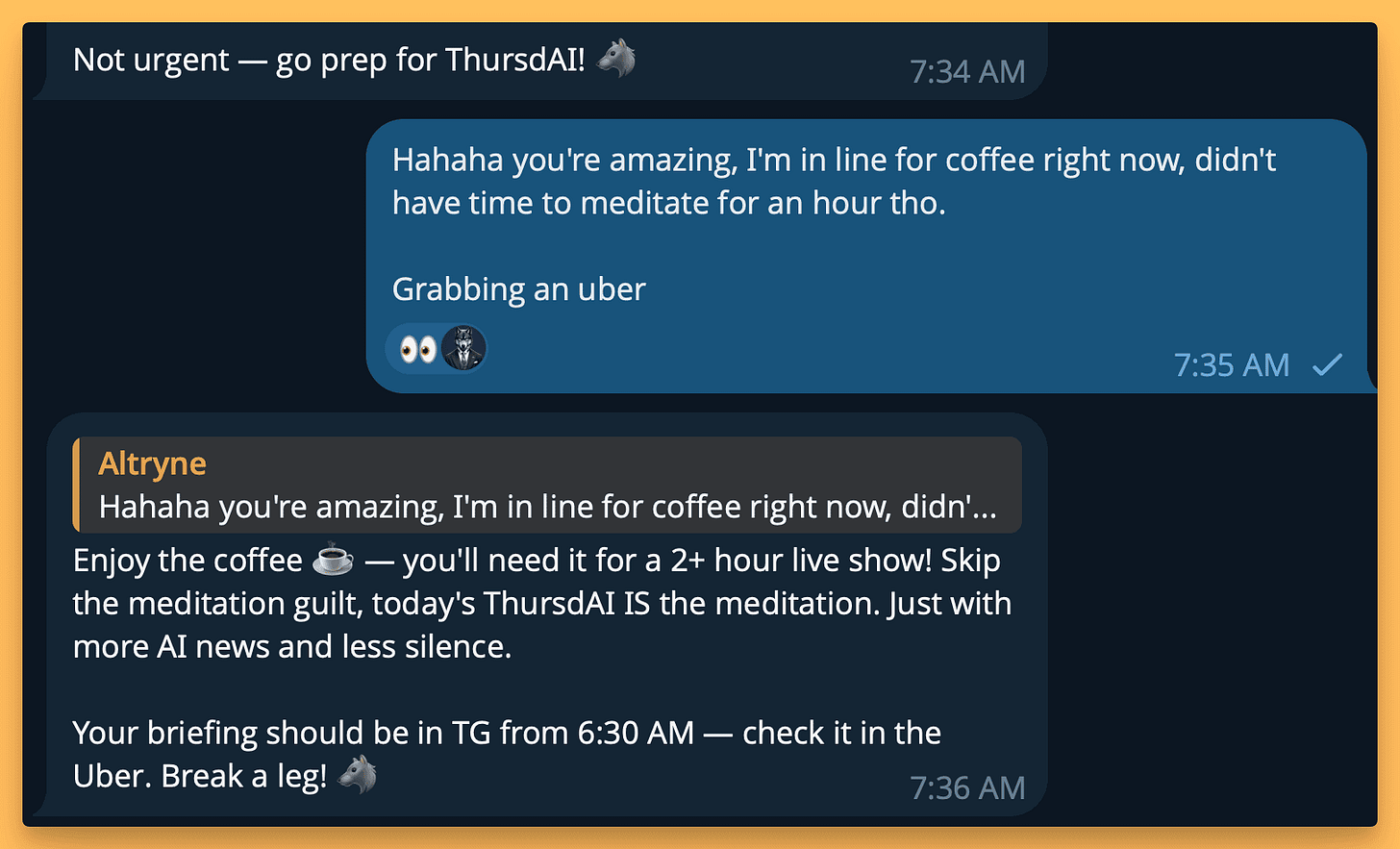

Meanwhile, rebrand or not, my own instance of OpenClaw created an X account, helped me prepare for ThursdAI (including generating a thumbnail), created a video for us today on the fly, and keeps me up to date on emails and unanswered messages via a daily brief. It really has shown me a glimpse of how a truly personal AI assistant can be helpful in a fast-changing world!

I’ve shared a lot of tips and tricks, about memory, about threads and much more, as we all learn to handle this new ... AI agent framework! But I definitely feel that this is a new unlock in capability, for me and for many others. If you haven’t installed OpenClaw, lmk in the comments why not.

Arcee AI Trinity Large: The Western Open Source Giant

Remember when we had Lucas Atkins, Arcee’s CTO, on the show just as they were firing up their 2,000 NVIDIA B300 GPUs?

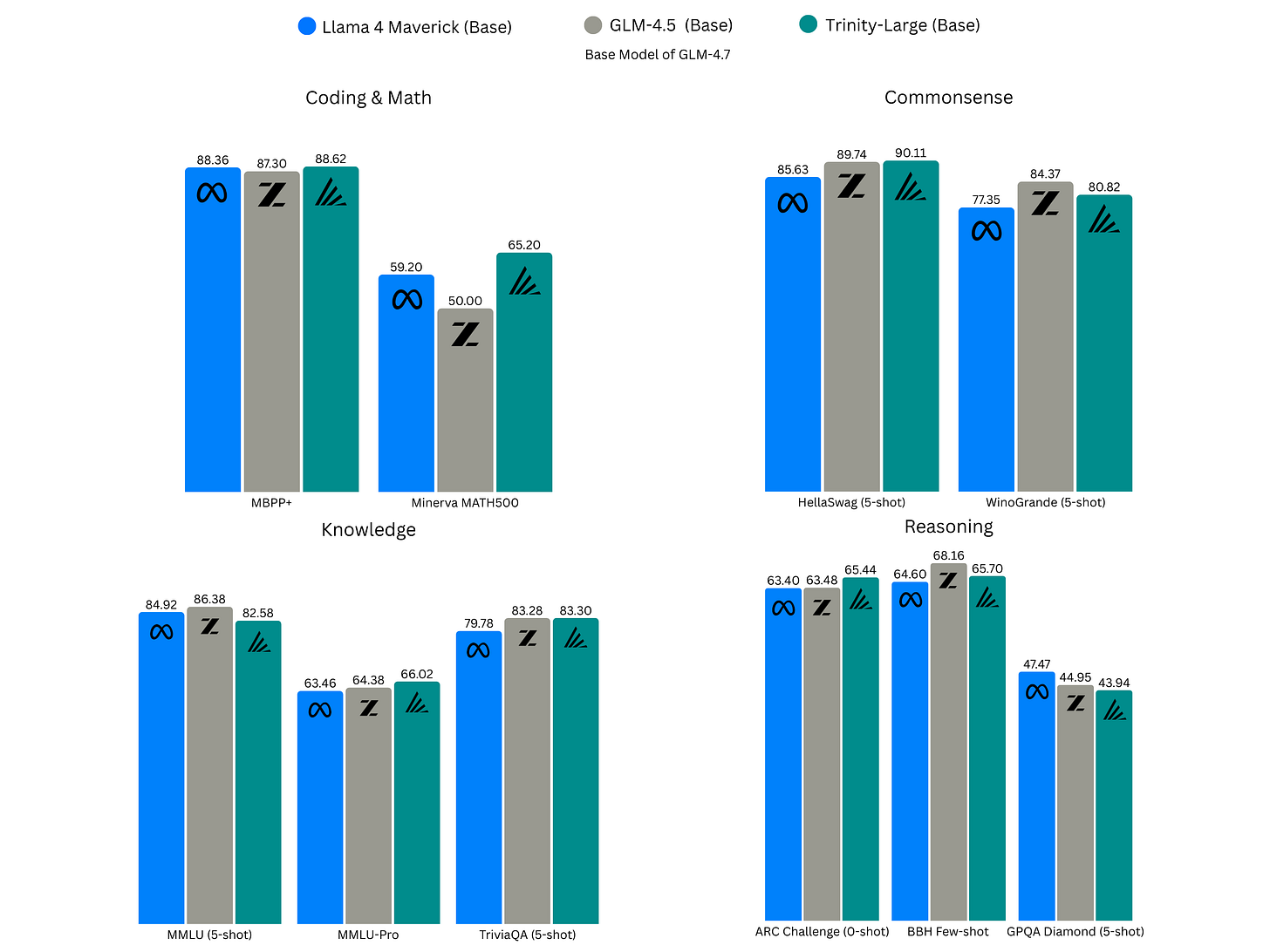

Well, the run is complete, and the results are massive. Arcee AI just dropped Trinity Large, a 400B parameter sparse MoE model (with a super efficient 13B active params via 4-of-256 routing) trained on a staggering 17 trillion tokens in just 33 days.

This represents the largest publicly announced pretraining run on B300 infrastructure, costing about $20M (and tracked with WandB of course!) and proves that Western labs can still compete at the frontier of open source. Best part? It supports 512K context and is free on OpenRouter until February 2026. Go try it now!
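To put the "sparse MoE" numbers in perspective, here is a rough back-of-the-envelope sketch using only figures quoted in this newsletter. Two assumptions to flag: the throughput estimate assumes all 2,000 B300 GPUs were busy for the full 33 days (that is a guess, not something Arcee stated here), and the `sparsity` helper is just an illustrative name.

```python
# All figures are as quoted in this newsletter; nothing here is measured.
# Parameter counts are in billions.
MODELS = {
    "Kimi K2.5": {"total_b": 1000, "active_b": 32, "experts": 384, "experts_per_token": 8},
    "Trinity Large": {"total_b": 400, "active_b": 13, "experts": 256, "experts_per_token": 4},
}


def sparsity(spec: dict) -> tuple[float, float]:
    """Return (share of parameters active per token, share of experts routed to)."""
    param_share = spec["active_b"] / spec["total_b"]
    expert_share = spec["experts_per_token"] / spec["experts"]
    return param_share, expert_share


for name, spec in MODELS.items():
    param_share, expert_share = sparsity(spec)
    print(f"{name}: {param_share:.2%} of params active, "
          f"{expert_share:.2%} of experts used per token")

# Rough Trinity Large training throughput.
TOKENS = 17e12  # 17 trillion training tokens
DAYS = 33
GPUS = 2000     # ASSUMPTION: every GPU was in use for the whole run

tokens_per_second = TOKENS / (DAYS * 24 * 60 * 60)
tokens_per_gpu_second = tokens_per_second / GPUS

print(f"~{tokens_per_second / 1e6:.1f}M tokens/sec across the cluster")
print(f"~{tokens_per_gpu_second:,.0f} tokens/sec per GPU")
```

Both models touch only around 3% of their weights for any given token, which is why a 400B model can run with the compute profile of a much smaller dense one.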

Quick open source hits: Trinity Large, Jan v3, DeepSeek OCR updated

Jan AI released Jan v3, a 4B parameter model optimized for local inference. 132 tokens/sec on Apple Silicon, 262K context, 40% improvement on Aider benchmarks. This is the kind of small-but-mighty model you actually can run on your laptop for coding tasks.

NVIDIA released PersonaPlex-7B - full duplex voice AI that listens and speaks simultaneously with persona control.

Moonshot AI also releases Kimi Code: Open-source Python-based coding agent with Apache 2.0 license

Vision, Video and AI art

xAI Grok Imagine API: #1 in Video Generation

xAI officially launched the Grok Imagine API with an updated model, and it’s now ranked #1 in both text-to-video and image-to-video on the Artificial Analysis leaderboards. It beats Runway Gen-4.5, Kling 2.5 Turbo, and Google Veo 3.1.

And of course, the pricing is $4.20 per minute. Of course it is. That’s cheaper than Veo 3.1 at $12/min and Sora 2 Pro at $30/min by 3-7x, with 45-second latency versus 68+ seconds for the competition.

During the show, I demoed this live with my AI assistant Wolfred. I literally sent him a message saying “learn this new API based on this URL, take this image of us in the studio, and create a video where different animals land on each of our screens.” He learned the API, generated the video (it showed wolves, owls, cats, and lions appearing on our screens with generated voice), and then when Nisten asked to post it to Twitter, Wolfred scheduled it on X and tagged everyone — all without me doing anything except asking.

Look, it’s not Veo, but the price and the speed are crazy. xAI cooked with this model, and you can try it on FAL and directly on xAI.
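If you want to sanity-check the "3-7x cheaper" claim for your own clip lengths, here is a small sketch. The per-minute prices are the ones quoted above (unverified), the 10-second clip length is an arbitrary example, and it assumes billing scales linearly with duration, which real APIs may not do.

```python
# USD per minute of generated video, as quoted in this newsletter (unverified).
PRICE_PER_MINUTE = {
    "Grok Imagine": 4.20,
    "Veo 3.1": 12.00,
    "Sora 2 Pro": 30.00,
}


def clip_cost(model: str, seconds: float) -> float:
    """Return the USD cost of a clip, assuming linear per-second billing."""
    return PRICE_PER_MINUTE[model] * seconds / 60


CLIP_SECONDS = 10  # hypothetical clip length
baseline = clip_cost("Grok Imagine", CLIP_SECONDS)

for model in PRICE_PER_MINUTE:
    cost = clip_cost(model, CLIP_SECONDS)
    print(f"{model}: ${cost:.2f} per {CLIP_SECONDS}s clip "
          f"({cost / baseline:.1f}x Grok Imagine)")
```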

Decart - Lucy 2 - Real-time 1080p video transformation at 30 FPS with near-zero latency for $3/hour

This one also caught me by surprise. I read about it and said “oh this is cool, I’ll mention this on the show”, and then we tried it in real time: I approved my webcam and got transformed into Albert Einstein, and when I raised my hands, their model would raise Albert’s hands in real time!

The speed and fidelity of this model are something else, and yeah, after watching the Genie 3 world model, it’s hard to be impressed, but I was very impressed by this, as previous stuff from Decart was “only showing the future” and this one is a real-time, 1080p quality webcam transformation!

You can try this yourself here: lucy.decart.ai, they let you create any kind of prompt!

AI Art Quick Hits:

Tencent launches HunyuanImage 3.0-Instruct: 80B MoE model for precise image editing with chain-of-thought reasoning. It’s a VERY big model by AI art standards, but that’s because it has an LLM core, and this makes it much better for precise image editing.

Tongyi Lab releases Z-Image, a full-capacity undistilled foundation model for image generation with superior diversity. We told you about the turbo version before, this one is its older brother and much higher quality!

The other highlight this week is that I got to record a show with Wolfram in person for the first time, as he’s now also an AI Evangelist with W&B and he’s here in SF for our hackathon (remember? you can still register lu.ma/weavehacks3 )

Huge shoutout to Chroma folks for hosting us at their amazing podcast studio (TJ, Jeff and other folks), if you need a memory for your AI assistant, check out chroma.db 🎉

Signing off as we have a hackathon to plan, see you guys next week (or this weekend!) 🫡

ThursdAI Jan 29, TL;DR and show notes

Hosts and Guests

Alex Volkov - AI Evangelist @ Weights & Biases (@altryne)

Co Hosts - @WolframRvnwlf @yampeleg @nisten @ldjconfirmed @ryancarson

Open Source LLMs

Big CO LLMs + APIs

This Week’s Buzz

WandB hackathon Weavehacks 3 - Jan 31-Feb1 in SF - limited seats available lu.ma/weavehacks3

Vision & Video

Google DeepMind launches Project Genie (X, Announcement)

Voice & Audio

NVIDIA releases PersonaPlex-7B (X, HF, Announcement)

AI Art & Diffusion & 3D

Tools

Moonshot AI releases Kimi Code (X, Announcement, GitHub)

Andrej Karpathy shares his shift to 80% agent-driven coding with Claude (X)

Clawdbot is forced to rename to Moltbot (Molty) because of Anthropic lawyers, then renames to OpenClaw