Hey y’all, Alex here, and this week I was especially giddy to record the show! Mostly because when a thing clicks for me that hasn’t clicked before, I can’t wait to tell you all about it!

This week, that thing is Agent Skills! Currently the best way to customize your AI agents with domain expertise, in a simple, repeatable way that doesn’t blow up the context window! We mentioned skills when Anthropic first released them (Oct 16) and when they became an open standard, but they didn’t really click for me until last week! More on that below.

Also this week, Anthropic released a research preview of Claude Cowork, an agentic tool for non-coders, OpenAI finally let loose GPT-5.2 Codex in the API (it was previously available only via Codex), Apple announced a deal with Google to power Siri with Gemini, OpenAI and Anthropic both doubled down on healthcare, and much more! We had an incredible show with an expert in Agent Skills, Eleanor Berger, and the usual gang of co-hosts. I strongly recommend watching the show in addition to reading the newsletter!

Also, I vibe coded skills support for all LLMs into Chorus and promised folks a download link, so look for that below. Let’s dive in!

Big Company LLMs + APIs: Cowork, Codex, and a Browser in a Week

Anthropic launches Claude Cowork: Agentic AI for Non‑Coders (research preview)

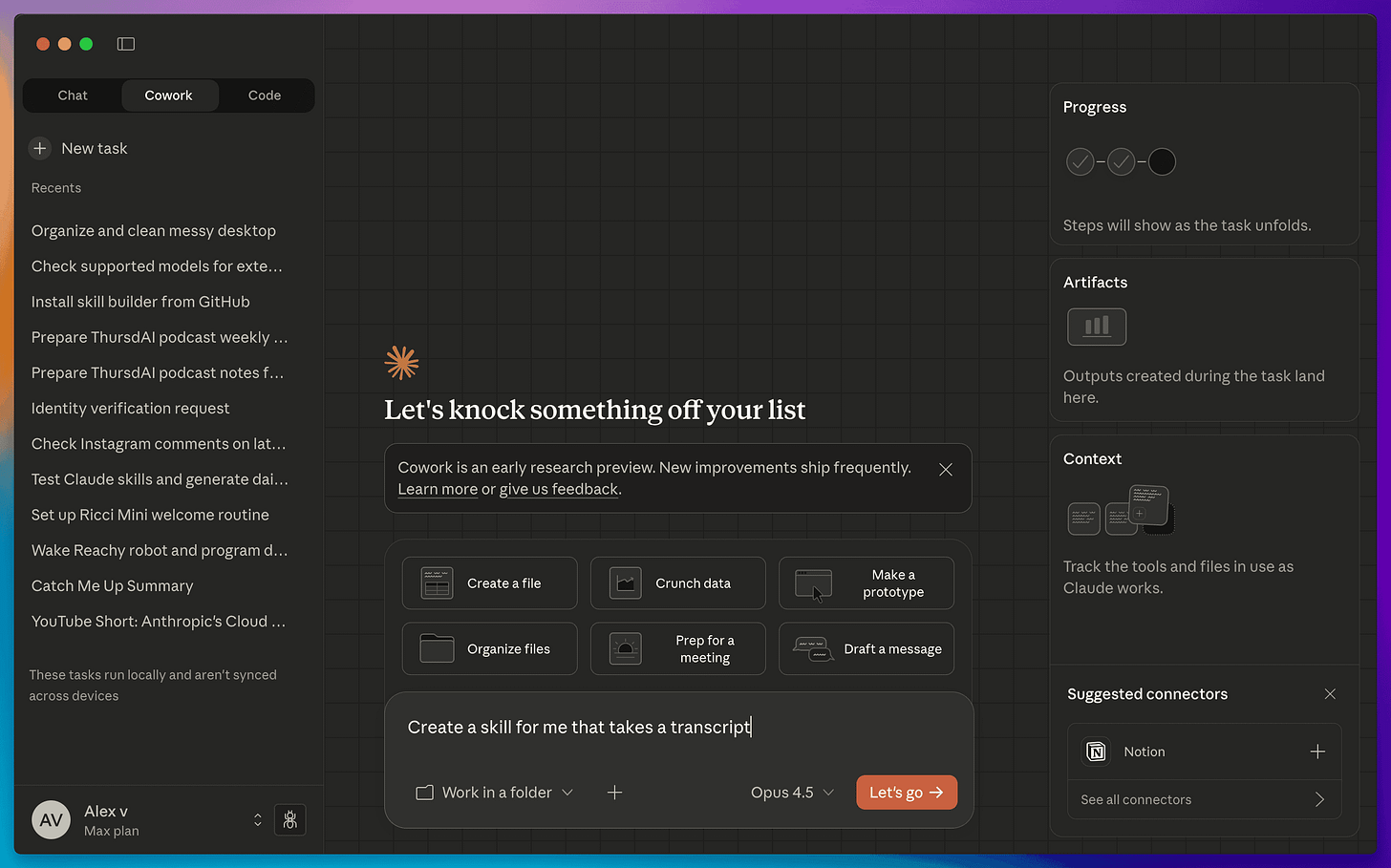

Anthropic announced Claude Cowork, which is basically Claude Code wrapped in a friendly UI for people who don’t want to touch a terminal. It’s a research preview available on the Max tier, and it gives Claude read/write access to a folder on your Mac so it can do real work without you having to care about diffs, git, or the command line.

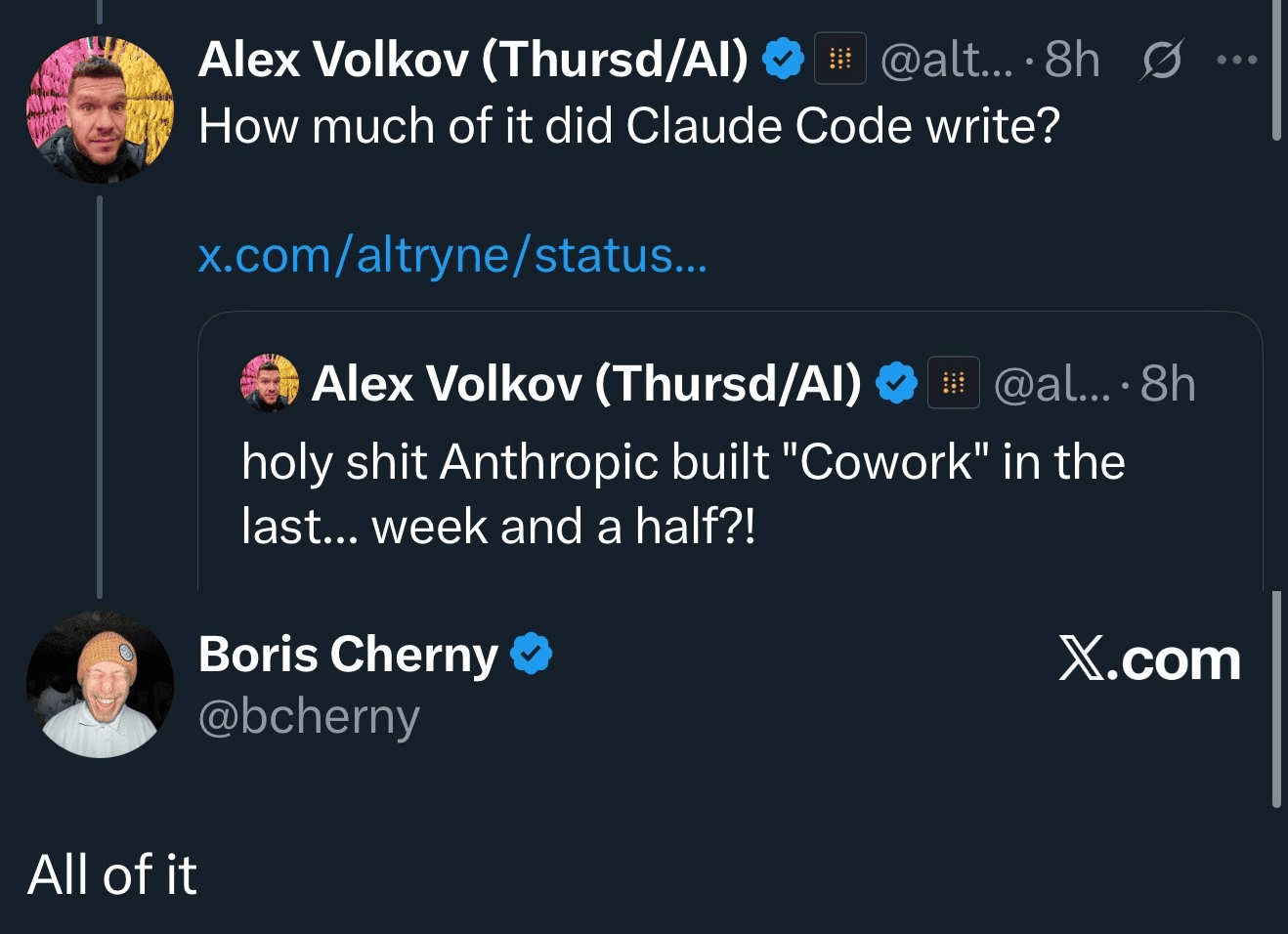

The wild bit is that Cowork was built in a week and a half, and according to the Anthropic team it was 100% written using Claude Code. This feels like a “we’ve crossed a threshold” moment. If you’re wondering why this matters, it’s because coding agents are general agents. If a model can write code to do tasks, it can do taxes, clean your desktop, or orchestrate workflows, and that means non‑developers can now access the same leverage developers have been enjoying for a year.

It also isn’t just for files: it comes with a Chrome connector, meaning it can navigate the web to gather info, download receipts, or do research, and it uses skills (more on those later).

Earlier this week I recorded a first-reactions video about Cowork, and I’ve been testing it ever since. It’s a very interesting approach: a coding agent that “hides the coding” and just... does things. Will this become as big for Anthropic as Claude Code (reportedly a $1B business for them)? We’ll see!

There are real security concerns here, especially if you’re not in the habit of backing up or using git. Cowork sandboxes a folder, but it can still delete things in that folder, so don’t let it loose on your whole drive unless you like chaos.

GPT‑5.2 Codex: Long‑Running Agents Are Here

OpenAI finally shipped GPT‑5.2 Codex into the API, after announcing it as its answer to Opus 4.5 and keeping it available only in Codex. The big headlines are state-of-the-art benchmark results and long‑running agentic capability. People describe it as methodical: it takes longer, but it’s reliable on extended tasks, especially when you let it run without micromanaging.

Within hours of launch, it was integrated into Cursor, GitHub Copilot, VS Code, Factory, and Vercel AI Gateway. It’s state‑of‑the‑art on SWE‑Bench Pro and Terminal‑Bench 2.0, and it has native context compaction. That last part matters because if you’ve ever run an agent for long sessions, you know the context gets bloated and the model gets dumber. Compaction is an attempt to keep it coherent by summarizing old context into fresh threads, and we debated whether it really works. I think it helps, but I also agree that the best strategy is still to run smaller, atomic tasks with clean context.
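
To make the compaction idea concrete, here’s a minimal Python sketch of the general pattern (my illustration, not OpenAI’s actual implementation): once the conversation exceeds a token budget, older turns get folded into a single summary message and only the most recent turns are kept verbatim. The `summarize` callback is a hypothetical stand-in for an LLM call, and the 4-characters-per-token estimate is a rough assumption, not a real tokenizer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    role: str
    content: str


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): ~4 characters per token, not a real tokenizer.
    return max(1, len(text) // 4)


def compact(
    history: List[Message],
    budget: int,
    keep_recent: int,
    summarize: Callable[[List[Message]], str],
) -> List[Message]:
    """Fold older turns into one summary once the history exceeds `budget` tokens."""
    total = sum(estimate_tokens(m.content) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # still fits, or nothing old enough to compact
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    summary = Message("system", f"Summary of earlier work: {summarize(older)}")
    return [summary] + recent


if __name__ == "__main__":
    history = [Message("user", f"step {i}: " + "x" * 400) for i in range(10)]
    # Stand-in summarizer; in a real agent this would be a model call.
    compacted = compact(history, budget=500, keep_recent=3,
                        summarize=lambda msgs: f"{len(msgs)} earlier turns")
    print(len(compacted), compacted[0].content)
```

The trade-off we argued about on the show is visible right there: whatever `summarize` drops is gone for good, which is why small, atomic tasks with clean context still feel safer.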

Cursor vibe-codes a browser with GPT-5.2 and 3M lines of code

The most mind‑blowing thing we discussed is Cursor letting GPT‑5.2 Codex run for a full week to build a browser called FastRenderer. This is not Chromium‑based. It’s a custom HTML parser, CSS cascade, layout engine, text shaping, paint pipeline, and even a JavaScript VM, written in Rust, from scratch. The codebase is open source on GitHub, and the full story is on Cursor’s blog.

It took nearly 30,000 commits and millions of lines of code. The system ran hundreds of concurrent agents with a planner‑worker architecture, and GPT‑5.2 was the best model for staying on task in that long‑running regime. That’s the real story, not just “lol a model wrote a browser.” This is a stress test for long‑horizon agentic software development, and it’s a preview of how teams will ship in 2026.
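
For a feel of what a planner‑worker setup looks like, here’s a toy Python sketch using `asyncio`: a planner splits a goal into tasks, and a pool of workers pulls from a shared queue concurrently. This is my own illustration, not Cursor’s harness; `plan()` and the `asyncio.sleep(0)` placeholder are hypothetical stand-ins for real model calls.

```python
import asyncio
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Task:
    task_id: int
    description: str


def plan(goal: str, components: List[str]) -> List[Task]:
    # Hypothetical planner: one task per component. In a real harness this
    # would be a model call that decomposes the goal and keeps re-planning.
    return [Task(i, f"{goal}: implement {c}") for i, c in enumerate(components)]


async def worker(name: str, queue: "asyncio.Queue[Task]", results: Dict[int, str]) -> None:
    while True:
        task = await queue.get()
        try:
            await asyncio.sleep(0)  # placeholder for a long-running agent session
            results[task.task_id] = f"{name} finished '{task.description}'"
        finally:
            queue.task_done()


async def run(goal: str, components: List[str], num_workers: int = 4) -> Dict[int, str]:
    queue: "asyncio.Queue[Task]" = asyncio.Queue()
    for task in plan(goal, components):
        queue.put_nowait(task)
    results: Dict[int, str] = {}
    workers = [
        asyncio.create_task(worker(f"worker-{i}", queue, results))
        for i in range(num_workers)
    ]
    await queue.join()  # wait until every planned task is marked done
    for w in workers:
        w.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


if __name__ == "__main__":
    out = asyncio.run(run("toy-browser", ["html parser", "css cascade", "layout engine"]))
    for task_id in sorted(out):
        print(out[task_id])
```

The hard part at Cursor’s scale is everything this toy skips: planners that re-plan as work lands, workers that conflict on the same files, and a model that stays on task for days.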

As I said on the show, browsers are REALLY hard. It took the industry two decades to settle down and render websites consistently, and there’s a reason everyone uses Chromium. This is VERY impressive 👏

As for me, I’ve started using Codex again, but I still find Opus better? Not sure if I’m just expecting something that isn’t there. I’ll keep you posted.

Gemini Personal Intelligence: The Data Moat king is back!

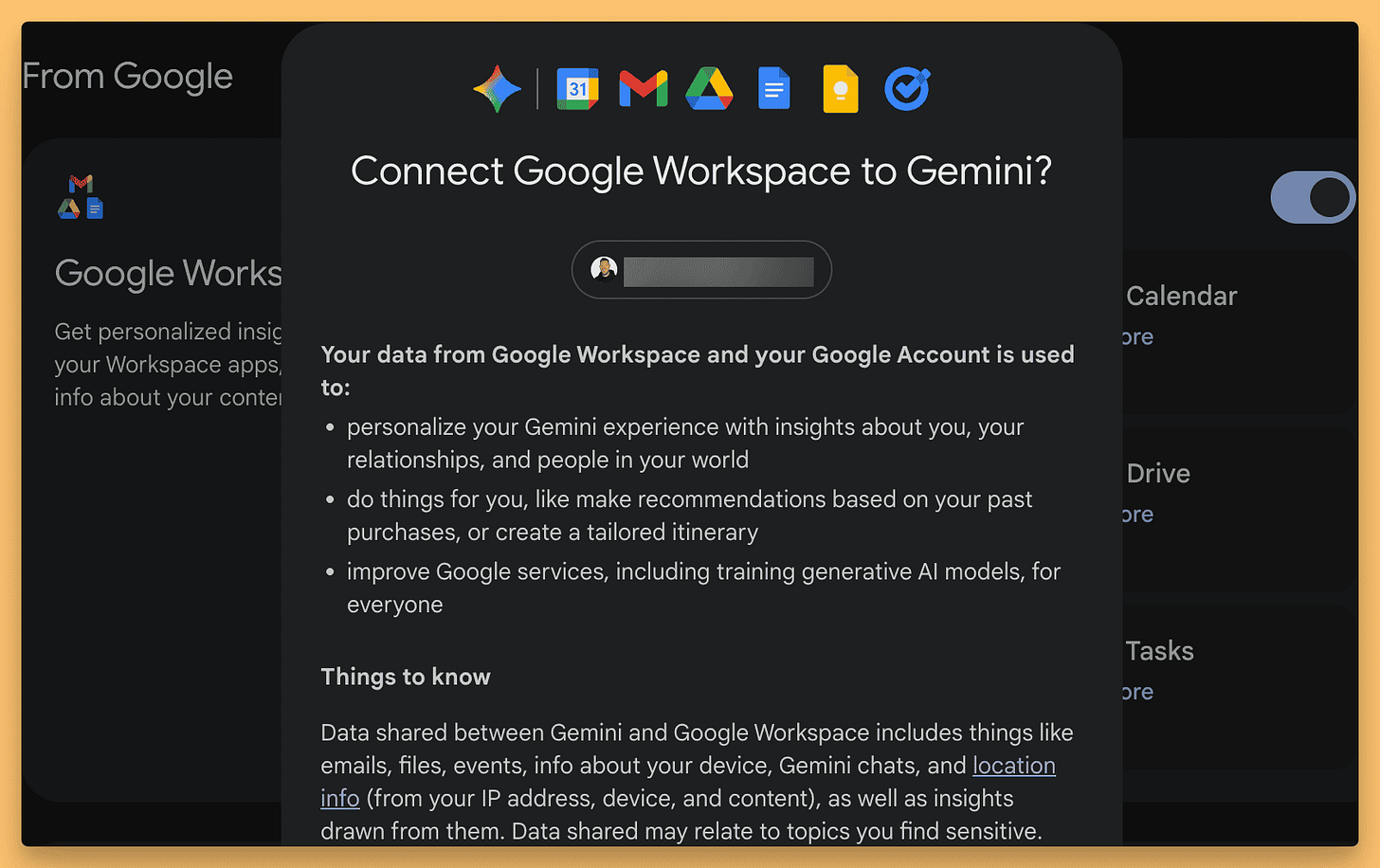

What kind of car do you drive? Does ChatGPT know that? Welp, it turns out Google does (based on your emails and Google Photos), and now Gemini can tap into this personal info (if you allow it; they are stressing privacy) and give you much more personalized answers!

Flipping on this beta feature lets Gemini reason across Gmail, YouTube, Photos, and Search with explicit opt‑in permissions, and it’s rolling out to Pro and Ultra users in the US first.

I got to try it early, and it’s uncanny. I asked Gemini what car I drive, and it told me I likely drive a Model Y, but it noticed I recently searched for a Honda Odyssey and asked if I was thinking about switching. It was kinda... freaky because I forgot I had early access and this was turned on 😂

Pro Tip: if you’re brave enough to turn this on, ask for a complete profile on you 🙂

Now the last piece is for Gemini to become proactive, suggesting things for me based on my needs!

Apple & Google: The Partnership (and Drama Corner)

We touched on this in the intro, but it’s official: Apple Intelligence will be powered by Google Gemini for “world knowledge” tasks. Apple stated that after “careful evaluation,” Google provided the most capable foundation for their... Apple Foundation Models. It’s confusing, I agree.

Honestly? I got excited about Apple Intelligence, but Siri is still... Siri. It’s 2026 and we are still struggling with basic intents. Hopefully, plugging Gemini into the backend changes that?

In other drama: the Silicon Valley carousel continues. Three co-founders (Barret Zoph, Sam Schoenholz and Luke Metz) from Thinking Machines (and former OpenAI folks) have returned to the mothership (OpenAI), amid some vague tweets about “unethical conduct.” It’s never a dull week on the timeline.

This Week’s Buzz: WeaveHacks 3 in SF

I’ve got one thing in the Buzz corner this week, and it’s a big one. WeaveHacks 3 is back in San Francisco, January 31st - February 1st. The theme is self‑improving agents, and if you’ve been itching to build in person, this is it. We’ve got an amazing judge lineup, incredible sponsors, and a ridiculous amount of agent tooling to play with.

You can sign up here: https://luma.com/weavehacks3

If you’re coming, mention in the form that you heard about it on ThursdAI, and we’ll make sure you get in!

Deep Dive: Agent Skills With Eleanor Berger

This was the core of the episode, and I’m still buzzing about it. We brought on Eleanor Berger, who has basically become the skill evangelist for the entire community, and she walked us through why skills are the missing layer in agentic AI.

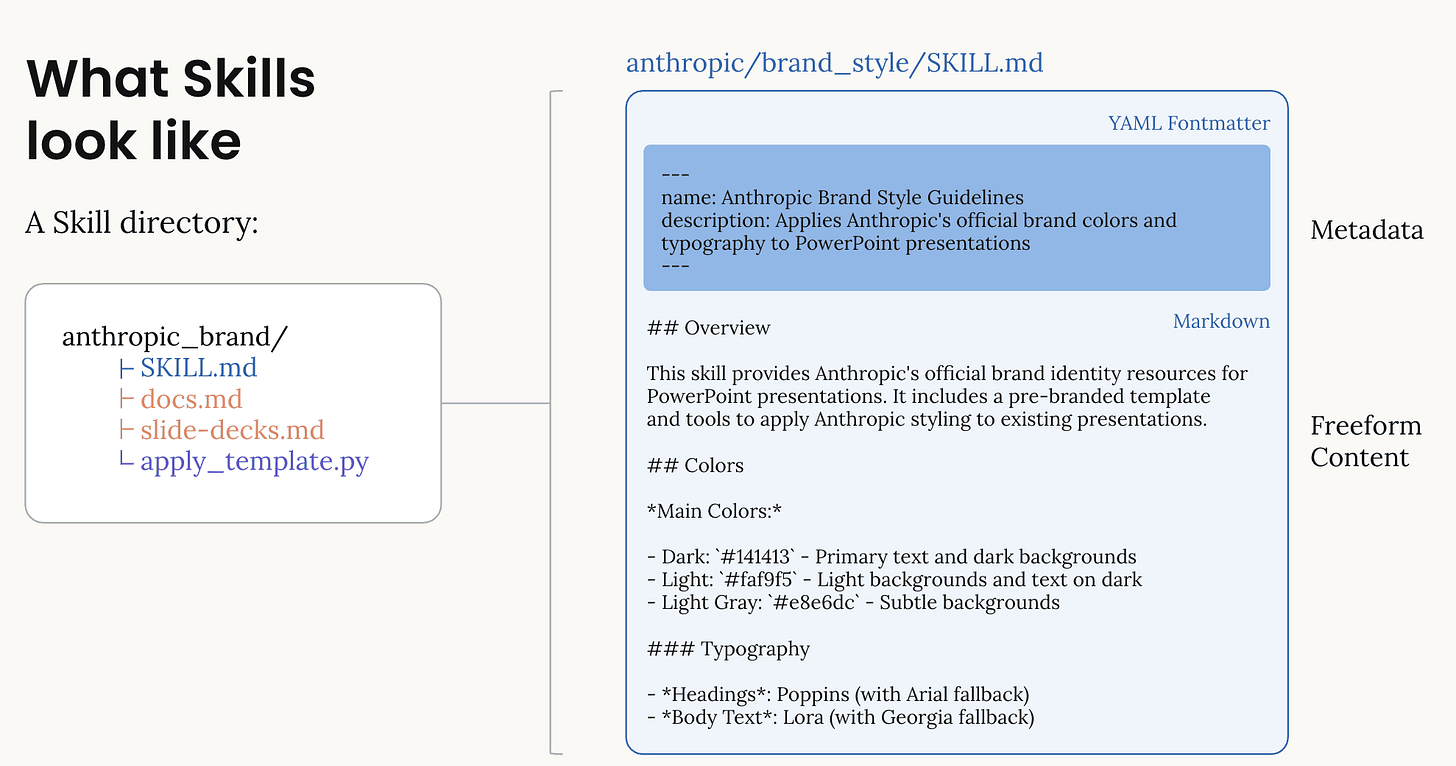

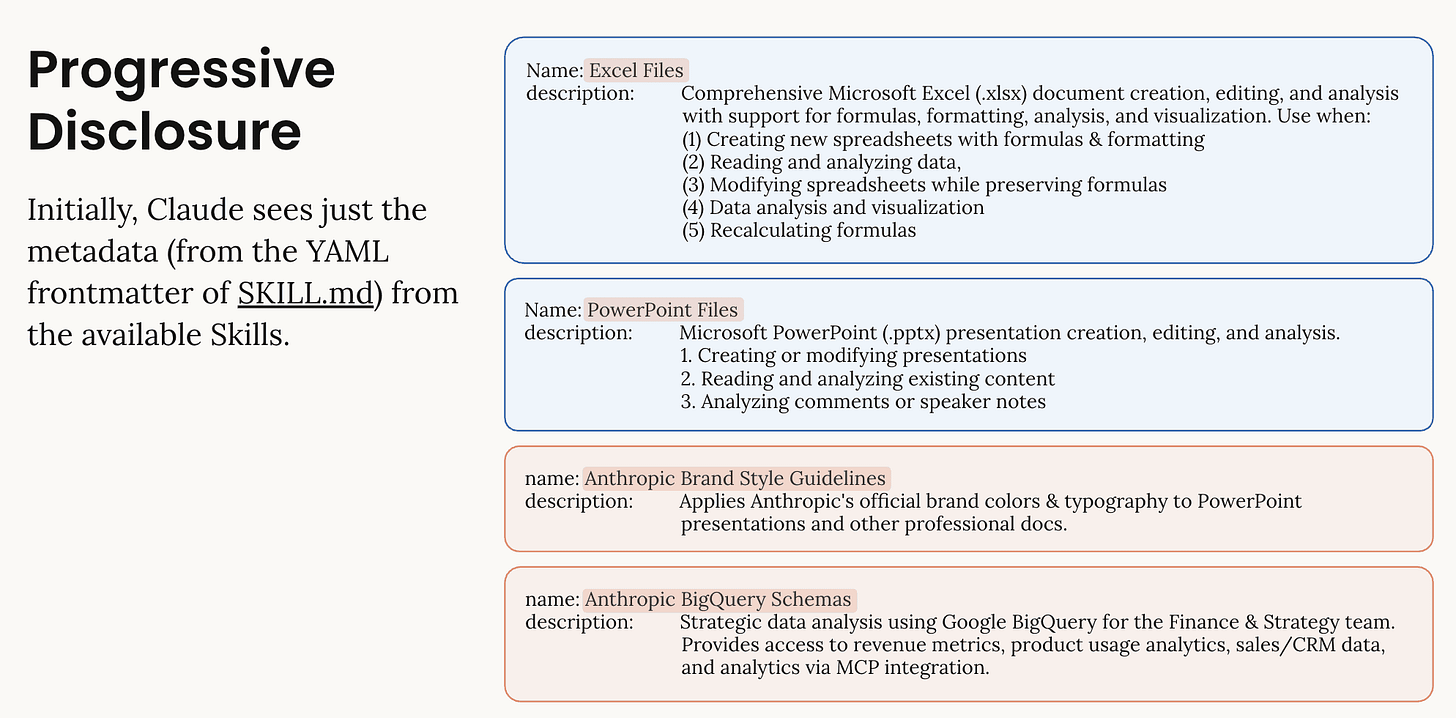

Skills are simple markdown files with a tiny bit of metadata, living in a directory together with optional scripts, references, and assets. The key idea is progressive disclosure. Instead of stuffing your entire knowledge base into the context, the model initially sees only a short list of skills and loads the full instructions only when it needs them. That means you can have hundreds of skills without blowing up your context window (which would make the model dumber and slower).
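
Here’s a minimal Python sketch of progressive disclosure, assuming the common layout of one folder per skill with a `SKILL.md` whose YAML frontmatter holds `name` and `description`. Only the short index from `skills_index()` would go into the prompt, and `load_skill()` reads the full file when the model asks for it. The helper names are hypothetical, and the frontmatter parser only handles flat `key: value` lines (a real loader should use a proper YAML library).

```python
import tempfile
from dataclasses import dataclass
from pathlib import Path
from typing import Dict, List


@dataclass
class SkillStub:
    name: str          # from frontmatter
    description: str   # what the model sees up front
    path: Path         # where the full SKILL.md lives


def parse_frontmatter(text: str) -> Dict[str, str]:
    """Parse flat `key: value` lines between the opening and closing `---`."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta: Dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def discover_skills(root: Path) -> List[SkillStub]:
    """Read only the metadata of every <root>/<skill>/SKILL.md."""
    stubs = []
    for skill_md in sorted(root.glob("*/SKILL.md")):
        meta = parse_frontmatter(skill_md.read_text(encoding="utf-8"))
        if "name" in meta and "description" in meta:
            stubs.append(SkillStub(meta["name"], meta["description"], skill_md))
    return stubs


def skills_index(stubs: List[SkillStub]) -> str:
    """The short list that goes into the system prompt: one line per skill."""
    return "\n".join(f"- {s.name}: {s.description}" for s in stubs)


def load_skill(stubs: List[SkillStub], name: str) -> str:
    """Full instructions, read only when the model decides it needs this skill."""
    for s in stubs:
        if s.name == name:
            return s.path.read_text(encoding="utf-8")
    raise KeyError(f"unknown skill: {name}")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "expense-report").mkdir()
        (root / "expense-report" / "SKILL.md").write_text(
            "---\nname: expense-report\n"
            "description: Turn receipts into a monthly expense report.\n"
            "---\n\n# Steps\n1. Run scripts/parse_receipts.py\n",
            encoding="utf-8",
        )
        stubs = discover_skills(root)
        print(skills_index(stubs))
        print(load_skill(stubs, "expense-report"))
```

The design point is the asymmetry: a hundred skills cost a hundred one-line descriptions up front, and the full body of a skill only lands in context when it’s actually used.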

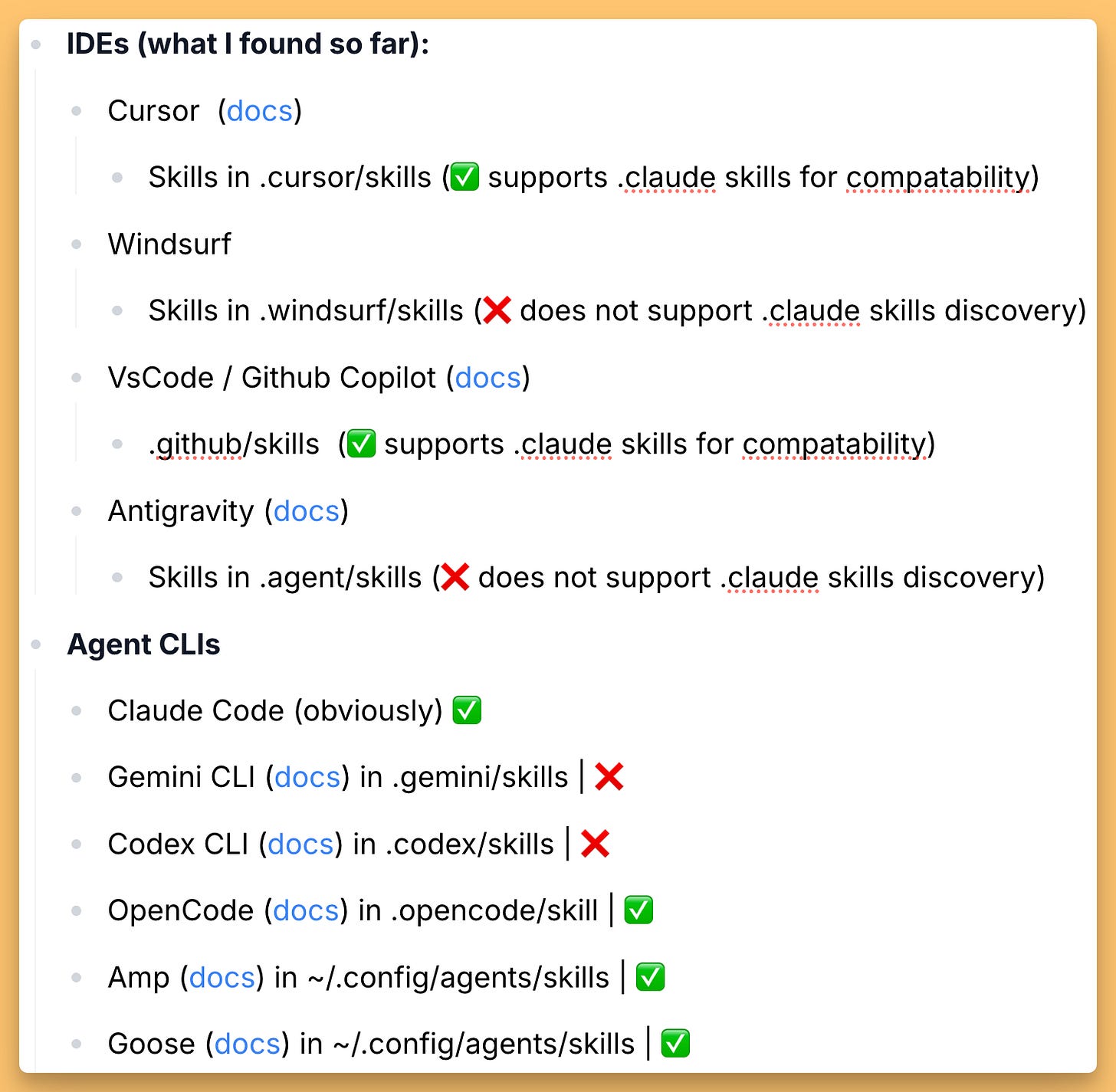

The technical structure is dead simple, but the implications are huge. Skills create a portable, reusable, composable way to give agents domain expertise, and they now work across most major harnesses. That means you can build a skill once and use it in Claude, Cursor, AMP, or any other agent tool that supports the standard.

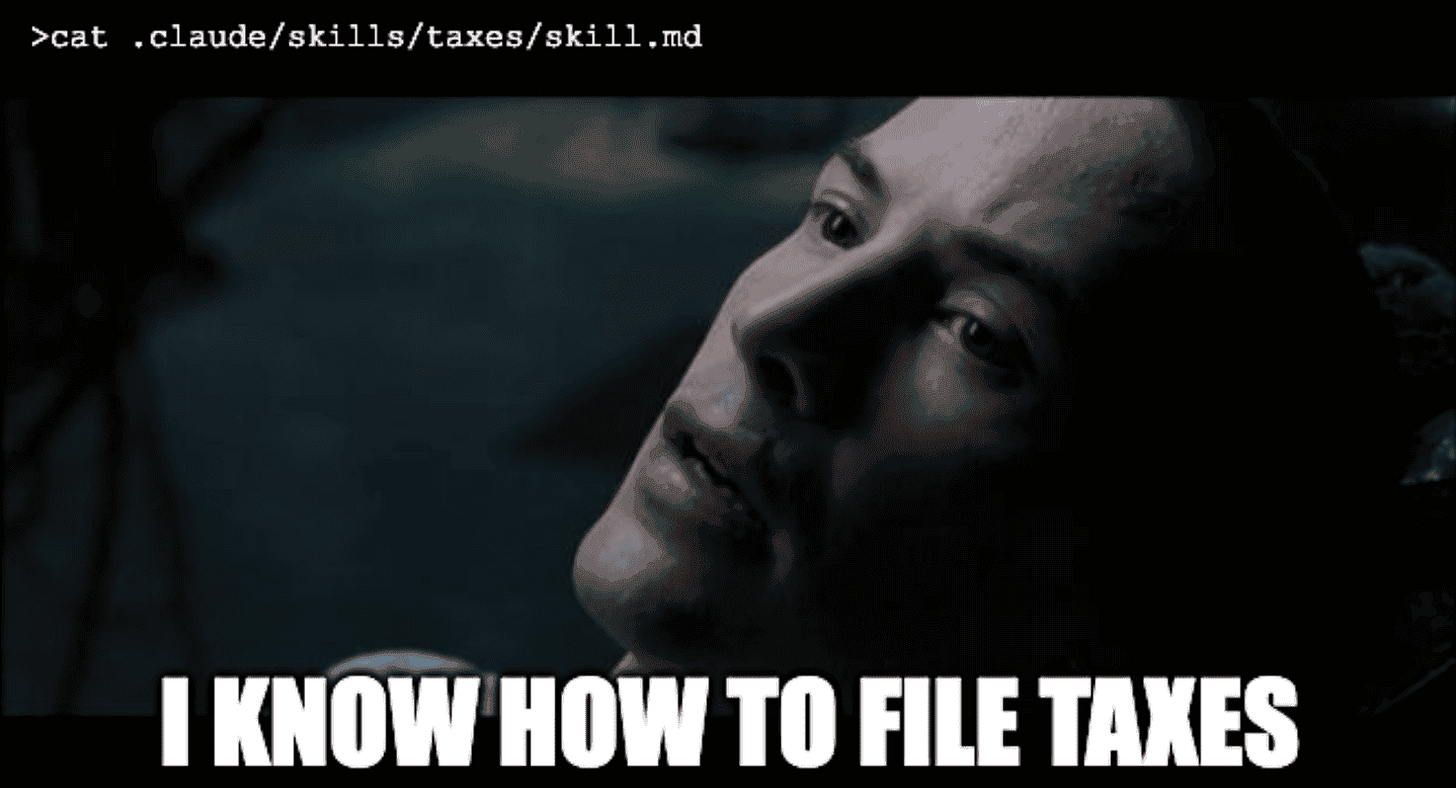

Eleanor made the point that skills are an admission that we now have general‑purpose agents. The model can do the work, but it doesn’t know your preferences, your domain, your workflows. Skills are how you teach it those things. We also talked about how scripts inside skills reduce variance because you’re not asking the model to invent code every time; you’re just invoking trusted tools.

What really clicked for me this week is how easy it is to create skills using an agent. You don’t need to hand‑craft directories. You can describe your workflow, or even just do the task once in chat, and then ask the agent to turn it into a skill. It really is very, very simple! And that’s likely why everyone is adopting this format for extending their agents’ knowledge.
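
And here’s roughly what the agent ends up writing when you ask it to turn a workflow into a skill, as a small Python sketch. The `write_skill()` helper is hypothetical; the name and description checks reflect the constraints as I understand them from the spec (lowercase letters, digits and hyphens, up to 64 characters for the name; up to 1024 characters for the description), so double-check against the current standard.

```python
import re
import tempfile
from pathlib import Path
from typing import Dict, List, Optional

# Name rule as I understand the spec: lowercase letters, digits, single hyphens.
NAME_RE = re.compile(r"^[a-z0-9]+(-[a-z0-9]+)*$")


def write_skill(
    root: Path,
    name: str,
    description: str,
    steps: List[str],
    scripts: Optional[Dict[str, str]] = None,
) -> Path:
    """Write <root>/<name>/SKILL.md plus optional helper scripts (hypothetical helper)."""
    if len(name) > 64 or not NAME_RE.match(name):
        raise ValueError(f"invalid skill name: {name!r}")
    if not description or len(description) > 1024:
        raise ValueError("description must be 1-1024 characters")
    skill_dir = root / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    body = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    (skill_dir / "SKILL.md").write_text(
        f"---\nname: {name}\ndescription: {description}\n---\n\n# {name}\n\n{body}\n",
        encoding="utf-8",
    )
    # Trusted scripts live next to the instructions so the agent runs them
    # instead of re-inventing the code every time.
    for filename, source in (scripts or {}).items():
        script_path = skill_dir / "scripts" / filename
        script_path.parent.mkdir(exist_ok=True)
        script_path.write_text(source, encoding="utf-8")
    return skill_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = write_skill(
            Path(tmp),
            "weekly-newsletter",
            "Draft the weekly newsletter from show notes and links.",
            ["Collect links from the show notes", "Write the TL;DR section"],
            scripts={"collect_links.py": "print('collect links here')\n"},
        )
        print((path / "SKILL.md").read_text(encoding="utf-8"))
```

In practice you never call something like this yourself; the point is that the output of “turn this chat into a skill” is just a folder with a markdown file and maybe a script or two.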

Get started with skills

If you use Claude Chat, the simplest way to get started is to ask Claude to review your previous conversations and suggest a skill for you. Or, at the end of a long chat where you went back and forth with Claude on a task, ask it to distill the important parts into a skill. If you want to use other people’s skills, and you are using Claude Code, or any of the supported IDE/Agents, here’s where to download the folders and install them:

If you aren’t a developer and don’t subscribe to Claude, well, I got good news for you! I vibecoded skill support for every LLM 👇

The Skills Demo That Changed My Mind

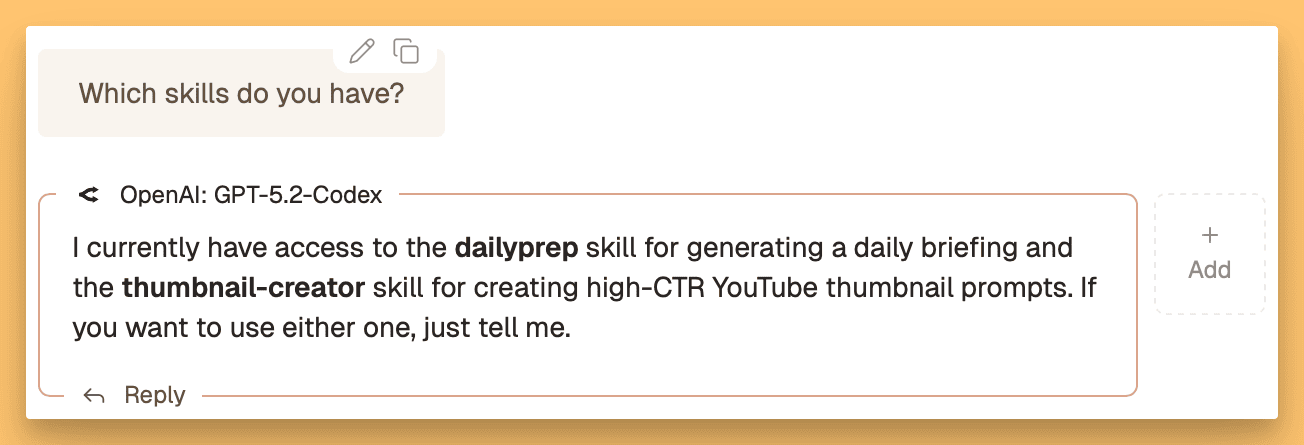

I was resistant to skills at first, mostly because I wanted them inside my chat interface and not just in CLI tools. And I wasn’t subscribed to Claude for a while. Then I realized I could add skill support directly to Chorus, the open‑source multi‑model chat app, and I used Claude Code plus Ralph loops to vibe code it in a few hours. Now I can run skills with GPT‑5.2 Codex, Claude Opus, and Gemini from the same chat interface. That was my “I know kung fu” moment.

If you want to try Chorus with skills enabled, you can download my release here! It’s Mac-only and the builds are unsigned, so macOS will complain, but you can run them anyway.

And if you want to explore more awesome skills, check out Vercel’s React Best Practices skills and UI Skills. It’s the beginning of a new kind of distribution: knowledge packaged as skills, shared like open source libraries (or paid for!).

Open Source AI

Baichuan-M3 is a 235B medical LLM fine-tuned from Qwen3, released under Apache 2.0. The interesting claim here is that it beats GPT-5.2 on OpenAI’s HealthBench, including a remarkably low 3.5% hallucination rate.

What makes it different from typical medical models is that it’s trained to run actual clinical consultations, asking follow-up questions and reasoning through differential diagnoses rather than just spitting out answers. Nisten pointed out that if you’re going to fine-tune something for healthcare, Qwen3 MoE is an excellent base because of its multilingual capabilities, which matter a lot in clinical settings. You can run it with vLLM or SGLang if you’ve got the hardware. (HF)

LongCat-Flash-Thinking-2601 from Meituan is a 560B MoE (27B active) released fully MIT-licensed. It’s specifically built for agentic tasks, scoring well on tool-use benchmarks like τ²-Bench and BrowseComp.

There’s a “Heavy Thinking” mode that pushes AIME-25 to 100%. What I like about this one is the training philosophy: they inject noise and broken tools during RL to simulate messy real-world conditions, which is exactly what production agents deal with. You can try it at longcat.chat and find it on GitHub.

We also saw Google release MedGemma this week (blog), a 4B model optimized for medical imaging like X-rays and CT scans, and TranslateGemma (X), a family of translation models (4B, 12B and 27B) with the 4B small enough to run on-device, which seem kind of cool! Unfortunately, I didn’t have tons of time to dive into them.

Vision, Voice & Art (Rapid Fire)

Veo 3.1 adds native vertical video, 4K output, and better consistency in the Gemini API. Huge for creators (blog)

Viral Kling motion‑transfer vids are breaking people’s brains about what AI video pipelines will look like.

Pocket TTS from Kyutai Labs: a 100M‑parameter open‑source TTS model that runs on CPU and clones voices from seconds of audio (X)

GLM‑Image drops as an open‑source hybrid AR + diffusion image model with genuinely excellent text rendering, but it’s pretty weak at everything else

Black Forest Labs drops open source Flux.2 [Klein] 4B and 9B small models that create images super fast! (X, Fal, HF)

Phew, ok. I was super excited about this one and I’m really, really happy with the result. I was joking on the pod that to prepare for this episode, I not only had to collect all the news but also ramp up on Agent Skills, and I wished I could upload information like in the Matrix, but alas, no such luck. I also really enjoyed vibe coding a whole feature into Chorus just to explore skills fully; my mind was absolutely blown when it worked after 3 hours of Ralphing!

See you next week! I think I have one more super exciting thing to play with before I can talk about it!

TL;DR and Show Notes

Hosts & Guests

Alex Volkov - AI Evangelist & Weights & Biases (@altryne)

Co-Hosts: Wolfram Ravenwolf (@WolframRvnwlf), Yam Peleg (@yampeleg), Nisten Tahiraj (@nisten), LDJ (@ldjconfirmed)

Guest: Eleanor Berger (@intellectronica)

Open Source LLMs

Baichuan-M3 - A 235B open-source medical LLM that beats GPT-5.2 on HealthBench with a 3.5% hallucination rate, featuring full clinical consultation capabilities. (HF, Blog, X Announcement)

LongCat-Flash-Thinking-2601 - Meituan’s 560B MoE (27B active) agentic reasoning model, fully MIT licensed. Features “Heavy Thinking” mode scoring 100% on AIME-25. (GitHub, Demo, X Announcement)

TranslateGemma - Google’s open translation family (4B, 12B, 27B) supporting 55 languages. The 4B model runs entirely on-device. (Arxiv, Kaggle, X Announcement)

MedGemma 1.5 & MedASR - Native 3D imaging support (CT/MRI) and a speech model that beats Whisper v3 by 82% on clinical dictation error rates. (MedGemma HF, MedASR HF, Arxiv)

Big CO LLMs + APIs

Claude Cowork - Anthropic’s new desktop agent allows non-coders to give Claude file system and browser access to perform complex tasks. (TechCrunch, X Coverage)

GPT-5.2 Codex - Now in the API ($1.75/1M input). Features native context compaction and state-of-the-art performance for long-running agentic loops. (Blog, Pricing)

Cursor & FastRenderer - Cursor used GPT-5.2 Codex to build a 3M+ line Rust browser from scratch in one week of autonomous coding. (Blog, GitHub, X Thread)

Gemini Personal Intelligence - Google leverages its data moat, letting Gemini reason across Gmail, Photos, and Search for hyper-personalized proactive help. (Blog, X Announcement)

Partnerships & Drama

Apple + Gemini - Apple officially selects Gemini to power Siri backend capabilities.

OpenAI + Cerebras - A $10B deal for 750MW of high-speed compute through 2028. (Announcement)

Thinking Machines - Co-founders and CTO return to OpenAI amidst drama; Soumith Chintala named new CTO.

This Week’s Buzz

WeaveHacks 3 - Self-Improving Agents Hackathon in SF (Jan 31-Feb 1). (Sign Up Here)

Vision, Voice & Audio

Veo 3.1 - Native 9:16 vertical video, 4K resolution, and reference image support in Gemini API. (Docs)

Pocket TTS - A 100M parameter CPU-only model from Kyutai Labs that clones voices from 5s of audio. (GitHub, HF)

GLM-Image - Hybrid AR + Diffusion model with SOTA text rendering. (HF, GitHub)

FLUX.2 [klein] - Black Forest Labs releases fast 4B (Apache 2.0) and 9B models for sub-second image gen. (HF Collection, X Announcement)

Kling Motion Transfer - Viral example of AI video pipelines changing Hollywood workflows. (X Thread)

Deep Dive: Agent Skills