Hey everyone, Alex here (yes the real me if you’re reading this)

The weeks are getting crazier, but what OpenAI pulled off this week, with a whole new social media app attached to their latest AI breakthrough, is definitely breathtaking! Sora 2 was released and instantly became a viral sensation, shooting into the top 3 free iOS apps on the App Store, with millions of videos watched and remixed.

On weeks like these, even huge releases like Claude 4.5 take a backseat, but we still covered them!

For listeners of the pod, the second half of the show was very visual-heavy, so it may be worth watching the YT video attached in a comment if you want to fully experience the Sora revolution with us! (And if you want a SORA invite but don’t have one yet, more on that below.)

Sora 2 - the AI video model that signifies a new era of social media

Look, you’ve probably already heard about the Sora 2 release, but in case you haven’t: OpenAI released a whole new model and attached it to a new, AI-powered social media experiment in the form of a very addictive TikTok-style feed. Besides being hyper-realistic and producing sound and true-to-source voice-overs, Sora 2 asks you to create your own “Cameo” by taking a quick video, and then lets you be featured in your own (and your friends’) videos.

This is a significant break from the previous “slop”-based Meta Vibes because, well, everyone loves seeing themselves as the star of the show!

Cameos are a stroke of genius. What’s more, you can allow everyone to use your Cameo, which is what Sam Altman did at launch, letting everyone Cameo him and turning him, almost instantly, into one of the most meme-able (and approachable) people on the planet!

Sam sharing his likeness like this for the sake of the app achieved a few things: it added trust in the safety features, made the app instantly viral, and showed folks they shouldn’t be afraid of adding their own likeness.

Vibes based feed and remixing

Sora 2 is also unique in that it’s the first social media with UGC (user-generated content) where content can ONLY be generated, and all SORA content is created within the app. It’s not possible to upload pictures that have people in them to create posts, and you can only create posts with other folks if you have access to their Cameos, or by Remixing existing creations.

Remixing is also a way to let users “participate” in the creation process, by adding their own twist and vibes!

Speaking of Vibes, while the SORA app has an algorithmic For You page, it also has a completely novel way to interact with the algorithm: the Pick a Mood feature, where you describe in natural language which type of content you want to see, or not see!

I believe this feature will come to all social media platforms later, as it’s such a game changer. Want only content in a specific language? Or content that doesn’t have Sam Altman in it? Just ask!

Content that makes you feel good

The most interesting thing about the type of content is that there’s no sexualisation (because all content is moderated by OpenAI’s strong filters), no gore, etc. OpenAI has clearly been thinking about teenagers and has added parental controls to the mix, such as being able to turn off the For You page completely.

Additionally, SORA seems to be a very funny model, and I mean this literally. You can ask the video generation for a joke and you’ll often get a funny one. The scene setup, the dialogue, the things it does even unprompted are genuinely entertaining.

AI + Product = Profit?

OpenAI shows that they are one of the world’s best product labs, not just a foundational AI lab. Most AI advancements are tied to products, and in this case the whole experience is so polished that it’s hard to accept it’s a brand new app from a company that didn’t do social before. There’s very little buggy behavior, videos load quickly, there are even DMs! I’m thoroughly impressed and am immersing myself in the SORA sphere. Please give me a follow there and feel free to use my Cameo by tagging @altryne in there. I love seeing how folks have used my Cameo, it makes me laugh 😂

The copyright question is.. wild

Remember last year when I asked Sam why Advanced Voice Mode couldn’t sing Happy Birthday? He said they didn’t have classifiers to detect IP violations. Well, apparently that’s not a concern anymore, because SORA 2 will happily generate perfect South Park episodes, Rick and Morty scenes, and Pokemon battles. They’re not even pretending they didn’t train on this stuff. You can even generate videos with any dead famous person (I’ve had Zoom meetings with Michael Jackson and 2Pac, JFK and Mister Rogers).

Our friend Ryan Carson already used it to create a YouTube short ad for his startup in two minutes. What would have cost $100K and three months now takes six generations and you’re done. This is the real game-changer for businesses.

Getting invited

EDIT: If you’re reading this on Friday, try the code `FRIYAY` and let me know in comments if it worked for you 🙏

I wish I had invites for all of you, but every invited user gets 4 invites to pass on, so we shared a bunch of invites during the live show and asked folks to come back and invite other listeners. This went on for half an hour, so I bet we’ve got quite a few of you in! If you’re still looking for an invite, you can visit the thread on X, see who claimed an invite, and ask them for one. Tell them you’re also a ThursdAI listener, and hopefully they will return the favor!

Alternatively, OpenAI employees often post codes with a huge invite ratio, so follow @GabrielPeterss4 who often posts codes and you can get in there fairly quick, and if you’re not in the US, I heard a VPN works well. Just don’t forget to follow me on there as well 😉

A Week with OpenAI Pulse: The Real Agentic Future is Here

Listen to me, this may be a hot take. I think OpenAI Pulse is a bigger news story than Sora. I told you about Pulse last week, but today on the show I was able to share my week’s worth of experience, and honestly, it’s now the first thing I look at when I wake up in the morning after brushing my teeth!

While Sora is changing media, Pulse is changing how we interact with AI on a fundamental level. Released to Pro subscribers for now, Pulse is an agentic, personalized feed that works for you behind the scenes. Every morning, it delivers a briefing based on your interests, your past conversations, your calendar—everything. It’s the first asynchronous AI agent I’ve used that feels truly proactive.

You don’t have to trigger it. It just works. It knew I had a flight to Atlanta and gave me tips. I told it I was interested in Halloween ideas for my kids, and now it’s feeding me suggestions. Most impressively, this week it surfaced a new open-source video model, Kandinsky 5.0, that I hadn’t seen anywhere on X or my usual news feeds. An agent found something new and relevant for my show, without me even asking.

This is it. This is the life-changing level of helpfulness we’ve all been waiting for from AI. Personalized, proactive agents are the future, and Pulse is the first taste of it that feels real. I cannot wait for my next Pulse every morning.

This Week’s Buzz: The AI Build-Out is NOT a Bubble

This show is powered by Weights & Biases from CoreWeave, and this week that’s more relevant than ever. I just got back from a company-wide offsite where we got a glimpse into the future of AI infrastructure, and folks, the scale is mind-boggling.

CoreWeave, our parent company, is one of the key players providing the GPU infrastructure that powers companies like OpenAI and Meta. And the commitments being made are astronomical. In the past few months, CoreWeave has locked in a $22.4B deal with OpenAI, a $14.2B pact with Meta, and a $6.3B “backstop” guarantee with NVIDIA that runs through 2032.

If you hear anyone talking about an “AI bubble,” show them these numbers. These are multi-year, multi-billion dollar commitments to build the foundational compute layer for the next decade of AI. The demand is real, and it’s accelerating. And the best part? As a Weights & Biases user, you have access to this same best-in-class infrastructure that runs OpenAI through our inference services. Try wandb.me/inference, and let me know if you need a bit of a credit boost!

Claude Sonnet 4.5: The New Coding King Has a Few Quirks

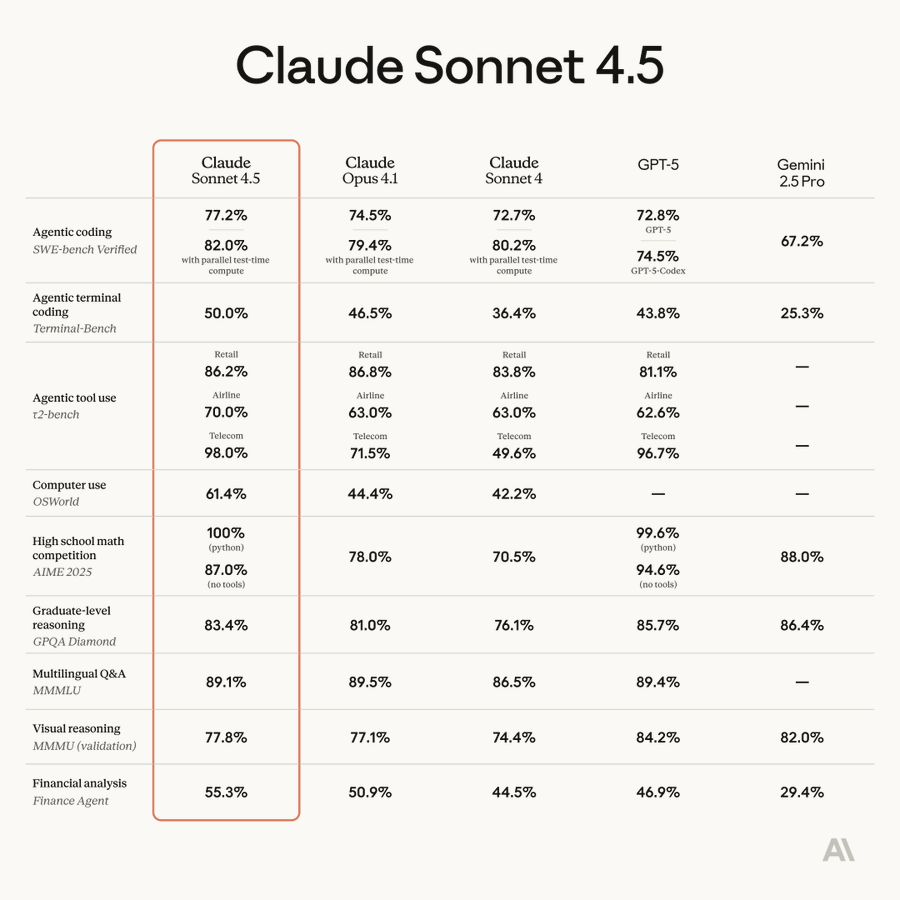

On any other week, Anthropic’s release of Claude Sonnet 4.5 would’ve been the headline news. They’re positioning it as the new best model for coding and complex agents, and the benchmarks are seriously impressive. It matches or beats their previous top-tier model, Opus 4.1, on many difficult evals, all while keeping the same affordable price as the previous Sonnet.

One of the most significant jumps is on the OSWorld benchmark, which tests an agent’s ability to use a computer: opening files, manipulating windows, and interacting with applications. Sonnet 4.5 scored a whopping 61.4%, a massive leap from Opus 4.1’s 44%. This clearly signals that Anthropic is doubling down on building agents that can act as real digital assistants.

However, the real-world experience has been a bit of a mixed bag. My co-host Ryan Carson, whose company Amp switched over to 4.5 right away, noted some regressions and strange errors, saying they’re even considering switching back to the previous version until the rough edges are smoothed out. Nisten also found it could be more susceptible to “slop catalysts” in prompting. It seems that while it’s incredibly powerful, it might require some re-prompting and adjustments to get the best, most stable results. The jury’s still out, but it’s a potent new tool in the developer’s arsenal.

Open Source LLMs: DeepSeek’s Attention Revolution

Despite the massive news from the big companies, open source still brought the heat this week, with one release in particular representing a fundamental breakthrough.

DeepSeek released V3.2 Experimental, and the big news is DSA, or DeepSeek Sparse Attention. For those who don’t know, one of the biggest bottlenecks in LLMs is the “quadratic attention problem”—as you double the context length, the computation and memory required quadruple. This makes very long contexts incredibly expensive. DeepSeek’s new architecture makes the cost curve nearly flat, allowing for massive context at a fraction of the cost, all while maintaining the same SOTA performance as their previous model.
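To make the doubling math concrete, here is a rough back-of-the-envelope sketch. It is my own illustration, not DeepSeek's code: it simply counts query-key score computations for full attention versus a generic "each query only looks at its top-k keys" scheme. The function names and the budget `K = 2048` are assumptions picked for illustration, and the sketch ignores whatever it costs to choose which keys to keep.

```python
def dense_pairs(n: int) -> int:
    """Query-key scores computed by full attention over n tokens."""
    return n * n


def topk_sparse_pairs(n: int, k: int) -> int:
    """Scores computed if every query attends to at most k selected keys."""
    return n * min(n, k)


if __name__ == "__main__":
    K = 2048  # hypothetical per-query key budget, for illustration only
    for n in (8_192, 16_384, 32_768, 65_536, 131_072):
        dense = dense_pairs(n)
        sparse = topk_sparse_pairs(n, K)
        print(f"{n:>7} tokens: dense={dense:.2e}  sparse={sparse:.2e}  "
              f"ratio={dense / sparse:.0f}x")
```

Doubling `n` quadruples the dense count but only doubles the sparse one, which is why the gap keeps widening as contexts get longer.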

This is one of those “unhobbling moments,” like the invention of RoPE or GRPO, that moves the entire field forward. Everyone will be able to implement this, making all open-source models faster and more efficient. It’s a huge deal.

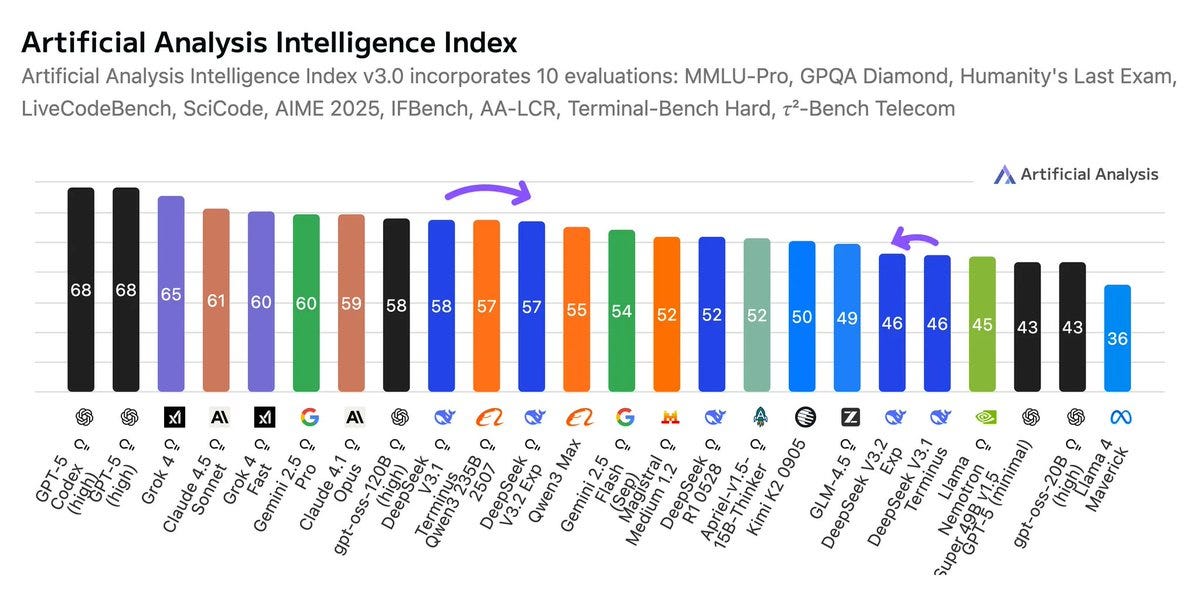

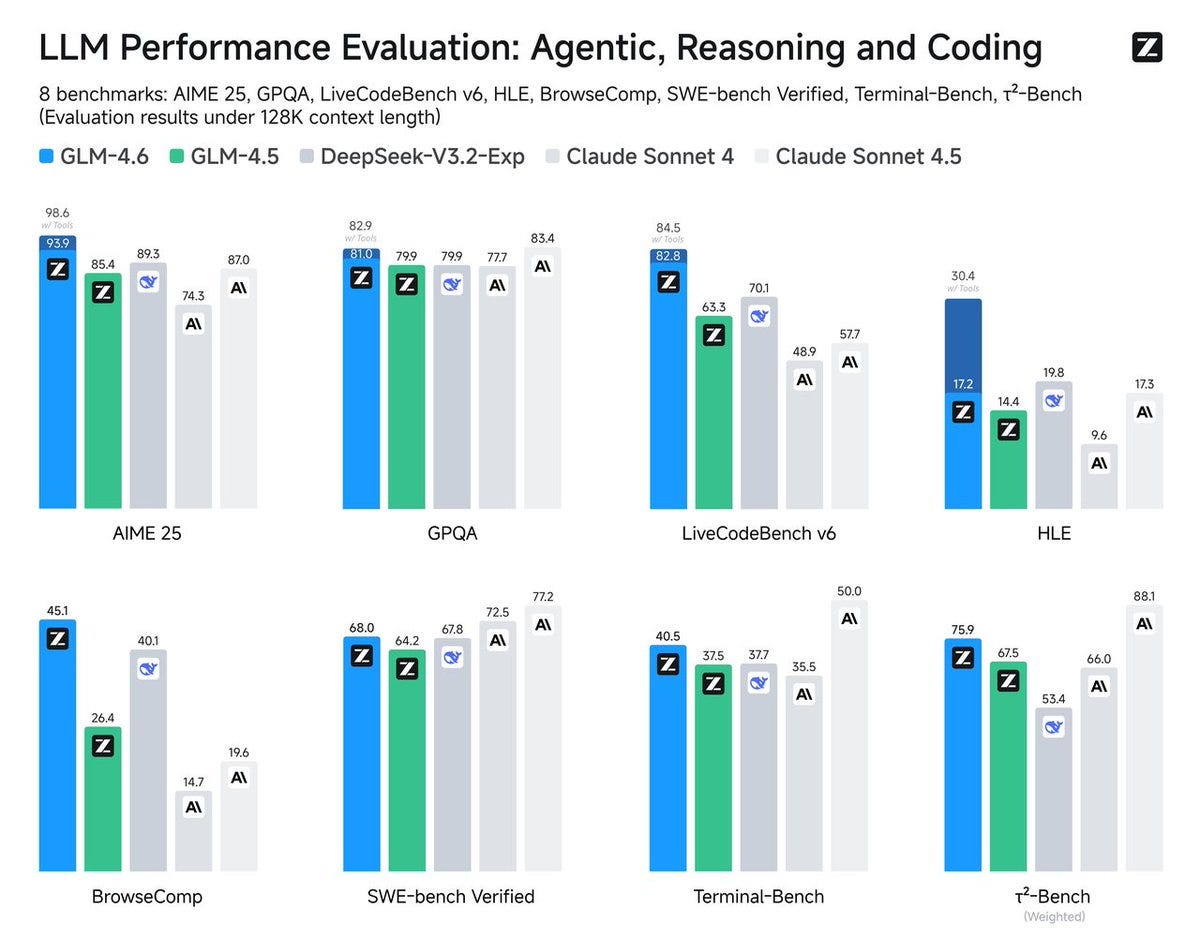

We also saw major releases from Z.ai with GLM-4.6, an advanced agentic model with a 200K context window that’s getting incredibly close to Claude’s performance, and a surprise from ServiceNow SLAM Labs, who dropped Apriel-1.5-15B, a frontier-level multimodal model that’s fully open source. It’s amazing to see a huge enterprise company contributing to the open-source ecosystem at this level.

Multimodal Madness: Audio, Video, and Image Models updates

The torrent of releases continued across all modalities this week. They were a bit overshadowed by SORA, but they definitely still happened (all links in the TL;DR section).

In voice and audio, our friends at Hume AI launched Octave 2, their next-gen text-to-speech model that’s faster, cheaper, and now fluent in over 11 languages. We also saw LFM2-Audio from Liquid AI, an incredibly efficient 1.5B parameter end-to-end audio model with sub-100ms latency.

In video, the open-source community answered Sora 2 with Kandinsky 5.0, a new 2B parameter text-to-video model that is claiming the #1 spot in open source and looks incredibly promising. And as I mentioned on the show, I wouldn’t have even known about it if it weren’t for my new personal AI agent, Pulse!

Finally, in AI art, Tencent dropped a monster: HunyuanImage 3.0, a massive 80-billion-parameter open-source text-to-image model. The scale of these open-source releases is just breathtaking.

Agentic browsing for all is here

Just as I was wrapping up the show, Perplexity decided to let everyone in to use their Comet agentic browser. I strongly recommend it; I switched to it recently and it’s great!

I’m using it right now to run some agents. It can click stuff, scroll through stuff, and collect info across tabs. Give it a spin, really, it’s worth getting into the habit of agentic browsing!

Many of you were asking me for invites before, well, it’s free access now, download it here (not sponsored, I just really like it)

Phew, ok, this was a WILD week, and I’m itching to get back to creating and seeing all the folks who used my Cameo on SORA, which you can see too btw if you hit the Cameo button here (https://sora.chatgpt.com/profile/altryne)

Next week is OpenAI’s Dev Day, and for the third year in a row we’re going to cover it, so follow us on social media and tune in Monday 8:30am Pacific. We’ll be live streaming from the location and re-streaming the keynote with Sam so don’t miss it!

TL;DR and Show Notes

Hosts and Guests:

Alex Volkov - AI Evangelist at Weights & Biases (@altryne)

Co Hosts - @WolframRvnwlf @yampeleg @nisten @ldjconfirmed @ryancarson

Big CO LLMs + APIs:

OpenAI releases SORA2 + a new social media app (X, Blog, App download)

Anthropic releases Claude Sonnet 4.5 - same price as the previous Sonnet - leading coding model (X)

OpenAI launches Instant Checkout & Agentic Commerce Protocol (X, Protocol)

Open Source LLMs:

DeepSeek V3.2 Exp: Sparse Attention, Cost Drop (X, Evals, HF)

Apriel-1.5-15B-Thinker by ServiceNow SLAM Labs (X, HF, Arxiv)

This Week’s Buzz:

CoreWeave locks $22.4B OpenAI, a $6.3B NVIDIA “backstop”, and a $14.2B Meta compute pact (X)

Voice & Audio:

Vision & Video:

AI Art & Diffusion & 3D: