ThursdAI TL;DR - November 23

TL;DR of all topics covered:

OpenAI Drama

Sam... there and back again.

Open Source LLMs

Intel finetuned Mistral and is on top of leaderboards with neural-chat-7B (Thread, HF, Github)

And trained on new Habana hardware!

Yi-34B Chat - 4-bit and 8-bit chat finetune for Yi-34 (Card, Demo)

Microsoft released Orca 2 - it's underwhelming (Thread from Eric, HF, Blog)

System2Attention - Uses LLM reasoning to figure out what to attend to (Thread, Paper)

Lookahead decoding to speed up LLM inference by 2x (Lmsys blog, Github)

Big CO LLMs + APIs

Anthropic Claude 2.1 - 200K context, 2x fewer hallucinations, tool use finetune (Announcement, Blog, Ctx length analysis)

InflectionAI releases Inflection 2 (Announcement, Blog)

Bard can summarize youtube videos now

Vision

Voice

OpenAI added voice for free accounts (Announcement)

11Labs released speech to speech including intonations (Announcement, Demo)

Whisper.cpp - with OpenAI like drop in replacement API server (Announcement)

AI Art & Diffusion

Stable Video Diffusion - Stability releases text2video and img2video (Announcement, Try it)

Zip-Lora - combine diffusion LoRAs together - Nataniel Ruiz (Announcement, Blog)

Some folks are getting NERFs out from SVD (Stable Video Diffusion) (link)

LCM everywhere - In Krea, In Tl;Draw, in Fal, on Hugging Face

Tools

Ctrl+Altman+Delete weekend

If you're subscribed to ThursdAI, then you most likely already know the full story of the crazy OpenAI weekend. Here's my super super quick summary (and if you want full blow-by-blow coverage, Ben Tossel has a great one here)

Sam got fired, Greg quit, Mira flipped, then Ilya flipped. Satya played some chess, there was an interim CEO for 54 hours, all employees sent hearts then signed a letter, none of the 3 co-founders are on the board anymore, Ilya's still there, the company is aligned AF going into '24, and Satya is somehow a winner in all this.

The biggest winner to me is open source folks, who got tons of interest suddenly, and specifically, everyone seems to converge on the OpenHermes 2.5 Mistral from Teknium (Nous Research) as the best model around!

However, I want to shout out the incredible cohesion that came out of the folks at OpenAI. I created a list of around 120 employees on X, and all of them were basically aligned the whole weekend, from sending ❤️ to signing the letter to showing how happy they are that Sam and Greg are back!

Yay

This Week's Buzz from WandB (aka what I learned this week)

As I’m still onboarding, the main thing I’ve learned this week is how transparent Weights & Biases is internally. During the whole OAI saga, Lukas, the co-founder, sent a long message in Slack addressing the situation (after all, OpenAI is a big customer for W&B, GPT-4 was trained on W&B end to end) and answering questions about how this situation can affect us and the business.

Additionally, another co-founder, Shawn Lewis, shared a recording of his update to the BOD of WandB about our progress on the product side. It’s really really refreshing to see this information voluntarily shared with the company 👏

The first core value of W&B is Honesty, and it includes transparency outside of matters like personal HR stuff, and after hearing about this during onboarding, it’s great to see that the company lives it in practice 👏

I also learned that almost every loss curve image that you see on X is a W&B dashboard screenshot ✨ and while we do have a share functionality, it’s not built for viral X sharing haha. So in the spirit of transparency, here’s a video I recorded and shared with product, plus a feature request to make these screenshots way more attractive and clear that it’s W&B

Open Source LLMs

Intel passes Hermes on SOTA with a DPO Mistral Finetune (Thread, Hugging Face, Github)

Yes, that Intel, one of the... oldest computing companies in the world, not only comes out strong with the best (on benchmarks) open source LLM, it also does DPO, and the model was trained on completely new hardware and ships with an Apache 2 license!

Here's Yam's TL;DR for the DPO (Direct Preference Optimization) technique:

Given a prompt and a pair of completions, train the model to prefer one over the other.

This model was trained on prompts from SlimOrca's dataset where each has one GPT-4 completion and one LLaMA-13B completion. The model was trained to prefer the GPT-4 completion over the LLaMA-13B one.
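To make the "prefer one over the other" part concrete, here's a minimal sketch of the per-pair DPO loss as I understand it from the DPO paper. This is not Intel's training code; the function name, the argument names and the `beta` default are my own choices for illustration. The inputs are the summed log-probabilities of each completion under the model being trained (the policy) and under a frozen reference copy of it.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO loss (illustrative sketch, names are assumptions).

    Each argument is the log-probability of a whole completion given the prompt.
    'chosen' would be the GPT-4 completion, 'rejected' the LLaMA-13B one.
    """
    # How much more (or less) likely each completion became vs. the reference model
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    x = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) written as a numerically stable softplus(-x)
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))

# Policy identical to the reference: no preference yet, loss is log(2)
no_preference = dpo_loss(-3.0, -3.0, -3.0, -3.0)
# Policy has shifted probability towards the chosen completion: loss goes down
prefers_chosen = dpo_loss(-1.0, -5.0, -3.0, -3.0)
print(no_preference, prefers_chosen)
```

The loss only shrinks when the policy raises the chosen completion relative to the rejected one, measured against the reference model, which is what keeps the finetune from drifting too far from where it started.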

Additionally, even though custom hardware is involved here, Intel fully supports the Hugging Face trainer, and the whole repo is very clean and easy to understand, replicate and build things on top of (like LoRA)

LMSys Lookahead decoding (Lmsys, Github)

This method significantly speeds up LLM inference, sometimes by more than 2x, using some Jacobi iteration (don't ask me) tricks. It's compatible with the HF transformers library! I hope this comes to open source tools like llama.cpp soon!
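For the curious, here's a toy sketch of the Jacobi iteration idea that lookahead decoding builds on. It is not the LMSys implementation (which adds n-gram collection and verification on top), and `toy_next_token` is a made-up deterministic stand-in for a greedy LLM step. The point it shows: instead of generating tokens one at a time, you guess a whole block, refine every position at once from the previous guess, and stop when nothing changes. The result matches plain greedy decoding.

```python
def toy_next_token(prefix):
    # Hypothetical stand-in for "greedy next token from an LLM": any
    # deterministic function of the prefix works for this illustration.
    return (sum(prefix) * 31 + len(prefix) * 7) % 50

def greedy_decode(prompt, n):
    # Ordinary autoregressive decoding: n sequential model calls.
    seq = list(prompt)
    for _ in range(n):
        seq.append(toy_next_token(seq))
    return seq[len(prompt):]

def jacobi_decode(prompt, n):
    # Start from an arbitrary guess for all n future tokens.
    guess = [0] * n
    steps = 0
    while True:
        steps += 1
        # Update every position from the PREVIOUS guess. With a real LLM this
        # whole list would come from one parallel forward pass.
        new = [toy_next_token(list(prompt) + guess[:i]) for i in range(n)]
        if new == guess:  # fixed point reached: the guess is self-consistent
            return guess, steps
        guess = new

prompt = [3, 1, 4]
tokens, steps = jacobi_decode(prompt, 8)
print(tokens == greedy_decode(prompt, 8))  # True
```

With this toy function the iteration needs about as many steps as plain decoding, since each step only locks in one more token. The speedup on real models comes from steps where several positions become correct at once.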

Big CO LLMs + APIs

Anthropic Claude comes back with 2.1 featuring 200K context window, tool use

While folks on X thought this was new, Anthropic actually announced Claude with 200K back in May, and just gave us a 100K context window, which for the longest time was the largest context window around. I always thought they didn't have a reason to release 200K since none of their users actually wanted it, and that it was a marketing/sales decision to wait until OpenAI caught up. Remember, back then, GPT-4 was 8K and some lucky folks got 32K!

Well, OpenAI released GPT-4-turbo with 128K, so Anthropic re-trained and released Claude to gain the upper hand. I also love the tool use capabilities.

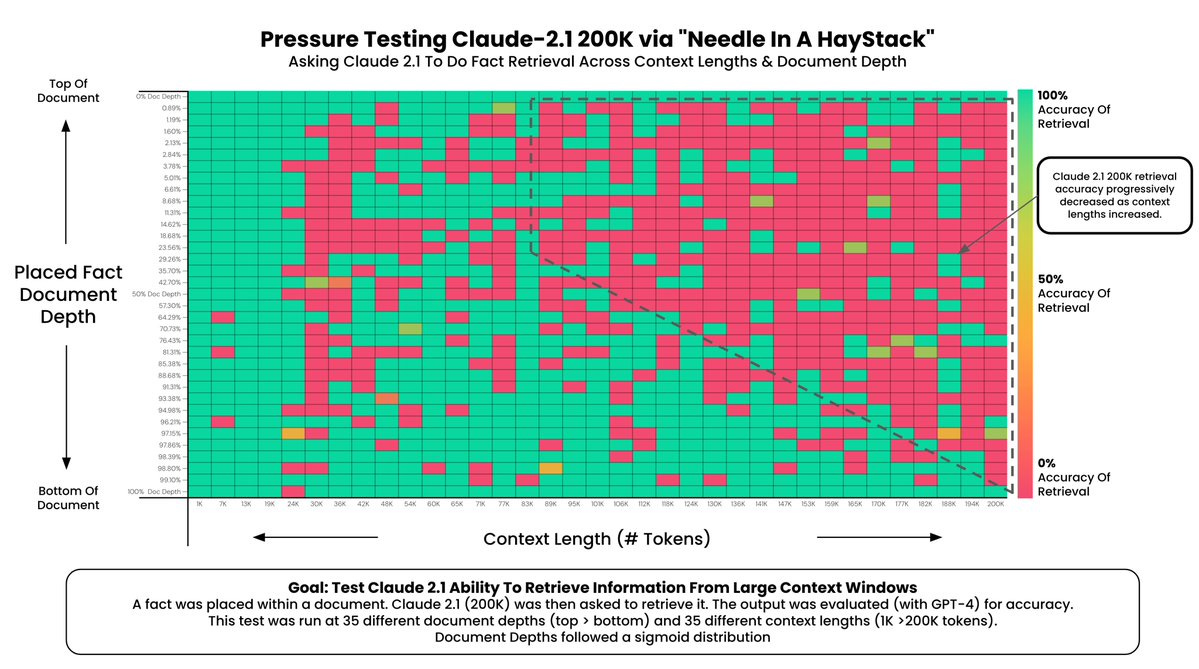

Re: the longer context window, there were a bunch of folks testing whether the 200K context window is actually all that great, and it turns out that, besides being very expensive to run (you pay per token), it also loses a bunch of information at longer lengths in needle-in-a-haystack searches. Here's an analysis by Greg Kamradt that shows that:

Starting at ~90K tokens, performance of recall at the bottom of the document started to get increasingly worse

Less context = more accuracy - This is well known, but when possible, reduce the amount of context you send to the model to increase its ability to recall.

I had similar issues back in May with their 100K tokens window (source)
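If you want to run this kind of needle-in-a-haystack check yourself, here's a rough sketch of the idea. It is not Greg's actual test harness; the function names are mine, and `forgetful_model` is a fake stand-in for a real LLM call that simply ignores the last 40% of the context so the example runs offline. You'd swap it for a function that sends the context and question to the model you want to test.

```python
def build_haystack(filler_sentences, needle, depth):
    # depth is a fraction: 0.0 puts the needle at the top, 1.0 at the bottom.
    idx = round(depth * len(filler_sentences))
    return " ".join(filler_sentences[:idx] + [needle] + filler_sentences[idx:])

def run_needle_test(ask_model, filler_sentences, needle, question, expected, depths):
    # For each depth, hide the needle, ask the question, and record whether
    # the expected fact shows up in the answer.
    results = {}
    for depth in depths:
        context = build_haystack(filler_sentences, needle, depth)
        answer = ask_model(context, question)
        results[depth] = expected.lower() in answer.lower()
    return results

def forgetful_model(context, question):
    # Hypothetical stand-in for an LLM: it only "sees" the first 60% of the context.
    visible = context[: int(len(context) * 0.6)]
    return "The secret word is pineapple." if "pineapple" in visible else "I don't know."

filler = ["The sky was grey that day."] * 100
results = run_needle_test(
    forgetful_model,
    filler,
    needle="The secret word is pineapple.",
    question="What is the secret word?",
    expected="pineapple",
    depths=[0.0, 0.5, 1.0],
)
print(results)
```

Sweeping both the depth and the total context length gives you the kind of recall grid the analysis above is based on.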

Voice & Audio

ElevenLabs has speech-to-speech

Creating a significant jump in capabilities, ElevenLabs now allows you to be the actor behind the voice! With speech to speech, they transfer the pauses, the intonation, and the emotion into the voice generation. Here's my live reaction and comparison:

Notable: Whisper.CPP now supports a server compatible with OpenAI (Announcement, Github)

AI Art & Diffusion

Stable Video diffusion - text-2-video / img-2-video foundational model (Announcement, Hugging Face, Github, DEMO)

Stability has done it again, Stable Video allows you to create incredibly consistent videos from images or just text! They are short for now, but Stability is working on extending the length, and the videos look incredible! (And thanks to friends at Fal, you can try it right now, here)

And here’s a quick gif I created with DALL-E 3 and Fal to celebrate the Laundry Buddy team at OAI while the outage was happening

Tools

Screenshot to HTML (Github)

I… what else is there to say? Someone used GPT4-Vision to … take screenshots and iteratively re-create the HTML for them. As someone who used to spend months on this exact task, I’m very very happy it’s now automated!

Happy Thanksgiving 🦃

I am really thankful to all of you who subscribe and come back every week, thank you! I wouldn't have been here without all your support, comments, and feedback! Including this incredible art piece that Andrew from spacesdashboard created just in time for our live recording, just look at those little robots! 😍

See you next week (and of course the emoji of the week is 🦃, DM or reply!)