Haaaapy first of the second month of 2024 folks, how was your Jan? Not too bad I hope? We definitely got quite a show today, the live recording turned into a proceeding of breaking news, authors who came up, deeper interview and of course... news.

This podcast episode is focusing only on the news, but you should know, that we had deeper chats with Eugene (PicoCreator) from RWKV, and a deeper dive into dataset curation and segmentation tool called Lilac, with founders Nikhil & Daniel, and also, we got a breaking news segment and Nathan Lambert (from Interconnects ) joined us to talk about the latest open source from AI2 👏

Besides that, oof what a week, started out with the news that the new Bard API (apparently with Gemini Pro + internet access) is now the 2nd best LLM in the world (According to LMSYS at least), then there was the whole thing with Miqu, which turned out to be, yes, a leak from an earlier version of a Mistral model, that leaked, and they acknowledged it, and finally the main release of LLaVa 1.6 to become the SOTA of vision models in the open source was very interesting!

TL;DR of all topics covered + Show notes

Open Source LLMs

Meta releases Code-LLama 70B - 67.8% HumanEval (Announcement, HF instruct version, HuggingChat, Perplexity)

Together added function calling + JSON mode to Mixtral, Mistral and CodeLLama

RWKV (non transformer based) Eagle-7B - (Announcement, Demo, Yam's Thread)

Someone leaks Miqu, Mistral confirms it's an old version of their model

Olmo from Allen Institute - fully open source 7B model (Data, Weights, Checkpoints, Training code) - Announcement

Datasets & Embeddings

Teknium open sources Hermes dataset (Announcement, Dataset, Lilac)

Lilac announces Garden - LLM powered clustering cloud for datasets (Announcement)

BAAI releases BGE-M3 - Multi-lingual (100+ languages), 8K context, multi functional embeddings (Announcement, Github, technical report)

Nomic AI releases Nomic Embed - fully open source embeddings (Announcement, Tech Report)

Big CO LLMs + APIs

Vision & Video

🔥 LLaVa 1.6 - 34B achieves SOTA vision model for open source models (X, Announcement, Demo)

Voice & Audio

Tools

Infinite Craft - Addicting concept combining game using LLama 2 (neal.fun/infinite-craft/)

Open Source LLMs

Meta releases CodeLLama 70B

Benches 67.8% on HumanEval (without fine-tuning) and is already available on HuggingChat, Perplexity, and TogetherAI. It has been quantized for MLX on Apple Silicon and has several finetunes, including SQLCoder, which beats GPT-4 on SQL

Has 16K context window, and is one of the top open models for code
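As a rough back-of-the-envelope check on what quantization buys for a 70B model, here is a minimal sketch that estimates the memory for the weights alone. It assumes a nominal 70 billion parameters and ignores the KV cache, activations, and runtime overhead, which is why practical figures (like the roughly 40 GB mentioned on the show for a quantized 70B) come out higher. The function name is made up for illustration.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


# Assumption: a nominal 70e9 parameters; real checkpoints differ slightly.
for bits in (16, 8, 4):
    print(f"{bits}-bit: ~{weight_memory_gb(70e9, bits):.0f} GB")
```

By this estimate the weights take about 140 GB at 16-bit, 70 GB at 8-bit, and 35 GB at 4-bit, which is why only the quantized versions are realistic on the bigger Apple Silicon machines.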

Eagle-7B RWKV based model

I was honestly a bit disappointed with the multilingual performance compared to the 1.8B StableLM, but the folks on stage told me not to compare this in a traditional sense to a transformer model, and rather to look at the potential here. So we had Eugene from the RWKV team join on stage and talk through the architecture, the fact that RWKV is the first AI model in the Linux Foundation and will always be open source, and that they are working on bigger models! That interview will be released soon.

Olmo from AI2 - new fully open source 7B model (announcement)

This announcement came as Breaking News, I got a tiny ping just before Nathan dropped a magnet link on X, and then they followed up with the Olmo release and announcement.

A fully open source 7B model, including checkpoints, weights, Weights & Biases logs (coming soon), dataset (Dolma) and just... everything that you can ask, they said they will tell you about this model. Incredible to see how open this effort is, and kudos to the team for such transparency.

They also release a 1B version of Olmo, and you can read the technical report here

Big CO LLMs + APIs

Mistral handles the leak rumors

This week the AI Twitter sphere went ablaze again, this time over an incredibly dubious (quantized only) version of a model called MIQU that performed incredibly well on benchmarks, which nobody expected. I'm not linking to it on purpose. It started a set of rumors that maybe this was a leaked version of Mistral Medium. Remember, Mistral Medium was the 4th best LLM in the world per LMSYS, and it was rumored to be a Mixture of Experts, just larger than Mistral's 8x7B.

So things didn't add up, and they kept not adding up, as folks speculated that this was a model with the LLama 70B vocab, etc. Eventually the drama came to an end when Arthur Mensch, the CEO of Mistral, did the thing Mistral is known for and just acknowledged that the leak was indeed an early version of a model they trained super quickly once they got access to their cluster, and that it was indeed based on LLama 70B, which they have since stopped using.

Leaks like this suck, especially for a company that ... gives us the 7th best LLM in the world, completely apache 2 licensed and it's really showing that they dealt with this leak with honor!

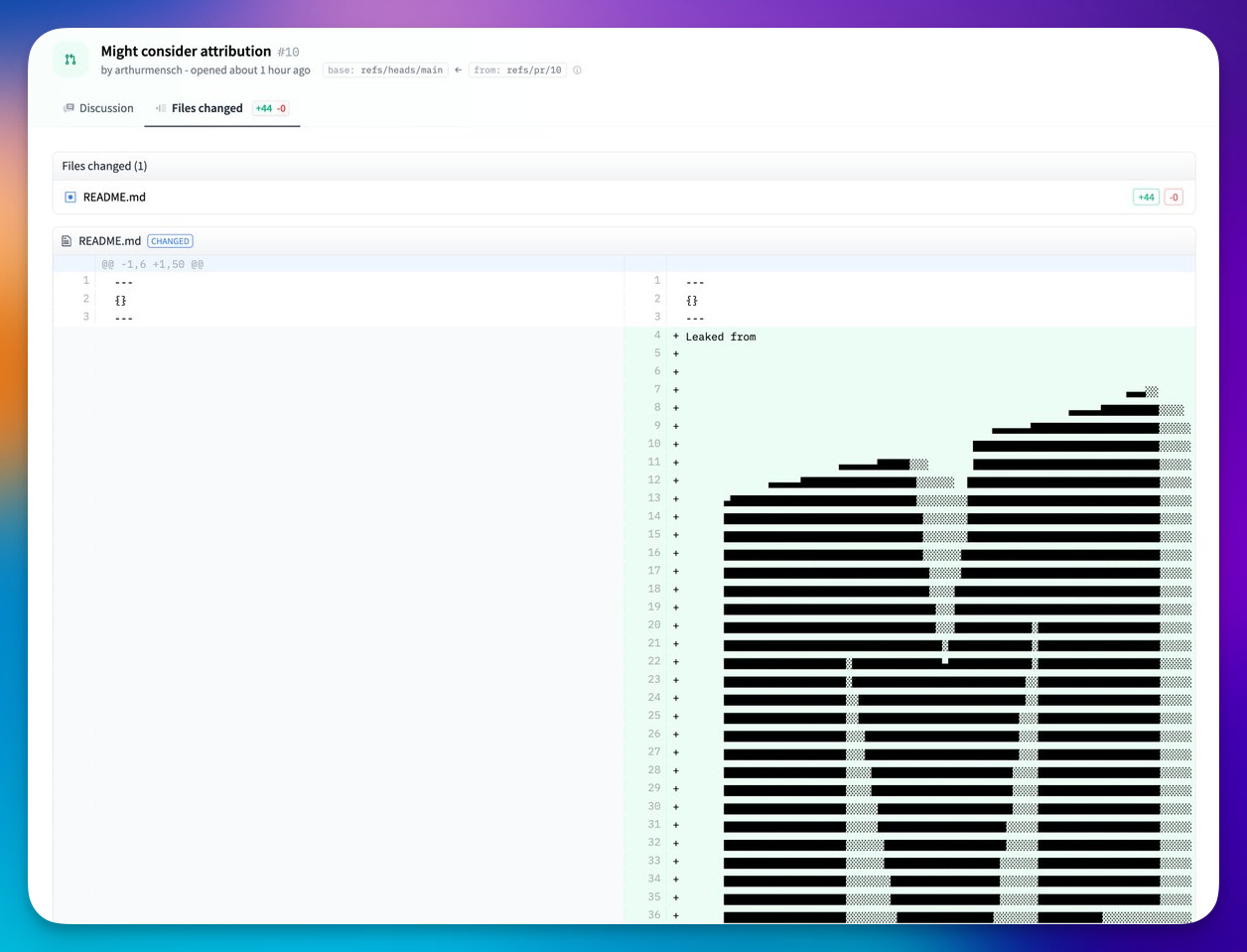

Arthur also proceeded to do a very Mistral thing and opened a pull request to the Miqu HuggingFace readme with an attribution that looks like this, with the comment "Might consider attribution" 🫳🎤

Bard (with Gemini Pro) beats all but the best GPT4 on lmsys (and I'm still not impressed, help)

This makes no sense, and yet, here we are. A definitely new version of Bard (with Gemini Pro, as they call it), on the arena since January 25, is now better than most other models, and it could potentially be because it has internet access?

But so does Perplexity and it's nowhere close, which is weird, and it was a weird result that got me and the rest of the team in the ThursdAI green room chat talking for hours! Including getting folks who usually don't reply, to reply 😆 It's been a great conversation. Where we finally left off is: Gemini Pro is decent, but I personally don't think it beats GPT4. However, most users don't care about which model serves what, but rather which of the 2 choices LMSYS has shown them answered what they asked. And if that question has Google search power behind it, that's likely one of the reasons people prefer it.

To be honest, when I tried the LMSYS version of Bard, it showed me a 502 response (which I don't think they include in the ELO score 🤔) but when I tried the updated Bard for a regular task, it performed worse (in my case) than a 1.6B parameter model running locally.

Folks from Google replied and said that it's not that the model is bad, it's that I used a person's name, and the model just... refused to answer. 😵💫 When I removed the last name it did perform OK, though nowhere near GPT-4.

In other news, they updated Bard once again today, with the ability to draw images, and again, and I'm sorry if this turns to be a negative review but, again, google what's going on?

The quality of this image generation is subpar, at least to me and other folks. I'll let you judge which image was created with IMAGEN (and trust me, I cherry picked) and which one was DALLE for the same exact prompt

This week's Buzz (What I learned with WandB this week)

Folks, the growth ML team in WandB (aka the team I'm on, the best WandB team duh) is going live!

That's right, we're going live on Monday, 2:30 PM pacific, on all our socials (X, LinkedIn, Youtube) as I'm hosting my team, and we do a recap of a very special week in December, a week where we paused other work, and built LLM powered projects for the company!

I really wanted to highlight the incredible projects, struggles, challenges and learnings of what it takes to take an AI idea and integrate it, even for a company our size that works with AI often, and I think it's going to turn out super cool, so you all are invited to check out the live stream!

Btw, this whole endeavor is an initiative by yours truly, not like some boring corporate thing I was forced to do, so if you like the content here, join the live and let us know how it went!

OpenAI releases a powerful new feature, @mentions for GPTs

This is honestly so great, and it went under the radar for many folks, so I had to record a video to explain why this is awesome. You can now @mention GPTs from the store, and they will get the context of your current conversation, so you no longer need to switch between GPT windows.

This opens the door for powerful combinations, and I show some in the video below:

Apple is coming to AI

Not the Apple Vision Pro, that's coming tomorrow and I will definitely tell you how it is! (I am getting one and am very excited, it better be good)

No, today on the Apple earnings call, Tim Cook finally said the word AI, and said that they are incredibly excited about this tech, and that we'll get to see something from them this year.

Which makes sense, given the MLX stuff, the Neural Engine, the Ml-Ferret and the tons of other stuff we've seen from them this year, Apple is definitely going to step in a big way!

Vision & Video

LLaVa 1.6 - SOTA in open source VLM models! (demo)

Wow, what a present we got from Haotian Liu and the folks at LLaVa! They upgraded the LLaVa architecture and released a few more models, ranging from 7B to 34B, creating the best open source, state-of-the-art vision models! It's significantly better at OCR (really, give it a go, it's really impressive) and they swapped the LLM backbone for Mistral and Hermes Yi-34B.

Better OCR and higher res

Uses several bases like Mistral and NousHermes 34B

Uses lmsys SGlang for faster responses (which we covered a few weeks ago)

SoTA Performance! LLaVA-1.6 achieves the best performance compared with open-source LMMs such as CogVLM or Yi-VL. Compared with commercial ones, it catches up to Gemini Pro and outperforms Qwen-VL-Plus on selected benchmarks.

Low Training Cost. LLaVA-1.6 is trained with 32 GPUs for ~1 day, with 1.3M data samples in total. The compute / training data cost is 100-1000 times smaller than others.

Honestly it's quite stunningly good, however, it does take a lot more GPU due to the resolution changes they made. Give it a try in this online DEMO and tell me what you think.

Tools

Infinite Craft Game (X, Game)

This isn't a tool, but a little LLM-based game that's so addicting, I honestly didn't have time to keep playing it, and it's super simple. I especially love this, as it uses LLama and I don't see how something like this could have been scaled without AI before, and the UI interactions are so ... tasty 😍
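To make the "combine two concepts with an LLM" idea concrete, here is a minimal sketch. It is not the game's actual code: the cache, the order-independent pairing, and the `fake_model` stand-in are all assumptions for illustration, and a real version would prompt a LLama-style model instead of looking up a dictionary.

```python
from typing import Callable, Dict, Tuple


def make_combiner(ask_model: Callable[[str, str], str]) -> Callable[[str, str], str]:
    """Wrap a model call so each unordered pair of concepts is only asked once."""
    cache: Dict[Tuple[str, ...], str] = {}

    def combine(a: str, b: str) -> str:
        # Sort so that "Fire" + "Water" and "Water" + "Fire" share one cache entry.
        key = tuple(sorted((a, b)))
        if key not in cache:
            cache[key] = ask_model(*key)
        return cache[key]

    return combine


# Hypothetical stand-in for the LLM call (assumption, not a real API).
def fake_model(a: str, b: str) -> str:
    return {("Fire", "Water"): "Steam"}.get((a, b), f"{a}-{b}")


combine = make_combiner(fake_model)
print(combine("Water", "Fire"))
```

Caching each pair is one plausible way a game like this could stay cheap at scale, since popular combinations would only hit the model once.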

All right folks, I can go on and on, but truly, listen to the whole episode, it really was a great one, and stay tuned for the special Sunday deep dive episode with the folks from Lilac, featuring our conversation with Eugene about RWKV.

If you scrolled all the way until here, send me the 🗝️ emoji somewhere in DM so I'll know that there's at least one person who read this through, leave a comment and tell 1 friend about ThursdAI! 🫡

Full transcript of Feb 1

[00:00:00] Alex Volkov: Happy Thursday,

[00:00:03] Intro and housekeeping

[00:00:03] Alex Volkov: everyone. This is Alex Volkov from Weights & Biases. And I bring you February 1st, ThursdAI.

[00:00:29] Alex Volkov: Today we had an incredible show, incredible live recording, and we overshot by 30 minutes, we usually set for two hours, and we had just a bunch of breaking news and surprises and authors from different places. It was all very exciting. And as always. I would like to bring you the recap of that show that I usually do during the live recording at the end.

[00:00:53] Alex Volkov: I'm going to bring you the podcast listeners here in the beginning, specifically because this was a long show. You'd be able to choose which of the things that we talk about you actually liked. And if you listen to this on the Apple podcast app or other podcasts that support chapters, you'd be able actually to swipe.

[00:01:14] Alex Volkov: down and jump to the chapter that you want.

[00:01:25] Alex Volkov: Some additional, let's say housekeeping here is that I've been listening to other successful podcasts. In an effort to make the show better for you, and I would love to know what works, what doesn't so definitely feel free to leave a comment on the sub stack as a reply, if you're subscribed to that, and if not, just hit me up on Twitter, I love feedback, I want to improve this as much as possible, and the recap is one result of this, I would love to know what works, what doesn't work, please send feedback.

[00:01:56] Alex Volkov: And if you don't have any feedback, and your only feedback is, hey, this is great, I love it, like many of you have been telling me, the best way to show your appreciation is to actually give us some upvotes and some stars on Apple Podcasts. This actually really helps. This is how the internet works, apparently.

[00:02:14] Alex Volkov: And yeah, would love to see our podcast grow. I will say that recently , ThursdAI broke the top 50 in the US tech news category for Apple podcast. And I think we'll end up like number 23 at some point. So this obviously fluctuates from week to week. But I was very.

[00:02:33] Alex Volkov: Surprised, Honored, Humbled, Excited, all of these things, just to see the tech news as a category. We're breaking not only the top 50, but the top Let's say 30. I definitely thought that our content is more niche, but it looks like more people are enjoying AI. So with that help us get more eyes on this.

[00:02:51] Alex Volkov: I know that many folks learn through ThursdAI, which is awesome. And I'm now going to put the music up again and give you the recap. Again, this recap was recorded at the end of the conversation where I knew everything we actually talked about. And then this will slide to a conversation. We had quite an incredible show.

[00:03:08] Alex Volkov: So there we go.

[00:03:13] TL;DR and Recap

[00:03:13] Alex Volkov: Alright, recap time. So today is February 1st. And here's a recap for ThursdAI, February 1st, everything we've talked about. It's been a whole month of 2024, and we're covering just the week. And we talked about an incredible amount of stuff today.

[00:03:28] Alex Volkov: So in the area of open source, we covered Code Llama 70B that Meta released and gave us. It achieves 67.8 percent on the HumanEval evaluation. It's a coding model. We got three models. We've got the base one, instruct one that sometimes refuses to write the code that you want.

[00:03:46] Alex Volkov: And also the Python specific one and a bunch of fine tunes already in place. So for example, the SQL fine tune already beats GPT 4 at text to SQL generation, which is super cool. I think it's already up for a demo on HuggingChat and Together and other places. Really the industry adopted the Code Llama.

[00:04:05] Alex Volkov: It released with the same license as well, so it's commercially viable. Incredible release for Meta, and we're just getting started with the Finetunes. Speaking of Finetunes and speaking of different things to use it for, Together AI added function calling for open models. like Mistral, Mixtral, and Code Llama.

[00:04:22] Alex Volkov: So like a day after Code Llama was released, now you're able to get it in function calling mode and JSON mode, thanks to Together. And Together is generally awesome, but this specific thing is, I wanted to shout it out. We then, we talked about this ominous Miqu model that performs very well. And folks were thinking that maybe this is a leak.

[00:04:42] Alex Volkov: And then we covered that Mistral's CEO, Arthur Mensch, confirmed this is an old version, so this is indeed a leak, it leaked in the quantized version, and it's up now on Hugging Face. Leaks are not cool, and the way that Mistral handled this we think, I personally think, is like super cool. Kudos to them, they confirmed that this is like an old version of something they trained.

[00:05:01] Alex Volkov: They also confirmed that this is a continued training of a Llama 2 model, so not a full pre-train like Mistral 7B, for example. There were some speculations about this. There were also some speculations about Mistral Medium: is it an MoE or not an MoE? Is it a mixture of experts or not? This potentially confirms that it's not, although we don't know fully whether or not this is Mistral Medium.

[00:05:22] Alex Volkov: We just know that it answers like one and performs and benches like one. Pretty cool to see the folks from Mistral. Sad that it happened to them, but pretty cool to see how they reacted to this. We covered, and we had the great, awesome honor to have Eugene here, PicoCreator. We covered RWKV's new non transformer based, RWKV based Eagle 7B model.

[00:05:45] Alex Volkov: And we did a little deep dive with PicoCreator later in the show. about what RWKV means and what quadratic attention, what problems it comes with, and what the future holds. It was a great conversation. RWKV is part of the Linux Foundation, so committed to open source. Like Eugene said, they threw away the keys and it will be always open source.

[00:06:05] Alex Volkov: A really great effort and hopefully to see more from there. If that interests you, definitely listen to the next, to the second hour. Then we talked about embeddings and we had quite a few almost, AKA breaking news stuff about embeddings because BAAI, if you remember the BGE embedding models we've talked about on the MTEB leaderboard, BGE is I don't honestly remember the acronym.

[00:06:30] Alex Volkov: They released the M3 version of their BGE embeddings. And they have been on the top of the leaderboard for embeddings for a while. Now they released a multilingual embedding update. So they support more than a hundred languages, which is incredibly important because In the pursuit of open source embeddings, many people turn to OpenAI just because they support more languages.

[00:06:50] Alex Volkov: And most of the up and coming embedding models in open source, they only support like English or English and Spanish or something like this. Or maybe English and Chinese. That's a very popular pair. But now BGE supports more than 100 languages. They also support 8K context. And they're also multifunctional embeddings.

[00:07:07] Alex Volkov: Whatever that means, we'll have to read the technical report, but it's going to be in the show notes. 8K context is also very important. On the topic of embeddings, a company called Nomic that we've mentioned before with a product called Atlas. Nomic released their embeddings, and not only did they release their embeddings, they committed to fully open source, where they released the training data as well, they released a reproducible way of getting those embeddings, and they claim that they beat OpenAI's previous Ada models.

[00:07:35] Alex Volkov: And the fairly new ish OpenAI text embedding models as well, at least the small ones. So OpenAI just upgraded their embedding endpoints as well. So a lot of stuff is heating up in the embedding area, which we understand why it's important, because many people do RAG and Retrieval Augmented Generation, and they need embeddings, but also for other purposes as well.

[00:07:55] Alex Volkov: So very interesting embedding day or week. We also had the great pleasure to also have breaking news because as we were starting the space, I got maybe seven DMs from different people, including one of the authors for a model called Olmo from the Allen Institute or AI2, Allen Institute for AI.

[00:08:14] Alex Volkov: So Nathan Lambert joined us briefly to talk about Olmo. How they're committed to understanding and releasing as much as possible in the open source. So this model is not, it's beating Llama 7B, it's beating other places. It's not, it's running, I think, behind Mistral a little bit. And Nathan attributed this to just the amount of compute and the amount of data that they're throwing at it.

[00:08:33] Alex Volkov: However, Olmo from Allen Institute of AI released In everything open source, as much as possible, Nathan highlighted how much of it is released. And if there's something that didn't release and you want to release from them, like I asked for the Weights and Biases logs and he said, okay we'll look into this.

[00:08:50] Alex Volkov: which they used for this model, which is awesome. They release like full checkpoints every 500 steps. Code to train, data set, like everything. Incredible effort in learning and building these models. And so check out Olmo7B and the Allen Institute of AI. Nathan is also Very incredibly smart AI researcher and very worth a follow

[00:09:09] Alex Volkov: he writes a very popular blog called Interconnects. I will recommend this on our Substack as well. So great conversation with Nathan. I did not expect him to jump in, but hopefully he's now a participant in the spaces. Then we moved to discuss datasets. We had a new segment this week, specifically because there were a few very interesting updates.

[00:09:29] Alex Volkov: So first of all, the Capybara dataset from LDJ, who joins the spaces and co hosts often, is now DPO'd by folks from Argilla. So like it turns into a direct preference optimization version of the dataset, which we saw gives very good performance on models if we train them in the DPO way. And this has been released by the folks from Argilla, and Argilla is the open source worth mentioning because we already saw multiple stuff that they do to improve data sets and then retrain them on already existing finetunes to make them better.

[00:10:01] Alex Volkov: So this is in this case also what they did. There's a model now that beats, I think, Neural Hermes by a few points, just because this data set was like higher quality and DPO as well. And then we moved into the data set section. We moved towards talking about the Hermes data set that Teknium has been painstakingly working on.

[00:10:21] Alex Volkov: It's a million records data set that Nous Research and Teknium have used to release anything from a 7B model, there was a Mistral 7B Hermes, up to like a 34B finetune on top of Yi that was very well performing. And that dataset is now out. It's possible for you to use this. It includes a bunch of other datasets in it.

[00:10:41] Alex Volkov: So we've talked about that. And the way to visualize this data set, we had a long conversation with folks from Lilac, so Nikhil and Daniel from Lilac, we talked about what segmentation means and how these data sets are getting visualized and how it's very difficult to actually read millions of lines of text, nobody can do this, or actually segment them on their computer and understand what it includes, just imagining like a million lines of million like records of text.

[00:11:09] Alex Volkov: So Lilac, we went into a conversation about how to cluster that data set, how to understand what's in it, how to search it, how to Segment and see the segments and how to figure out how much of the data set more or less of something you want. A super cool conversation, very worth your time. It's probably going to get released as a separate episode because it's a deep dive.

[00:11:30] Alex Volkov: Incredible and definitely give those folks a follow. We then had the deep dive into LLaVA 1.6 from the folks, Haotian Liu and some other folks behind LLaVA. It's an open source VLM, Vision Language Model, and the backbone of it is the Hermes 34 billion parameter model from Nous Research.

[00:11:52] Alex Volkov: That's the kind of textual brain. This LLaVA release is the best open source VLM that we currently have. It includes support for high resolution. It includes incredible OCR for open source. Like really, I played around with this pasted text. It's quite convincingly incredible. It's achieved state of the art in open source models and beats the previous attempts as well.

[00:12:14] Alex Volkov: And we've talked about why and how it does that. And it's like very cool as well. We also, Briefly mentioned that in the voice and audio category, there is now a way to run Whisper directly on iOS devices. The folks from ArgMax opened, ArgMax released something called WhisperKit, which is a way for you to run Whisper in a very optimized way.

[00:12:34] Alex Volkov: We also mentioned Parakeet from NVIDIA that's beating on performance, and we then mentioned that Bard, with Gemini Pro, on the LMSYS Arena, which is the way for us to know which models are good based on human preference, is now the second best LLM in the world, which was a surprise to many of us, and we talked about potentially why, and definitely worth checking out that conversation. And also LDJ hinted about something that's upcoming on the LMSYS Arena that could be super cool, a very mysterious deluxe chat something that achieves potentially state of the art performance on open source.

[00:13:13] Alex Volkov: We'll keep you in the loop after we learn more details as well. And, I covered the OpenAI new mention feature, where you can add mention and share context between different GPTs. If you talk with my GPT, you can suddenly summon another one, and that other one will have the context of your conversation in it, which is super cool.

[00:13:33] Alex Volkov: And one thing I didn't mention, and I really wanted to mention, is this unique use case for Llama, which is a game called Infinite Craft from Neal. This is the URL, neal.fun/infinite-craft. It's really addicting, I didn't want to mention this so far because literally your Thursday will not be productive if you start playing this game.

[00:13:49] Alex Volkov: But it's a game where you combine concepts, you just drag names on top, you start with Earth, Wind, Something, and Fire, and then you drag stuff on top of each other, and then they use the LLM to combine these concepts into one concept, and you keep going all the way up until you get Homer Simpson, for example, or The Avengers, or whatever.

[00:14:04] Alex Volkov: It's really a fun way to check out It's something that only an LLM can build. And I think this is most of what we talked about. I think we talked about multiple other stuff, but this is the gist of the conversation


[00:14:18] Alex Volkov: I guess we'll start with open source.

[00:14:36] ## OPEN SOURCE - Code LLama 70B

[00:14:36] Alex Volkov: All right, let's get it started. We have this nice transition here. And then, we will first start talking about Code Llama. Of course, Code Llama. Code Llama is quite the big news from the gods at Meta. Because we've been waiting for something like this. Llama, when it was released, it was not that great on coding necessarily.

[00:15:00] Alex Volkov: And we already had Code Llama before. I think 34B, if I'm not mistaken. And this time, Meta released Code Llama 70B. They kept training this. They gave us a few, they gave us three models, correct? They gave us the instruct model. They gave us the base model and also the Python specific model that is significant in performance.

[00:15:18] Alex Volkov: What I love about the open source area here is how fast everybody started like saying, okay, we will give you Code Llama, because super, super quick, Hugging Face released an instruct version on Hugging Face. It's supported in HuggingChat. I think Perplexity also serves this. Then Together, in like less than a day, decided, hey, we're now putting out Code Llama and it's supporting the function calling stuff that we're going to talk about in a second.

[00:15:44] Alex Volkov: Very impressive. 67.8 on HumanEval, which is not the best that we had in open source, right? So Meta didn't come out and say, hey, this is the state of the art open source coding model.

[00:15:54] Alex Volkov: I think DeepSeek and other areas were even better in code. However, we know that every Meta release is not the final release. We know that every Meta release is coming up to open source and then fine tuning. And so we definitely already saw quite a few fine tunes. Great release from Meta.

[00:16:11] Alex Volkov: Oh, and also, the thing to mention, it has a 16,000 context window, which is great for code, and it's been trained for longer sequences of code as well. Supposedly, hopefully, it will spit out longer outputs and a lot more code. I think that's all I had. Oh no, I also had a thing to mention, that it's already been quantized for MLX, and we've talked about MLX, kind of the Apple built inference engine, I guess, on Apple Silicon, and it has several fine tunes.

[00:16:40] Alex Volkov: And the fine tune that I wanted to highlight is SQL Coder. Because they finetuned like super quick on Code Llama, and then they beat GPT 4 on a bunch of like specific SQL queries. And SQL Coder is now probably the top SQL, text to SQL model in the world. Anything else on Code Llama folks here on stage that want to chat?

[00:17:01] Alex Volkov: Nisten, go ahead.

[00:17:03] Nisten Tahiraj: Yeah, there are a few things here. So it's true that DeepSeek, I think 6.7B and 33B, which is also what Miguel's WhiteRabbitNeo is based off, the cybersecurity LLM. It is true that they do feel better when you use them in comparison to using Code Llama. However, the good thing about having a 70B for doing fine tunes is that larger models do better with longer context lengths, as we've seen before with the YaRN models, the YaRN 7B.

[00:17:37] Nisten Tahiraj: Yeah, they have 128k context length, but it's, there's not enough meat, so to say, in there, in the model for it to be good at those long contexts. And from what I know from other people running stuff in production, and at scale, they would all say, if you want to get reliable function calling you need to go with with a larger model and preferably at at least eight bit.

[00:18:03] Nisten Tahiraj: And because this was one of the few things that they would notice. Now, this is probably going to change with further architectures, but that's just how it is right now. So again, if you're going to use it yourself for looking up code snippets or fixing stuff here and there, you might be better off with one of the smaller ones.

[00:18:22] Nisten Tahiraj: However, overall, I think we'll see better fine tunes and better tools, especially for longer context stuff where you can just dump in the whole code base and then get stuff out of it. Yeah, and my last comment is that they had RLHF, the instruct version, too much to the point where it won't even answer.

[00:18:45] Nisten Tahiraj: It thinks it's unsafe to talk about building stuff on Mars or making, adding buttons to websites.

[00:18:53] Alex Volkov: I saw that. Thank you, Nisten. Yeah, definitely, the bigger the model is it's not only about the evaluations. We keep saying evaluations are broken to an extent, etc. But it's not only about evaluations and how well it performs on different areas. It's definitely the bigger models, we know that they perform better on different simulation tasks as well.

[00:19:09] Alex Volkov: And yeah. Yacine, what's up? Welcome, man. This is your second appearance, I think, here. Do you, have you played with Code Llama? You want to comment on it?

[00:19:16] Yacine: Oh, what's up, everybody? Code Llama, let's go. Has anyone used it for work yet?, I like, probably could happily replace GPT 4 very begrudgingly, and that is like the thing that I do. So when these models come out, I load up the most convenient interface and then I try it.

[00:19:38] Yacine: And then, if I, and then I measure the performance based on how long it takes me to go back to GPT 4, and I'm pretty sure that I could be very productive with the internet disconnected and having this thing. Running. It's good.

[00:19:56] Alex Volkov: I think on that topic, I saw someone, and so this thing this specific thing running is hard on, on your Mac, but it's possible Nisten? If it's quantized it's, on the bigger Silicon Macs, I think it's possible now to run this, even though it's 70B.

[00:20:12] Nisten Tahiraj: Yeah, so you're going to need at least 40 gigs of VRAM to get good performance. Because once you go to 4 bit and below, it really starts to degrade. But, yeah, I've used the other 70B as well, daily for the last few days. Which is what we're going to talk about. But yeah, oh, one more thing in open source, not as much related to this, but the people that built Atom and Electron also built this other IDE, an integrated development environment, called Zed, and they just open sourced it. And Zed, they are about to push some pull requests to allow other assistants on it, and I was trying myself, but I'm not a Rust dev. And so pretty soon you will have other IDEs that are very fast and that you can just connect, you can have a Cursor-like experience, like the Cursor app, that you can just use at home, because otherwise you're stuck with either Vim or Emacs if you want to use this as an integrated development environment, if you want to use the code. But yeah, just shout out to Zed for open

[00:21:34] Alex Volkov: Definitely shout out

[00:21:35] LDJ: their stuff,

[00:21:35] Alex Volkov: and where it connects with what Yacine just said is that many of us, like, expect at some point to be fully offline and running these tools like Copilot. I saw some stuff, and I think I mentioned this before on ThursdAI, where you could fake a Copilot endpoint with a proxy: you go into settings in VS Code and say, hey, my Copilot actually sits at this URL, and then serve local models and have Copilot, but it talks to Code Llama.

[00:21:59] Alex Volkov: I haven't tried this yet, but Yacine, I agree with you. The metric of how fast you go back to GPT 4 is a very good metric, especially because metrics are a little broken. So let's move forward from Code Llama. Yeah, go ahead.

[00:22:11] LDJ: There's one more way for those running locally, on either Windows, Mac, or Linux: just go in your hosts file and point the OpenAI domain to your localhost, or to whatever home 192.168 server you have, and then it will route to that. So there's an even easier way; then you don't have to change the configs of other models.

[00:22:33] LDJ: But yeah we're almost there. We're almost, the great decoupling is almost here,

[00:22:38] Alex Volkov: And as always.

[00:22:39] LDJ: much is here.

[00:22:40] Alex Volkov: Shout out to the GOATs at Meta AI for giving us more and more open source and cool stuff.
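
As a rough check on the memory figure mentioned above for running a quantized 70B model, here is a back-of-the-envelope sketch. It is an illustration under stated assumptions, not a measurement: it counts weights only and assumes a flat 16 or 4 bits per parameter. Real quantized files and runtime overhead (KV cache, activations) add more on top, which is one reason a figure around 40 GB is plausible.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


# Assumption: a round 70 billion parameters, uniform precision per weight.
params_70b = 70e9
fp16_gb = weight_memory_gb(params_70b, 16)  # half-precision weights
int4_gb = weight_memory_gb(params_70b, 4)   # 4-bit quantized weights

print(f"16-bit weights: ~{fp16_gb:.0f} GB")
print(f"4-bit weights:  ~{int4_gb:.0f} GB")
```

The 4-bit estimate lands a little under the 40 GB mentioned in the conversation, leaving some headroom for everything that is not weights.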

[00:22:45] Together AI releases function calling & JSON mode for Mistral, Mixtral & Code LLama

[00:22:45] Alex Volkov: I think the thing to mention here in addition is the folks from Together.ai. Together, we've mentioned them before: they serve models, and they also let you fine-tune models.

[00:22:54] Alex Volkov: We did the hackathon together with them, with Weights & Biases and Together. They super quickly added Code Llama, but also the thing to call out for Together specifically, because there's multiple folks serving free models, definitely fast: they just added function calling and JSON mode. And I think it's important for the AI devs in the crowd that we're getting so spoiled by OpenAI.

[00:23:15] Alex Volkov: Function calling was super cool, and JSON mode. I don't think anybody came close to recreating the Assistants API in open source, but I definitely know that llama.cpp and LM Studio, and I think some others, expose a local server that behaves like the OpenAI API, so you can just quickly swap the OpenAI endpoint in the calls.
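
To make the "swap the endpoint" idea concrete, here is a minimal sketch that builds the same OpenAI-style chat request against two different base URLs. The `/chat/completions` path and the `model`/`messages` payload follow the OpenAI API shape; the local address, port, and model name are hypothetical and depend on which local server you run. The code only constructs the requests and does not send them.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, user_message: str,
                       api_key: str = "placeholder-key") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


prompt = "Write a function that reverses a string."
hosted = build_chat_request("https://api.openai.com/v1", "gpt-4", prompt)
# Assumption: a local OpenAI-compatible server at this address; the port
# and model name are hypothetical and depend on your setup.
local = build_chat_request("http://localhost:8080/v1", "local-model", prompt)

print(hosted.full_url)
print(local.full_url)
# To actually send either request: urllib.request.urlopen(local)
```

Only the base URL and model name change between the two calls, which is why client code written against the hosted API can usually be pointed at a compatible local server with a one-line config change.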

[00:23:38] Alex Volkov: So definitely Together is doing that, but Together is going further and giving us function calling, which was missing. We talked about this a little bit with Jason from the Instructor library last time. So that's super cool, and shout out to Together for this. Hopefully the folks from Together are gonna keep releasing interesting things like they did before, and they do quite a lot for open source as well.

[00:23:57] Alex Volkov: Together also has the folks behind the Hyena architecture, if you guys remember, we talked about Hyena. And the GOAT behind FlashAttention, Tri Dao, is, I think, Chief Scientist at Together. So definitely worth checking out if you're planning to work with these open models and want some more stuff.
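
For readers who have not used function calling, the sketch below shows the general idea in the OpenAI-style format that compatible servers tend to mimic: you describe a tool with a JSON schema, the model replies with a tool name plus JSON-encoded arguments, and your code dispatches the call. Everything specific here is an assumption for illustration: `get_weather` is a made-up function, and `example_call` is hand-written rather than real model output. Check your provider's documentation for the exact request and response fields.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical local function the model is allowed to call."""
    return f"It is sunny in {city}."


# Tool description in the OpenAI-style "tools" format (JSON schema parameters).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

REGISTRY = {"get_weather": get_weather}


def dispatch(tool_call: dict) -> str:
    """Look up the requested function and call it with the decoded arguments."""
    function = REGISTRY[tool_call["function"]["name"]]
    arguments = json.loads(tool_call["function"]["arguments"])
    return function(**arguments)


# Hand-written stand-in for what a model might return; not real output.
example_call = {
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
}

result = dispatch(example_call)
print(result)
```

JSON mode is the simpler sibling of this: the model is constrained to emit valid JSON, so the `json.loads` step above does not fail on malformed output.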

[00:24:13] Breaking News - AI2 releases OLMO - end to end open source 7B model

[00:24:13] Alex Volkov: Definitely worth a shout out. So I wanna hit this breaking news button for a second, because we have some breaking news. As I was sent this news just before we started this space, I invited Nathan here to join us. So I'm gonna hit this button and then we're gonna chat with Nathan.

[00:24:39] Alex Volkov: Makes me feel like a full on radio talk show host. I want to say that the breaking news, we got a couple, but definitely the breaking news from Allen Institute. I received it in the form of another magnet link on Nathan's Twitter. So Nathan, welcome to the stage, to the show. This is the first time here.

[00:24:56] Alex Volkov: Please introduce yourself briefly. And then we can chat about the interesting stuff.

[00:25:02] Nathan Lambert: Hey, yeah, thanks for having me. My name is Nathan Lambert. I'm a researcher at the Allen Institute for AI. I also write a somewhat popular ML blog, Interconnects.ai. I don't know how to quantify popular there. But yeah, happy to be here,

[00:25:19] Nathan Lambert: share what we built.

[00:25:20] Alex Volkov: It's great. And just to shout out Interconnects super quick: last, I want to say Sunday, I released the conversation with Maxime Labonne about merging, which some of you who are frequent here probably heard live, but it wasn't released in podcast form.

[00:25:36] Alex Volkov: And in that newsletter I mentioned you, Nathan, because you quote-tweeted something and said, oh, I have to pay attention to this merging thing. And then on Interconnects you released this, like, opus, this essay, this incredible deep dive, way deeper than I would even imagine attempting, about where merging started and where it went.

[00:25:58] Alex Volkov: So definitely, if folks are interested in finding out everything about merging that's possible, and you already read Maxime's kind of essay on Hugging Face, Interconnects is the place to go. So definitely thank you for that. It was a

[00:26:09] Nathan Lambert: Yeah, thanks for the shout

[00:26:11] Alex Volkov: I don't know how long it took you, but definitely for folks who want the deeper stuff, Interconnects is a great place. But this is not the reason why you're here on stage, and this is not the reason why I hit the breaking news button.

[00:26:23] Alex Volkov: Could you talk a bit about why you're dropping magnet links on Twitter as though you're from Mistral?

[00:26:29] Nathan Lambert: Yeah, the magnet links and the word art are purely to increase visibility of this model. The model's available on Hugging Face. It's this Olmo model, which is named after Open Language Model. It's the first model in a series from AI2 to try to really release an actual open source model. There's always so many asterisks on saying ML is open source, but in a practical sense, make it as close to that as you can.

[00:26:56] Nathan Lambert: And at the same time, on state of the art: I think the core lesson that people will get around to later this year is what a state-of-the-art language model means now. You could get into the weeds so much on evaluations for a base model; AI2 did this a lot.

[00:27:15] Nathan Lambert: You can see the paper, blog posts, whatever that lists the evaluations that we use, but A, it's really hard to evaluate base models. So like the base leaderboard is partial coverage there. And then B, everyone knows scaling laws are about the compute needed for parameters and data. So then we have to start evaluating models on a per token basis, as well as just their raw performance.

[00:27:37] Nathan Lambert: So that's why, if you look at this, we compare to Llama. And among the models where we know the token count, Olmo 7B is equivalently state of the art. There's also a 1B model, and training a bigger model is underway. And the big thing that everyone's like is, oh, what about Mistral? And the rumors are that Mistral is trained on way more tokens, but we don't know.

[00:27:57] Nathan Lambert: And it's like, we beat Llama by training on 20 percent more tokens, but Mistral might be trained on 300 percent more tokens. So we don't really know, but it's in the ballpark of a model that's Llama 2 caliber. People will be able to use this, and we're excited to see what research people do from there.

[00:28:14] Alex Volkov: That's awesome. And could you talk about, you mentioned this briefly, the completeness of the end to end openness? I think we've heard folks talk about this multiple times: what's open, what's considered open source. If somebody drops just a weights file, is that open source?

[00:28:30] Alex Volkov: Do they open source the training parameters? Do they open source the data set? Do they open source the different things? From our perspective at Weights & Biases, do they show you the loss curve as well? Could you talk a little bit about that approach and what you guys want to show with Olmo?

[00:28:46] Nathan Lambert: Yeah, so the one that normally gets people stuck is releasing the actual exact data that the model is trained on, and then the second one that gets people stuck is releasing the training code. Essentially, Olmo has everything. I could try to list through them, but there are so many things to say that I honestly always forget one or two.

[00:29:03] Nathan Lambert: The data is released exactly, the model is released, and the 7B model we trained on multiple types of hardware, so there's an NVIDIA version and an AMD version. The checkpoints are released for every thousand steps. There's 500 checkpoints per model on Hugging Face. The training code is released, the evaluation code is released. There's, like, a not-eval-washing paper released. I don't know what else you want. Some things like a demo and instruction models are coming soon, but if there's something that you want, we are either trying to release it or already have. Some of the things, like Weights & Biases logs, are just tricky to get through permissions and stuff.

[00:29:45] Nathan Lambert: As in, like, how do you actually,

[00:29:46] Nathan Lambert: I didn't even realize it was a Weights & Biases podcast, but we're just trying to get these last things out, and if we have issues with that, I guess I know where I can ask. I didn't even think about it on our to-do list.

[00:29:56] Alex Volkov: Absolutely. This is ThursdAI, coming to you from Weights & Biases. We definitely would love to see the tracking and everything. One shout out I do want to give is to Wing here, Wing from Axolotl, releasing reports full of different charts and everything. Nathan, so this is great.

[00:30:11] Alex Volkov: And, you mentioned briefly you're training a bigger model. Can you give us a little hint about this or are we going to have to just sit and wait?

[00:30:16] Nathan Lambert: All

[00:30:19] Nathan Lambert: we are. It's in the official communications, and it's been in official communications for a while. It takes a little bit more research wrangling to train a 70B model, but 65 or 70 is the plan. There's a lot of wait and see there. I can't really tease performance, because you have to go out and do it, but we're gonna go do that

[00:30:40] Nathan Lambert: and get back to you

[00:30:41] Alex Volkov: Awesome. When you do, please feel welcome to come back on the stage here on ThursdAI. And definitely a shout out for releasing as much as possible. And like you said, if there's anything you guys didn't release, folks in the community may want to hit up Nathan and AI2, or Allen Institute for AI. I guess that's why it's AI2, because it's AI.

[00:31:04] Alex Volkov: Did I get it right?

[00:31:07] Alex Volkov: Yeah,

[00:31:07] Nathan Lambert: Allen Institute is A-I, and then "for AI" is A-I too. Yeah. It shouldn't be too hard. It's easy to find.

[00:31:15] Alex Volkov: Yeah, easy to find, follow Nathan, follow AI2 on Twitter. Thank you for releasing everything as much as possible, including training code. Definitely support our community. And then we'll mention this in the show notes as well for folks who are listening. Nathan, feel free to stick around on stage as we talk about some stuff that you talk about.

[00:31:30] RWKV - Eagle 7B - a non transformer based LLM

[00:31:30] Alex Volkov: In additional semi-breaking news, and in the tradition of talking about the things that we have the authors for, I wanna chat about RWKV, the Eagle 7B, a little bit.

[00:31:42] Alex Volkov: And I wanna welcome... where is my friend Eugene? He was on stage just before. Let's see. Oh, looks like maybe we lost him. All right. So let me briefly mention RWKV, the model.

[00:31:56] Alex Volkov: So there's the transformers that we all know and love, and many of the models that we talk about are based on the transformer architecture. And there's quite a few architectures that are trying to break the quadratic attention problem with transformers. RWKV is one of them, based on, I want to say, RNNs.

[00:32:16] Alex Volkov: I see Luigi, you want to do this intro? I think you introduced me to

[00:32:19] LDJ: Yeah, sure. I think you're actually giving a decent explanation, but yeah, I would mostly say it tries to solve the quadratic scaling problem: every time you double the context length, usually in transformers, you end up doing something like 4x-ing, multiplying by 4, the amount of memory you're using and the amount of slowdown you're causing.

[00:32:44] LDJ: And that's what things like Mamba and Hyena and RetNet and RWKV are all trying to solve in different ways. And the RWKV originally started actually from being inspired from a paper by Apple back in, I think, 2020 or 2021 called Attention Free Transformer. And since then, this guy named BlinkDL, sorry, a guy named BlinkDL has just been working on this with a bunch of other people and upgrading and changing and modifying things for years now, all the way up to this point.

[00:33:16] LDJ: Now they're on RWKV5.
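
The quadratic scaling point above can be illustrated with a few lines of arithmetic. This is a simplified sketch, assuming naive attention in which every token scores against every other token, so the score matrix for one head has `n * n` entries; it ignores heads, layers, and kernel-level optimizations.

```python
def attention_score_entries(context_length: int) -> int:
    """Entries in one naive attention score matrix (every token vs. every token)."""
    return context_length * context_length


# Illustrative context lengths; each one doubles the previous.
for n in (2048, 4096, 8192):
    print(f"context {n:>5}: {attention_score_entries(n):>12,} score entries")

ratio = attention_score_entries(4096) / attention_score_entries(2048)
print(f"doubling the context multiplies the entries by {ratio:.0f}x")
```

Recurrent-style architectures such as RWKV aim to keep per-token cost roughly constant instead, which is where the appeal for very long contexts comes from.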

[00:33:19] Alex Volkov: So this latest release from RWKV is called Eagle. It's a 7B model and apparently it's quite performant. There's also a demo. I played with it and I gotta say... Nisten, go ahead. I think you have a comment as well.

[00:33:37] LDJ: Yeah, I think it's important to keep in mind it's not trained for as much as something like Mistral probably was. I think this one's only trained for one trillion tokens, so I think the most fair comparison would actually be Llama 1 7B. And then, once it gets to 2 trillion tokens, it would be fair to compare against Llama 2 7B, et cetera.

[00:33:59] LDJ: So it's hard to exactly compare, but it looks like it probably scales well.

[00:34:05] LDJ: I used the previous version a lot, and it did feel faster. Also, I want to say, RWKV has been part of the Linux Foundation for a few months now. So again, just because it doesn't feel or doesn't reason as well as a Mistral model, which has yet to be proven, does not mean that this is not going to be useful.

[00:34:29] LDJ: Because it scales so well at larger contexts, it might actually perform better there, where, for example, you just dump in a whole book or a whole code base, or just dump in like 300,000 words or whatever. So it has a lot of potential in that regard for those uses. I have yet to test how it runs on CPU, so that might be useful there as well.

[00:34:56] LDJ: But again, it's super interesting because it's not really the usual transformer based one. And yeah, it has been around for a while. And it goes to say that the transformers are not a dead end. And we're going to see a lot more improvements coming just from architecture changes.

[00:35:17] Alex Volkov: So we definitely want more improvements from architecture changes. Definitely. We've talked about the Hyena architecture from Together. We've talked about Mamba, the state space models. I think I mentioned this before, but I got a brief chance to meet and take a picture with Yann LeCun in Europe.

[00:35:35] Alex Volkov: And the only thing that I could come up with, I literally had a minute there, was to ask him about state space models. And the "meh" that Yann LeCun gave me was really funny, like a very specific "meh" about state space models. And then he said something about "we're working", and there have been continuous attempts at changing transformers.

[00:35:53] Alex Volkov: Now, we're still not there in terms of completely being able to replace them, but it's a very interesting area of research, and some combination, probably, between transformer-like attention-based stuff and the infinite context lengths of RNNs could be very interesting. And on RWKV, the latest Eagle: I added a tweet here, and I'll add it to the show notes, from Yam, who's dug into this more deeply, so you can follow that thread as well. The continued training will hopefully show promise for RWKV, and hopefully at some point we'll get the folks behind RWKV.

[00:36:31] Alex Volkov: It looks like we had some connectivity issues for this time. All right moving on here moving on to

[00:36:38] Miqu - a mistral large model leak confirmed by Mistral CEO

[00:36:38] Alex Volkov: I guess we should talk about Miqu. Let's talk about Miqu, folks, so

[00:36:47] Alex Volkov: I don't know, who wants to start? Nisten, do you want to start? LDJ, do you want to start? Just briefly, folks, I don't want to cover this whole space with Miqu, but definitely this next thing happened. Somebody dropped a very performant, quantized version of a model called Miqu on, I guess, Hugging Face? And I think it originated from, probably, 4chan. Everything originates on 4chan, right?

[00:37:10] Alex Volkov: The Waifu Nathan, you called them the Waifu Diffusion Army or something similar, right? Waifu Research Department.

[00:37:17] Nathan Lambert: Waifu Research Department,

[00:37:18] Nathan Lambert: it's a real Hugging Face org. That's where I got that. It has real models and data sets and stuff on it. I didn't make that up. They have 150

[00:37:26] Nathan Lambert: members. It's hilarious.

[00:37:27] Alex Volkov: Oh, I see. Okay. So I thought that you were calling everything that happens in the places that I don't frequent, which is like 4chan and everything, the Waifu Research Department, but it's a real org. Good to know. So shout out to Waifu Research Department. But also, as some of these things go, especially the leaks and everything, they originate in areas like 4chan, maybe LocalLLaMA on Reddit, and folks started talking about Miqu,

[00:37:48] Alex Volkov: M-I-Q-U, which is a model where it wasn't clear where it came from, but it performed very well, I want to say very well. And folks started thinking, hey, potentially this is a leak from one of the bigger companies. And I don't know if I'm doing the whole thread justice, but I think at some point people hinted that this could be Mistral, or Mistral based, or maybe Llama based.

[00:38:11] Alex Volkov: So I want to hear from two folks, but in an orderly fashion, because Nisten, I followed your threads on this, and then LDJ, I followed some of the stuff that you mentioned on this. Where are we at the end of this? Because I definitely want to mention Arthur's involvement in announcing the leak, but walk me through how this model performs, if you downloaded it, Nisten; I think you did.

[00:38:31] Alex Volkov: Let's talk about this.

[00:38:33] LDJ: I downloaded it before I knew what it was, to check, and the first check came out that this is exactly like Llama 70B: the architecture, the shapes, the weights. And we'd heard earlier rumors that Mistral Medium was a 14B eight-expert model, which led me to dismiss this. Also, I'd done the prompt template wrong, so I wasn't getting the exact results.

[00:39:03] LDJ: But

[00:39:04] Alex Volkov: I think

[00:39:04] LDJ: now

[00:39:04] Alex Volkov: got fact checked by, by, by the GOAT himself, Georgi Gerganov. Was that?

[00:39:09] LDJ: By Georgi

[00:39:10] LDJ: On having misconfigured

[00:39:12] Alex Volkov: no, Nisten, you used my thing wrong. Yeah.

[00:39:15] LDJ: So he had put the prompt template wrong,

[00:39:18] Alex Volkov: That was great.

[00:39:18] LDJ: just pretty epic. That was an epic mistake. Yeah, anyway, people ran benchmarks. Actually, Teknium ran the benchmarks. And it performs very close to the Mistral Medium API.

[00:39:31] LDJ: And now, other things that people have noticed: it is a Q5_K_M, so it's 5 bit quantized, so that means it's going to be maybe a percent or two off on the benchmarks, so it could be very close. But it looks like either this is an early version of Mistral Medium that got leaked and was sent to customers, or it could still be that Mistral Medium is an MoE, just a much more efficient one, and this was trained on the same data.

[00:40:11] Alex Volkov: So just to be clear, we didn't get an MoE model out of this, right? So this is a quantized

[00:40:15] LDJ: it's a Llama 70B, exactly. It's exactly like every other Llama 70B finetune, in terms of the files,

[00:40:25] Alex Volkov: So we're still pre Arthur's announcement. Luigi, go ahead. You have a hands up, pick it up from here.

[00:40:31] LDJ: Yeah, so something that I feel like is interesting in terms of the Mistral Medium API, if we want to speculate whether it's MoE or whether it's based on an existing architecture or whatever: I think there is actually evidence pointing towards it being Llama based, or continued pre-training of an existing Llama model, like maybe Llama 70B. Because if you look at the tokenizer for Mistral and the Mixtral 8x7B model, that's the Mistral tokenizer, and if they wanted to keep making their own

[00:41:07] LDJ: models completely from scratch, they probably would use that same tokenizer. But you can actually see that the way the Mistral Medium model tokenizes things is actually closer to how the Llama 7B tokenizer tokenizes things. And if you just do continued pre-training instead of training completely from scratch, that's a situation where you'd keep the original tokenizer.

[00:41:32] LDJ: I'm not sure why they would use that tokenizer for Mistral Medium if it was trained completely from scratch on their own mixture of experts architecture.

[00:41:39] Alex Volkov: So I just want to chime in here real quick. We've got this model, it performed really well. It had good evaluations. Some folks kept saying this could be this, it could be that. We had speculation before that, because the guys behind Mistral first dropped the 7B model, then the mixture of experts that we've talked about

[00:41:59] Alex Volkov: ad nauseam, I think, at this point, a very good open source model. They also released Mistral Medium in their API without dropping it as open source for everybody else. And Mistral Medium was performing significantly well on the LMSYS arena as well. There was speculation that it's also a mixture of experts, but a bigger one.

[00:42:16] Alex Volkov: And so this didn't fit the narrative that what we got is a leak of the bigger mixture of experts, because this was one model that's similar to Llama 70B. But then we got official news from Arthur Mensch, the CEO of Mistral. And I gotta shout out that everything they do, they do with style.

[00:42:35] Alex Volkov: I don't know where they, like learned this method, but if you remember the Magnet Link, if you remember the logo that's WordArt, I don't know what, like, where in France they teach you how to be very stylish about everything, but definitely they handled this potential leak news with that flair as well.

[00:42:54] Alex Volkov: So I really want to shout that out. Arthur came out and said that, hey, indeed, one of the earlier clients, or I guess one of our partners in the early stages of the company, one of their overzealous employees leaked the weights file of an earlier model that we trained super quick on Llama because we were running to get started.

[00:43:20] Alex Volkov: And this is around the language that he used. He didn't say Mistral Medium. So when I posted my stuff, to the folks who found out through me, I apologize. I said he confirmed Mistral Medium. He did not confirm Mistral Medium. He did confirm that this is a leak of one of the big models from Mistral.

[00:43:34] Alex Volkov: He also changed his tweet. He edited it; I went and looked and tried to figure out what the change was. He said earlier version, and then he changed it to old version. So I don't know if that means anything. However, we don't have a full confirmation from them that this is indeed Mistral Medium.

[00:43:52] Alex Volkov: We do have a confirmation that, unlike Mistral 7B, this big model was not trained from scratch. And he also said they moved on from there. So we still don't know whether Mistral Medium is a full creation of their own. But there's zero doubt in my mind that the Mistral folks are capable of doing a Mistral Medium from scratch.

[00:44:16] Alex Volkov: Specifically, there's zero doubt in my mind because I went and looked, and it's probably known, but for folks who don't know this: many of the folks who work at Mistral worked on Llama 1. I think Guillaume Lample, I think Chief Scientist, and Timothée Lacroix, I think. There's a few folks who now work at Mistral who literally worked on Llama. In my head, they're continuing some of the work they did before they switched sides, and I think it's super cool the way they handled it.

[00:44:46] Alex Volkov: In addition to this, the CEO of Mistral, Arthur Mensch, went on Hugging Face to the Miqu model and opened a pull request to the README.md file and just dumped a huge word art of the letter M for attribution, and just said, hey, how about some attribution, or something like this. Which was the most epic way to acknowledge that this leak is actually coming from their lab.

[00:45:12] Alex Volkov: And I really want to shout out that everything they do, they do with flair. And this is a leak, so it's not cool to leak the stuff. I know we all want it, but with, let's say, an LLM shop that releases a bunch of open source like Mistral does, we're gonna get some stuff from them anyway at some point, and it's way better to get it unquantized and properly attributed so we'd be able to use it, I think.

[00:45:34] Alex Volkov: Yeah, so I found the tweet and added it to the space, thanks Luigi. The literal words from Arthur, from the CEO: to quickly start working with a few selected customers, we retrained this model from Llama 2 the minute we got access to our entire cluster; the pre-training finished on the day of the Mistral 7B release.

[00:45:54] Alex Volkov: So I will say this: it's quite incredible, the amount of progress the folks from Mistral were able to make, given that they raised the round during the summer and, I think, they started less than a year ago or so, maybe just a little bit more. Rounds are usually PR announcements and not necessarily when they closed, but it's definitely a very short time for them to rise this quickly to the top of the leaderboards.

[00:46:17] Alex Volkov: A shout out to the Mistral team, it sucks that it happened. Hopefully, this will happen less with continued models. I think it's time for us to move on because we have some more stuff to, to chat about. And I want to talk about, I want to talk about embeddings.

[00:46:33] Alex Volkov: Let me just do a brief reset and we'll be able to talk about some embedding stuff and dataset stuff.

[00:46:49] Alex Volkov: If you're just tuning in to the live recording, you're on ThursdAI, the space that tries to, and sometimes achieves this goal, bring you everything that happened in the world of AI, open source and LLMs, and definitely big company APIs and vision. So everything interesting that happened in the world of AI that is worth chatting about, we're trying to bring you and chat about.

[00:47:12] BAAI releases BGE-M3 - a new 100 language enabled embedding model

[00:47:12] Alex Volkov: And this is the second hour. We're moving into the second hour, and so far we've only talked about open source LLMs. It looks like this is the main focus of the space usually when there's a bunch of stuff, but we're still on this and we have to move forward towards embedding models. And so I want to just mention two things that are very interesting.

[00:47:29] Alex Volkov: For the folks who follow the embedding space: if you remember, we've talked about something called E5 that I think Microsoft trained. They trained the embedding model on top of Mistral, and it outperformed on the MTEB leaderboard, the biggest leaderboard in the embedding space.

[00:47:48] Alex Volkov: And since then, we've had multiple folks that are interested in embeddings. OpenAI released two new embeddings to replace their ada, which most people used: two new embeddings, embedding small and embedding large. Everybody focuses on this MTEB score; however, it's fairly clear that this score is not that great, and it's very easy to overfit and show that you're performing well on that leaderboard without actually proving that this wasn't trained as part of your embedding model.

[00:48:17] Alex Volkov: And so these MTEB scores don't usually matter that much, unfortunately. However, this is the only thing that we have. And since this space looks to be heating up, we now have two new embedding models that we should talk about. The first one, which I prepared for, is from BAAI, and it's called BGE-M3.

[00:48:40] Alex Volkov: So BGE, for the longest time on that MTEB leaderboard, was the model that was small enough and performant enough to run on your own hardware. And the trade off for embeddings is often how fast they are and whether you can outspeed whatever OpenAI gives you, but also languages.

[00:49:00] Alex Volkov: The one thing that we always talked about that the MTEB leaderboard doesn't show, and we had folks from Hugging Face who host this leaderboard talk to us about this as well, is that even if somebody releases super cool new embedding models with significantly more dimensions, whatever, it doesn't mean that it's working well, because in real world scenarios, sometimes your customers speak different languages.

[00:49:21] Alex Volkov: Most of these embedding models were English only, right? So they beat OpenAI, ada, whatever, at different parameters, but they were only in English, which is not super practical for somebody who builds, I don't know, document retrieval, a RAG pipeline, something like this, where you have customers and you don't know what language they're going to use.

[00:49:41] Alex Volkov: And OpenAI, they don't disclose how many languages they support, but they support most of the stuff that ChatGPT probably supports. BAAI's BGE was one of the top open source ones. They just released a new version they called BGE-M3, and M3 stands for different things; I want to talk about them super quick.

[00:49:57] Alex Volkov: They added multilinguality to this embedding model, which now has a hundred plus languages, which is quite incredible, and probably covers most of the use cases for companies that use embeddings for any type of purpose. They also said something about multi-functionality, and 8K context: they added 8K context to the embeddings.

[00:50:20] Alex Volkov: If you want to embed larger documents, this used to be a big problem as well. And now they added longer context. We've talked with the folks from Jina AI, who are also an incumbent in the space, or somebody who releases models. They also mentioned back then that longer context, or longer sequence lengths, is important because people sometimes embed bigger documents.

[00:50:42] Alex Volkov: And they also said something about multi-functional embedding, which I honestly didn't really get. I will add this to the show notes. It's called FlagEmbedding. It's up on the MTEB leaderboard. It's pretty decent.
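
For anyone new to why embedding quality matters for the document retrieval and RAG use cases mentioned above, here is a minimal sketch of the retrieval step. The vectors are made-up three-dimensional toys standing in for real model output (real embeddings have hundreds or thousands of dimensions), and the document names are hypothetical. Ranking by cosine similarity is one common choice, not necessarily what any particular product uses.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy, hand-made "embeddings"; a real system would get these from a model.
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "api rate limits": [0.0, 0.2, 0.9],
}
query_embedding = [0.8, 0.2, 0.1]  # pretend this encodes "how do I get my money back?"

ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(query_embedding, documents[name]),
    reverse=True,
)
print(ranked)
```

A multilingual model matters here because the query and the documents only land near each other in vector space if the model embeds both languages into the same space.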

[00:50:54] Nomic AI fully open source Nomic Embeddings

[00:50:54] Alex Volkov: And additional breaking news from our friends at Nomic: looks like they're also stepping into the embedding

[00:51:01] Alex Volkov: space. Nomic AI, the company, if you guys are not familiar, you've probably seen their Atlas product, any of you? Atlas is this way to take these embeddings from this incredibly multi-dimensional space and reduce them down to 3D space or even 2D, and then somehow visualize them.

[00:51:20] Alex Volkov: So I think they did this for a bunch of open source datasets. They definitely did this for tweets. So if you go to Atlas from Nomic, you can scroll through the timeline, and they embedded all the tweets up until, or I don't know, 5 million tweets. So you can actually see on their embedding visualization what topics people talked about, because they embedded a bunch of tweets.
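To make the "reduce them down to 2D" idea concrete, here is a minimal sketch using plain PCA via power iteration. This is a swapped-in technique for illustration only; the episode does not say which projection Atlas uses, and the function names and toy vectors below are made up.

```python
import math
import random


def center(rows):
    """Subtract the per-dimension mean from every vector."""
    dims = len(rows[0])
    means = [sum(r[d] for r in rows) / len(rows) for d in range(dims)]
    return [[r[d] - means[d] for d in range(dims)] for r in rows]


def top_direction(rows, iterations=200, seed=0):
    """Power iteration: approximate the unit direction of greatest variance."""
    rng = random.Random(seed)
    dims = len(rows[0])
    v = [rng.uniform(-1.0, 1.0) for _ in range(dims)]
    for _ in range(iterations):
        # Multiply v by X^T X without building the covariance matrix.
        scores = [sum(r[d] * v[d] for d in range(dims)) for r in rows]
        v = [sum(s * r[d] for s, r in zip(scores, rows)) for d in range(dims)]
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        v = [x / norm for x in v]
    return v


def project_2d(embeddings):
    """Project high-dimensional vectors onto their top two principal axes."""
    rows = center(embeddings)
    first = top_direction(rows)
    xs = [sum(a * b for a, b in zip(r, first)) for r in rows]
    # Deflate: remove the first component so the second axis is orthogonal to it.
    residual = [[a - x * b for a, b in zip(r, first)] for r, x in zip(rows, xs)]
    second = top_direction(residual, seed=1)
    ys = [sum(a * b for a, b in zip(r, second)) for r in residual]
    return list(zip(xs, ys))


# Hypothetical 5-dimensional "embeddings": two items per topic.
toy_embeddings = [
    [1.0, 0.9, 0.0, 0.1, 0.0],
    [0.9, 1.0, 0.1, 0.0, 0.0],
    [0.0, 0.1, 1.0, 0.9, 0.2],
    [0.1, 0.0, 0.9, 1.0, 0.1],
]
points = project_2d(toy_embeddings)
for x, y in points:
    print(round(x, 3), round(y, 3))
```

Items from the same toy topic land on the same side of the first axis, which is the effect a 2D embedding map relies on. Tools built for real visualization typically use nonlinear methods rather than plain PCA, so treat this only as the simplest possible version of the idea.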

[00:51:39] Alex Volkov: It's super cool as a demo. So they also have GPT4All, which we mentioned here a couple of times, a similar thing to LM Studio that you can run locally: download models, quantized models I believe as well. And they also do document retrieval locally. So Nomic released an embedding model just as we were coming up on the space.

[00:51:59] Alex Volkov: Yeah. So open source, open data and open training code as well for Nomic Embed, the first release of Nomic Embed. And they claim it's fully reproducible and auditable, which I don't think we get from BAAI. I didn't see any training code. Maybe I'm just missing this. It looks like everybody in the embedding space still tries to at least compete with OpenAI's new text-embedding-3.

[00:52:25] Alex Volkov: And Nomic says that with the context length they outperform Ada, and they outperform text-embedding-3 on short and long tasks as well. And they're featured very well on the leaderboard. The problem with these announcements, as you guys know, is that sometimes you get a Twitter thread or an email or a blog post, and selectively the folks who, how should I say?

[00:52:46] Alex Volkov: The folks who decide which graphs to show, they have to show that they're better at something. So sometimes they only include in the graph the companies or products that they're better than. Many people do this with Mistral, for example. They don't include Mistral in what they beat, because Mistral beats a bunch of stuff in different metrics.

[00:53:05] Alex Volkov: So I don't know if they selected the right ones, but definitely the folks from Nomic show that they outperform text-embedding-3-small from OpenAI and text-embedding-ada, which is quite significant: a recently released embeddings model from OpenAI is now beaten by open source, with fully open data and open training code as well.

[00:53:26] Alex Volkov: That's pretty cool. I don't know about languages from Nomic. So unlike BGE with a hundred plus languages, I don't know if Nomic supports a bunch of languages as well. We're going to have to follow up with Nomic, but definitely open embeddings. And one thing to mention is that they're also

[00:53:44] Alex Volkov: hosting those embeddings on their API, and they're giving like 1 million embeddings for free. Super, super cheap and easy to get started and evaluate for yourself and your use case whether or not this type of stuff is worth it for you to not go through OpenAI. Anybody here on stage used any of the models that we talked about and want to mention?
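One way to "evaluate for yourself" is a small retrieval check on your own documents, rather than relying on the graphs in an announcement. The sketch below computes recall@k with cosine similarity. The vectors are hand-written stand-ins, not output from Nomic, BGE, or OpenAI models, and every name in it is hypothetical; in practice you would fill `doc_vecs` and `queries` with vectors from whichever embedding API you are comparing.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec, doc_vecs, k):
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        doc_vecs,
        key=lambda doc_id: cosine(query_vec, doc_vecs[doc_id]),
        reverse=True,
    )
    return ranked[:k]


def recall_at_k(queries, doc_vecs, relevant, k):
    """Fraction of queries whose known-relevant document is in the top k."""
    hits = sum(
        1 for qid, qvec in queries.items()
        if relevant[qid] in top_k(qvec, doc_vecs, k)
    )
    return hits / len(queries)


# Stand-in vectors; replace with real embeddings of your own data.
doc_vecs = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "api_limits": [0.0, 0.2, 0.9],
}
queries = {
    "q_refund": [0.8, 0.2, 0.1],
    "q_shipping": [0.2, 0.7, 0.2],
    "q_api": [0.6, 0.1, 0.5],
}
relevant = {
    "q_refund": "refund_policy",
    "q_shipping": "shipping_times",
    "q_api": "api_limits",
}

for k in (1, 2):
    print(k, recall_at_k(queries, doc_vecs, relevant, k))
```

On this toy data the sketch misses one of the three queries at k=1 and finds all three at k=2. Running the same labelled queries through two different embedding models gives a like-for-like comparison on your own use case, including whatever languages your customers actually write in.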

[00:54:02] Alex Volkov: And if not, I think we're moving on to the next topic.

[00:54:06] PicoCreator: Yeah. So sorry for the connectivity earlier. Actually, I'm really glad and excited to see BGE as well, because I think, as you mentioned, a lot of AI tends to be focused on English. And one thing that sets RWKV apart, other than, as I think you all probably introduced it, being a new architecture, 10 to 100 times cheaper, that potentially can replace all the AI today as we scale up, is that we took the approach of doing multilingual, and that's because we have a multinational team. This hurts our English evals, but we don't care; we care about supporting everyone in the world. We also face this same problem architecturally, and we also knew about the multilingual problem, especially in embedding space.

[00:54:51] PicoCreator: We just haven't gotten around to doing that, and I'm like, yes, someone has helped with that. Now I can use that together with my model for non-English use cases. Yeah,

[00:55:00]

[00:55:30] This week's Buzz - what I learned in Weights & Biases this week

[00:55:30] Alex Volkov: As you may have heard, ThursdAI is brought to you by Weights & Biases. And one of the segments that I often do on the newsletter, and I decided to do on the podcast as well, is called This Week's Buzz. And the reason why it's called This Week's Buzz is because the Weights & Biases shorthand is wandb, and it sounds like a bee holding a wand.

[00:55:53] Alex Volkov: So we have this internal joke. Haha, get it? And so This Week's Buzz for this week is all about internal projects. I am announcing, I guess, that we're going to have a live recording with my team. So my team is the growth ML team at Weights & Biases. And in December, we all got requested, or I guess we all proposed, different projects for adding LLM features into the business flows to optimize them using OpenAI and other projects like that.

[00:56:29] Alex Volkov: And the team did a bunch of incredible things, and my job here is summarizing and giving folks the platform. And so we presented it to the whole company, and we're going to do a series of live shows. I think there's going to be two parts with the team. So you'll get to meet the team, but you also get to hear about the projects as we build them using the best practices as we know them at the time.

[00:56:54] Alex Volkov: And also what we've learned, what are the struggles, what are the technical challenges. So that's coming up next Monday. That's going to be a very interesting conversation, and please look out for that on our socials. I definitely learned a ton and it's going to be very interesting. So we're probably going to have part one on Monday.

[00:57:13] Alex Volkov: Watch our socials for announcements. I think 2:30 PM Pacific time. And if that type of stuff interests you, the whole reason we're doing this live is for other folks to learn from our experience. So you're more than welcome. This was This Week's Buzz. And now back to the show.

[00:57:35] DATASETS - new ThursdAI segment

[00:57:35] Alex Volkov: The next segment is a new segment for us, and I don't even have a transition planned for it. But it's a dataset segment, and I just want to mention that we've been talking about open source LLMs, we've been talking about all these things multiple times, and for multiple folks on stage here and in the audience, the main voodoo, if you will, is creating or curating these datasets.

[00:58:00] Alex Volkov: For many folks here, I see Alignment Lab, I see LDJ, I see Teknium in the audience, I see other folks, and for many of these models that outperform different models, or for the continued fine-tuning of, let's say, Mistral, for example, this is the main area of interest. This is the main reason why some models succeed more than other models.

[00:58:18] Alex Volkov: We've talked with multiple [00:58:20] folks like this. We've talked with Jon Durbin about Bagel, for example, and his Airoboros dataset, etc. And I just want to shout out that we didn't have a full segment dedicated to datasets before, because part of it was just open source LLMs, obviously. But this also could be the competitive advantage of OpenAI, because they apparently paid a bunch of folks to manually build datasets for them. Yeah, potentially Mistral as well, right?

[00:58:43] Alex Volkov: So before we get to the huge announcements, I want to highlight Luigi here. Luigi, you want to talk about the stuff that you and Argilla just released, the CapybaraHermes stuff? I would love to mention Capybara because it's one of the best datasets that we get to play with.

[00:58:58] LDJ: Yeah, sure. Actually, I wish Daniel from Argilla was here, but I guess I could speak on behalf of them.

[00:59:04] Alex Volkov: Yeah, we'll get

[00:59:05] LDJ: Yeah, so they pretty much made a, so DPO is just in short, like a reinforcement learning method and it's like a type of data. Imagine you have just a question in a dataset, and usually you would just have one response for every question, but instead, let's say you have a scenario where you have multiple alternative responses for that question, and then you just train the model on kind of understanding and giving more importance to different ones in that way, and it's just a little bit more advanced way of training, and it's the standard of how people are training now to get better models.

[00:59:39] LDJ: Pretty much they took the Capybara dataset that I developed and made a DPO version of Capybara, which is they just generated a bunch of alternative responses for the conversations in Capybara with other models, and then they added a bunch of preference data and things like that, and then they took the OpenHermes 2.5 model,

[00:59:59] LDJ: and then they continued training it specifically using the DPO objective, with this Capybara DPO dataset that they made. And, yeah, it has really good results, seems to improve pretty much on all the benchmarks, and they only used half of the Capybara data as well, and they didn't even use that powerful of models to generate these alternative responses.

[01:00:23] LDJ: So I was already talking with Daniel from Argilla about a bunch of ideas for their next version of this that could even be significantly better.

[01:00:30] LDJ: Yeah.
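For readers who want to see what "training with the DPO objective" on preference data looks like, here is a minimal sketch of the per-example loss from Direct Preference Optimization. The record fields, the log-probability numbers, and the `beta` value are illustrative assumptions, not details of the Capybara DPO dataset or of Argilla's training setup.

```python
import math

# Hypothetical preference record: one prompt, a preferred and a rejected reply.
example = {
    "prompt": "Explain what an embedding is.",
    "chosen": "An embedding maps text to a vector so similar texts sit close together.",
    "rejected": "It is a kind of file format.",
}


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss from summed log-probabilities of each response.

    `policy_*` come from the model being trained, `ref_*` from a frozen
    reference copy (for instance, the model before preference training).
    """
    # How much more the policy likes each response than the reference does.
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(margin)), computed as a numerically stable softplus(-margin).
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


# Made-up log-probabilities for the record above.
print(dpo_loss(-10.0, -14.0, -12.0, -12.0))  # policy favours the chosen reply
print(dpo_loss(-14.0, -10.0, -12.0, -12.0))  # policy favours the rejected reply
```

The loss shrinks as the trained model raises the chosen response relative to the rejected one, measured against the reference model, which is what "giving more importance" to the preferred answer amounts to. In a real run the log-probabilities come from scoring each response with both models, and a training library handles batching and gradients.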

[01:00:31] Alex Volkov: Yeah, so I'm going to add this to the show notes. Thank you, LDJ. Argilla is an open source tool. As far as I've seen, it's on GitHub. We use this at Weights & Biases to do different preferences as well.

[01:00:41] Alex Volkov: So you can actually run it and say, hey, this is better. And then there's a bunch of other tools in the datasets area. So the other news in datasets that we want to cover: if you follow ThursdAI at all, to any extent, or if you follow the open source stuff, Capybara is a great dataset.

[01:00:57] Teknium open sources Hermes dataset

[01:00:57] Alex Volkov: Teknium has the Hermes dataset that has been used by many of the top leading models, definitely the Mistral Hermes one. After I think the eye went down for a bit, got like significant attention. But definitely other examples, like the Hermes Yi 34B, are mentioned. And this dataset is now open.

[01:01:16] Alex Volkov: Teknium released it, I think yesterday, was that yesterday, Teknium? Yeah. Teknium does not want to come on stage, no matter how persuasive I am, even though we hang out, but I will just do it justice: this dataset is quite incredible. It's open for you now on Hugging Face. You can go and check it out.

[01:01:33] Alex Volkov: And I can't wait to see what it will lead to, because people will be able to build on top of this as well. Just a shout out to the dataset builders here. This dataset is built from a bunch of other datasets as well. So definitely LMSYS Chatbot Arena and Airoboros by Jon Durbin.

[01:01:51] Alex Volkov: Those are the sources. So Teknium annotated most of the sources as well. His own Collective Cognition dataset. Alpaca and Evol Instruct. Shout out to the folks from Glaive, Sahil and Anton. Glaive Code Assistant is also part of it, so this is work that builds on other works.

[01:02:06] Alex Volkov: This also makes it really hard to fully attribute license-wise. So if you're listening to this and you're like, ah, I'm gonna use this for commercial purposes, it's on you to check whether or not it's possible, and you take the responsibility, because given so many datasets that it builds on top of, I don't think it's possible to very quickly figure out the license here.

[01:02:25] Alex Volkov: But also like SlimOrca, Winglian was here before. Oh, he's in the audience. Shout out Wing from Axolotl and other folks. What else? Oh yeah, Platypus as well. So if you guys remember, I see Ariel in the audience, Platypus folks, we had a great conversation with the folks from Garage-bAInd about Platypus.

[01:02:43] Alex Volkov: So all these incredible datasets combined into one, and then sorted and filtered, and it's really a great release from Teknium. So thank you. Thank you for that. And folks will start building even better datasets on top of this, hopefully.

[01:02:57] Argmax releasing WhisperKit - iOS native Whisper for near real time ASR

[01:02:57] Alex Volkov: Folks, we're moving forward because we have three more big things to talk about, or two more big things and one kind of tiny thing, probably. So I'll just cover the voice and audio very briefly, because we don't have our audio expert here on stage, but you guys know VB from Hugging Face.