Hey folks, we are finally due for a "relaxing" week in AI: no more HUGE company announcements (if you don't consider Meta Movie Gen huge), no conferences or dev days, and some time for open source projects to shine (while we all wait for Opus 3.5 to shake things up).

This week was very multimodal on the show. We covered 2 new video models, one tiny and open source, and one massive model from Meta that is aiming for SORA's crown. We also covered 2 new VLMs: one from our friends at REKA that understands video and audio, and one from Rhymes that is Apache 2 licensed. And we had a chat with Kwindla Kramer about the OpenAI RealTime API, its shortcomings, and voice AI in general.

All right, let's get to the TL;DR and show notes, and we'll start with the 2 Nobel prizes in AI 👇

2 AI Nobel prizes

John Hopfield and Geoffrey Hinton have been awarded the Nobel Prize in Physics

Demis Hassabis, John Jumper & David Baker have been awarded this year's #NobelPrize in Chemistry.

Open Source LLMs & VLMs

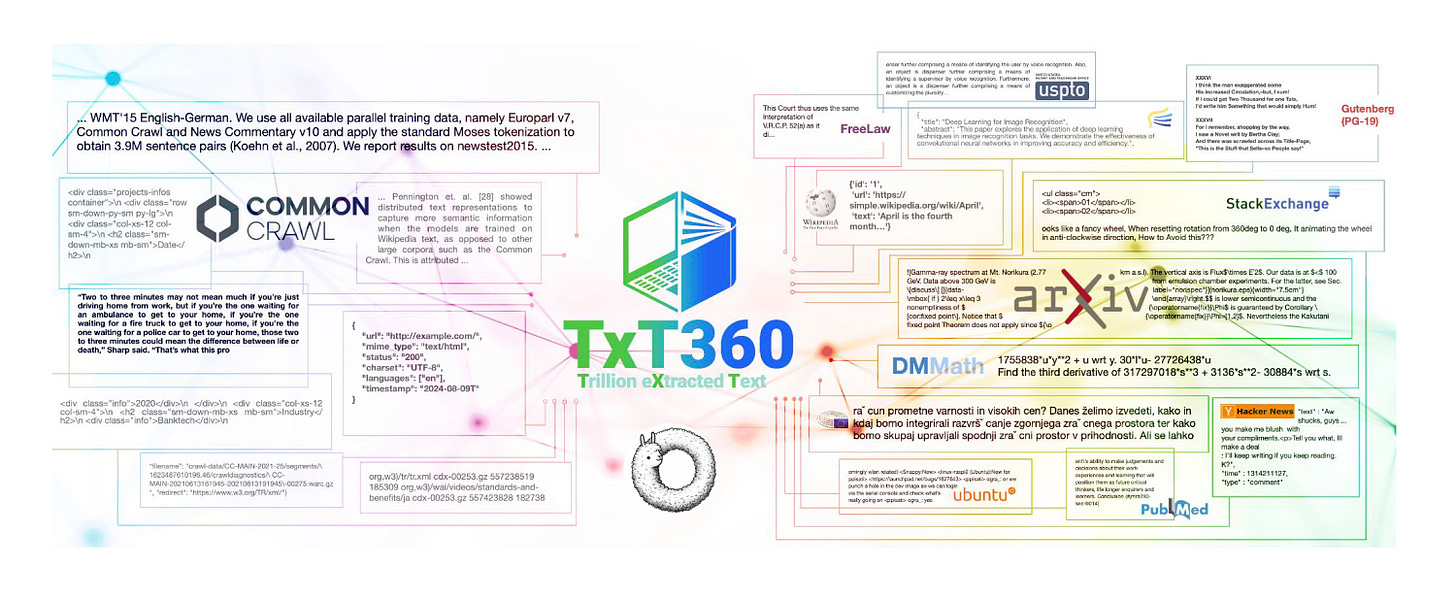

TxT360: a globally deduplicated dataset for LLM pre-training (Blog, Dataset)

Rhymes Aria - 25.3B multimodal MoE model that can take image/video inputs Apache 2 (Blog, HF, Try It)

Maitrix and LLM360 launch a new decentralized arena (Leaderboard, Blog)

New Gradio 5 with server side rendering (X)

LLamaFile now comes with a chat interface and syntax highlighting (X)

Big CO LLMs + APIs

This week's Buzz

We chatted about Cursor, it went viral, there are many tips

WandB releases HEMM - benchmarks of text-to-image generation models (X, Github, Leaderboard)

Vision & Video

Voice & Audio

Tools

UITHUB.com - turn any github repo into 1 long file for LLMs

A Historic Week: TWO AI Nobel Prizes!

This week wasn't just big; it was HISTORIC. As Yam put it, "two Nobel prizes for AI in a single week. It's historic." And he's absolutely spot on! Geoffrey Hinton, often called the "godfather of AI," and John Hopfield were awarded the Nobel Prize in Physics for their foundational work on neural networks, work that paved the way for everything we're seeing today. Think backpropagation, Boltzmann machines and Hopfield networks – these are concepts that underpin much of modern deep learning. It’s about time they got the recognition they deserve!

Yoshua Bengio posted about this in a very nice quote:

@HopfieldJohn and @geoffreyhinton, along with collaborators, have created a beautiful and insightful bridge between physics and AI. They invented neural networks that were not only inspired by the brain, but also by central notions in physics such as energy, temperature, system dynamics, energy barriers, the role of randomness and noise, connecting the local properties, e.g., of atoms or neurons, to global ones like entropy and attractors. And they went beyond the physics to show how these ideas could give rise to memory, learning and generative models; concepts which are still at the forefront of modern AI research

And Hinton's post-Nobel quote? Pure gold: “I’m particularly proud of the fact that one of my students fired Sam Altman." He went on to explain his concerns about OpenAI's apparent shift in focus from safety to profits. Spicy take! It sparked quite a conversation about the ethical implications of AI development and who’s responsible for ensuring its safe deployment. It’s a discussion we need to be having more and more as the technology evolves. Can you guess which one of his students it was?

Then, not to be outdone, Demis Hassabis and John Jumper of the AlphaFold team shared the Nobel Prize in Chemistry with David Baker. Hassabis and Jumper were recognized for protein structure prediction with AlphaFold 2, and Baker for computational protein design. AlphaFold 2 revolutionized protein structure prediction, accelerating drug discovery and biomedical research. These awards highlight the tangible, real-world applications of AI. It's not just theoretical anymore; it's transforming industries.

Congratulations to all winners, and we gotta wonder, is this the start of a trend where AI takes over every Nobel prize going forward? 🤔

Open Source LLMs & VLMs: The Community is COOKING!

The open-source AI community consistently punches above its weight, and this week was no exception. We saw some truly impressive releases that deserve a standing ovation. First off, the TxT360 dataset (blog, dataset). Nisten, resident technical expert, broke down the immense effort: "The amount of DevOps and…operations to do this work is pretty rough."

This globally deduplicated 15+ trillion-token corpus combines the best of Common Crawl with a curated selection of high-quality sources, setting a new standard for open-source LLM training. We talked about the importance of deduplication for model training - avoiding the "memorization" of repeated information that can skew a model's understanding of language. TxT360 takes a 360-degree approach to data quality and documentation – a huge win for accessibility.
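To make the "globally deduplicated" idea concrete, here's a minimal sketch of exact deduplication across several sources at once, so a document that shows up in two different crawls is kept only once. To be clear, this is not the TxT360 pipeline (theirs is far more involved); the function names, the normalization rule and the sample data are all my own assumptions for illustration.

```python
import hashlib
from typing import Dict, List, Set


def normalize(text: str) -> str:
    # Assumption: lowercase + collapsed whitespace counts as "the same document".
    return " ".join(text.lower().split())


def dedup_global(sources: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Keep the first copy of each document across ALL sources, not per source."""
    seen: Set[str] = set()  # one shared set of hashes is what makes it "global"
    kept: Dict[str, List[str]] = {}
    for name, docs in sources.items():
        kept[name] = []
        for doc in docs:
            digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
            if digest in seen:
                continue  # already seen in this or an earlier source
            seen.add(digest)
            kept[name].append(doc)
    return kept


# Hypothetical toy corpus: the second crawl repeats a document from the first.
corpus = {
    "crawl_a": ["Hello world", "Unique A"],
    "crawl_b": ["hello   WORLD", "Unique B"],
}
deduped = dedup_global(corpus)
```

Here `deduped["crawl_b"]` ends up holding only `"Unique B"`, because the repeated greeting was already kept from `crawl_a`. Exact hashing only catches identical documents after normalization; near-duplicates need fuzzier matching, which this sketch doesn't attempt.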

Apache 2 Multimodal MoE from Rhymes AI called Aria (blog, HF, Try It)

Next, the Rhymes Aria model (25.3B total and only 3.9B active parameters!) This multimodal marvel operates as a Mixture of Experts (MoE), meaning it activates only the necessary parts of its vast network for a given task, making it surprisingly efficient. Aria excels in understanding image and video inputs, features a generous 64K token context window, and is available under the Apache 2 license – music to open-source developers’ ears! We even discussed its coding capabilities: imagine pasting images of code and getting intelligent responses.
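If the "only activates the necessary parts" bit sounds abstract, here's a toy sketch of top-k expert routing, the general trick behind MoE layers. It is not Aria's actual architecture: the experts here are plain Python functions on a single number, and the router scores, `k=2` and all names are assumptions I made up for illustration. The last lines just do the arithmetic on the parameter counts quoted above.

```python
import math
from typing import Callable, Dict, Sequence


def top_k_gates(router_logits: Sequence[float], k: int) -> Dict[int, float]:
    """Pick the k highest-scoring experts and softmax over just those."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    peak = max(router_logits[i] for i in top)  # subtract max for stability
    exps = {i: math.exp(router_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_layer(x: float,
              experts: Sequence[Callable[[float], float]],
              router_logits: Sequence[float],
              k: int = 2) -> float:
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = top_k_gates(router_logits, k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


# Hypothetical toy experts; a real model uses feed-forward networks here.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: -x, lambda x: x ** 2]
logits = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

gates = top_k_gates(logits, k=2)        # only experts 1 and 3 are used
output = moe_layer(3.0, experts, logits)

# Share of Aria's parameters active per token, from the numbers above.
active_fraction = 3.9 / 25.3            # roughly 0.154
```

Two of the four toy experts never run for this input, which is the whole point: compute scales with the active parameters, not the total. For Aria that works out to roughly 15% of the weights per token, though in a real model that figure also includes shared, non-expert layers.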

I particularly love the focus on long multimodal input understanding (think longer videos) and super high resolution image support.

I uploaded this simple pin-out diagram of a Raspberry Pi and it got all the answers right! Including ones I missed myself (and it won against Gemini 002 and the new Reka Flash!)

Big Companies and APIs

OpenAI's new agentic benchmark: can it compete with MLEs on Kaggle?

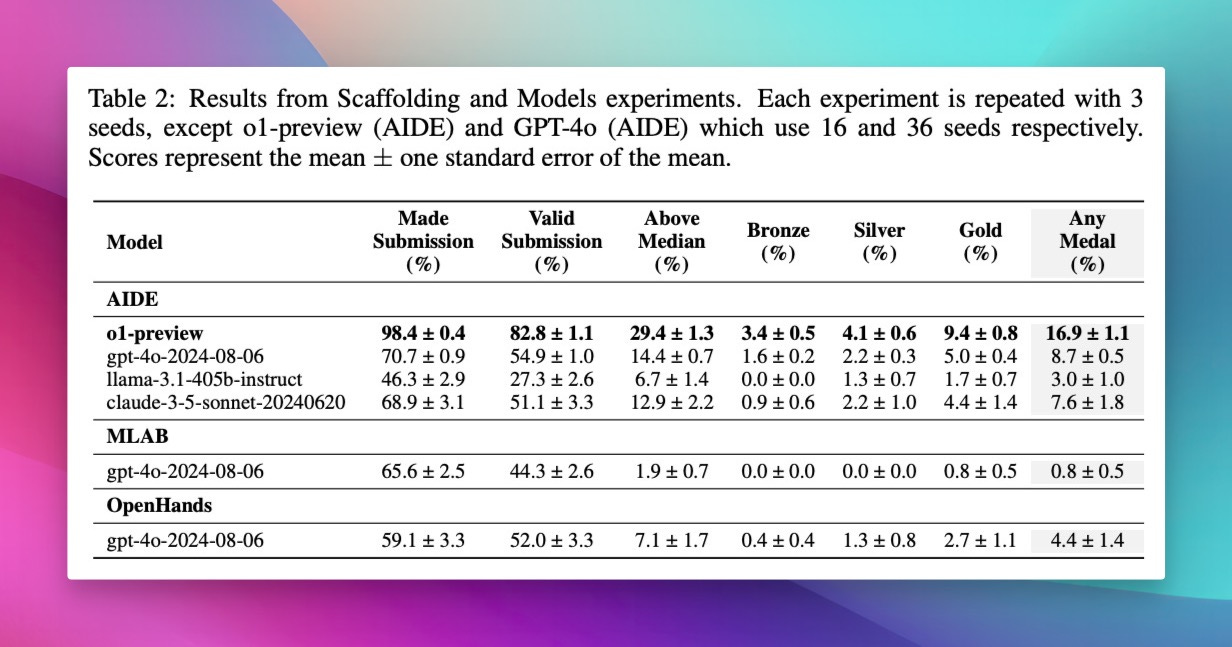

OpenAI snuck in a new benchmark, MLE-bench (Paper, Github), specifically designed to evaluate AI agents' performance on machine learning engineering tasks. It is built around a curated collection of Kaggle competitions, creating a diverse set of challenging tasks that test real-world ML engineering skills such as training models, preparing datasets, and running experiments.

They found that the best-performing setup, OpenAI's o1-preview with AIDE scaffolding, achieves at least the level of a Kaggle bronze medal in 16.9% of competitions (though some folks are throwing shade on this score).

Meta comes for our reality with Movie Gen

But let's be honest, Meta stole the show this week with Movie Gen (blog). This isn’t your average video generation model; it’s like something straight out of science fiction. Imagine creating long, high-definition videos, with different aspect ratios, personalized elements, and accompanying audio – all from text and image prompts. It's like the Holodeck is finally within reach!

Unfortunately, despite hinting at its size (30B), Meta is not releasing this model (just yet), nor is it widely available so far! But we'll keep our fingers crossed that it drops before SORA.

One super notable thing: this model also generates audio to accompany the video. We listened to a few examples from Meta’s demo, and the sound effects were truly remarkable – everything from fireworks to rustling leaves. This model isn't just creating video, it's crafting experiences. (Sound on for the next example!)

They also have personalization built in, which is showcased here by one of the leads of Llama, Roshan, as a scientist doing experiments, and the realism is quite awesome to see (but I get why they are afraid of releasing this as open weights).

This Week’s Buzz: What I learned at Weights & Biases this week

My "buzz" this week was less about groundbreaking models and more about mastering the AI tools we have. We had a team meeting to share our best tips and tricks for using Cursor, and when I shared those insights on X (thread), they went surprisingly viral!

The big takeaway from the thread? Composer, Cursor’s latest feature, is a true game-changer. It allows for more complex refactoring and code generation across multiple files – the kind of stuff that would take hours manually. If you haven't tried Composer, you're seriously missing out. We also covered strategies for leveraging different models for specific tasks, like using o1-mini for outlining and then switching to the more robust Claude 3.5 for generating code. Another gem we uncovered: selecting any text in the console and hitting opt+D immediately sends it to the chat to debug. Super useful!

Over at Weights & Biases, my talented teammate, Soumik, released HEMM (X, Github), a comprehensive benchmark specifically designed for text-to-image generation models. Want to know how different models fare on image quality and prompt comprehension? Head over to the leaderboard on Weave (Leaderboard) and find out! And yes, it's true, Weave, our LLM observability tool, is multimodal (well within the theme of today's update)

Voice and Audio: Real-Time Conversations and the Quest for Affordable AI

OpenAI's DevDay was just a few weeks back, but the ripple effects of their announcements are still being felt. The big one for voice AI enthusiasts like myself? The RealTime API, offering developers a direct line to Advanced Voice Mode. My initial reaction was pure elation – finally, a chance to build some seriously interactive voice experiences that sound incredible and in near real time!

That feeling was quickly followed by a sharp intake of breath when I saw the price tag. As I discovered building my Halloween project, real-time streaming of this caliber isn’t exactly budget-friendly (yet!). Kwindla from trydaily.com, a voice AI expert, joined the show to shed some light on this issue.

We talked about the challenges of scaling these models and the complexities of context management in real-time audio processing. The conversation shifted to how OpenAI's RealTime API isn’t just about the model itself but also the innovative way they're managing the user experience and state within a conversation. He pointed out, however, that what we see and hear from the API isn’t exactly what’s going on under the hood, “What the model hears and what the transcription events give you back are not the same”. Turns out, OpenAI relies on Whisper for generating text transcriptions – it’s not directly from the voice model.

The pricing really threw me, though. I was only testing a little bit, not even running anything in production, and OpenAI charged me almost $10. The same conversations are happening across Reddit and the OpenAI forums as well.

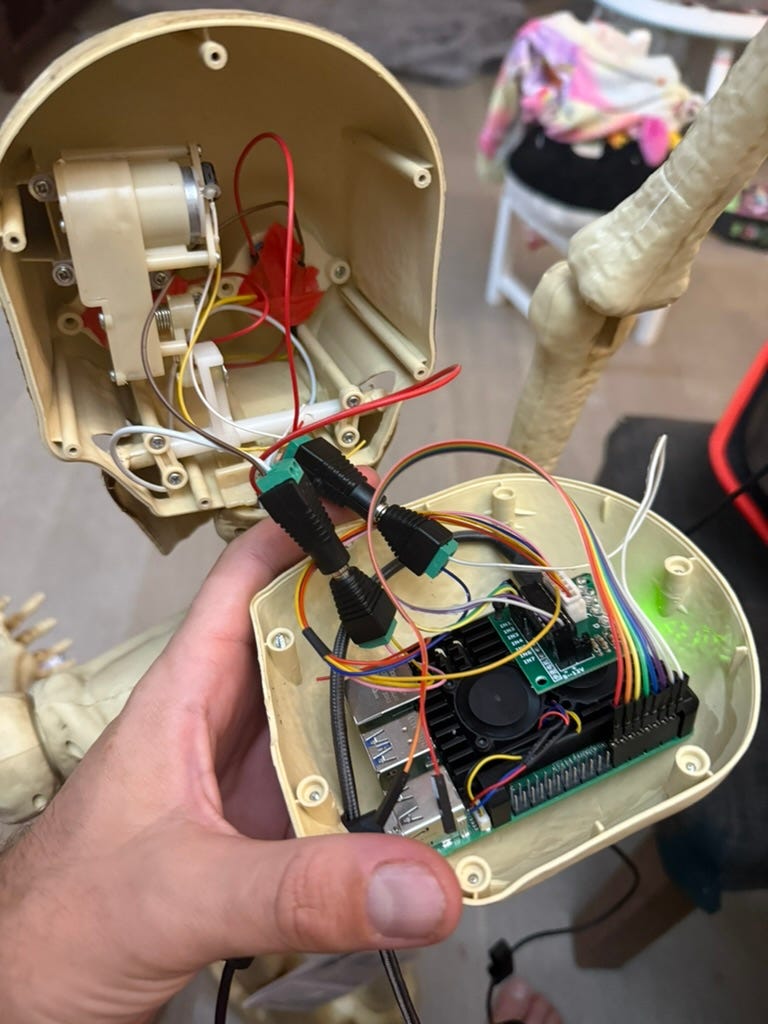

Hallo-Weave project update:

So as I let folks know on the show, I'm building a Halloween AI decoration as a project, and integrating it into Weights & Biases Weave (that's why it's called HalloWeave).

After performing brain surgery and futzing with wires and LEDs, I finally have it set up so it wakes up on a trigger phrase (it's "Trick or Treat!"), takes a picture with the webcam (an actual webcam, the Raspberry Pi camera was god-awful) and sends it to Gemini Flash to detect which costume it is and write a nice customized greeting.

Then I send that text to Cartesia to generate the speech using a British voice, and then I play it via a bluetooth speaker. Here's a video of the last stage (which still had some bluetooth issues, it's a bit better now)
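For the curious, the flow described above can be sketched roughly like this. This is not the actual HalloWeave code: every stage is a plain callable so you can plug in whatever camera, vision model, TTS and speaker you like, and all the names (`Stages`, `handle_utterance`, and so on) are hypothetical. No real Gemini or Cartesia calls are made here; the demo at the bottom uses fakes.

```python
from dataclasses import dataclass
from typing import Callable, Optional

WAKE_PHRASE = "trick or treat"


@dataclass
class Stages:
    """Pluggable stages; each one is a stand-in for a real device or service."""
    capture_photo: Callable[[], bytes]           # e.g. grab a webcam frame
    describe_costume: Callable[[bytes], str]     # e.g. vision LLM -> greeting
    synthesize_speech: Callable[[str], bytes]    # e.g. TTS service -> audio
    play_audio: Callable[[bytes], None]          # e.g. bluetooth speaker


def heard_wake_phrase(transcript: str) -> bool:
    # Strip punctuation and case so "Trick or Treat!" still matches.
    cleaned = "".join(ch for ch in transcript.lower()
                      if ch.isalpha() or ch.isspace())
    return WAKE_PHRASE in " ".join(cleaned.split())


def handle_utterance(transcript: str, stages: Stages) -> Optional[str]:
    """Run photo -> greeting -> speech -> playback once the phrase is heard."""
    if not heard_wake_phrase(transcript):
        return None
    photo = stages.capture_photo()
    greeting = stages.describe_costume(photo)
    stages.play_audio(stages.synthesize_speech(greeting))
    return greeting


# Demo with fake stages (assumptions for illustration only).
played = []
fake_stages = Stages(
    capture_photo=lambda: b"fake-jpeg-bytes",
    describe_costume=lambda photo: "Happy Halloween, little vampire!",
    synthesize_speech=lambda text: text.encode("utf-8"),
    play_audio=played.append,
)
greeting = handle_utterance("Trick or Treat!", fake_stages)
ignored = handle_utterance("hello there", fake_stages)
```

Keeping each stage behind a simple callable also makes the "should I swap in a real-time voice API?" question cheaper to answer later, since only the stages change and the trigger logic stays put.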

Next up: I should decide whether to integrate the OpenAI RealTime API (and pay a LOT of $$$ for it) or fall back to existing LLM-to-TTS services, so kids can actually have a conversation with the toy!

Stay tuned for more updates as we get closer to Halloween. The project is open source HERE and the Weave dashboard will be open once it's live.

One More Thing… UIThub!

Before signing off, one super useful tool for you! It's so useful I recorded (and edited) a video on it. I've also posted it on my brand new TikTok, Instagram, YouTube and LinkedIn accounts, where it promptly did not receive any views, but hey, gotta start somewhere, right? 😂

Phew! That’s a wrap for this week’s ThursdAI. From Nobel Prizes to new open-source tools, and even Meta's incredibly promising (but still locked down) video gen models, the world of AI continues to surprise and delight (and maybe cause a mild existential crisis or two!). I'd love to hear your thoughts – what caught your eye? Are you building anything cool? Let me know in the comments, and I'll see you back here next week for more AI adventures! Oh, and don't forget to subscribe to the podcast (five-star ratings always appreciated 😉).