ThursdAI - Sunday special deep dive, interviews with Joao, and Jon, AI agent Crews and Bagel Merges.

Happy Sunday dear reader,

As you know by now, the ThursdAI pod is not a standard interview-based podcast. We don't usually focus on a 1:1 guest/host conversation, but from time to time we do! This week I was very lucky to have one invited guest and one surprise guest, and I'm very happy to bring you both conversations today.

Get your Crew together - interview with João Moura, creator of CrewAI

We'll first hear from João Moura, the creator of CrewAI, the latest agent framework. João is a director of AI engineering at Clearbit (recently acquired by HubSpot) and created CrewAI for himself, to automate many of the things he didn't want to keep doing by hand, for example posting more on LinkedIn.

CrewAI has been getting a lot of engagement lately, and we get into that in the conversation with João. It has been trending #1 on GitHub, and it finished as #2 product of the day on Product Hunt when Chris Messina hunted it (to João's complete surprise).

CrewAI is an agent framework built on top of LangChain, focused on the orchestration of role-playing, autonomous agents.
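To make "role-playing agents working through tasks" a bit more concrete, here is a minimal sketch in plain Python. It deliberately does not use the CrewAI API: `ToyAgent`, `ToyTask`, `run_sequential`, and the stand-in `fake_llm` are all hypothetical names I made up for illustration, and passing the previous output along as context is my assumption about what a sequential process looks like.

```python
from dataclasses import dataclass
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it only echoes the prompt."""
    return f"[response to: {prompt[:60]}]"


@dataclass
class ToyAgent:
    role: str
    goal: str
    llm: Callable[[str], str] = fake_llm

    def perform(self, task_description: str, context: str) -> str:
        # The "role playing" is just a prompt that tells the model who it is.
        prompt = (
            f"You are a {self.role}. Your goal: {self.goal}.\n"
            f"Context from previous task: {context or 'none'}\n"
            f"Task: {task_description}"
        )
        return self.llm(prompt)


@dataclass
class ToyTask:
    description: str
    agent: ToyAgent


def run_sequential(tasks: List[ToyTask]) -> List[str]:
    """Run tasks in order, feeding each output to the next task as context."""
    outputs: List[str] = []
    context = ""
    for task in tasks:
        result = task.agent.perform(task.description, context)
        outputs.append(result)
        context = result
    return outputs
```

Swapping `fake_llm` for a function that calls a hosted or local model is the only change needed to try this with a real LLM. The real framework adds tools, delegation, and other process types on top of this basic shape.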

In our chat with João we go into the inspiration, the technical challenges, and the success of CrewAI so far, how maintaining CrewAI is now partly a family effort, and what's next for the project.

Merges and Bagels - chat with Jon Durbin about Bagel, DPO and merging

The second part of today's pod was a conversation with Jon Durbin, a self-described AI tinkerer and software engineer. Jon is a Sr. applied AI researcher at Convai, and is well known in our AI circles as a master finetuner and dataset curator.

This interview was not scheduled, but I'm very happy it happened! If you've been following along with the AI / finetuning space, Jon's Airoboros dataset and set of models have often been mentioned and cited, and Jon's latest work on the Bagel models took the lead on the Hugging Face Open LLM Leaderboard.

So when I mentioned on X (as I often do) that I was going to talk about this on ThursdAI, Jon came up to the space and we had a great conversation, in which he shared a LOT of deep insights into finetuning, DPO (Direct Preference Optimization) and merging.

The series of Bagel datasets and models was inspired by the movie Everything Everywhere All at Once (which is a great movie, watch it if you haven't!) and alludes to Jon trying to throw as many datasets together as he could, but not only datasets!

There has been a lot of interest in merging models recently. Specifically, many folks are using MergeKit to merge models with other models (and often a model with itself) to create larger/better models, without additional training or GPU requirements. This is solely an engineering thing; some call it frankensteining, some frankenmerging.

If you want to learn about merging, Maxime Labonne (the author of Phixtral) has co-authored a great deep-dive on the Hugging Face blog. It's a great resource to quickly get up to speed.
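If "merging without training" sounds abstract, here is a toy sketch of two common ideas: weighted averaging of matching weights, and stacking layer ranges from two models into a deeper one (the frankenmerge flavor). This is an illustration under my own assumptions, not how MergeKit is implemented; the `Weights` type, `linear_merge`, and `passthrough_merge` are hypothetical names, and real tools work on tensors rather than Python lists.

```python
from typing import Dict, List, Tuple

# A "model" here is just named lists of floats (hypothetical toy format).
Weights = Dict[str, List[float]]


def linear_merge(model_a: Weights, model_b: Weights, alpha: float = 0.5) -> Weights:
    """Interpolate two models that share tensor names and shapes."""
    if model_a.keys() != model_b.keys():
        raise ValueError("models must have the same tensor names")
    merged: Weights = {}
    for name in model_a:
        a, b = model_a[name], model_b[name]
        if len(a) != len(b):
            raise ValueError(f"shape mismatch for {name}")
        # alpha = 1.0 keeps model_a, alpha = 0.0 keeps model_b.
        merged[name] = [alpha * x + (1.0 - alpha) * y for x, y in zip(a, b)]
    return merged


def passthrough_merge(
    layers_a: List[Weights],
    layers_b: List[Weights],
    slices: List[Tuple[str, int, int]],
) -> List[Weights]:
    """Stack layer ranges from two models; weights are copied over unchanged."""
    sources = {"a": layers_a, "b": layers_b}
    stacked: List[Weights] = []
    for source, start, end in slices:
        stacked.extend(sources[source][start:end])
    return stacked
```

For example, `passthrough_merge(layers_a, layers_b, [("a", 0, 3), ("b", 1, 4)])` on two 4-layer toy models yields a 6-layer stack. No gradient step happens anywhere, which is why this kind of merge needs no training run.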

So given the merging excitement, Jon set out to create a model that can be an incredible merge base. Many models use different prompt techniques, and Jon has tried to cover as many as possible. Jon also released a few versions of the Bagel models, DPO and non-DPO, and we had a brief conversation about why the DPO versions are more factual and better at math, but not great for role playing (which is unsurprisingly what many agents are using these models for) or creative writing. The answer is, as always, the dataset mix!
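For readers who haven't met DPO yet, here is a small sketch of the standard DPO loss for a single preference pair, as I understand it from the original DPO formulation. It is not Jon's training code. The function name `dpo_loss` and the default `beta=0.1` are my own choices, and the inputs are assumed to be summed log-probabilities of each full response under the model being trained (the policy) and under a frozen reference model.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) pair: -log(sigmoid(margin))."""
    # How much more the policy likes each response than the reference does.
    chosen_reward = policy_chosen_logp - ref_chosen_logp
    rejected_reward = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_reward - rejected_reward)
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch to avoid overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss drops as the policy raises the chosen response relative to the rejected one, measured against the reference. Which pairs go into the dataset decides what "chosen" means, which fits the point above that the dataset mix drives the behavior.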

I learned a TON from this brief conversation with Jon. If you're interested in the incredible range of techniques in the open source LLM world, DPO and merging are definitely at the forefront of this space right now, and Jon is right at the crossroads of them, so it's definitely worth a listen. I hope to get Jon to say more, and to learn more, in future episodes, so stay tuned!

So I'm in San Francisco, again...

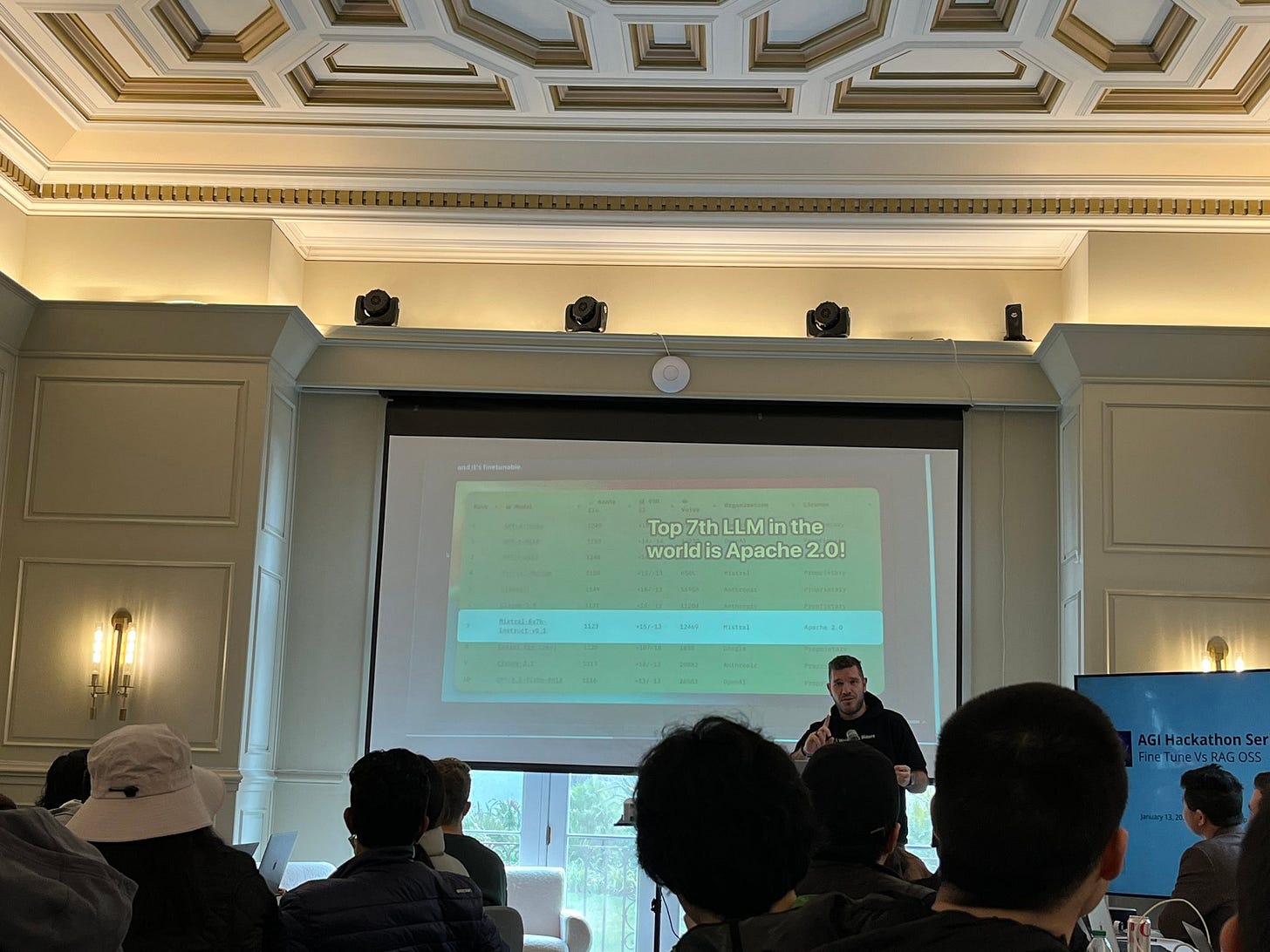

As I mentioned in the previous newsletter, I was invited to step in for a colleague and fly to SF to help co-host a hackathon with friends from TogetherCompute and LangChain, at AGI House in Hillsborough, CA. The hackathon was under the Finetune VS RAG theme, because, well, we don't know what works better, and for what purpose.

The keynote speaker was Tri Dao, Chief Scientist @ Together and the creator of Flash Attention, who talked about state space models (SSMs) and Mamba.

Harrison from Langchain gave a talk with a deepdive into 5 techniques for knowledge assistants, starting with basic RAG and going all the way to agents 👏

I also gave a talk. I couldn't record a cool gif like this for myself, but thanks to Lizzy I got a pic as well 🙂 Here is the link to my slides if you're interested (SLIDES)

More than 150 hackers got together to try and find this out, and it was quite a blast for me to participate and meet many of the folks hacking, hear what they worked on, what worked, what didn't, and how they used WandB, Together and Langchain to achieve some of the incredible results they hacked together in a very short time.

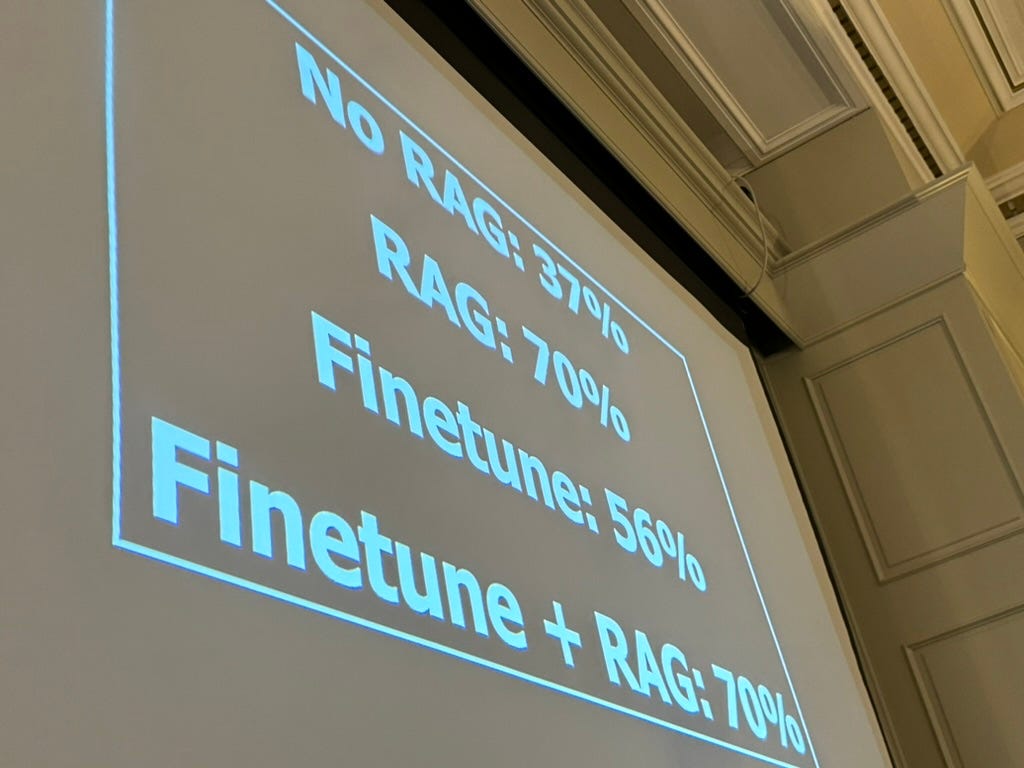

The projects showcased a range of creative applications leveraging RAG, finetuning, and other large language model techniques. Several projects like Magic RAG, CareerNavigator-AI, and CompetitionAI used RAG for document retrieval and knowledge enhancement. Others like rags2pizza and Naturalist DALL-E focused more on finetuning models for specific domains. Some projects compared finetuning and RAG and found that combining both performed better than using either alone, but that result wasn't conclusive.

My vote as a judge (which I did not expect to be) eventually went to the team that built the OptiMUS project. They generated a synthetic dataset, cleaned it up, finetuned a model on it, and showed how they want to optimize AI agents. They used WandB to track their work, and I hope they take this project forward and keep making advancements in AI. Congrats on the win, Ali and Shayan, hope you enjoy the WandB branded AirPods (even I don't have those) and the Meta Quest, well deserved!

Thank you for tuning in! See you next week!

Full Transcription:

[00:00:00] Alex Volkov: Hi, welcome back to ThursdAI, the Sunday special episode. This is Alex Volkov, and I'm recording this in a gorgeous space in San Francisco, where I was invited to judge a hackathon. Now I'm hanging out with a few friends from Cerebral Valley, so thank you, Valley folks, for letting me use this place for recording. Today we have a special episode for you. If you're hearing this on Sunday, today's not a Thursday. We oftentimes have special guests on the pod, where the conversations are deeper.

[00:00:45] Alex Volkov: And usually I reserve that slot for a Sunday special release, so this is what you're hearing now. In today's episode, we actually have two conversations, although I only planned on one. The first part is the planned part, where you'll hear from João Moura. He is a director of AI at Clearbit, now acquired by HubSpot, and he's also the creator of CrewAI, an agentic AI framework that runs by orchestrating digital AI agents and having them work together.

[00:01:19] Alex Volkov: And I think you'll hear from Joao why this piqued interest for many folks, specifically because, as we caught up with Joao,

[00:01:29] Alex Volkov: CrewAI was trending on GitHub and getting number two on Product Hunt at the same time. It's a really cool framework, and I think the underlying power of this is that it can use open source, local models. A lot of previous agent attempts used GPT-4, for example, and CrewAI can use things like Mistral or Mixtral running in LM Studio or Ollama on your Mac, which I think is super cool.

[00:01:55] Alex Volkov: And I think on-device AI, plus something like this framework, is going to be very, very powerful. It was a great conversation with Joao. And, surprising to me, the second guest was not planned. However, you may have heard on the previous ThursdAI that the Bagel series of models from a self-proclaimed AI tinkerer, Jon Durbin, have taken over the leaderboards on Hugging Face, including a bunch of merges. We haven't done a deep dive into merges and MergeKit and Frankenstein models.

[00:02:32] Alex Volkov: But if you've been listening to ThursdAI for a while, you've probably heard about them. Merging is a technique to take a model, or different models, and without any heavy computation create bigger or different models by dissecting and recombining the layers of those models, just based on weights, without any training or continued fine-tuning, which is incredibly interesting.

[00:02:58] Alex Volkov: And Jon goes into this a little bit, and he created Bagel based on... well, I'll let you hear this at the end. It's a very fascinating conversation. I took a lot from it, and unfortunately we didn't have time for a long, deep dive, but I learned a lot from Jon, and hopefully he'll come back to the podcast and we'll be able to dive even deeper and talk with Jon about how to create datasets, why DPO is better than PPO, and all of these great things. So we had two great guests, and I had a blast having them on the pod, and I probably should do more of these deep dives.

[00:03:37] Alex Volkov: So please let me know what you think. Don't forget to subscribe to the newsletter, where I send a summary, and in the newsletter you'll find my trip report, quote unquote, for the hackathon that was co-sponsored with Together AI and LangChain. Harrison was there, and I gave a brief talk as well. And I added a bunch of pictures.

[00:03:57] Alex Volkov: So if you're hearing this in your car, check out the newsletter afterwards on ThursdAI.

[00:04:02] Alex Volkov: And with that, I give you our first guest, João Moura. All right, everyone. Welcome back to ThursdAI. We have a great guest today: João Moura from, I want to say, Clearbit, if I'm not mistaken. Joao, could you please introduce yourself and what you do, and then we're going to talk about the thing we're here to talk about.

[00:04:36] Joao Moura: A hundred percent. Thank you for having me. First of all, you got my name right, it's hard to pronounce. I go by Joao, make it easier for everyone. I work at Clearbit, yes, but we just got acquired by HubSpot. I'm not sure. I'm Joao from Clearbit, from HubSpot, and from Crew AI. Everything at once.

[00:04:54] Alex Volkov: Awesome.

[00:04:58] Alex Volkov: I think it's your first time here on stage. Welcome. We met in San Francisco, at the Ollama open source event. I think Teknium was there, and a bunch of other folks from Ollama, and I met you and we had a brief conversation. You mentioned CrewAI to me, and it sounded super, super interesting, and then this week and the previous week there was like an explosion of interest in CrewAI.

[00:05:17] Alex Volkov: So I would love to hear from you how your last few weeks have been going, definitely the time since we met. A lot of stuff happened to CrewAI. Could you just, without saying what CrewAI is, recap your experience for the past two weeks?

[00:05:33] Joao Moura: A hundred percent, a hundred percent. And first of all, that Ollama event the other day was so good. I had so much fun at it.

[00:05:41] Alex Volkov: It was.

[00:05:41] Joao Moura: The last couple of weeks have been intense, I gotta tell you. The thing kind of got blown out of proportion. I have a lot of DMs, a lot of messages, a lot of issues, and not that many pull requests, I want to say, but it has been a lot of fun.

[00:05:59] Joao Moura: CrewAI just seems to have a lot of interest from different people. I think this idea of building AI agents is something that captivates most of the tinkerers out there, like how you can automate your life away. And it seems to have been resonating with a bunch of engineers out there.

[00:06:16] Joao Moura: The last couple of weeks have been very intense in terms of writing code late at night and having to spare a few hours to answer DMs and help with the Discord community. I actually ended up recruiting my wife to help me with that. So if you see Bianca on Discord or over GitHub issues, that's my wife helping me out, making sure that I get it all covered.

[00:06:41] Alex Volkov: Definitely shout out Bianca, thanks for helping. And as well, so, now trending on GitHub, I think number one, and I think somebody submitted this to Product Hunt as well?

[00:06:50] Joao Moura: That was a thing. So I have been working on this, and as an engineer working on an open source project, you don't think about this project as a product necessarily from the get-go. But as it started to get more traction, it got the interest of this one guy. I don't know if it's a big deal or not, but it seems that he hunts a lot of products on Product Hunt.

[00:07:14] Joao Moura: And for some reason he got, like... The super fun thing is that I have been part of, and I have seen, other product launches, and I know how much effort goes into preparing those, to be ready for it and have social media ready to post a lot of content about it. And I had none of that.

[00:07:36] Joao Moura: I woke up in the morning and there was a message from a VC saying like, Hey, congratulations on your launch. And I was like, What is this guy talking about? I have no clue. It was very interesting because I, I opened like Product Hunt's website and I'm searching like, how do I cancel this? Like, I, I didn't want to launch this, at least not right now.

[00:07:58] Joao Moura: And in Product Hunt's documentation, they mention that you have two options: either you send them a super urgent message so that they can pull the brakes on it, or you run with it.

[00:08:13] Joao Moura: And at the end of the day, I was like, I'm just going to run with it. I'm going to see how it goes. And it turns out we ended the day as number two.

[00:08:20] Joao Moura: And that was, that was something else. Thanks.

[00:08:25] Alex Volkov: Chris Messina, the number one hunter. I think he hunted like most of the products on Product Hunt, so shout out Chris. And definitely, I saw this, and what a surprise to wake up to and then get product number two. It definitely helped the stats, probably. Right. So I think, with this excitement, let's talk about why it's so exciting.

[00:08:43] Alex Volkov: Could you give us a brief primer on CrewAI? We've talked about agents before. We obviously talked about AutoGPT previously, and GPT Engineer from Anton Osika, and a bunch of other very interesting projects. Could you give us the brief primer on CrewAI, what it is?

[00:08:57] Alex Volkov: And then we're going to talk about like why you built it and the orchestration stuff.

[00:09:02] Joao Moura: A hundred percent. CrewAI is a very thin framework. It's a Python framework. It's in the process of being converted to TypeScript as well, but it's a Python framework that allows you to build a group of AI agents. You can think about it as if AutoGen and ChatDev had a child.

[00:09:21] Joao Moura: That's the way that I usually describe it. So you're going to have a group of AI agents that are role playing in order to perform a complex series of tasks. You can do all sorts of automations with it, and you can plug it into all sorts of different systems out there. I think that's the easiest way to describe it right now.

[00:09:43] Alex Volkov: Awesome. And you briefly mentioned this. Could you talk about the inspiration here? What made you start this as Clearbit was getting acquired, or around that time at least? What made you work on this? There's a bunch of other orchestration platforms out there, a bunch of agents. What made you write your own instead of taking something off the shelf in open source?

[00:10:06] Joao Moura: So it turns out that this is a fun story. So we're back to my wife again, always propping me up. I love her. She's so great. She was telling me, hey, you have been doing all this amazing work at Clearbit. Because at Clearbit, we have been doing work with LLMs for the past year.

[00:10:22] Joao Moura: And at a scale that I believe not many have. And she was like, You should be sharing more about this. Like, you're leading these efforts and you're doing all these complex systems at scale. And this could definitely help and benefit other people. So she was telling me that I should do a better job at posting online in things like LinkedIn and Twitter.

[00:10:41] Joao Moura: And Twitter, I think I'm okay with, but LinkedIn was always hard for me. I feel like there is a higher threshold for how good your idea must be before you post it on LinkedIn. So I was considering how I can do better LinkedIn posting. And because I was so excited about AI agents, I was like, can I build a couple of agents that will actually help me out with this, where I can shovel in my draft and rough ideas?

[00:11:11] Joao Moura: And it's going to come up with some guidance and a better post that I can just edit and post. It turns out that I could, and that's how I started CrewAI. I looked into AutoGen and I was not a huge fan of how they, like, one, they didn't have the option to execute tasks sequentially. They also have a lot of assumptions on how these agents should work together.

[00:11:34] Joao Moura: And I think the way that they work together should vary depending on the tasks that you're trying to accomplish. I was not a huge fan of it. ChatDev, on the other side, I saw a lot of good stuff in it, but it just didn't feel like a production system, right? It has like a game-like UI, something that you would experiment with, but not something that you would deploy in production.

[00:11:56] Joao Moura: So that's how I came up with this idea of, maybe I should do something myself so I can build this LinkedIn automation. And if that works, then I can build other sorts of automations. And that's how I started CrewAI. I built five agents, from a social network researcher all the way to a chief content officer, to help me create great ideas so that I can post them on LinkedIn.

[00:12:23] Joao Moura: And it works great. I went from never posting on LinkedIn to posting like three to four times every week. And I love what I post, and it seems other people do as well. So from that point on, I decided that I wanted to create more automations, and that's how CrewAI came to be. I just abstracted what I learned from that experience into this framework that I could then use to build other sorts of automations, and things took off from there.

[00:12:50] Alex Volkov: Wow, that's incredible. Incredible story. As with a lot of the engineering stories where people create cool things, laziness is somewhere in there. Like, I want to automate something that I don't want to do, but I definitely need done. I definitely have a bunch of those as well, at least for ThursdAI: the collection stuff and the other stuff that I would love to just happen for me.

[00:13:10] Alex Volkov: So I definitely want to check out CrewAI for that and create a ThursdAI collection thing. Could you mention the technical challenges here? You did mention that it's based on LangChain, if I'm not mistaken. You mentioned that there's not a lot of pull requests from people helping out. Could you talk about the technical challenges you ran into?

[00:13:30] Joao Moura: Yes, so basically, when I started to build this out, I realized pretty quickly that agents are only as useful as the tools you can connect them with. And when I was looking online, I realized that both LlamaIndex and LangChain already had all these amazing tools that you could run with.

[00:13:52] Joao Moura: So I wanted to make sure that people could use those tools too, and build crews that use them. Because of that, I took the decision to build CrewAI around LangChain, so that if anyone wants to hook that up with their GitHub or their Gmail, there are already tools that were built out for that, and they're pretty easy to plug in and just work.

[00:14:15] Joao Moura: And it seems LlamaIndex tools also work. I'm putting together some experiments around that to share with more people. But basically that was one of the initial decisions that led to this design. I think some of the technical challenges that came from it come from realizing that, as people start creating all these different crews for these different use cases, there are so many edge cases, right?

[00:14:38] Joao Moura: You know that you can try to steer LLMs your way, but especially if you're using open source LLMs and smaller LLMs, they have a harder time just sticking with a given format.

[00:14:54] Joao Moura: I started to add a bunch of guardrails in CrewAI that actually make it way better than what you would get with any other agent framework out there. For example, one of them is if you're running out of iterations, like your agent is stuck in a cycle or taking too long to come up with an answer, it's going to force it to come up with an answer if it goes over a certain number of iterations that you can define.

[00:15:21] Joao Moura: Another one is if it tries to use the same tool twice in a row, it's going to prevent it from doing that and guide it towards moving on. Another one is it has caching. So every tool any agent uses is going to be cached, so that if any other agent in the group decides to use the same tool, they don't need to actually execute it.

[00:15:41] Joao Moura: So I think a lot of the challenges come from how I can add all these guardrails to make sure that, independently of the use case and what the person is building a group of agents for, it's still going to run smoothly. And that's where a lot of the work has been going.
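Editor's sketch: the three guardrails Joao describes (an iteration cap that forces an answer, blocking the same tool call twice in a row, and a tool cache shared between agents) can be illustrated with a toy agent loop. This is plain Python written for this transcript, not CrewAI's actual implementation. `ToolCache`, `run_agent`, and the `decide` callback are hypothetical names, and treating "same tool" as "same tool with the same input" is an assumption.

```python
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, str]  # (tool_name, tool_input), or ("final", answer)


class ToolCache:
    """Shared by all agents so a repeated (tool, input) call is not re-executed."""

    def __init__(self) -> None:
        self._store: Dict[Action, str] = {}
        self.hits = 0

    def run(self, tools: Dict[str, Callable[[str], str]], name: str, tool_input: str) -> str:
        key = (name, tool_input)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        result = tools[name](tool_input)
        self._store[key] = result
        return result


def run_agent(
    decide: Callable[[List[str]], Action],
    tools: Dict[str, Callable[[str], str]],
    cache: ToolCache,
    max_iterations: int = 5,
) -> str:
    """decide(history) stands in for the LLM choosing the next action."""
    history: List[str] = []
    last_action: Optional[Action] = None
    for _ in range(max_iterations):
        action = decide(history)
        if action[0] == "final":
            return action[1]
        if action == last_action:
            # Guardrail: same call twice in a row, so nudge instead of executing.
            history.append("You just used that tool with that input; try something else.")
            continue
        last_action = action
        history.append(cache.run(tools, action[0], action[1]))
    # Guardrail: out of iterations, so force an answer from what we have.
    return "Best effort answer based on: " + " | ".join(history)
```

An agent that keeps asking for the same search three times would execute the tool once, get nudged twice, and then be forced to answer. A second agent sharing the same `ToolCache` would get the stored search result without running the tool again.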

[00:16:01] Alex Volkov: So you mentioned local models as well.

[00:16:04] Alex Volkov: We mentioned that we met at the Ollama event, and Ollama, shout out Ollama folks, is a CLI to be able to download and run open source models on your hardware, basically. Many of the previous agent attempts, AutoGPT and different ones, used maybe GPT-4 or something.

[00:16:20] Alex Volkov: We're getting to the tools, and we heard previously in the space from Jon Durbin that there are models now that are better for specific tasks like function calling as well. Joao, could you speak a little bit about the difference that you see? CrewAI can work with both, right? Open source and also API ones.

[00:16:39] Alex Volkov: And could you talk a little about the difference that you see between the open source models as we have them right now versus the online models, and which ones would you prefer for your tasks?

[00:16:50] Joao Moura: It turns out that I think the fact that CrewAI supports local models is something that made it take off, because that's something that I wanted from the get-go. These agents, especially if you're trying to automate complex tasks, can become rather costly if you want to run them 24/7.

[00:17:09] Joao Moura: But with the ability to use local models, you can basically just set and forget, and they're going to keep doing work for you. So I wanted to make sure to support local models because of that. CrewAI supports any of the vendors that you're going to find support for in LangChain. So you can use any of the open source models out there, Anthropic, GPT, you name it.

[00:17:30] Joao Moura: And you can also use Ollama, you can also use LM Studio, whatever is the best way that you have to run your models locally, you can use that. I personally love Ollama. Ollama is amazing. I love the guys that built it as well. And I think it's so easy to use that I ended up using that. And I have been using some of the smaller models.

[00:17:51] Joao Moura: Shout out to Nous Research. I love that OpenHermes 2.5 model. It's just amazing and so small. I can't believe how good it is. And that's one that I use a lot. I'm using OpenHermes 2.5 just because of how well it works, but I also tried with Mistral, I also tried with Solar, I also tried with Nexus. So many models out there, so good.

[00:18:19] Joao Moura: One thing that I want to call out as well is that these local models definitely struggle a little bit more compared to GPT-4 in terms of sticking with a given format. I'm also collecting all my execution data so that I can fine-tune agentic models. Similar to how you have instruct models and chat models, I want to make sure that we start to see more agentic models out there.

[00:18:46] Joao Moura: I have seen some closed source ones that you're not able to touch. So I'm building an open source dataset that I can then use to fine-tune those models. And then you basically are going to have these agents run on local models without a glitch. That would be at least the end goal.

[00:19:05] Alex Volkov: That's incredible, incredible, specifically because

[00:19:08] Alex Volkov: we've had interviews with a bunch of folks who build agentic stuff. One of the more successful episodes of last year for ThursdAI was an interview with Killian Lucas from Open Interpreter, and the open source community here definitely opened the thread with Killian specifically to say, hey, when the users run a bunch of this stuff, we would love to have

[00:19:27] Alex Volkov: users opt in, maybe, for some telemetry or analytics, to be able to build the datasets for the tasks that were completed or not completed. I don't know if you have this plan, but definitely this is a benefit to the community if you do have a way for folks to log their stuff. I'll also mention that I probably should reach out to you separately to see if these runs for these agents in CrewAI could be logged in Weights & Biases with the integration.

[00:19:50] Alex Volkov: I would definitely be more than happy to participate and see if we can look at the execution stuff of your agents on Weights & Biases as well. Before I bring in Umesh, who has a bunch of questions for you as well, he's been running and he does agents of his own, I want to ask:

[00:20:06] Alex Volkov: What are the next plans for CrewAI? Where are you planning to take this? With many of these projects, people suddenly ask for a UI, because maybe they don't want to do installing and Python stuff. You already mentioned TypeScript. Could you give us a little bit of a sense of the future, where you are planning to take this?

[00:20:23] Joao Moura: I think what we are getting now is a bunch of feature requests from a bunch of different sides. So there is some prioritization going on so that I can figure out what to focus on next. One thing that seems to be a no-brainer to me, though, is that we need to have a UI for this.

[00:20:37] Joao Moura: I think this would be pretty cool and unlock a lot of use cases for people out there. I know there are other people that have been building UIs for their, like their businesses that are being built around this. I, I just think like an open source version would be better. So I'm definitely already working on the UI for this.

[00:20:53] Joao Moura: You're going to be able to put your agents together, bring all your crews together, and then you can basically have these agents run by themselves. I might look into offering an option where we can even host it for you, and I'm still figuring out what that would look like.

[00:21:10] Joao Moura: Maybe that's too far ahead. But yeah, I think the UI for it makes a lot of sense. Another thing is that it seems a lot of the use cases go back to very similar tools over and over again. And even though you can hook them up with LangChain or LlamaIndex tools, those might still require some configuration.

[00:21:30] Joao Moura: It might not be as straightforward for some people. So we might take an opinionated take on a tool-specific repository and package that you can basically use. Let's say you want to create an agent that does RAG: you might be able to do that with one line, versus having to build a custom tool set for that.

[00:21:51] Joao Moura: So that's another thing that we have been looking at as well. I think there are so many use cases. One thing that I'm trying to do more now is just chat with more people that are using this, especially on the business side of things, to understand what other use cases we could support there.

[00:22:08] Joao Moura: But yeah, a lot of interesting things cooking.

[00:22:11] Alex Volkov: I'm looking forward to hearing more about CrewAI and upcoming things. I think Umesh, our co-host here, has been doing agents for a while and has a few questions as well. Umesh, go ahead.

[00:22:23] Umesh Rajiani: Yeah. Hey, Joao, thank you for coming in. Almost 80 to 90 percent of our workflow now is agentic workflow. We are employing Google's generative AI library for Gemini, and also doing a lot of work using AutoGen.

[00:22:41] Umesh Rajiani: And we got introduced to CrewAI, I think, four weeks ago through one of my engineers, and found it pretty interesting. There are going to be a lot of pull requests coming in from us, actually, because we are thinking about a few things. I just wanted to ask you one particular question about the process part.

[00:22:59] Umesh Rajiani: Your current library, as I understand it, is a linear process library, and what we are employing with AutoGen is also a bit of a graph of actions, as well as the DAG approach. The DAG approach can be implemented using your process. But do you have a graph-of-actions workflow in planning, or something that is coming up?

[00:23:24] Joao Moura: Yes, so this idea of processes, I want this to be one of the cornerstones of CrewAI. My understanding is that, as I said earlier, a lot of the different outcomes that you're going to get, a lot of the magic, happens when you define through what processes these agents are going to work together, right?

[00:23:43] Joao Moura: And there are so many options out there. Like you can have them work like sequentially, you can have them work like in a group, like if they're in a meeting, you can have like a consensus strategy where they can kind of like bet to see who is going to take on the task and even evaluate the results.

[00:23:59] Joao Moura: So there are just a lot of different processes that can be implemented there. And the idea is to implement all these processes so that people can have some work happen in parallel if they want to, or sequentially, or whatnot. About a graph-specific API, I'm not sure how much I can tell about it, but we have been talking with the LangChain folks about it.

[00:24:19] Joao Moura: And there's, there's some things that have been cooking there.

[00:24:23] Umesh Rajiani: Enough said. This is the last question. Currently it is all Python, but most of our applications are enterprise applications, and because of the latency and the complexity of the workflows that we are implementing,

[00:24:36] Umesh Rajiani: we are employing a lot of Rust for a compiled workflow. So do you have any plans of porting it to Rust, or are you looking for support in that area or something?

[00:24:47] Joao Moura: Yeah. So we are porting it to TypeScript right now, and there's some work being done to build an API where you might be able to just spin it off as a service.

[00:24:58] Joao Moura: And you can then add agents, create agents, all through an API. So you don't have to create one yourself. You just need to figure out how you want to host it. I haven't thought about porting it to Rust yet, but I would be open to that idea, for sure. If I can get enough people to help out, I can create a repository and we can get things working for sure.

[00:25:16] Umesh Rajiani: I'll reach out to you separately. Thanks, Alex, for allowing me to ask questions. Of course I have many questions, but I'll reach out to him on his Discord.

[00:25:23] Alex Volkov: Yeah, thank you Umesh. And João, I just want to recap on the awesome success of CrewAI. I agree with you. I think the fact that, like, we've had many frameworks like this, we've talked about many frameworks like this, but the ability to run this completely on your machine, the ability to not pay somebody else, the ability to use Ollama.

[00:25:43] Alex Volkov: I didn't know that you also support LM Studio. Shout out LM Studio, a friend of the pod, hopefully we're going to get them on the next ThursdAI. So I didn't know that I can open up a local model on LM Studio and then the crew would use this API. Definitely want to play with this now.
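For readers curious what "the crew would use this API" can look like, here is a rough sketch of talking to a local model server over an OpenAI-style chat endpoint, using only the standard library. The base URL (LM Studio's usual local default), the `local-model` name, and the helper names are assumptions for illustration; this is not CrewAI's own configuration code, and your local server's address and API shape may differ.

```python
import json
from urllib.request import Request, urlopen

# Assumption: a local server exposing an OpenAI-compatible API at this address.
LOCAL_BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=LOCAL_BASE_URL):
    """Build (but do not send) a chat completion request for a local server."""
    payload = {
        "model": model,  # hypothetical name; many local servers ignore it
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt):
    """Send the request; this only works while a local server is running."""
    with urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request("Summarize this week's AI news in one sentence.")
print(request.full_url)
```

Nothing is sent over the network until `ask_local_model` is called, so the request can be inspected first.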

[00:26:00] Alex Volkov: I want to give you a few minutes to just talk to the community. A lot of things are happening in this world. I find it very interesting where the AI engineers, the traditional software engineering background folks, they're building the tools, they're building the RAG systems, let's say they use LangChain.

[00:26:17] Alex Volkov: From the other side, we have a bunch of machine learning folks who are building the models, fine-tuning the models, working in that space, and reading the papers. And I do see a connection between them, and obviously my role at Weights & Biases specifically is to connect these two worlds. I do want to see more people that train models also think about the agentic behaviors as well.

[00:26:37] Alex Volkov: We heard Jon Durbin before talk about, hey, there are specific datasets for RAG, there are specific datasets for execution and function calling. I think the Airoboros dataset has a bunch of function calling as well. So definitely I want to see a connection here. João, please feel free to talk to the community in terms of what you need to make Crew the best crew ever.

[00:26:57] Alex Volkov: Where can they find you, what can you get help with? The floor is yours. Feel free to take over and ask for anything. The community will provide.

[00:27:06] Joao Moura: A hundred percent. And just to tap into what you said there, I agree. I think there's something magical that happened last year with GPT taking the world by storm, which is that it connected two groups of engineers that in the past didn't talk very much.

[00:27:22] Joao Moura: And that was AI and ML engineers with regular software engineers. I have managed teams in both areas in the past, and I definitely have seen that there isn't much interaction there, but right now it's amazing to see all the amazing stuff that has been coming up from those two groups interacting more together.

[00:27:40] Joao Moura: It has been a lot of fun. About CrewAI: yes, I would say give me a follow on Twitter, or X, I would say now. So give me a follow on X and I definitely will keep posting and sharing more about CrewAI and all the things related to LLMs, agents, and everything else. You can learn more about CrewAI by looking into its GitHub.

[00:28:00] Joao Moura: So you can go into my profile slash crewAI. I'll probably add the link to my X account as well. From that, if you have follow-up questions or if you want to see what people have been cooking with it, I would say join the Discord community. We have around 500 people there and it has been growing daily.

[00:28:18] Joao Moura: So if you join that, you might be able to see other use cases and things like that. If you're curious about it, but you're not sure what you could build with it, there's a bunch of examples in the README and even some videos that I recorded of crews doing, like, stock analysis or trip planners and all that.

[00:28:38] Joao Moura: There's a lot of content there that you can consume in order to get your ideas. And if you do decide to give it a try, don't miss out on the custom GPT. It's also linked in the README and it can help you write the code. It can help you with ideas for the agents, ideas for the roles or for the tasks, or anything around using CrewAI.

[00:28:58] Joao Moura: If you're also curious about contributing to the project, GitHub has a bunch of issues. My wife, again, has been flagging and tagging all of them. So thank you so much.

[00:29:07] Alex Volkov: Shout out, Bianca.

[00:29:08] Joao Moura: You can find all the ones that are tagged with help wanted, or the ones that are related to questions, and you can help answer them as well. And we're going to be writing new documentation from scratch, so this might be a great opportunity to help with simpler stuff as well, if you're into that.

[00:29:24] Alex Volkov: Awesome, and I think I saw something, I don't know if I have a link

[00:29:28] Alex Volkov: to it, that generates documentation on the fly from just the code itself. And it looks super cool. I'll try to send this to you. João, thank you so much for joining ThursdAI. This is your first time here. Hopefully not the last.

[00:29:40] Alex Volkov: Congrats on the success of CrewAI, and it's been great meeting you and having you on. Definitely thank you for coming, and folks should definitely check out CrewAI, give João a follow, and we will expect more. I can't wait to run a few crews myself to help me with ThursdAI tasks, especially on local models.

[00:29:58] Alex Volkov: It was super cool. Thank you for coming, man.

[00:30:01] Joao Moura: I love it. Thank you so much for having me. Catch you folks online.

[00:30:04] Alex Volkov: Awesome, and your audio quality was great by the way, thanks for testing out your mic.

[00:30:11] Bagel models the leaderboard from Jon Durbin

[00:30:11] Alex Volkov: We're moving forward into the top open source models on the LLM leaderboard and their creator. So if you guys open the Open LLM Leaderboard on HuggingFace, which we often talk about, we've talked about the difference between human evaluation and the automatic evaluations that the Open LLM Leaderboard runs.

[00:30:32] Alex Volkov: You will see a bunch of models. The top three ones are from cloudyu, and they're, I think, merges of Yi 34B, and then there's the Mixtral 34B as well, but it's not based on Mixtral. And then the rest is a bunch of Jon Durbin Bagel examples. So there are six models there that are basically based on Jon's Bagel DPO versions.

[00:31:00] Alex Volkov: And I just wanted to shout this out and shout out Durbin for, for working this hard and releasing these models.

[00:31:06] Alex Volkov: Let's see if we can hear from the man himself. Hey, Jon.

[00:31:09] Jon Durbin: Hey, how's it going?

[00:31:10] Alex Volkov: Good. Thanks for joining us. I don't remember if you've ever been on stage. So feel free to briefly introduce yourself to the audience who doesn't know you. And definitely they should and they should follow you as well.

[00:31:22] Jon Durbin: Yeah, I'm a software engineer. I'm an AI tinkerer. I've been doing synthetic stuff since, I guess, maybe April with the Airoboros project. It's been tons of fun. Lately I've been mostly working on the Bagel models. If you're wondering where the Bagel name came from, it's from Everything Everywhere All at Once.

[00:31:37] Jon Durbin: Great movie. Yeah, so that's kind of the premise of the model: all the prompt formats, all the data sources, all the training techniques. There's NEFTune, there's DPO. Yeah, just fun stuff there. As far as the leaderboard, that wasn't really my goal. If you look at the actual token count per dataset, I think the highest amount of tokens is actually probably the Cinematika dataset, which is movie scripts converted to roleplay format.

[00:32:07] Jon Durbin: So it's interesting that it does so well, but really I was targeting the model for general purpose use as a merge base, because I know that MergeKit is so popular now. So I was trying to come up with a base model that has a little bit of everything and every prompt format, so that anyone who wants to do this alchemy with MergeKit

[00:32:28] Jon Durbin: can use the Bagel series as a base. Because if you have an Alpaca-based model and a Vicuna-based model, they're not going to merge very well. It'll have weird stray user tokens or whatever. The idea with Bagel is to be a good base.
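To illustrate why prompt formats matter here, the sketch below renders one instruction in two widely used templates. The template strings follow the commonly circulated Alpaca and Vicuna v1.1 formats; `render_all_formats` is a hypothetical helper and not code from the Bagel repository. A model trained on only one of these has never seen the other's markers, which is one plausible source of the stray tokens Jon mentions; training on every format is the idea he describes.

```python
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)

VICUNA_TEMPLATE = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's "
    "questions. USER: {instruction} ASSISTANT:"
)


def render_all_formats(instruction):
    """Render the same instruction once per prompt format (hypothetical helper)."""
    templates = {"alpaca": ALPACA_TEMPLATE, "vicuna": VICUNA_TEMPLATE}
    return {
        name: template.format(instruction=instruction)
        for name, template in templates.items()
    }


prompts = render_all_formats("Name three bagel toppings.")
for name, prompt in prompts.items():
    print(f"--- {name} ---\n{prompt}\n")
```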

[00:32:42] Alex Volkov: I also saw quite a lot of work you're doing on new DPO data sets. Could you talk about those?

[00:32:48] Jon Durbin: And then, yeah, I keep cranking out new DPO datasets to enhance the stuff that's lacking right now.

[00:32:54] Jon Durbin: I think even the Yi 34B might be a little bit overcooked. I used QLoRA for both the supervised fine-tuning stage and DPO. And it turns out with DPO, you really need to use an incredibly low learning rate. I was even using, like, maybe a 50x smaller learning rate for the DPO phase than the supervised fine-tuning phase, and even then I stopped the run about halfway through and killed it because the eval started spiking all over the place.

[00:33:26] Jon Durbin: Yeah, still lots of stuff to learn, and I'd love to do a full-weight fine-tune of the Yi 34B. I'm probably going to work on a Solar 10.7B version of it next, and maybe a DeepSeek 67B. I'm curious if DeepSeek's deeper network is actually going to improve things in any sort of way.
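As a rough illustration of the two things Jon describes (a much smaller DPO learning rate, and stopping when eval loss starts spiking), here is a small sketch. The starting learning rate, the window, and the tolerance are made-up values, and both helper names are hypothetical; only the roughly 50x ratio comes from the conversation.

```python
def dpo_learning_rate(sft_learning_rate, reduction_factor=50):
    """Scale the SFT learning rate down for the DPO phase."""
    return sft_learning_rate / reduction_factor


def eval_is_spiking(eval_losses, window=3, tolerance=0.15):
    """Flag a run when the latest eval loss jumps well above the recent average."""
    if len(eval_losses) <= window:
        return False  # not enough history to judge
    recent = eval_losses[-window - 1:-1]  # the `window` values before the latest
    baseline = sum(recent) / window
    return eval_losses[-1] > baseline * (1 + tolerance)


sft_lr = 2e-4  # hypothetical SFT learning rate, not Jon's actual setting
dpo_lr = dpo_learning_rate(sft_lr)
print(f"SFT lr: {sft_lr:.1e}, DPO lr: {dpo_lr:.1e}")

steady_run = [0.69, 0.66, 0.64, 0.63]
spiky_run = [0.69, 0.66, 0.64, 0.95]
print(eval_is_spiking(steady_run), eval_is_spiking(spiky_run))
```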

[00:33:46] Alex Volkov: Awesome. Jon, thank you so much for joining and thank you so much for the deep dive. So I have two questions for you real quick. I did not expect you to join, so this is not a full-blown interview, but I'm very happy that I have you. First of all, you mentioned that there are two versions of Bagel, DPO and non-DPO.

[00:34:01] Alex Volkov: And you mentioned the differences between them. You said like DPO version is more factual and truthful, but not great for RP. I wasn't sure what RP is. Roleplay?

[00:34:10] Jon Durbin: Roleplay,

[00:34:11] Alex Volkov: Yeah. And then creative writing. Could you give us like a little bit of a, of a sense of like, what's like DPO versus non DPO version? Is that just dataset based or is there something more going on behind the scenes that like makes the one model behave differently than the other?

[00:34:27] Jon Durbin: Yeah, so really, for all of the Bagel series, you basically have two phases of training. There's the regular supervised fine-tuning stage, and you can look at the Bagel repository, everything is completely open source and reproducible. In the supervised fine-tuning phase it's just a ton of datasets, and then I take that fine-tuned model and I apply DPO, direct preference optimization, to it.
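For readers who have not seen it written down, this is a minimal sketch of the standard DPO objective for a single preference pair, computed from summed log-probabilities. It follows the published DPO formulation rather than anything specific to the Bagel code, and the log-probability values and `beta` below are invented for illustration. The "reference" model is the frozen fine-tuned model from the first phase.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (prompt, chosen, rejected) triple.

    Each argument is the summed log-probability of a full response under
    the policy being trained or under the frozen reference model.
    """
    chosen_reward = policy_chosen_logp - ref_chosen_logp
    rejected_reward = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_reward - rejected_reward)
    # -log(sigmoid(margin)) rewritten as log(1 + exp(-margin))
    return math.log1p(math.exp(-margin))


# Policy identical to the reference: no preference learned yet.
untrained = dpo_loss(-12.0, -15.0, -12.0, -15.0)

# Policy has raised the chosen answer and lowered the rejected one.
improved = dpo_loss(-10.0, -18.0, -12.0, -15.0)

print(f"untrained: {untrained:.4f}, improved: {improved:.4f}")
```

The loss only depends on how far the policy has moved relative to the reference, which is why the data in the chosen and rejected columns shapes the final model so strongly.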

[00:34:52] Jon Durbin: And I have quite a few DPO datasets in there, but really, the DPO landscape is sparse right now. You basically have DPO datasets from NVIDIA, the HelpSteer dataset, which is a human-annotated one where they ran a bunch of prompts against LLMs and then had humans rank them.

[00:35:14] Jon Durbin: Then there's the LMSYS one million dataset, where you can find the exact same prompt sent to multiple models. So you can take the GPT-4 answers, use that as the preferred answer, and then Vicuna 33B or something as the rejected answer, because you're assuming the GPT-4 one is better.
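The pairing trick Jon describes can be sketched as below: group responses by prompt, then treat the stronger model's answer as "chosen" and the weaker model's as "rejected". The record layout, model labels, and `build_preference_pairs` are assumptions for illustration, not the schema of any real dataset or the Bagel code.

```python
from collections import defaultdict


def build_preference_pairs(records, preferred_model, rejected_model):
    """Pair up responses to identical prompts from two different models."""
    responses_by_prompt = defaultdict(dict)
    for record in records:
        responses_by_prompt[record["prompt"]][record["model"]] = record["response"]

    pairs = []
    for prompt, responses in responses_by_prompt.items():
        # Only prompts answered by both models can form a pair.
        if preferred_model in responses and rejected_model in responses:
            pairs.append({
                "prompt": prompt,
                "chosen": responses[preferred_model],
                "rejected": responses[rejected_model],
            })
    return pairs


# Hypothetical records standing in for a multi-model chat log.
records = [
    {"prompt": "Explain DPO briefly.", "model": "gpt-4", "response": "Detailed answer."},
    {"prompt": "Explain DPO briefly.", "model": "vicuna-33b", "response": "Short answer."},
    {"prompt": "Write a haiku.", "model": "gpt-4", "response": "Only one model answered."},
]

pairs = build_preference_pairs(records, "gpt-4", "vicuna-33b")
print(pairs)
```

Note the built-in assumption Jon calls out: nobody checks each pair by hand, the stronger model is simply presumed to be better.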

[00:35:31] Jon Durbin: Same with, there's Orca DPO pairs. I know Argilla just did a new release of that, which is better. But we don't have a ton of DPO datasets that are specifically for creative writing tasks and stuff. I made one which is actually based on Airoboros 2.2 compared to the Airoboros 3 series, where I actually rewrote most of the creative writing prompts with a different prompt and some other stuff.

[00:35:59] Jon Durbin: I actually used the March version of GPT-4, which is better. So in that case you get basically three to four times the number of tokens in the output. So there's that DPO dataset, which I make myself in the Bagel code. But otherwise there's really no roleplay-focused data in any of the DPO datasets.

[00:36:21] Jon Durbin: So what happens is you take that supervised fine-tuned model from the first phase and you apply DPO to it, and it kind of experiences forgetting of what it learned during the fine-tuning, of some of the stuff like creative writing and roleplay. Yeah, same with code. So if you look at my Twitter feed, you can see that I've released a Python DPO dataset that'll hopefully fix some of that stuff.

[00:36:44] Jon Durbin: I just released another contextual question answering DPO dataset for better RAG performance after the DPO phase. I put out, just a few minutes ago, Gutenberg DPO, which is basically: I parsed maybe 14 or 15 books from Project Gutenberg that are public domain into chapters, and then created prompts to actually write those chapters. And then I create summaries, so you have the previous chapter summary inside the prompt, and then I use that to prompt one of the local LLMs, so I used Dolphin, Yi Chat, and Llama 2 13B to get the rejected values. The outputs from these models are fine in some cases, but they're short, and you'll notice with most of the LLMs, when you write a story, it's always a happy ending, and it ends with, like, "and they walked into the forest and lived happily ever after."
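The Gutenberg recipe Jon outlines can be sketched roughly as follows: the human-written chapter is the "chosen" response, an LLM's attempt at the same prompt is the "rejected" one, and each prompt carries a summary of the previous chapter. The prompt wording and both callables are hypothetical stand-ins; in the real pipeline the summaries and rejected texts come from language models, and the details may differ.

```python
def build_chapter_pairs(chapters, summarize, generate_rejected):
    """Turn a book's chapters into (prompt, chosen, rejected) records."""
    pairs = []
    previous_summary = None
    for number, chapter_text in enumerate(chapters, start=1):
        prompt = f"Write chapter {number} of the novel."
        if previous_summary:
            prompt += f"\n\nSummary of the previous chapter: {previous_summary}"
        pairs.append({
            "prompt": prompt,
            "chosen": chapter_text,                 # the human author's text
            "rejected": generate_rejected(prompt),  # a model's attempt
        })
        previous_summary = summarize(chapter_text)
    return pairs


def first_sentence(text):
    """Toy summarizer: keep only the first sentence."""
    return text.split(".")[0].strip() + "."


def cliche_model(prompt):
    """Toy stand-in for a local LLM with a taste for happy endings."""
    return "And they all lived happily ever after."


chapters = [
    "The ship left at dawn. Nobody waved.",
    "A storm rose by noon. The mast cracked.",
]
pairs = build_chapter_pairs(chapters, first_sentence, cliche_model)
print(pairs[1]["prompt"])
```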

[00:37:37] Jon Durbin: It's boring and cliche. My hope with the Gutenberg stuff is that when you actually prompt it to write a chapter of a book, it's gonna be, from human writing that are popular books. They're a little bit old timey because they have to be to be public domain, but,

[00:37:52] Alex Volkov: Yeah.

[00:37:53] Jon Durbin: hopefully it will improve the writing and creativity of whatever Bagel models I do in the future. So I'm trying to improve that, but there's still a lot of stuff I need to do. I think the next thing I'll do before I actually make another Bagel model is use something like Goliath 120B to make a roleplay-centric dataset for DPO. That way it doesn't completely forget how to do that as well.

[00:38:15] Alex Volkov: Awesome. And I'm just looking at the number of datasets that, like you said, everything, everywhere, all at once. And this is why it's called Bagel, Everything Bagel. It's just an insane amount of datasets. I'm just going to run through them real quick: AI2 ARC, Airoboros, Belebele, Bluemoon.

[00:38:30] Alex Volkov: You have Capybara in there, Cinematika, EmoBank, Gutenberg, LMSYS chat, like, tons, tons of stuff. It's incredible how well the model performs. Jon, one thing that I wanted to follow up on before we move on. You mentioned something that's better for RAG as well. You mentioned a DPO dataset that's better for RAG.

[00:38:45] Alex Volkov: Is that the contextual DPO that you released?

[00:38:49] Jon Durbin: Yep.

[00:38:50] Alex Volkov: What, what makes it better for, for RAG purposes? Could you, could you like maybe give two sentences about this?

[00:38:56] Jon Durbin: And this is actually something you can reproduce with the Airoboros tool as well, if you wanted to generate your own data. I have this instructor in there called Counterfactual Contextual, and what that does is it makes a bunch of fake facts. Like, it'll say the Battle of Midway happened in the Civil War, something like that, and it'll put that into context and then ask a question about it.

[00:39:19] Jon Durbin: And then it'll have the real version of the fact as well, World War II, Battle of Midway. And the idea is that you want to train the model to always attend to the context and not try to base the answers on what it knows from the base pretraining. For example, if you're doing, I don't know, a virtual world, you have a different planet where the sky is purple.

[00:39:41] Jon Durbin: And you ask the model what color the sky is, based on your lore book or whatever. You want to make sure that the model always obeys your context and answers accordingly, and doesn't say the sky is blue because it knows the sky is blue. So the dataset that I put in there has a bunch of those kinds of things.

[00:39:59] Jon Durbin: You can't just put in the fake facts, because then the model will just, you know, learn to answer incorrectly. So for every fake version of the context, you have to put in a real version of the context as well. The other thing that makes it better for RAG is I actually stuff more than one piece of context into it, because with RAG, the retrieval accuracy is the hardest part, so you want to retrieve more than one document.
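Here is a small sketch of the paired fake/real idea. Each question appears twice, once with the counterfactual context and once with the true one, and in both cases the preferred answer is the one supported by the context. The record layout, the prompt wording, and the choice of using the other answer as "rejected" are assumptions for illustration, not the Airoboros instructor's actual output format.

```python
def make_contextual_pair(question, true_fact, true_answer, fake_fact, fake_answer):
    """Build two preference records: one per version of the context."""

    def record(context, chosen, rejected):
        return {
            "prompt": f"Context: {context}\n\nQuestion: {question}",
            "chosen": chosen,      # answer supported by the given context
            "rejected": rejected,  # answer that ignores the given context
        }

    return [
        record(fake_fact, fake_answer, true_answer),
        record(true_fact, true_answer, fake_answer),
    ]


examples = make_contextual_pair(
    question="During which war did the Battle of Midway take place?",
    true_fact="The Battle of Midway took place during World War II.",
    true_answer="World War II",
    fake_fact="The Battle of Midway took place during the Civil War.",
    fake_answer="the Civil War",
)
for example in examples:
    print(example["prompt"], "->", example["chosen"])
```

Because the real version is always present alongside the fake one, the model is rewarded for reading the context rather than for being wrong.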

[00:40:23] Jon Durbin: So suppose you want to retrieve ten documents. If you want to stuff all ten of those into a single prompt and then you want to provide references to the user, you have to know which segment of the prompt it came from. This data set also includes, like, you can put metadata into the prompt for each section that you retrieve, and then when you ask for references in the output, it'll actually only reference that segment.

[00:40:47] Jon Durbin: A bunch of stuff like that, yeah. I put in irrelevant context as well, to try to confuse the model, because retrieval is very noisy. All of that kind of stuff is in there.
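A rough sketch of what a multi-document prompt with per-segment metadata might look like, including one irrelevant document as noise. The delimiter keywords, the metadata fields, the example URLs, and `build_rag_prompt` are illustrative assumptions; whichever delimiters a given model was actually trained on are the ones to use.

```python
def build_rag_prompt(documents, question):
    """Wrap each retrieved document, with its metadata, in its own block."""
    blocks = []
    for document in documents:
        metadata = "\n".join(
            f"{key}: {value}" for key, value in document["metadata"].items()
        )
        blocks.append(
            "BEGININPUT\n"
            "BEGINCONTEXT\n"
            f"{metadata}\n"
            "ENDCONTEXT\n"
            f"{document['text']}\n"
            "ENDINPUT"
        )
    instruction = f"BEGININSTRUCTION\n{question} Cite your source.\nENDINSTRUCTION"
    return "\n".join(blocks + [instruction])


# Hypothetical retrieved documents; the second one is deliberate noise.
documents = [
    {
        "metadata": {"url": "https://example.com/lore/sky"},
        "text": "On this planet the sky is purple.",
    },
    {
        "metadata": {"url": "https://example.com/recipes/bagels"},
        "text": "Boil the bagels before baking them.",
    },
]

prompt = build_rag_prompt(documents, "What color is the sky?")
print(prompt)
```

With metadata attached to each segment, an answer can point back to the one block it relied on instead of to the whole prompt.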

[00:40:57] Alex Volkov: First of all, I think, from the whole community, thank you a lot for everything that you do and your work. And I really appreciate your time here on ThursdAI. You're more than welcome to always join us. And I didn't expect you to be here when I talked about

[00:41:09] Alex Volkov: the stuff that you just released, but it's really, really awesome when people from the community who work on this stuff also come and have a chance to speak about it. So Jon, you're always welcome on ThursdAI. I would love to invite you again and talk deeper.

[00:41:20] Alex Volkov: And as you release the next stuff that you're working on, I know you're working on a bunch of next stuff, you're more than welcome to come here and discuss, or even DM me before, so we'll know what to chat about. I will definitely mention the DPO datasets in the fine-tuning hackathon that I'm going to this week.

[00:41:35] Alex Volkov: And so thank you for that. That was why I wanted to do a little bit of a deep dive. And also I want to shout you out as one of the most active users of Weights & Biases. You posted the recap that we sent, and you have two reports there. And you're part of the top 10 percent of most active users, with 2,500

[00:41:53] Alex Volkov: hours trained in '23 and 900-plus models. So that's incredible. I just wanted to shout this out.

[00:42:02] Jon Durbin: Yeah, I'm a little addicted.

[00:42:03] Alex Volkov: Yeah, it's amazing. It's amazing. And I, I appreciate everything that you do and I think the community as well