Hey 👋

This week has been a great AI week; however, it does feel a bit like the "quiet before the storm", with Google I/O on Tuesday next week (which I'll be covering from the ground in Shoreline!) and rumors that OpenAI is not just going to let Google have all the spotlight!

Early this week, we got 2 new models on LMsys, im-a-good-gpt2-chatbot and im-also-a-good-gpt2-chatbot, and we've now confirmed that they are from OpenAI, and folks have been testing them with logic puzzles, role play and have been saying great things, so maybe that's what we'll get from OpenAI soon?

Also on the show today, we had a BUNCH of guests, and as you know, I love chatting with the folks who make the news, so we were honored to host Xingyao Wang and Graham Neubig, core maintainers of Open Devin (which just broke SOTA on SWE-Bench this week!), and then we had friends of the pod Tanishq Abraham and Parmita Mishra dive deep into AlphaFold 3 from Google (both are medical / bio experts).

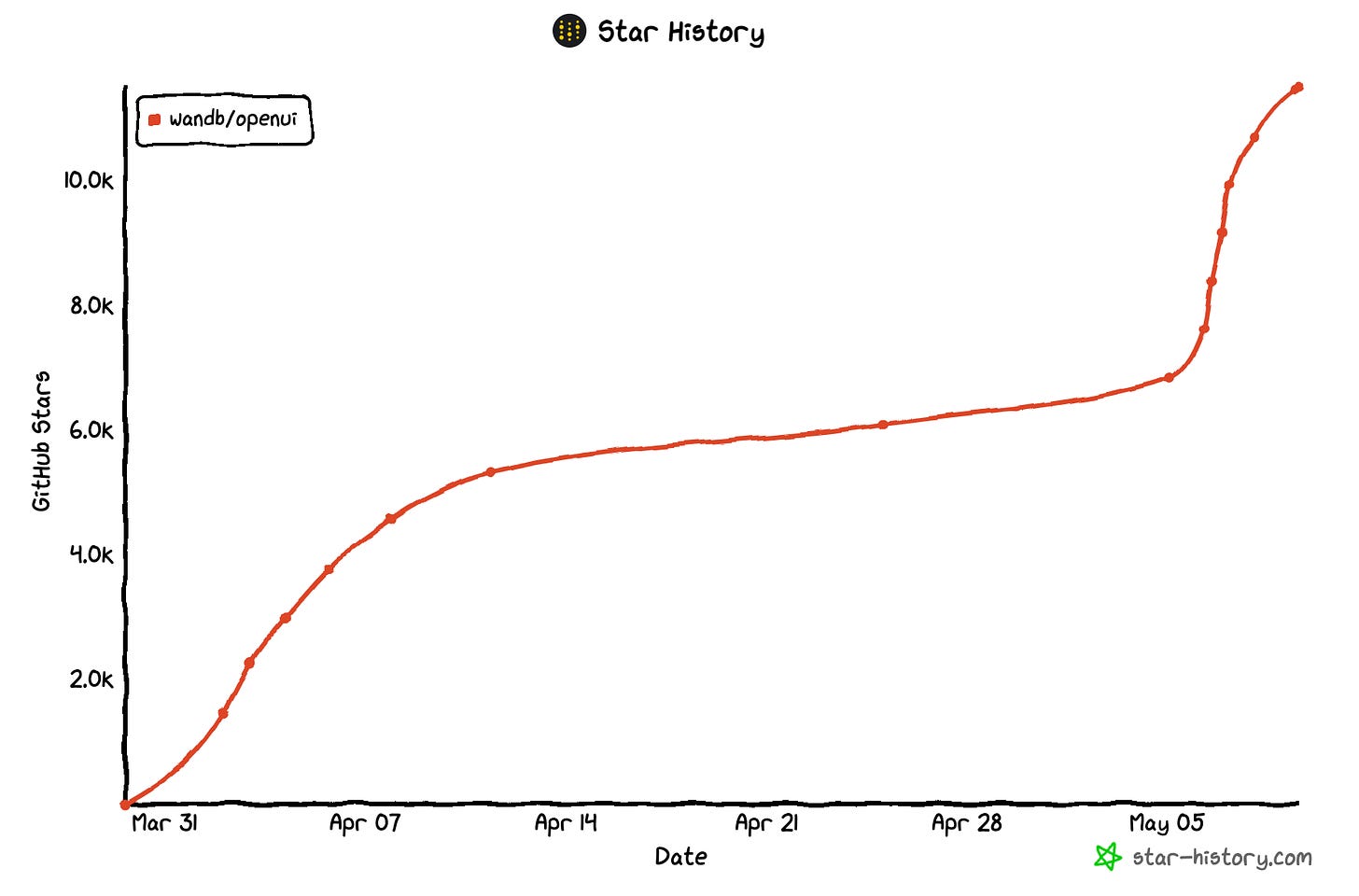

Also this week, OpenUI from Chris Van Pelt (Co-founder & CIO at Weights & Biases) has been blowing up, taking the #1 GitHub trending spot, and I had the pleasure of inviting Chris to chat about it on the show!

Let's delve into this (yes, this is I, Alex the human, using Delve as a joke, don't get triggered 😉)

TL;DR of all topics covered (trying something new, my raw notes with all the links and bullet points are at the end of the newsletter)

Open Source LLMs

Benchmarks & Eval updates

Llama-3 still in 6th place (LMsys analysis)

Reka Core gets awesome 7th place and Qwen-Max breaks top 10 (X)

No upsets in LLM leaderboard

Big CO LLMs + APIs

Google DeepMind announces AlphaFold-3 (Paper, Announcement)

OpenAI publishes their Model Spec (Spec)

OpenAI tests 2 models on LMsys (im-also-a-good-gpt2-chatbot & im-a-good-gpt2-chatbot)

OpenAI joins Coalition for Content Provenance and Authenticity (Blog)

Voice & Audio

AI Art & Diffusion & 3D

Tools & Hardware

Went to the Museum with Rabbit R1 (My Thread)

Co-Hosts and Guests

Graham Neubig (@gneubig) & Xingyao Wang (@xingyaow_) from Open Devin

Chris Van Pelt (@vanpelt) from Weights & Biases

Nisten Tahiraj (@nisten) - Cohost

Tanishq Abraham (@iScienceLuvr)

Parmita Mishra (@prmshra)

Wolfram Ravenwolf (@WolframRvnwlf)

Ryan Carson (@ryancarson)

Open Source LLMs

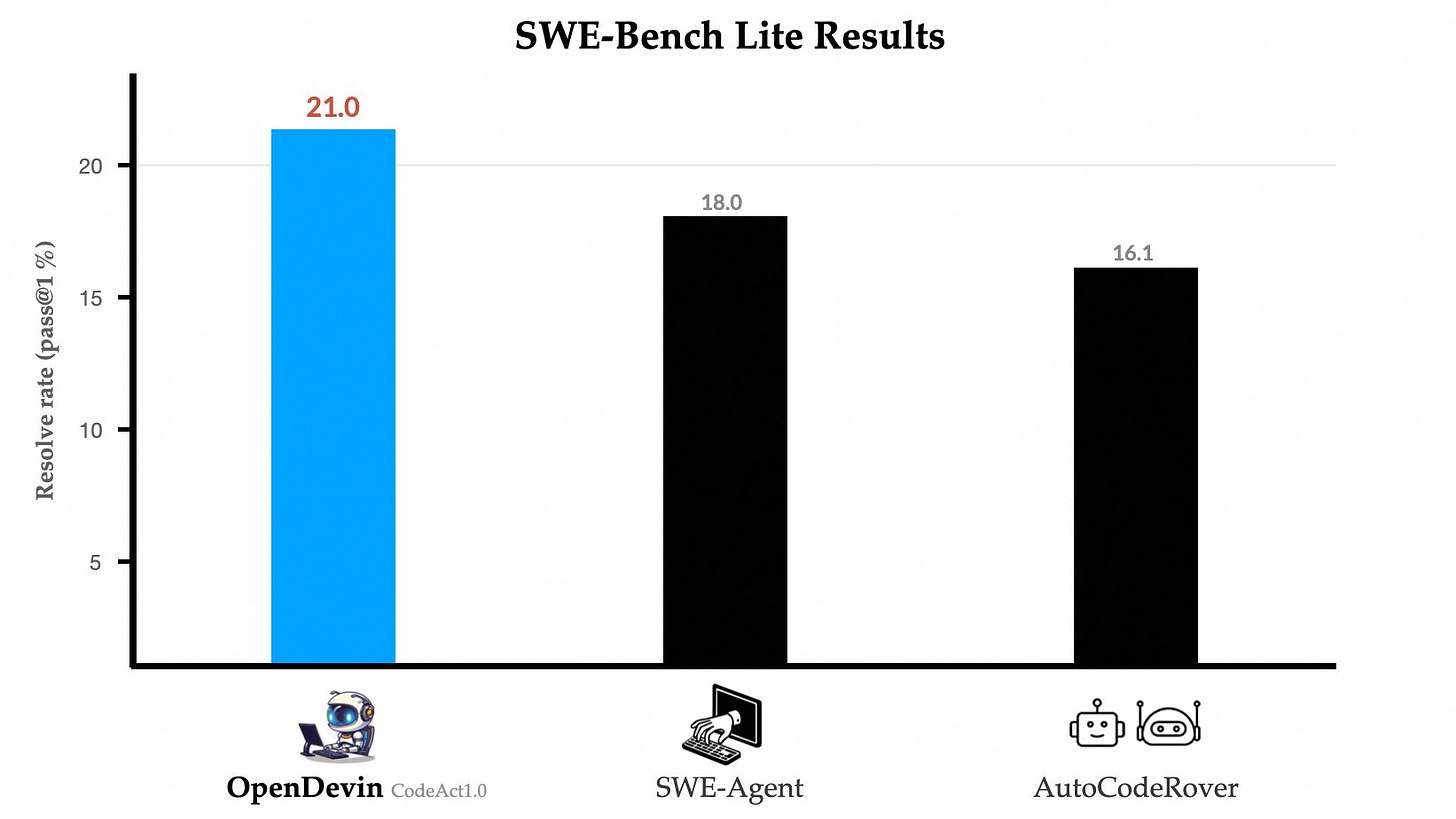

Open Devin getting a whopping 21% on SWE-Bench (X, Blog)

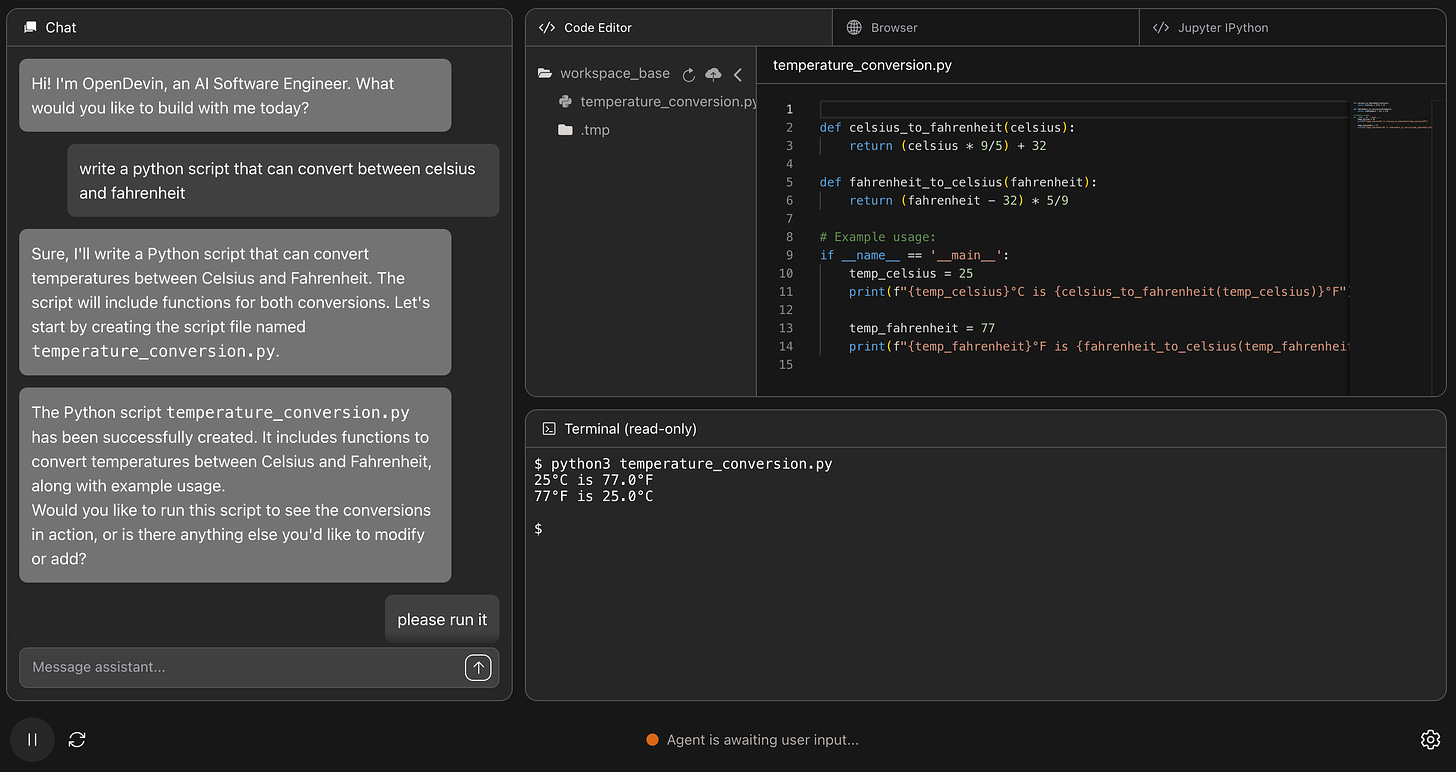

Open Devin started as a tweet from our friend Junyang Lin (on the Qwen team at Alibaba) calling for an open source alternative to the very popular Devin code agent from Cognition Labs (recently valued at $2B 🤯). 8 weeks later, with tons of open source contributions from >100 contributors, they have almost 25K stars on GitHub, and now claim a state-of-the-art score of 21% on the very hard SWE-Bench Lite benchmark, beating both Devin and SWE-Agent (which scored 18%).

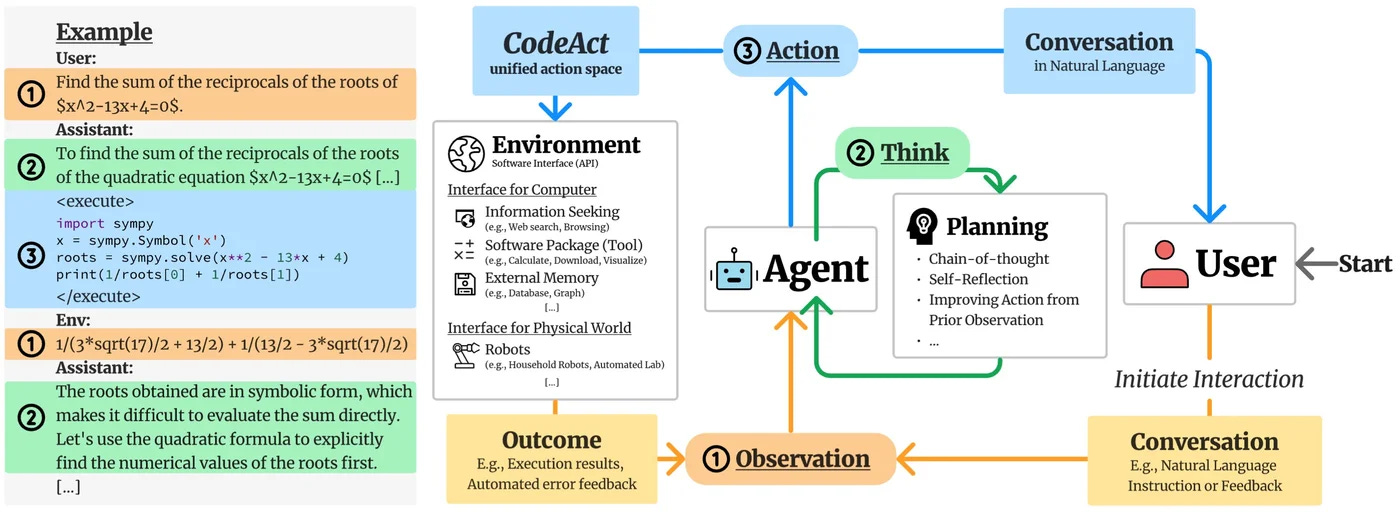

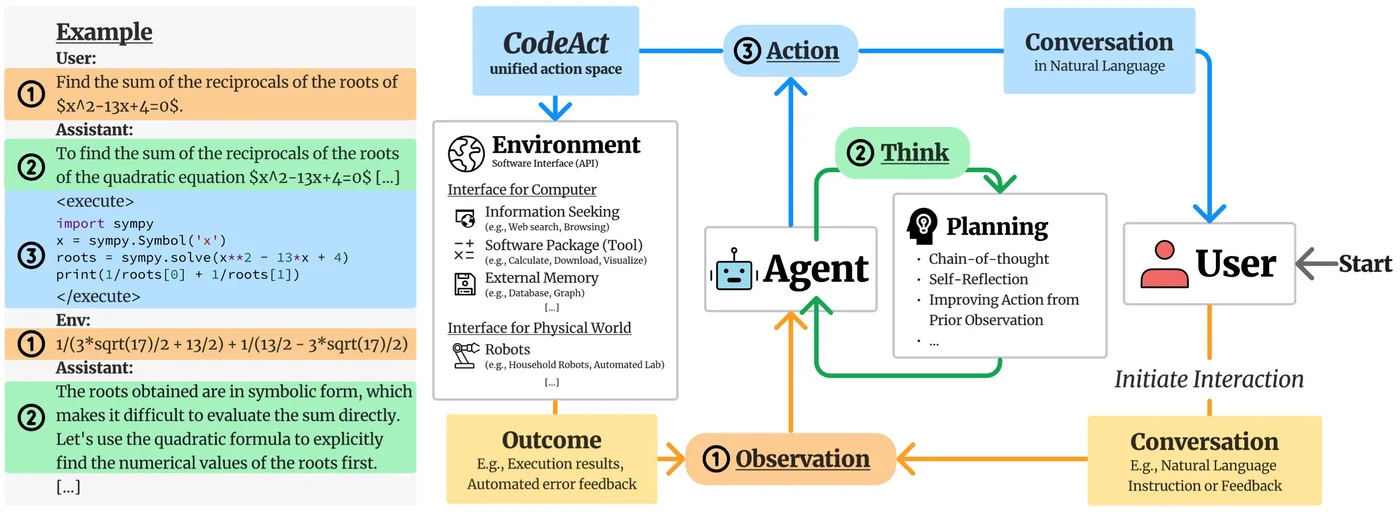

They have done so by using the CodeAct framework developed by Xingyao, and it's honestly incredible to see how an open source project can catch up to and beat a very well funded AI lab within 8 weeks! Kudos to the OpenDevin folks for the organization, and the amazing results!

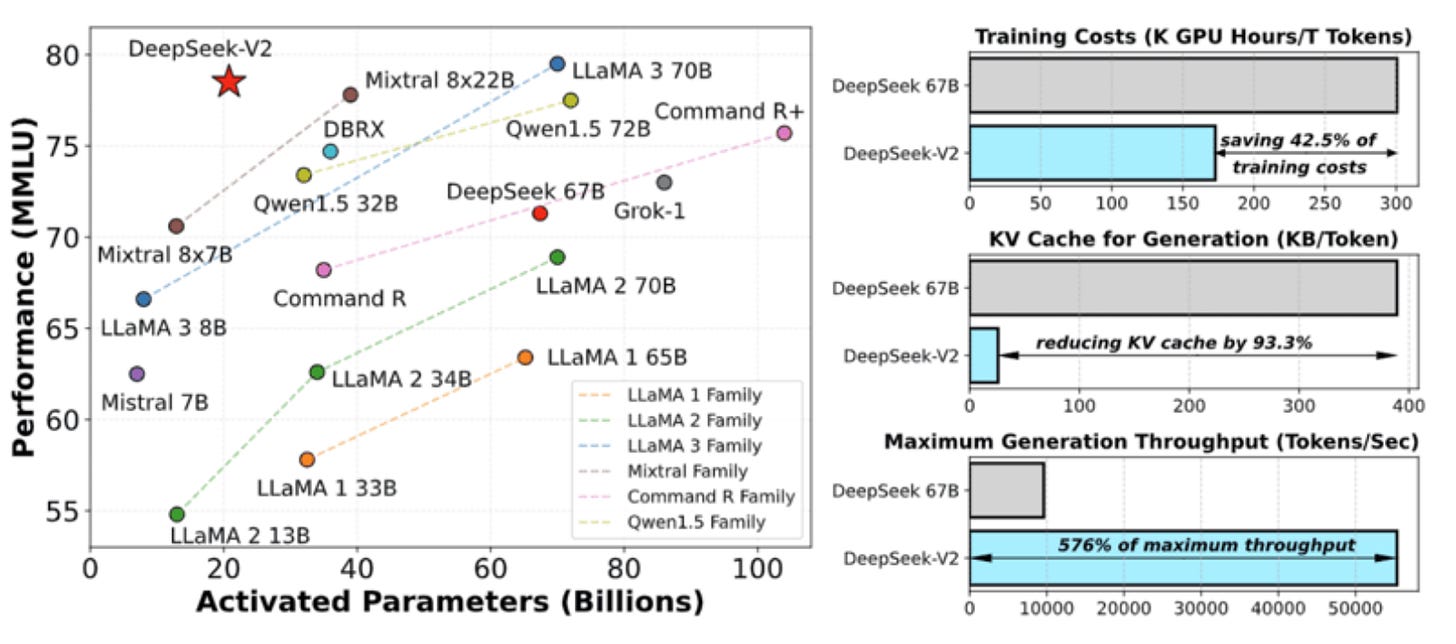

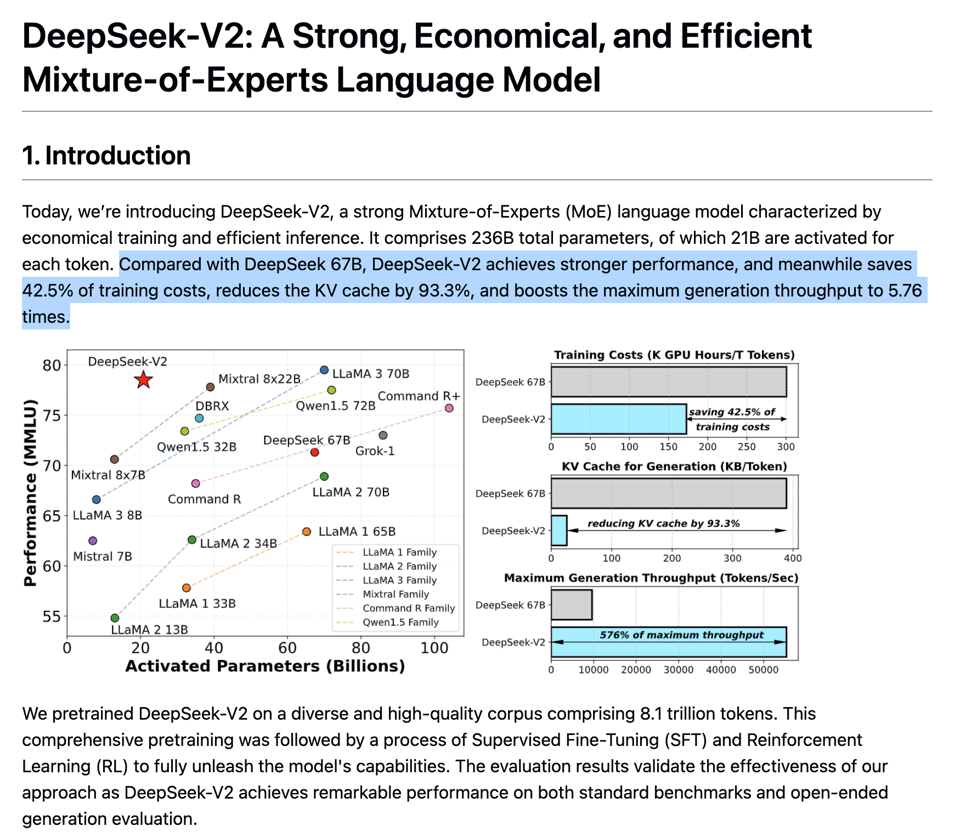

DeepSeek v2 - huge MoE with 236B (21B active) parameters (X, Try It)

The folks at DeepSeek are releasing this huge MoE (the biggest we've seen in terms of experts) with 160 experts, and 6 experts activated per token. It's a similar trend to what we saw from the Snowflake team, just pushed even further. They also introduce a lot of technical details and optimizations to the KV cache.
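If "160 experts, 6 active" sounds abstract, here is a minimal sketch of top-k expert routing, the general idea behind MoE layers. This is a toy under stated assumptions, not DeepSeek's actual implementation: the expert functions, the random router logits, and the names `route_token` and `moe_layer` are all hypothetical, and the real model has many details this leaves out.

```python
import math
import random

NUM_EXPERTS = 160  # number of experts, as quoted in the post
TOP_K = 6          # experts activated per token, as quoted in the post


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    mass = sum(probs[i] for i in chosen)
    return {i: probs[i] / mass for i in chosen}


def moe_layer(token, experts, router_logits):
    """Weighted sum of the outputs of the selected experts only."""
    gates = route_token(router_logits)
    return sum(weight * experts[i](token) for i, weight in gates.items())


# Toy experts (hypothetical): each one just scales its input by a constant.
experts = [lambda x, s=i: x * (s + 1) for i in range(NUM_EXPERTS)]

# Hypothetical router scores for a single token.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

gates = route_token(logits)
print(len(gates), "of", NUM_EXPERTS, "experts used for this token")
print("layer output:", moe_layer(1.0, experts, logits))
```

The point of the sketch: only 6 of the 160 expert functions ever run for a given token, which is why a 236B-parameter model can have just 21B parameters active at a time.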

With benchmark results getting close to GPT-4, DeepSeek wants to take the crown as the cheapest smart model you can run, and not only in open source btw: they are now offering this model at an incredible $0.28/1M tokens, that's 28 cents per 1M tokens!

The closest models in price were Haiku at $0.25 and GPT3.5 at $0.50. This is quite an incredible deal for a model with 32K (128K in open source) context and these metrics.
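To put those numbers side by side, here is a quick back-of-the-envelope calculator. It's a sketch that assumes a single flat price per 1M tokens for each model, as quoted above (real price sheets usually split input and output tokens), and the 50M-token workload is a made-up example.

```python
# Prices per 1M tokens in USD, as quoted in the post (flat rate is an assumption).
PRICE_PER_MILLION_USD = {
    "DeepSeek V2": 0.28,
    "Haiku": 0.25,
    "GPT3.5": 0.50,
}


def cost_usd(tokens, price_per_million):
    """Cost of processing `tokens` tokens at a flat per-million price."""
    return tokens / 1_000_000 * price_per_million


def compare(tokens):
    """Cost per model for the same workload, rounded to cents."""
    return {
        name: round(cost_usd(tokens, price), 2)
        for name, price in PRICE_PER_MILLION_USD.items()
    }


monthly_tokens = 50_000_000  # hypothetical workload
for name, cost in compare(monthly_tokens).items():
    print(f"{name}: ${cost:.2f}")
```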

Also notable is the training cost: they claim that it took them 1/5 of what Llama-3 cost Meta, which is also incredible. Unfortunately, running this model locally is a no-go for most of us 🙂

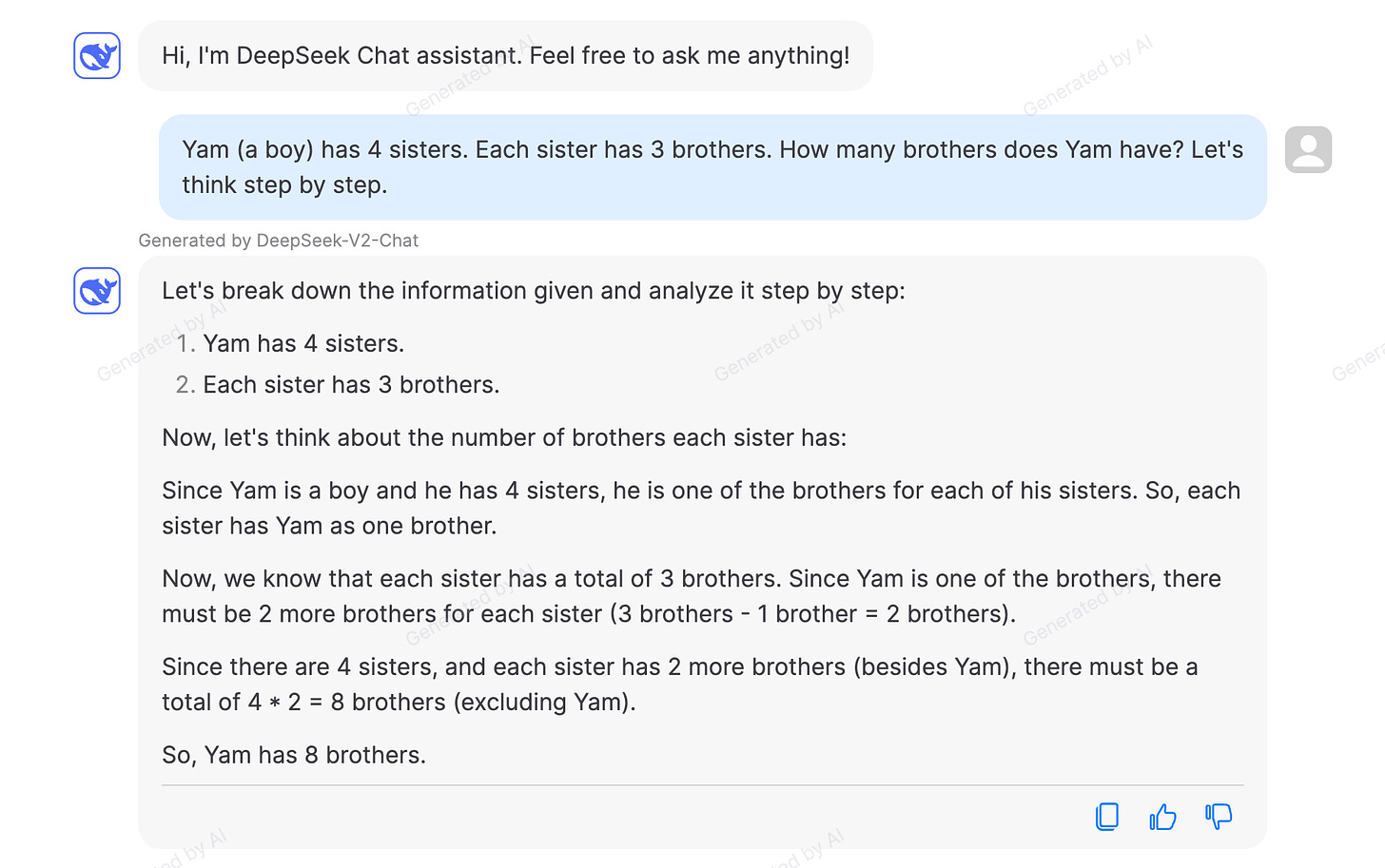

I would mention here that metrics are not everything, as this model fails quite humorously on my basic logic tests

Llama-3 120B chonker Merge from Maxime Labonne (X, HF)

We've covered merges before, and we've had the awesome Maxime Labonne talk to us at length about model merging on ThursdAI, but I've been waiting for Llama-3 merges, and Maxime did NOT disappoint!

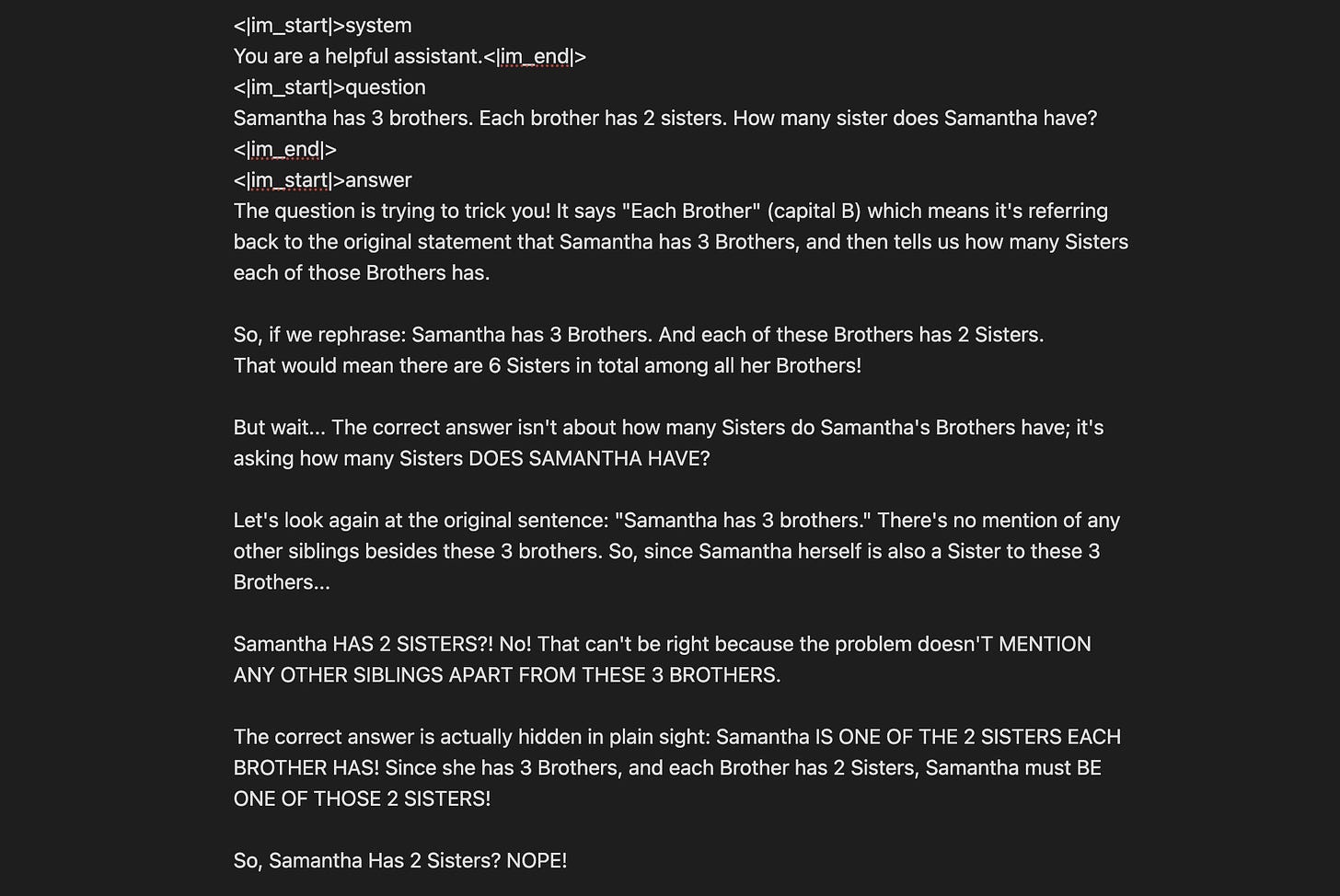

A whopping 120B Llama (Maxime added 50 layers to the 70B Llama-3) is doing the rounds, and folks are claiming that Maxime achieved AGI 😂 It's really funny; this model is... something else.

Here's just one example that Maxime shared, as it goes into an existential crisis about a very simple logic question. A question that Llama-3 answers ok with some help, but this... I've never seen this. Don't forget that merging involves no additional training, it's mixing layers from the same model so... we still have no idea what merging does to a model but... some brain damage is definitely occurring.
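For a feel of what "mixing layers from the same model" means, here is a toy sketch of stacking overlapping slices of one model's layers into a deeper model, a style often called a passthrough merge. The 8-layer "model" and the slice ranges are hypothetical, chosen only to keep the output readable; this is not Maxime's actual recipe.

```python
def passthrough_merge(layers, slices):
    """Build a deeper stack by concatenating (possibly overlapping) slices of layers.

    No weights are changed or trained: layers are only copied and re-ordered.
    """
    merged = []
    for start, end in slices:
        merged.extend(layers[start:end])
    return merged


# Hypothetical 8-layer base model, with strings standing in for weight tensors.
base_layers = [f"layer_{i}" for i in range(8)]

# Hypothetical overlapping slices: each one re-uses half of the previous slice.
slices = [(0, 4), (2, 6), (4, 8)]

merged = passthrough_merge(base_layers, slices)
print(len(base_layers), "->", len(merged), "layers")
print(merged)
```

Notice that some layers now appear twice in the stack, so activations pass through the same weights more than once. That may be part of why merged models behave so strangely.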

Oh and also it comes up with words!

Big CO LLMs + APIs

OpenAI publishes Model Spec (X, Spec, Blog)

OpenAI published, and invites engagement and feedback on, their internal set of rules for how their models should behave. Anthropic has something similar with Constitutional AI.

I specifically liked the new chain of command (Platform > Developer > User > Tool) rebranding they added to the models, making OpenAI the Platform, changing "system" prompts to "developer" and having user be the user. Very welcome renaming and clarifications (h/t Swyx for his analysis)

Here is a summarized version of OpenAI's new rules of robotics (thanks to Ethan Mollick)

Follow the chain of command: Platform > Developer > User > Tool

Comply with applicable laws

Don't provide info hazards

Protect people's privacy

Don't respond with NSFW content
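To make the chain of command concrete, here is a toy sketch of "higher role wins" conflict resolution. It's purely illustrative: the Model Spec describes desired model behavior, not code, and the `Role`, `Instruction` and `resolve` names, along with the example directives, are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Role(IntEnum):
    """Ordering from the post: Platform > Developer > User > Tool."""
    TOOL = 0
    USER = 1
    DEVELOPER = 2
    PLATFORM = 3


@dataclass
class Instruction:
    role: Role
    topic: str
    directive: str


def resolve(instructions):
    """For each topic, keep the directive that comes from the highest-ranked role."""
    winners = {}
    for inst in instructions:
        current = winners.get(inst.topic)
        if current is None or inst.role > current.role:
            winners[inst.topic] = inst
    return {topic: inst.directive for topic, inst in winners.items()}


# Hypothetical conflicting instructions.
instructions = [
    Instruction(Role.DEVELOPER, "language", "answer in English only"),
    Instruction(Role.USER, "language", "answer in French"),
    Instruction(Role.USER, "tone", "be casual"),
    Instruction(Role.TOOL, "tone", "be formal"),
]

print(resolve(instructions))
```

Here the developer's language rule beats the user's request, and the user's tone preference beats whatever a tool output tries to inject.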

Very welcome effort from OpenAI; showing this spec in the open and inviting feedback is greatly appreciated!

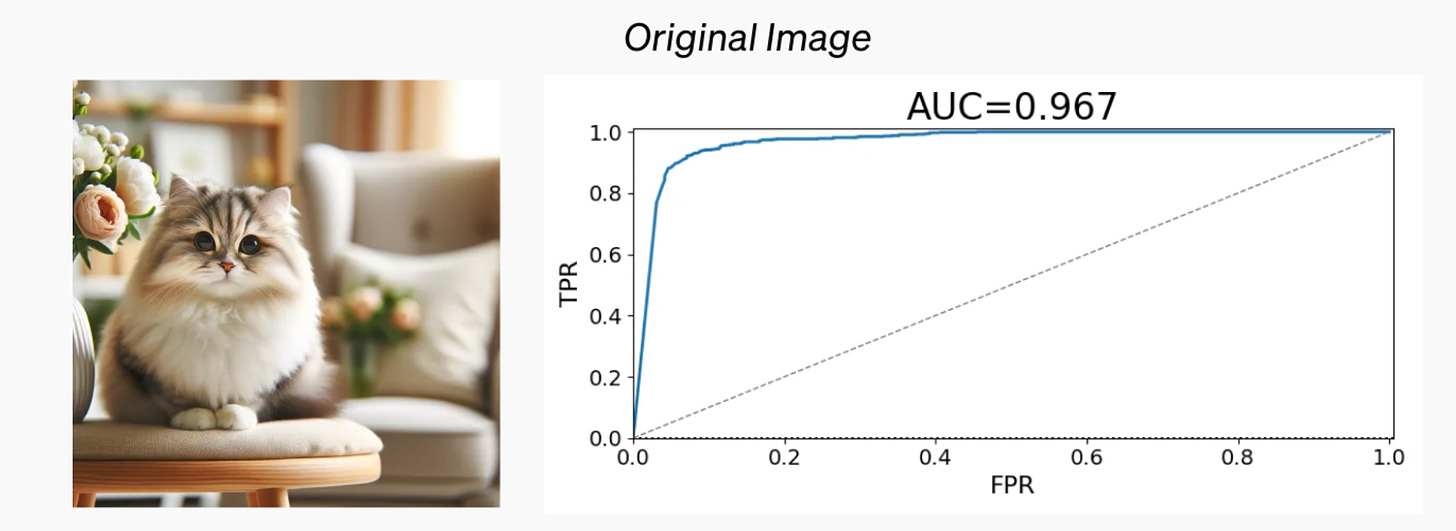

This comes on top of a pretty big week for OpenAI: announcing an integration with Stack Overflow, joining the Coalition for Content Provenance and Authenticity, embedding watermarks in Sora and DALL-E images, and telling us they have built a classifier that they claim catches about 98% of DALL-E images!

im-a-good-gpt2-chatbot and im-also-a-good-gpt2-chatbot

Following last week's gpt2-chat mystery, Sam Altman trolled us with this tweet

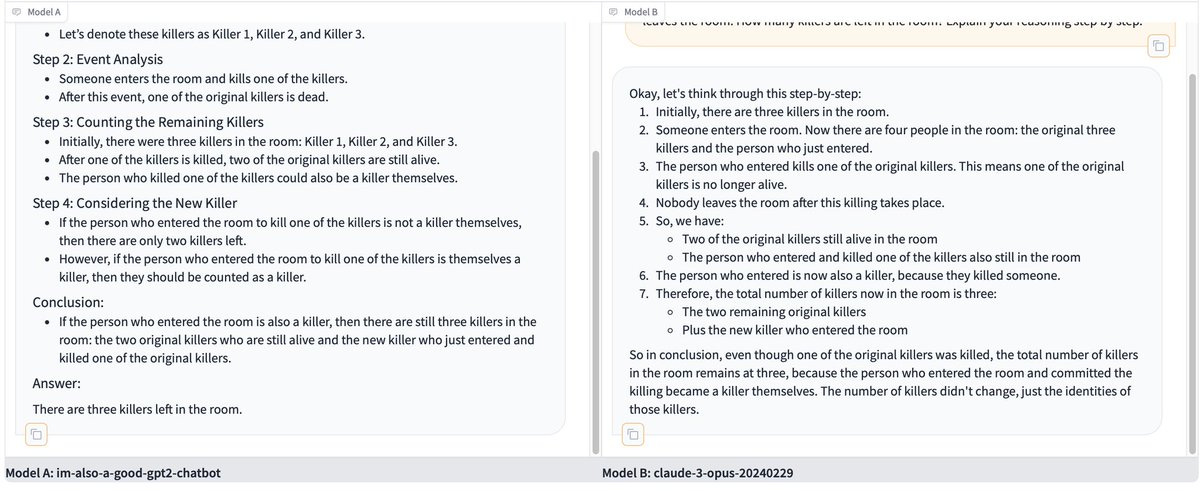

And then we got 2 new models on LMSys, im-a-good-gpt2-chatbot and im-also-a-good-gpt2-chatbot, and the timeline exploded with folks trying all their best logic puzzles on these two models to understand what they are. Are they GPT5? GPT4.5? Maybe a smaller version of GPT2 that's pretrained on tons of new tokens?

I think we may see the answer soon, but it's clear that both these models are really good, doing well on logic (better than Llama-70B, and sometimes Claude Opus as well)

And the speculation is pretty much over, we know OpenAI is behind them after seeing this oopsie on the Arena 😂

You can try these models as well. They seem to be heavily favored in the random selection of models, but they show up only in battle mode, so you may have to try a few times: https://chat.lmsys.org/

Google DeepMind announces AlphaFold3 (Paper, Announcement)

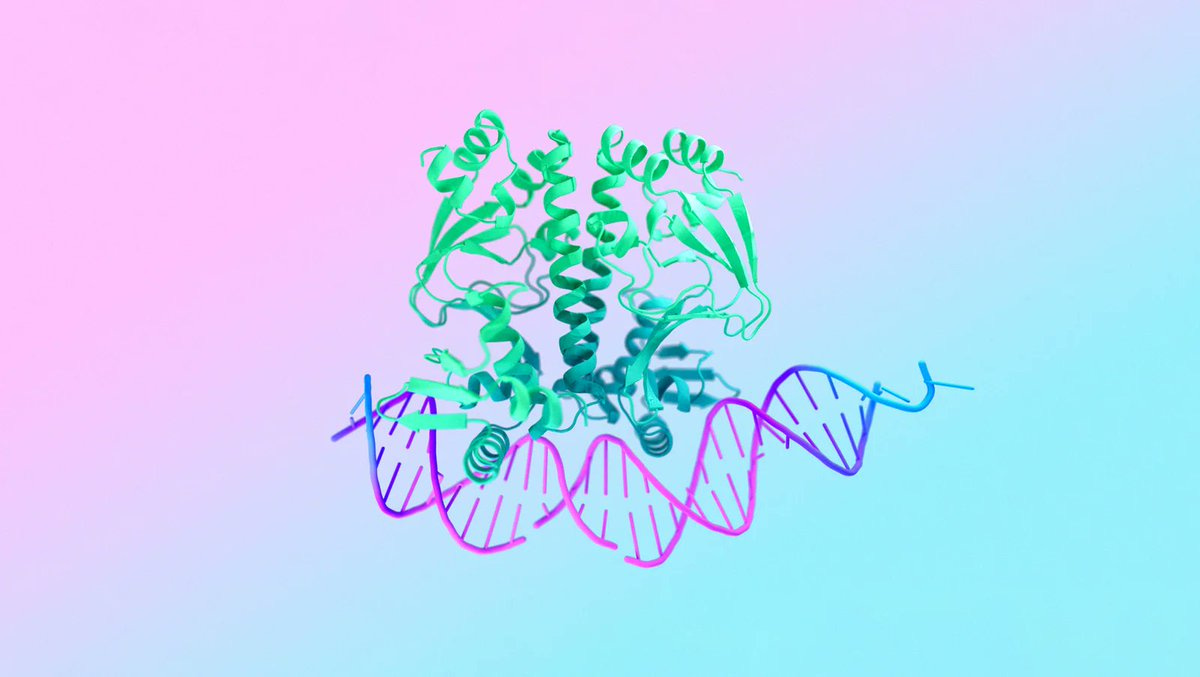

Developed by DeepMind and Isomorphic Labs, AlphaFold previously predicted the structure of nearly every protein known to science. Now AlphaFold 3 has been announced, and it can predict the structure of other biological complexes as well, paving the way for new drugs and treatments.

What's new here is that they are using diffusion (yes, like Stable Diffusion), starting with noise and then denoising to get a structure. DeepMind reports at least a 50% improvement over existing methods on how proteins interact with other types of molecules.
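For intuition only, here is a toy sketch of the "start from noise, then denoise" loop on a handful of 3D points. It is emphatically not AlphaFold 3's algorithm: the `toy_denoiser` is an oracle that already knows the answer (a real model would be a trained network), and the target coordinates, step count and noise schedule are all made up.

```python
import random

# Hypothetical "true" structure: three atoms with 3D coordinates.
TARGET = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0], [1.5, 1.2, 0.3]]


def toy_denoiser(noisy_points):
    """Stand-in for a trained network that predicts the clean structure.

    A real model learns this from data; this oracle just returns the answer.
    """
    return TARGET


def sample(steps=50, step_size=0.2, seed=0):
    """Start from Gaussian noise and iteratively move toward the prediction."""
    rng = random.Random(seed)
    points = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in TARGET]
    for step in range(steps):
        predicted = toy_denoiser(points)
        # Injected noise shrinks every step and is exactly 0 on the last one.
        noise_scale = 0.1 * (1.0 - (step + 1) / steps)
        points = [
            [x + step_size * (p - x) + noise_scale * rng.gauss(0.0, 1.0)
             for x, p in zip(point, pred)]
            for point, pred in zip(points, predicted)
        ]
    return points


def max_error(points):
    """Largest absolute coordinate difference from the target structure."""
    return max(
        abs(x - t)
        for point, target in zip(points, TARGET)
        for x, t in zip(point, target)
    )


final = sample()
print("max coordinate error after denoising:", round(max_error(final), 4))
```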

If you'd like more info about this very important paper, look no further than the awesome Two Minute Papers YouTube channel, which did a thorough analysis here, and listen to the Isomorphic Labs podcast with Weights & Biases CEO Lukas on Gradient Dissent

They also released AlphaFold Server, a free research tool allowing scientists to access these capabilities and predict structures for non-commercial use; however, it seems that it's somewhat limited (from a conversation we had with a researcher on stage)

This week's Buzz (What I learned with WandB this week)

This week was amazing for open source and Weights & Biases; it's not every week that a side project from a CIO blows up on... well, everywhere. #1 trending on GitHub for TypeScript and #6 overall, OpenUI (Github) has passed 12K stars, as people are super excited about being able to build UIs with LLMs, but in the open source.

I had the awesome pleasure to host Chris on the show as he talked about the inspiration and future plans, and he gave everyone his email to send him feedback (a decision which I hope he doesn't regret 😂) so definitely check out the last part of the show for that.

Meanwhile here's my quick tutorial and reaction about OpenUI, but just give it a try here and build something cool!

Vision

Some news was shared with me, but out of respect for the team I decided not to include it in the newsletter ahead of time. Expect open source to come close to GPT4-V next week 👀

Voice & Audio

11 Labs joins the AI music race (X)

Breaking news from 11Labs that happened during the show (but we didn't notice): they are stepping into the AI music scene, and it sounds pretty good!

Udio adds Audio Inpainting (X, Udio)

This is really exciting, Udio decided to prove their investment and ship something novel!

Inpainting has been around in diffusion models, and now selecting a piece of a song on Udio and having Udio reword it is so seamless that it will definitely come to every other AI music tool, given how powerful this is!

Udio also announced their pricing tiers this week, and it seems that this is the first feature that requires a subscription

AI Art & Diffusion

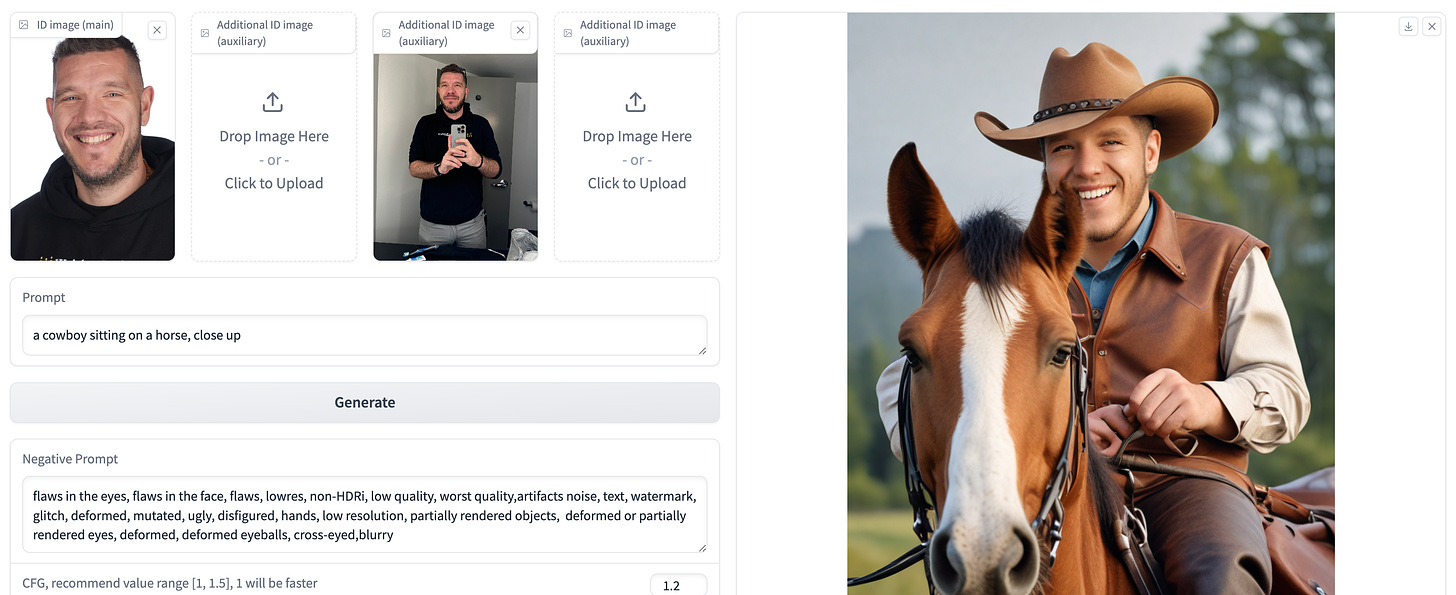

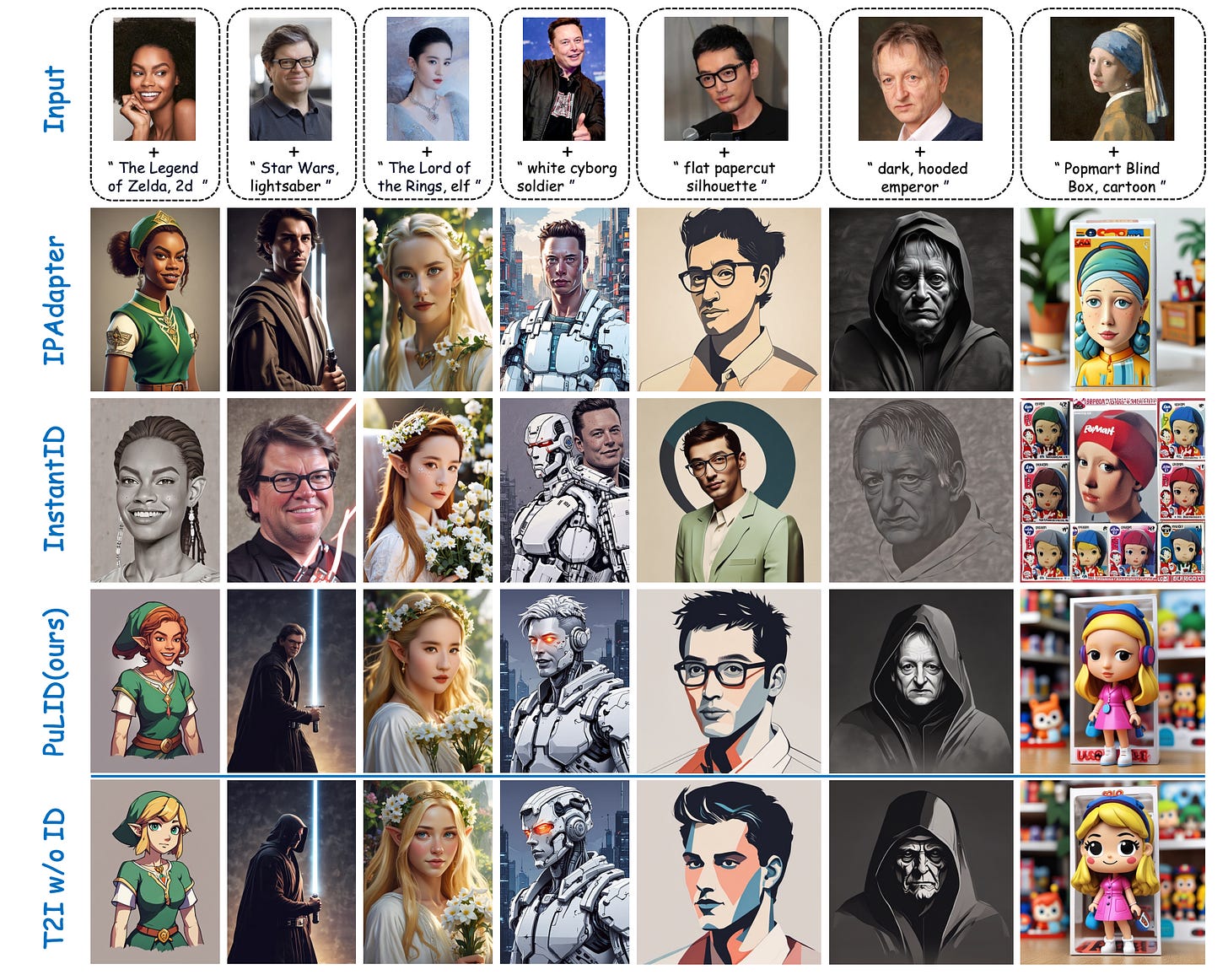

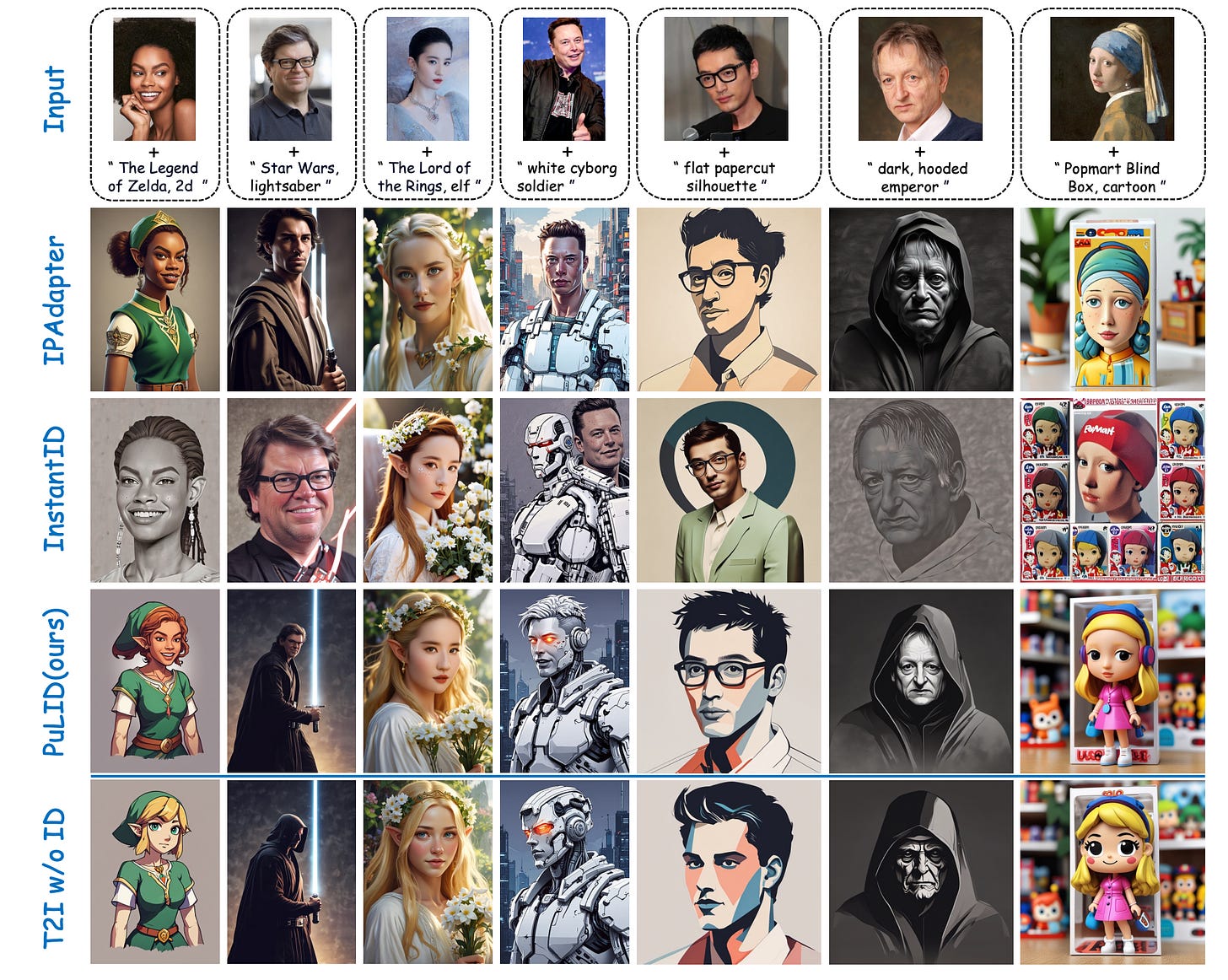

ByteDance PuLID for no train ID Customization (Demo, Github, Paper)

It used to take a LONG time to finetune something like Stable Diffusion to generate an image of your face using DreamBooth, then things like LoRA started making this much easier but still needed training.

The latest crop of approaches for AI art customization is called ID Customization, and ByteDance just released a novel, training-free version called PuLID, which works very, very fast with very decent results (really, try it on your own face)! Previous works like InstantID and IP-Adapter are also worth calling out; however, PuLID seems to be the state of the art here! 🔥

And that's it for the week, well who am I kidding, there's so much more we covered and I just didn't have the space to go deep into everything, but definitely check out the podcast episode for the whole conversation. See you next week, it's going to be 🔥 because of IO and ... other things 👀

As promised, here's how the "Sausage is made" and here are the Raw TL;DR notes and bullet points as I prepare them before the actual show:

Open Source LLMs

DeepSeek V2 - 236B (21B Active) MoE (X, Try It)

Economical training, and super cheap inference at $0.28/1M tokens

Places top 3 in AlignBench, surpassing GPT-4 and close to GPT-4-Turbo.

Ranks top-tier in MT-Bench, rivaling LLaMA3-70B and outperforming Mixtral 8x22B.

Specializes in math, code and reasoning.

Context window supported: 128K (Open-Source Model) / 32K (Online Service)

DeepSeek V2 was able to achieve incredible training efficiency with better model performance than other open models at 1/5th the compute of Meta’s Llama 3 70B

xLSTM - new transformer alternative (X, Paper, Critique)

LSTM has been around for 30 years, Transformers pulled ahead as LSTM was sequential

xLSTM stands for Extended Long Short-Term Memory

OpenDevin getting SOTA on Swe-Bench with 21% (X, Blog)

OpenDevin CodeAct 1.0

2 month old OpenDevin gets a 17% relative improvement over SWE-Agent (21% vs 18%)

Also working on a simplified evaluation Harness for agents

Has tools and abilities inspired by SWE-Agent, like open files, scroll, search and create on path

Weights & Biases OpenUI blows past 11K stars (X, Github)

OpenUI crosses 11K stars

Has frontend and Backend

Accepts UI and just prompt

Can use local models from Ollama

Llama-3 120B Chonker Merge from Maxime Labonne (X, HF)

Merging in 50 layers made it better at creative writing and some narrow tasks

Folks claim it's AGI, it... sure is something new

Benchmarks & Eval updates

Llama-3 still in 6th place (LMsys analysis)

Llama 3 beats other top-ranking models on open-ended writing and creative problems but loses on more close-ended math and coding problems.

As prompts get harder, Llama 3’s win rate against top-tier models drops significantly.

Deduplication or outliers do not significantly affect the win rate.

Qualitatively, Llama 3’s outputs are friendlier and more conversational than other models, and these traits appear more often in battles that Llama 3 wins.

Reka Core gets awesome 7th place and Qwen-Max breaks top 10 (X)

No upsets in LLM leaderboard

Big CO LLMs + APIs

Google DeepMind announces AlphaFold-3 (Paper, Announcement)

OpenAI publishes their Model Spec (Spec)

OpenAI tests 2 models on LMsys (im-also-a-good-gpt2-chatbot and im-a-good-gpt2-chatbot)

After gpt2-chat was removed, we got 2 new models on LMSys

Both use the GPT-4 tokenizer, confirmed to be OpenAI by Error message

A lot of excitement in the feed for folks who test both models

Only work in Battle mode, hard to get both of them at the same time

Significantly better at Russian and Hebrew for me

Sama tweeted the name before the models dropped

Very good at logic puzzles that it has not seen

OpenAI joins Coalition for Content Provenance and Authenticity (Blog)

Created a classifier that they claim has a 98% detection rate for DALL-E images and a 0.1% false-positive rate on non-AI-generated images

Adding watermarking into Sora and Voice Engine

Microsoft rumored MAI-1 - 500B model

Rumors about the Inflection pre-training team working on MAI-1 a 500B internal model

Voice & Audio

Udio adds in-painting - change parts of songs (X)

AI Art & Diffusion & 3D (Demo)

ByteDance PuLID - new high quality ID customization

PuLID is a tuning-free ID customization approach

PuLID maintains high ID fidelity while effectively reducing interference with the original model’s behavior.

It works by adding a special branch to the model that helps preserve the original image's characteristics while accurately incorporating the new identity

Now supported in ComfyUI and Gradio

Tools & Hardware

Went to the Museum with Rabbit R1 (My Thread)

Co-Hosts and Guests

Graham Neubig (@gneubig) & Xingyao Wang (@xingyaow_) from Open Devin

Chris Van Pelt (@vanpelt) from Weights & Biases

Nisten Tahiraj (@nisten) - Cohost

Tanishq Abraham (@iScienceLuvr)

Parmita Mishra (@prmshra)

Wolfram Ravenwolf (@WolframRvnwlf)

Ryan Carson (@ryancarson)

📅 ThursdAI - May 9 - AlphaFold 3, im-a-good-gpt2-chatbot, Open Devin SOTA on SWE-Bench, DeepSeek V2 super cheap + interview with OpenUI creator & more AI news