Hello hello everyone, happy spring! Can you believe it? It's already spring!

We have tons of AI news to cover for you, starting with the most impactful one: did you already use Claude 3? Anthropic decided to celebrate Claude 1's birthday early (which btw is also ThursdAI's birthday and the GPT-4 release date, March 14th, 2023) and gave us 3 new Claudes! Opus, Sonnet and Haiku.

TL;DR of all topics covered:

Big CO LLMs + APIs

🔥 Anthropic releases Claude Opus, Sonnet, Haiku (Announcement, try it)

Inflection updates Pi 2.5 - claims GPT4/Gemini equivalent with 40% less compute (announcement)

Elon sues OpenAI (link)

OpenAI responds (link)

ex-Google employee was charged with trading AI secrets with China (article)

Open Source LLMs

01AI open sources - Yi 9B (Announcement)

AnswerAI - Jeremy Howard, Johno & Tim Dettmers - train 70B at home with FSDP/QLoRA (X, Blog)

GaLore - Training 7B on a single consumer-grade GPU (24GB) (X)

Nous open sources Genstruct 7B - instruction-generation model (Hugging Face)

Yam's GEMMA-7B Hebrew (X)

This week's Buzz

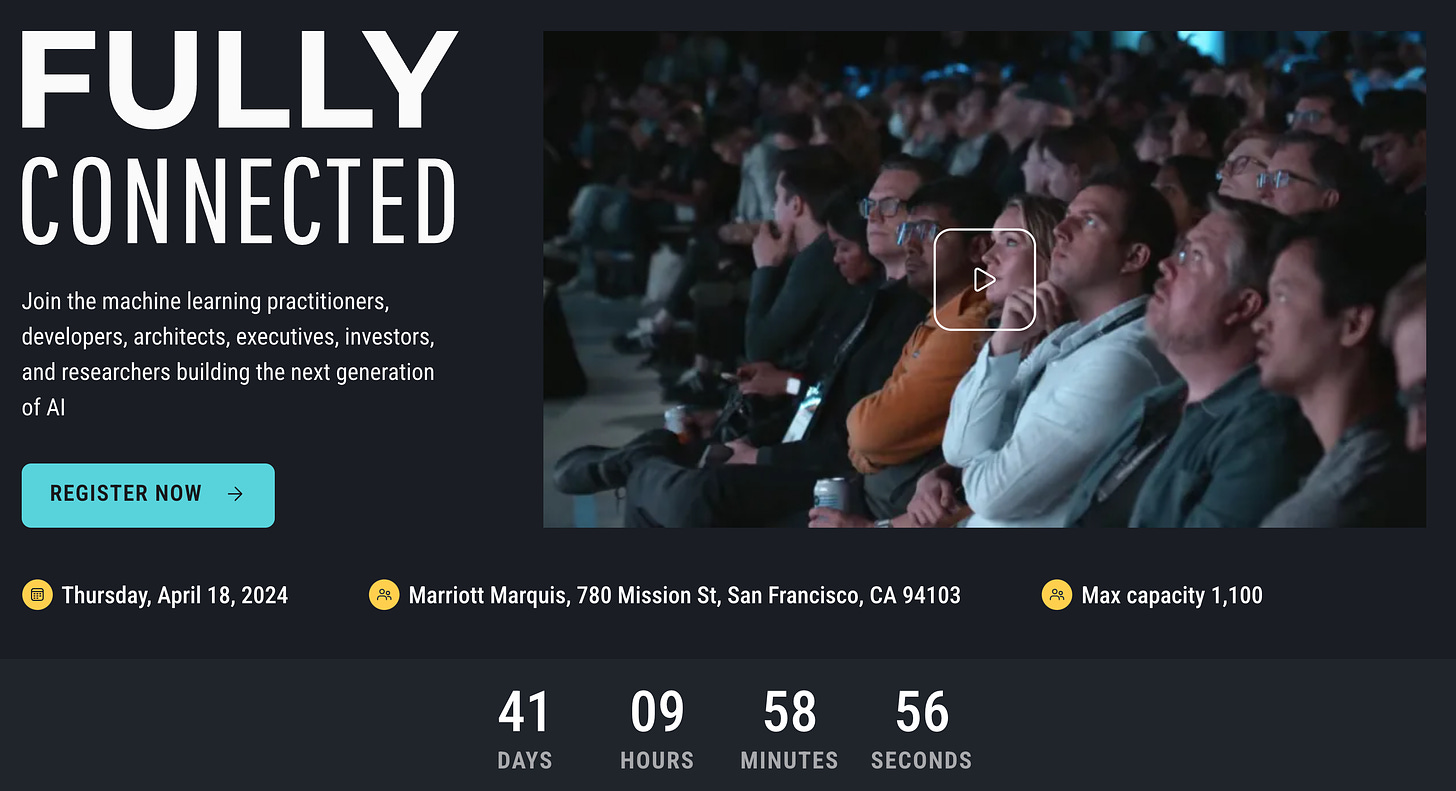

Weights & Biases is coming to SF in April! Our annual conference called Fully Connected is open for registration (Get your tickets and see us in SF)

Vision & Video

Vik releases Moondream 2 (Link)

Voice & Audio

Suno v3 alpha is blowing minds (Link)

AI Art & Diffusion & 3D

Big CO LLMs + APIs

Anthropic releases Claude 3 Opus, Sonnet and Haiku

This was by far the biggest news of this week, specifically because the top of the leaderboard keeps getting more crowded with top-of-the-line models! Many folks in blind tests actually prefer Claude Opus over GPT-4 for some tasks, and as we were recording the pod, LMSys released their rankings: Claude Opus beats Gemini and is now 3rd in user preference on the LMSys ranking.

Their release is vast: they announced 3 new models but only gave us access to 2 of them, teasing that Haiku is much faster and cheaper than other options in its weight class.

In addition to being head to head with GPT-4, Claude 3 is now finally also multimodal on inputs, meaning it can take images and understand graphs and charts. They also promised significantly fewer refusals and almost 2x better accuracy.

One incredible thing that Claude always had was the 200K context window, and here they announced that they will be supporting up to 1M, but for now we still only get 200K.

We were also promised support for function calling and structured output. Apparently that's "coming soon", but it's still great to see that they are aiming for it!

We were all really impressed with Claude Opus, from folks on stage who mentioned that it's easier to talk to and feels less sterile than GPT-4, to coding abilities that are not "lazy" and don't tell you to continue writing the rest of the code yourself in comments, to even folks who are jailbreaking the guardrails and getting Claude to speak about the "I" and metacognition.

Speaking of meta-cognition sparks, one of the prompt engineers on the team shared a funny story about doing a needle-in-haystack analysis, where Claude Opus responded with: "I suspect this pizza topping "fact" may have been inserted as a joke or to test if I was paying attention."

This split the X AI folks in 2: many claiming OMG it's self aware, and many others calling for folks to relax, since like other models, this is still just spitting out token by token.

I additionally like the openness with which the Anthropic folks shared the (very simple but carefully crafted) system prompt.

My personal take: I've always liked Claude, even v2 was great until they nixed the long context for the free tier. This is a very strong, viable alternative to GPT-4 if you don't need DALL-E or code interpreter features, or the GPTs store or the voice features on iOS.

If you're using the API to build, you can self register at https://console.anthropic.com and you'll get an API key immediately, but going to production will still take time and talking to their sales folks.
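Once you have a key, a first call can look roughly like the sketch below. It assumes the official `anthropic` Python package is installed and that the key is exported as `ANTHROPIC_API_KEY`. The helper names `build_request` and `ask_claude` are made up for illustration, and the model ID is the launch-time Opus ID, so check the console for the current one.

```python
import os

# Launch-time model ID for Claude 3 Opus (assumption: still valid for your account).
MODEL = "claude-3-opus-20240229"


def build_request(prompt, max_tokens=256):
    """Build the keyword arguments for a single-turn Messages API call."""
    return {
        "model": MODEL,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }


def ask_claude(prompt):
    """Send one user message and return the text of the first reply block."""
    import anthropic  # imported lazily so the helper above works without the SDK

    client = anthropic.Anthropic(api_key=os.environ["ANTHROPIC_API_KEY"])
    response = client.messages.create(**build_request(prompt))
    return response.content[0].text
```

Calling `ask_claude("Say hi to the ThursdAI listeners")` with a valid key should return the reply as a plain string.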

Open Source LLMs

01 AI open sources Yi 9B

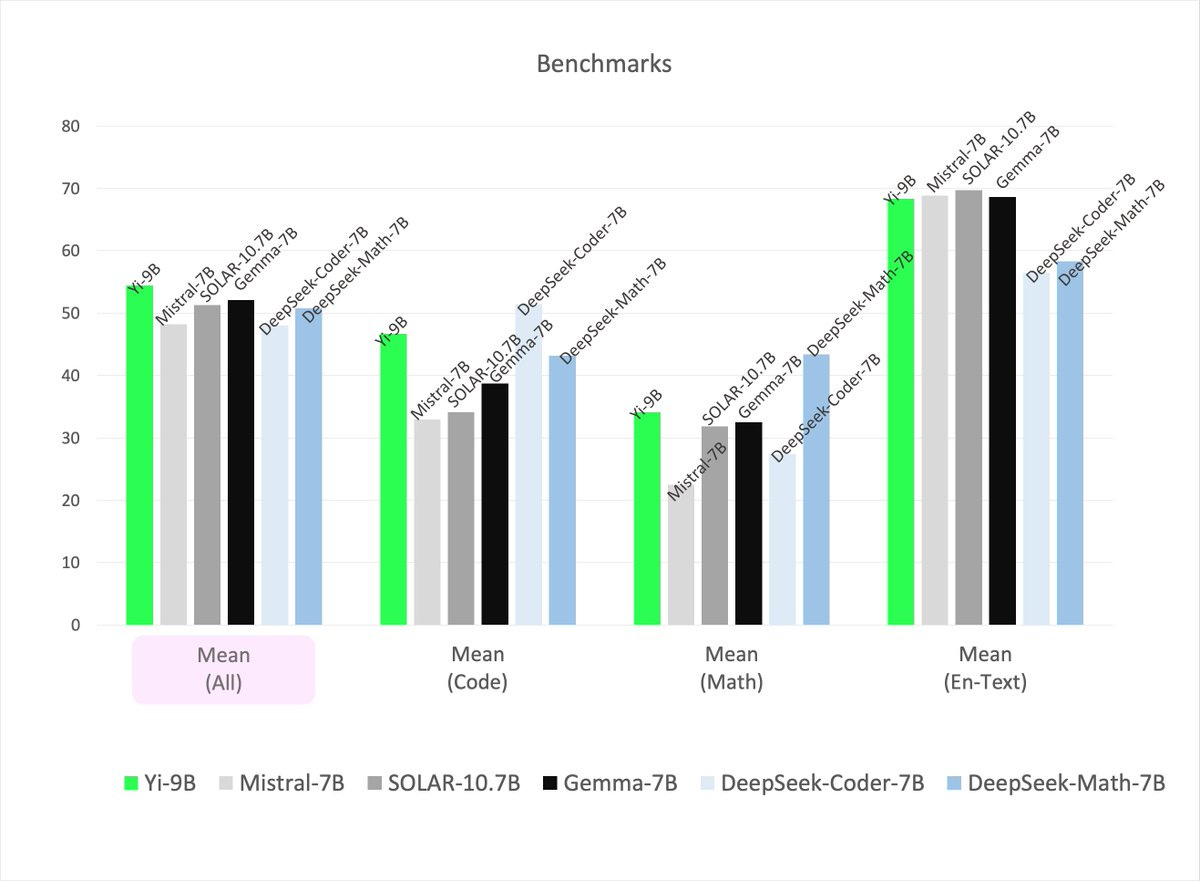

Announcement claims that "It stands out as the top-performing similar-sized language model friendly to developers, excelling in code and math." but it's a much bigger model, trained on 3T tokens. I find it confusing to create a category of models between 7B and almost 12B.

This week's Buzz (What I learned with WandB this week)

We're coming to SF! Come join Weights & Biases in our annual conference in the heart of San Francisco, get to hear from industry leaders about how to build models in production, and meet most of the team! (I'll be there as well!)

AI Art & Diffusion

Last week, just last week, we covered the open sourcing of the awesome Playground 2.5 model, which looked really good in user testing. I really wanted to incorporate this into my little demo, but couldn't run it locally so I asked a few friends, and I gotta say, I love how competitive but open the inference providers can get! Between Modal, Fal and Fireworks, I somehow started a performance competition that got these folks to serve the Playground 2.5 model in under 1.5 seconds per generation.

Recorded the story to highlight the awesome folks who worked on this, they deserve the shoutout!

You can try super fast Playground generation on FAL and Fireworks

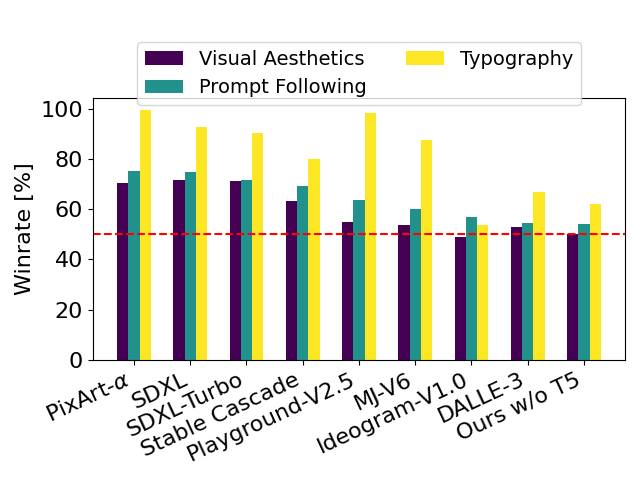

Stability releases Stable Diffusion 3 research paper + Model coming soon

Stability released the research paper for SD3, the latest iteration of their flagship image model. While this field is getting a little saturated (we now have DALL-E, MidJourney, Adobe Firefly, Playground, SDXL, Stable Cascade and Ideogram), SD is definitely aiming for the title.

They released a few metrics claiming that on user preference, Visual Aesthetics, Typography and Prompt following, SD3 beats all of the above.

They also mentioned the architecture, which is an MM-DiT - multi modal diffusion transformer architecture (DiTs were used for Sora from OpenAI as well) and that they used 50% synthetic captions generated with CogVLM, which is quite impressive.

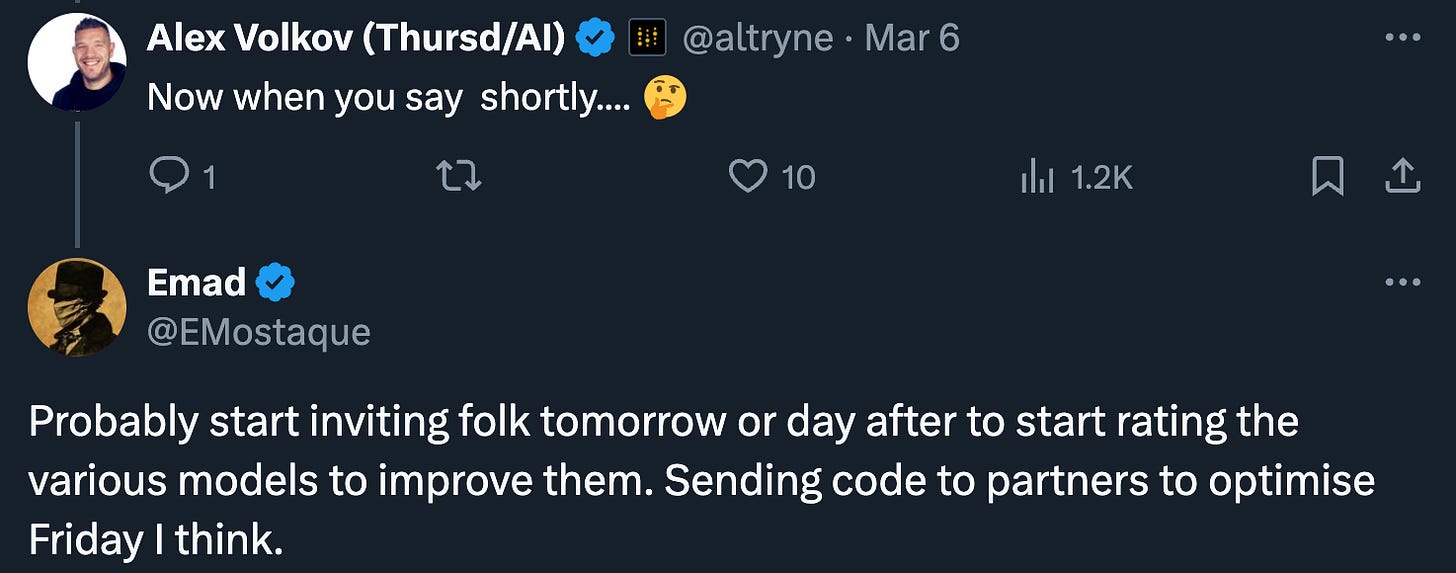

Emad has mentioned that access to SD3 will start rolling out soon!

TripoSR (Demo)

We previously covered LUMA models that generate text to 3D, and now we have image to 3D that's open sourced by the folks at Tripo and Stability AI.

TripoSR is able to generate 3D shapes from images super super fast, and here's a very nice flow that @blizaine demonstrated of how to use these models to actually bring 3D objects into their environment using a few steps.

And that's it for today folks, we of course chatted about a LOT more stuff, I really welcome you to listen to the episode and skip around in the chapters, and see you next week, as we celebrate ThursdAI's birthday (and GPT4 and Claude1) 🎉

P.S - as I always do, after writing and editing all by hand (promise) I decided to use Opus to be my editor and tell me how my writing was, what I forgot to mention (it has the context from the whole transcription!) and suggest fixes. For some reason I asked Opus for a message to you, the reader.

Here it is, take it as you will 👏

Full Transcript for the deep divers:

[00:00:00] Alex Volkov: Right, folks. So I think recording has started. And then let's do our usual. Welcome. Welcome, everyone. Those who know the sound from week to week. This is Alex Volkov. You're listening to ThursdAI, March 7th. I'm an AI evangelist with Weights & Biases, who you can see here on stage as well. So, you know, you see the little square thing, give it a follow. Follow us on socials as well. And, uh, today is obviously Thursday.

[00:00:45] Alex Volkov: Uh, Thursday was a lot of stuff to talk about. Um, so, let's talk about it. Uh, I think, I think, um, our week is strange, right? Our week starts at the Friday. Almost, not even Friday. The updates that I need to deliver to you start at the end of the previous ThursdAI. So as, as something happens, uh, and I, I have a knowledge cutoff, actually, at some point we considered calling this podcast knowledge cutoff.

[00:01:14] Alex Volkov: Um, I have a knowledge cutoff after Thursday afternoon, let's say when I start and send the newsletter, but then AI stuff keeps happening. And, uh, Then we need to start taking notes and taking stock of everything that happened and I think on Friday We had the the lawsuit from Elon and there's a whole bunch of stuff to talk about and then obviously on Monday We had some big news.

[00:01:37] Alex Volkov: So, as always, I'm gonna just run through all the updates. There's not a lot today. There's not a ton of updates this week, but definitely there's a few interesting things. Let me un save as well. And then I'll just say hi to a few, a few of the folks that I got on stage here to chat. Um, we got Vik, and Vik is going to give us an update about, about something interesting. Uh, Vik, feel free to just unmute and introduce yourself briefly. And then we're going to go through the updates.

[00:02:07] Vik: Hey, my name is Vivek, uh, I've been training ML models for the last two years or so. Um, recently released a new model called Moondream 2. It's a very small vision language model that excels at a lot of real world use cases that you could use to build computer vision applications today, so I'm very excited to chat about that.

[00:02:30] Alex Volkov: Awesome. And, uh, we have Akshay as well. Akshay, it's been a while since you joined us. What's up, man? How are you?

[00:02:36] Akshay: Greetings of the day everyone, and it's lovely to join again. Uh, I have been listening, I have been here in the audience. Uh, for each and every ThursdAI, and, uh, I've been building some exciting stuff, so I've not been joining much, but, uh, things are going great.

[00:02:54] Alex Volkov: Awesome. And, uh, for the first time, I think, or second time we're talking with Siv. Hey, Siv.

[00:03:01] Siv: Hey, how's it going, everyone? Uh, just a little background on me. Um, I come from startups and from Amazon Web Services. Um, I've been in the AI space for the last six years. And I'd love to be able to chat today about social algorithms and, uh, researchers having trouble with, uh, socials, particularly Twitter. Anywhere else where you're trying to distribute your models?

[00:03:30] Alex Volkov: Yeah, so we'll see if we get to this. The setup for ThursdAI is usually just, uh, updates and conversation about updates, but if we get to this, uh, definitely we'll, we'll, we'll dive in there. Um, right, so folks, with this, I'm gonna say, um, uh, that we're gonna get started with just an update, and then I think Nisten will join us in a second as well.

[00:03:50] Alex Volkov: Oh, I see somebody else I wanna, I wanna add.

[00:03:55] Alex Volkov: So, here's everything for March 7th that we're going to cover today. Um, so in the area of open source, we didn't actually have a ton of stuff happen, um, up until, I think, yesterday and today. So, the most interesting thing we're going to talk about is, um, the company 01.AI, um, which is the folks who released Yi 34B, and we've talked about Yi and the new Hermes kind of updates for Yi as well.

[00:04:23] Alex Volkov: They released a new 9 billion, 9 billion parameter model, which is very competitive with Mistral and the like. Um, and then also the new company, newish company called Answer.AI from Jeremy. Jeremy Howard, if you know him, and Johno Whitaker, and they collaborated with Tim Dettmers from QLoRA, and they released something that lets you train a 70 billion parameter at home, a 70 billion parameter model at home.

[00:04:51] Alex Volkov: We're going to chat about this a little bit. Um, even though today I saw another thing that is kind of around this area, so we're going to have to go and find this and discuss how these huge models are now being able to get trained at home as well. Uh, very brief open source stuff, then we're going to talk about big companies and obviously, um, actually going to put Claude last because we're going to talk about Claude probably a lot.

[00:05:16] Alex Volkov: But, uh, in the big companies area, we will not be able to escape the drama that Elon Musk sues OpenAI. And then the OpenAI response, we're going to chat about this as well. Excuse me. Oh yeah, this is going to keep happening, just one sec. Um, maybe we'll briefly mention that Logan has left OpenAI, and for a brief period of time, he and Ilya had the same, um, bio on Twitter, not anymore, but very interesting as Logan starts to post some stuff as well.

[00:05:46] Alex Volkov: Um, I really want to chat about the Google employee who was charged with AI secret trading, uh, and received like a CTO position in China. That's a very interesting update as well. And, uh, Inflection from, uh, there we go, we have Nisten as well, uh, Inflection just released an update today, which is kind of like breaking news, uh, a 2.5 update,

[00:06:09] Alex Volkov: and they, they say they come to GPT-4 and Gemini equivalent, uh, performance level, which remains to be seen, and I've tested this a little bit, and I definitely want to chat about this as well. Uh, in vision and video, we have only the one thing, but we have the author of said thing here. Uh, so I haven't seen any, anything else besides Moondream and we have Vik here.

[00:06:33] Alex Volkov: We're going to talk about Moondream 2, and how you can use this and what we can, we can use it for. Um, voice and audio. There's something that probably didn't happen for the past week. I think it happened a little bit before and I don't have access yet, but Suno, if you guys know Suno, released the alpha and there's a bunch of videos floating around of their songs with like the V3 alpha of theirs and it's quite something. If I, if I'm gonna be able to find those tweets and pin them for you, that's gonna be a mutual listening. Maybe I can actually find the tweet to, to actually play this for you.

[00:07:07] Alex Volkov: We'll see if the multimedia department will work. Um, and I think in AI art and diffusion stuff, there's a bunch to talk about. Um, there is, uh, Stable Diffusion 3 research paper was released, and we've talked about Stable Diffusion 3 a little bit. After the announcement, and we haven't covered the research paper, we can chat about the research paper.

[00:07:29] Alex Volkov: But also, potentially today, Emad is going to open some invites, as he mentioned on X as well. So, I'm ready with the breaking news button there. Stability, also in the news, they released a collaboration with Tripo, which created a very fast image to 3D model called TripoSR. And that's been very cool, and there's a few very viral examples of, of said thing, uh, floating around, so definitely worth talking about this as well.

[00:07:57] Alex Volkov: And I think, uh, Nisten has just joined us, hey Nisten, and you just shared that, um, that we can train a 70 billion parameter, oh, 7 billion parameter at home with 24 gig memory, right? GaLore. Nisten?

[00:08:17] Nisten: So, so it's a combination of a lot of techniques that people have been using. And, uh, I'll try to pin it up in a second. But the, the research is that now you can train one from scratch. Not finetune. Start one from scratch. Start your own. So this is why it's pretty, um, it's relatively groundbreaking.

[00:08:40] Nisten: And they released a repository for that as well. So it's not simply just a paper. They have a code base. It's pretty legit.

[00:08:50] Alex Volkov: So I guess let's, let's get into the open source stuff, um, and then we'll get to the open source, and then we're going to discuss everything else, because I think the main, the bread and butter of this discussion is going to be, is going to be, um, Anthropic. Anthropic's, uh, coming back to the limelight, but let's, let's start with, let's start with open source.

[00:09:09] Alex Volkov: Where's my open source button?

[00:09:27] Alex Volkov: Alright, so I guess, uh, Nisten, welcome, uh, and I guess let's start with, with GaLore, uh, as much as we can. We can get from the, from the release, a fairly, fairly new release as well. And I think it's connecting to the other, uh, to the other thing from Answer.AI, but let's start with GaLore. Um, so basically, these folks released something called GaLore, which is, um, kind of playing on the same, on the same LoRA, QLoRA stuff.

[00:09:52] Alex Volkov: Uh, what are some of the techniques they're adding there? I'm, I'm trying to, to take a look as I'm learning. Uh, Nisten, do you have any, any Any info to share with us about this?

[00:10:05] Nisten: Yeah, yeah, same. more for an actual full paper reading because I have not read it entirely. Mainly looking at it again, it looks like it's, uh, it's another stack of tricks like most good projects are, uh, but it is the, it enables a very, very large capability. And that is that now you can make your own full LLM from, from nothing.

[00:10:29] Alex Volkov: So not a fine tune.

[00:10:31] Nisten: Uh, yeah. Not a fine tuned, not initiated weights. You just, you just start from, uh, from nothing. So, it's I see that it uses, uh, like it offloads a lot of the weight activations and offloads some of them on, uh, on CPU memory. And I know there are options in Axolotl, which is the Docker container that people use to train, that you can also offload on very fast NVMe drives.

[00:10:55] Nisten: So if you have like very fast PCI Express NVMe storage, you can kind of use that as another RAM for, for the training. So this combines all of those. And then some on top and the end result is, is very impressive because you can train a very capable model. And, uh, yeah, again, pending further, uh, research and stuff.

[00:11:21] Nisten: But I think this is one of those repositories that, uh, a lot of people will use or it's likely to.

[00:11:30] Alex Volkov: Yeah, and I think this adds to the, so this, this kind of in the same vein of the next thing we're going to chat about and, um, um, I actually can't find any mention of this on X, believe it or not, so not everything is fully on X. I just got a link, uh, to this from, from, uh, Omar, uh, from Hugging Face. And AnswerAI is a new research lab, um, that Jeremy Howard, uh, if you guys are not familiar with Jeremy Howard, hopefully everybody is, but if you're not, um, I guess look them up.

[00:12:04] Alex Volkov: Um, Jeremy, uh, joined Answer.AI, like, um, I think around NeurIPS he was talking about. They got funded, I think, 10 million dollars. And, um, they released their first project, a fully open source system, uh, that can efficiently train a 70 billion large language model on regular desktop computers with two or more gaming GPUs.

[00:12:27] Alex Volkov: They're talking about RTX 3090 or 4090. Um, which, you know, compared to, um, Nisten, what you just shared, I think that sounds very impressive. Um, they combine FSDP, which is, I'm not familiar with FSDP, with, uh, QLoRA, and, uh, they brought kind of the, the CUDA Avengers to, to the flow. So Jeremy Howard obviously.

[00:12:52] Alex Volkov: Um. I think FastAI, right? And then Kaggle, I think, competition is definitely behind Jeremy. And then they brought Tim Dettmers from QLoRA, and we've covered QLoRA multiple times, um, very efficient methods. And then they also brought Hugging Face's Titus von Koeller, and, um, they brought the CUDA Avengers in there to, to basically combine a bunch of techniques to let you train 70 billion parameters.

[00:13:20] Alex Volkov: I see we have Yam joining us. Hey Yam, did you see the Answer.AI stuff that I'm covering or is this new to you?

[00:13:26] Yam Peleg: No, no, all new to me.

[00:13:28] Alex Volkov: Oh wow, okay, so I need, I need, uh, I would love your reaction in real time. Let me DM you this real quick because, um, The number of, actually, let me, let me paste this in the link below so we can actually paste this up.

[00:13:43] Alex Volkov: Um. Yeah, there we go. Okay. So it's now pinned to the top of the space for folks to, to find out. I wasn't able to see any, uh, update on X from any of them, which is very interesting. Um, and the, the very interesting idea is that, you know, all of these systems and all of these models, 70 billion models, they cost an insane amount of money.

[00:14:07] Alex Volkov: And now these folks are considering that under 10,000, you'd be able to train something like 70B at home. Which I'm not training models, but I know that some folks here are. And, um, I assume that this is a very big unlocking capability. Um, which, which is what Answer.AI is trying to achieve.

[00:14:32] Alex Volkov: Let's see what else is very interesting here. Um, just something about Answer.AI generally. Uh, they claim that they're like an unusual type of organization. I actually tried to ask Jeremy a couple times what did this mean. Um, and, uh. They, they claim to be a for profit, like, lab, R&D lab, and, um, more in spirit to 19th century labs than today's AI research groups, and, um, I think Eric Ries and Jeremy Howard launched this in Europe, um, and, I think, I'm actually not sure what's the, the, how much did I say?

[00:15:14] Alex Volkov: Um. What are they up against? But the first release of theirs is the open source OS, fully open source. Uh, that includes one of the, like several of the top people in the industry, uh, to create something that wasn't possible before. Um, and I think it's remains to be seen. They didn't release any metrics, but they said, Hey, we're about to release some metrics, but, um, this keeps improving from week to week.

[00:15:39] Alex Volkov: So we actually didn't release any metrics. Go ahead Nisten.

[00:15:43] Nisten: Sorry, is this from Answer.AI? They said they were going to release one, or? They

[00:15:49] Alex Volkov: think, already. They didn't release metrics, uh, for the training. Uh, but I think the, the whole repo is open source. Yeah.

[00:15:58] Nisten: released an open source OS, or?

[00:15:59] Alex Volkov: Yeah, yeah, open source, FSDP/QLoRA. Um, and I think

[00:16:03] Nisten: Oh, okay, so it's not a real operating system, it's another,

[00:16:07] Alex Volkov: It's, well, they call it an operating system, but yeah,

[00:16:10] Nisten: Oh, okay,

[00:16:11] Alex Volkov: it's not like Linux competitive.

[00:16:12] Nisten: okay, I thought it was like an actual one. Okay, actually, go ahead, because there are some other huge hardware news that I wanted to quickly cover.

[00:16:23] Alex Volkov: Go ahead,

[00:16:23] Yam Peleg: Yeah,

[00:16:23] Vik: I just wanted to add about this Answer.AI thing that they have released this system that you guys were talking about, which basically claims to be able to train 70 billion parameter model on only two 24 GB GPUs.

[00:16:40] Vik: So basically, you know, two 4090s and you can train a 70 billion parameter model, which is mind boggling if you think about it. But, uh, I tried to find like how to get access to this. So I was still not sure if this is fully supported in every, uh, rig and system. So that is something I

[00:16:58] Nisten: wanted to mention.

[00:17:00] Alex Volkov: Yeah.

[00:17:00] Nisten: By the way that that has been, oh, sorry.

[00:17:02] Nisten: That, that has been do, uh, doable for a while because QLoRA actually trains it all in four bit. And, uh, there are only like a few tricks, which you can also apply if you go to Axolotl, uh, the directory. You, you can also do that on your own if you do a four bit QLoRA training and you just say, offload all the gradients and all this stuff, you can also do that with a, the 48 gig, uh, stuff.

[00:17:26] Nisten: But, uh, again, I'll look into the actual directory instead.
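A rough back-of-the-envelope check of the "70B on two 24 GB GPUs" claim, as a minimal sketch. It counts model weights only and assumes decimal gigabytes; activations, LoRA adapter gradients, optimizer state and quantization overhead are all ignored, so real usage is higher. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory needed to hold the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS_70B = 70e9

fp16_gb = weight_memory_gb(PARAMS_70B, 16)  # 16-bit weights
four_bit_gb = weight_memory_gb(PARAMS_70B, 4)  # 4-bit quantized weights, as in QLoRA
two_gpus_gb = 2 * 24  # two 24 GB cards, e.g. a pair of 3090s or 4090s

print(f"16-bit weights: {fp16_gb:.0f} GB")
print(f"4-bit weights:  {four_bit_gb:.0f} GB")
print(f"Fits in {two_gpus_gb} GB of total VRAM: {four_bit_gb < two_gpus_gb}")
```

At 16 bits the weights alone are far larger than 48 GB, while at 4 bits they leave some headroom, which is why sharding the quantized model across two cards is plausible at all.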

[00:17:32] Alex Volkov: Right, so, um, Nisten, you mentioned some hardware news you want to bring? Go ahead.

[00:17:39] Nisten: Yep. Okay, so we have two hardware news, but they are actually kind of related. Uh, first of all, uh, Tenstorrent, the company by legendary computer scientist, Jim Keller, who worked on the iPhone chip, AMD, who brought AMD back to life. Uh, legendary computer scientist, and has been working on Tenstorrent, which is another, uh, accelerator for, which also does, does training.

[00:18:07] Nisten: So, uh, so they released these cards, and I'm not sure what the capabilities are, uh, but I saw that George Hotz, uh, from TinyCorp, uh, posted them, and, uh, they are actually, so I just wanted to give them a big shout out to actually making them commercially viable, and it's just something you can buy, you don't have to, uh, You know, set up a UN meeting for it, right?

[00:18:31] Nisten: And get the votes and stuff. You can just go and buy it. So, that's pretty awesome of them, and I wish more companies did that. The second news is also kind of huge, because one of the engineers that left Tenstorrent last year now started a startup here in Toronto. And this has been an idea that's been around for some time and discussed privately and stuff.

[00:18:59] Nisten: Uh, they're making AI chips. Again, they do not. These ones do not do training, but they're going to make them hard coded, which will be the judge of how much that makes sense given the how rapidly models improve. But there is a business case there because the hard coded chips, they can perform literally a thousand to 10,000 times faster.

[00:19:25] Nisten: So

[00:19:26] Alex Volkov: you say hard coded, is that one of those, like, transformer specific chips you mean?

[00:19:33] Nisten: No, the entire weights are etched into the chip and you cannot change them. So the benefit of this is that you can get up to a thousand to ten thousand times faster inference. So we might end up with a case where, according to calculations from what Sam Altman said on how much ChatGPT serves in a single day, which is a hundred billion tokens, and that works out to about 1.4 million tokens per second.

[00:20:02] Nisten: We might very soon, like in a year or two or sooner, be in a spot where we have this company's using 60 nanometer chips. We might have a single chip pull the entire token per second performance of all of global ChatGPT use. I don't know if that includes enterprise use, but that's how fast things are accelerating.

[00:20:29] Nisten: So that's the, that's the benefit of, uh, yeah, that's the benefit of going with a hard coded chip. So yeah, call, uh, inference costs are, um, are dropping in that

[00:20:43] Alex Volkov: You also mentioned George Hotz and, uh, he also went on a, on a, on a rant this week again. And again, I think, do you guys see this? Um, the CEO of AMD that doesn't use Twitter that much. But she replied to one of him, uh, one of his demands, I think, live demands, and said, Hey, uh, we have a team dedicated working on this.

[00:21:05] Alex Volkov: And then we're gonna actually make some changes in order to get this through. So, I love it how, uh, George Hotz, um, folks probably familiar with George Hotz in the audience, um, should we do a brief, a brief recap of George Hotz? The guy who hacked the first iPhone, the first PlayStation, then, uh, built a startup called Comma.ai

[00:21:25] Alex Volkov: to compete with autonomous driving, and now is building tiny, uh, we mentioned tiny boxes ready to ship, Nisten, last time, and I think that paused because they said, hey, well, we don't have enough of the open sourcing of the internal stack of AMD. Which led the CEO of AMD, Linda, or Lisa? I'm very bad with names.

[00:21:46] Alex Volkov: I think Linda, to reply and say hey, we have dedicated teams working on this Actually do want to go find this tweet Go ahead Nisten

[00:21:57] Nisten: Yeah, so there has been a big misconception in the software industry that, um, a lot of the, the code monkey work is something that, you know, you just hire someone to, like, clean your toilets and, and do it. But, in fact, the reason that NVIDIA has a 2 trillion valuation, and will beat Saudi Aramco, is because their toilets are a lot cleaner in terms of the software.

[00:22:27] Nisten: So, the CUDA software is a lot more workable, and you can do stuff with it, and it doesn't have the bugs. So, in essence, what George Hotz is doing by pushing to open source some key parts, which some people might freak out that China might steal them, but they've already stolen everything. So, it really doesn't, doesn't matter that they're very small hardware parts, but they make a huge difference in developers being able to use that software, and those parts are buggy.

[00:22:56] Nisten: So, in essence, like, George Hotz, with this stupid CodeMonkey fix, might double or triple AMD's stock

[00:23:07] Alex Volkov: Yeah,

[00:23:08] Nisten: Just because he's getting in there, and he's cleaning that crap code out.

[00:23:14] Alex Volkov: and he's popular enough to pull attention from the CEO of this company to actually come and react and, you know. One of the reasons I love X is that I think, um, uh, she retweeted their official tweet. I think there's more folks commenting on and reacting to her, um, comment, and that's on top of the space now, uh, than the actual kinda tweet itself.

[00:23:37] Alex Volkov: Which is, I think, a good type of ratio, or ratio, yeah. I think, uh, more hardware news, I think we're satisfied with. Oh, yeah, yeah. The, the, the only other hardware news related to this, 'cause Nisten, I think you mentioned Saudi Aramco. Uh, we chatted with the Groq folks, Groq with a Q, not with a K. The, the, uh, LPU chip.

[00:23:58] Alex Volkov: And they're like super fast, uh, inference speed, and I think this week they showed that they have a collaboration with, I think, said Saudi Aramco, um, about bringing AI. Um, and I saw a few, a few folks post about this and, um, if that's of interest to you, we had a full conversation with the Groq team. They also, they also, um, released, kind of, uh, they had a waitlist and many, many people, I think the waitlist jumped after we chatted with them at the peak of their very viral week, which started with Matt Shumer going, going off.

[00:24:32] Alex Volkov: Uh, and then I think they said something about, they had like 50 or a hundred waitlist signups before this. And then the week after they had like 3,600 a day or something like this. So they revamped the whole system. And now, you can actually sign up with a self-serve portal to Groq, and uh, let me see if I can find this tweet for you.

[00:24:55] Alex Volkov: So you can actually now go and sign up, um, to Groq yourself, they have a nice console, very reminiscent of, um, of every other, like, console out there. You can create an API key, very simple, so no longer like a manually, manual approval of, um, Groq. I can't find this tweet though, so give me, give me just a second.

[00:25:22] Alex Volkov: So, yeah, they, they're, uh, collaborating with, with Saudi Aramco. Go ahead Nisten, real quick.

[00:25:28] Nisten: Uh, yeah, just really quickly, the part that I missed was that, uh, the fix that George Hotz is doing for AMD, that's to enable distributed training. Because they cannot distribute training across GPUs because it crashes. So it's pretty important. Uh, yeah, and those are my comments on that.

[00:25:48] Alex Volkov: Awesome. Okay, so I, I found the tweet. Uh, so if, if you follow this tweet, the, the kind of the, the quoted tweet there is, uh, getting you to the Groq console. You get like two weeks for free and you get the API access to this like incredibly fast inference, inference machine from Groq.

[00:26:05] Nisten: I think Far El and Yam wanted to say something on it.

[00:26:10] Alex Volkov: Yeah, go ahead.

[00:26:11] Yam Peleg: Yeah, I got a lot of technical issues. So if you can go before me, I'll try to

[00:26:17] Vik: fix it.

[00:26:19] Alex Volkov: You're coming through finally, loud and clear. Far El, if you wanted to comment, go ahead, man.

[00:26:30] Alex Volkov: Alright, um, looks like Far El is also, um, not available. Okay, I think we're moving

[00:26:38] Vik: touch on this for a sec. Um, so Groq has a white paper out about how they've designed their chips and it's super interesting. I'd strongly recommend everyone go read it. Uh, they've basically from the ground up rethought how, uh, inference oriented compute should work. It's a fascinating read and kind of surprising that they're sharing all of those details.

[00:27:00] Vik: One would think they'd keep it proprietary.

[00:27:05] Alex Volkov: Yeah, we had a full conversation with them. It is fascinating. Again, you know, for, for the level of discussion that we have here, um, we, you know, honestly, we couldn't dive like super, super deep, but I've played with it, and the demos I was able to do, uh, Vik, I don't know if you had the chance to see, uh, they're only possible with almost instant, uh, speed.

[00:27:28] Alex Volkov: You know, guys, what, like, even though I love the Groq team, and we're collaborating with them, we're gonna do some stuff with them as well, um, it turns out that for some use cases, inference speed, like a lot of inference speed on big documents, and I think that's what Groq is like definitely incredible with.

[00:27:49] Alex Volkov: You take Mixtral and you dump a bunch of tokens in, and then you get like a super fast reply. So I was actually able to get a transcript in there for all of ThursdAI, and to get chapters within less than like 3 to 5 seconds, which is ridiculous. For the demo that I built, I actually didn't need inference speed.

[00:28:09] Alex Volkov: I did need inference speed, but not as much as I needed a faster response on smaller kind of prompts multiple times. And I noticed that even though their inference speed is incredible, their latency is not great, probably because they're still fairly young in this. And I went and looked, and Together also offers Mixtral over API.

[00:28:31] Alex Volkov: Not Together, sorry. Together also does this, but specifically Perplexity. If you use Perplexity for search, you may not know that they also have an API that you can use, and they serve Mixtral and Mistral, and I think some other open source models and some of theirs. Um, and they keep improving their scores there, and specifically they're now up to 200 tokens per second for Mixtral and Mistral, which is impressive.

[00:28:56] Alex Volkov: And, you know, um, they don't have custom hardware, and they're getting 200 tokens per second, which is ridiculous. But what I notice is Perplexity's web engineers, because they're now rumored to be a unicorn. I don't know, that's a rumor, so that's not confirmed. But their web engineers are really top notch.

[00:29:16] Alex Volkov: And so it turns out that if I use Perplexity's API for Mixtral, I get fewer tokens per second. So I get less than half, right? So Groq is at around 500, um, Perplexity is around 200. But I actually get better performance because I need kind of low latency on the request itself and Perplexity is better at this.
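The trade-off described here (lower tokens per second, but better end-to-end performance on many small requests) can be made concrete with a minimal sketch. The throughput figures of roughly 500 and 200 tokens per second are the ones quoted in the conversation; the per-request latency values are hypothetical placeholders chosen only for illustration, not measurements of any provider.

```python
def response_time(latency_s: float, output_tokens: int, tokens_per_second: float) -> float:
    """Total wall-clock time: fixed per-request latency plus token generation time."""
    return latency_s + output_tokens / tokens_per_second


# Throughputs are the rough numbers mentioned in the conversation.
# The latency values are HYPOTHETICAL, picked only to illustrate the effect.
HIGH_THROUGHPUT = {"latency_s": 0.8, "tokens_per_second": 500.0}
LOW_LATENCY = {"latency_s": 0.2, "tokens_per_second": 200.0}


def compare(output_tokens: int) -> tuple[float, float]:
    """Return (high-throughput time, low-latency time) for one request."""
    fast_tokens = response_time(
        HIGH_THROUGHPUT["latency_s"], output_tokens, HIGH_THROUGHPUT["tokens_per_second"]
    )
    fast_start = response_time(
        LOW_LATENCY["latency_s"], output_tokens, LOW_LATENCY["tokens_per_second"]
    )
    return fast_tokens, fast_start


if __name__ == "__main__":
    for n in (50, 2000):
        a, b = compare(n)
        print(f"{n} output tokens: high-throughput={a:.2f}s, low-latency={b:.2f}s")
```

Under these assumed latencies, short replies finish sooner on the low-latency service and long replies finish sooner on the high-throughput one, which matches the experience described for a demo that sends many small prompts.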

[00:29:36] Alex Volkov: Um, obviously something Groq can and will fix. And also the stuff that the Groq team told us were like, it's only, they're only scratching the surface. And Nisten, you mentioned something with them in the conversation that I wanted to repeat, which is that they're also working on figuring out the input latency of how fast the model not just spits out tokens, but processes the whole prompt input, which is a big deal, especially for long context prompts.

[00:30:00] Alex Volkov: And they said that they're looking at this and they're gonna release something soon.

[00:30:07] Nisten: Yeah, that's something that the NVIDIA cards excel at, and something that's holding back CPU based inference, because the prompt evaluation is, is, is slow. So, yes, it's not an easy problem to solve, but their chip is already so fast that the 3 to 1 ratio does not hurt them as much. Whereas with NVIDIA, the chips are slower and stuff, but they have like a 10 to 1 ratio, so if you're running at 100 TPS, your prompt eval is going to be like over, over a thousand.

[00:30:42] Nisten: So it's going to read. If you dump in like 10,000 tokens, it's going to read them in 10 seconds or less. Usually it's a few thousand with NVIDIA, but I'm not sure actually, because when you dump in a huge amount of text in Groq, it does not take multiple seconds to evaluate it. It's like instant,

[00:31:04] Alex Volkov: It's quite, it's quite fast, yeah.

[00:31:06] Nisten: yeah, so I'm not too sure that that needs some proper benchmarking to say for sure.
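The prompt-evaluation arithmetic in this exchange can be written out as a small sketch. It assumes the simple model used in the conversation, where prompt-evaluation speed is the generation speed multiplied by a fixed ratio; the function name is hypothetical, and real hardware would need the benchmarking Nisten mentions.

```python
def prompt_eval_seconds(prompt_tokens: int, generation_tps: float, prefill_ratio: float) -> float:
    """Time to read a prompt, assuming prefill speed = generation speed * ratio."""
    prefill_tps = generation_tps * prefill_ratio
    return prompt_tokens / prefill_tps


if __name__ == "__main__":
    # The example from the conversation: 100 tokens/s generation with a 10-to-1
    # ratio gives 1,000 tokens/s of prompt evaluation, so 10,000 tokens take 10 s.
    print(prompt_eval_seconds(10_000, generation_tps=100, prefill_ratio=10))
```

The same function can be called with other ratios and speeds, but any such numbers are assumptions until they are measured.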

[00:31:11] Alex Volkov: Yep. So, uh, speaking of Groq, let's, let's talk about the other Grok, but before that, you guys want to acknowledge, like, what's, what's going on with the rumors? Far El, you, you just texted something. I'm seeing folks post something. Uh, what's, what's going on under, under the current of, of the Twittersphere?

[00:31:27] Alex Volkov: Um,

[00:31:28] Far El: Just, just speculation at this point, but, uh, you know, you know, those, uh, those people that, uh, that, uh, leak, you know, uh, stuff about OpenAI and all these AI companies, and most of the time, some of them are, are right. Uh, of course we don't see what they don't delete,

[00:31:49] Alex Volkov: yeah.

[00:31:50] Far El: uh, uh, yeah, like some of them are saying right now that, uh, there's like a rumor that GPT 5 is dropping.

[00:31:57] Far El: That GPT

[00:31:58] Alex Volkov: Say, say this again slower, because

[00:32:01] Far El: 5 is dropping, that

[00:32:02] Alex Volkov: there is a rumor that GPT 5 is dropping today. Wow. All right. Um, yeah. That's, that's quite, and I've seen this from like several folks, but

[00:32:11] Far El: Could be complete bullshit, right?

[00:32:12] Yam Peleg: But yeah.

[00:32:14] Alex Volkov: well, I'm ready with my button. I'm just saying like, let's acknowledge that there's an undercurrent of discussions right now with several folks who are doing the leaking.

[00:32:22] Alex Volkov: Um, and then if this drops, obviously, obviously we're going to do an emergency, uh, and convert the whole space. I will say this, GPT-4 was released almost a year ago, like less than a week short of a year ago, March 14th. Um, Claude, I actually don't remember if Claude 1 or Claude 2. I think it was Claude 1 that released the same day, and people didn't even notice because GPT-4 took, took the whole thing.

[00:32:52] Alex Volkov: Um, and now like Claude releases their, um, which we're gonna talk about, so I won't be surprised, but let's talk about some other stuff that OpenAI is in the news for. And then, and then if, if anything happens, I think we all have the same, uh, the same profiles on X, uh, on notification. So we'll get the news as it comes up.

[00:33:13] Alex Volkov: And we love breaking news here in, in, in, in ThursdAI. Okay,

[00:33:17] Nisten: Yeah, for sure.

[00:33:18] Alex Volkov: Um, let's [00:33:20] move on. Let's move on from open source. So, so I think we've covered a few open source, I will just mention briefly that we didn't cover this, um, the, the folks, uh, from Yi, uh, 01AI, 01AI is a Chinese company, uh, they released the small version of Yi, and we've talked about Yi 34B multiple times before, there's a, a great fine tune from Nous, uh, they released a 9, 9 billion parameter version of Yi, which, uh, they trained for a long time, looks like, and, um, they showed some benchmarks, and it's very, very interesting how confusing everything is right now, because even, you know, even Gemma is not really 7 billion parameters.

[00:33:58] Alex Volkov: Yeah, we talked about this, right? But then they now compare, they say in the same category broadly, and they now compare like Yi 9 billion parameters to Mistral 7 billion to SOLAR 10.7 billion. So I'm not sure like what this category is considered, but maybe folks here on stage can help me like figure out what this category is considered.

[00:34:19] Alex Volkov: But Yi is fairly performant on top of Mistral 7B, and I think it's still one of those models that you can run. I think, if anything, comparing this to SOLAR, 10 billion parameters, we've talked about SOLAR multiple times, from the Korean company, I think. Yi is very performant, and the 34 billion parameter model of it was very good, and many folks really, really did some fine tunes of it.

[00:34:45] Alex Volkov: So, asking the fine tuner folks here if you had a chance to look at it, and if not, is this something interesting? It looks like, unfortunately, Yam is having a lot of like X problems, uh, but once you come up, we're going to talk about the Hebrew GPT as well. Um,

[00:35:02] Far El: What I do find interesting is, uh, how, yeah, like the, the, the broad evaluation spectrum that a lot of these models are, are comparing themselves to now, uh, and, and we're going to see more of these, uh, going forward, like, uh, I've seen early, uh, private research stuff, but like I feel like the category is no longer just compare 7B to 7B. It's, it's just expanded to like sub-10B, right?

[00:35:27] Far El: Like that's pretty much what it is like those those numbers even from players like Google are very You know, um, like it, it just doesn't feel as rigid as it used to be, but also like we should keep in mind that not all parameters are the same, right? So, like, uh, like we've seen with certain MOE architectures.

[00:35:51] Alex Volkov: Yeah, that's true. And, um, and I will say it's, uh, it looks like there's an art to training these models and some, some amount of art to also, uh, cherry pick which metrics you're, you're testing and against which models and which category you're placing your model in as well. Um, and, and again, this was released like so recently, I think yesterday, so definitely folks didn't have a chance to try this, but Yi, the, the other models of theirs were trained and performing very well, so, um, we're gonna be very excited to see if the fine-tuning folks are jumping on this, uh, 9 billion parameter, and, and it performs better than, I think, Gemma, which is, ahem, the leading one, even though Mistral is still the leading one in our eyes.

[00:36:36] Alex Volkov: Okay, I think this is it in the, um, oh, let's see, a few more details here for Yi, and before I finish, uh, it's trained on 3 trillion tokens, so a lot, uh, it's decent at coding and math, and then it has open access weights, and then bilingual. That's basically what we were able to get, uh, and thanks to the folks at Hugging Face, VB.

[00:36:59] Alex Volkov: I should probably add this as well. I think we're moving on to the main topic, which is the big companies, APIs and LLMs. I think it's, uh, you know what, you know, before this, I would go to vision category because we have Vik here. And, uh, I really want to chat about Moondream 2. So, um, we've talked about Moondream 1, but for folks who weren't with us, Vik, do you mind, uh, unmuting and then doing a little bit of a, of an intro for you as well?

[00:37:26] Alex Volkov: And then we'll talk about what's changed in Moondream.

[00:37:30] Vik: Yep, sounds good. Um, so, uh, Moondream is a small vision language model. Basically a vision language model is, uh, basically it's a language model where you can show it an image, ask it questions. You can ask it to describe the image. And the reason this is useful is not because it unlocks any new capability that people didn't have like five years ago.

[00:37:56] Vik: All the stuff you could do with it, object detection, captioning, etc. It was all possible. The thing that's helpful about models like this is they're a lot easier to use. Whereas historically, if you wanted to do a computer vision task, you'd have to collect a bunch of data, train your own YOLOv7, v8, I think there's a v9 now, model, um, and that usually works super well, but it's, uh, when you're trying to build an app, it's just unnecessary extra work for you, whereas with a general vision language model, similar to how you use ChatGPT with text, you can just ask it questions in natural language, and it makes developing computer vision apps a lot easier.

[00:38:38] Vik: Um, so I released Moondream 1 maybe about a month ago. Um, it's, it's not unique by the way. There's other open source, well, open-ish source vision language models out there today. Uh, but they're all in the 7 billion to 34 billion to 70 billion param range. Uh, Moondream is 1.86 billion params, which makes it very easy to run, um, cheap to run on edge devices, like you literally don't even need a GPU to run it, you can just run it on CPU and get acceptable performance. Um. Yeah, so, Moondream 1 was trained on some datasets that were derived from GPT-4, and so the licensing was, uh, non-commercial. Like, you could use the model, but not commercially. It was research only. For Moondream 2, which I released earlier this week, maybe last week, time's a little bit of a blur, um, I redid the datasets, um, all of the synthetic data used to train it is now generated using Mixtral, uh, and as a result, like, it's all clean.

[00:39:47] Vik: So I was able to license it as Apache 2.0. There's no restrictions on you can use it or

[00:39:53] Alex Volkov: Vik, I have a question real quick. Uh, when you say synthetic data, and we're going to talk about some synthetic data in, in SD3 as well. Um, do you mean captions for images for, for, to train? Like what, what synthetic data are you generating with Mistral? Because Mistral is not multimodal.

[00:40:08] Vik: Yep. Great question. I'm going to post a more detailed breakdown of how I did it, uh, later. But basically to train these visual language models, you need, uh, paired image and text data. And the text needs to be read. You want like a mix of, hey, can you caption this image? Hey, can you caption this image in a lot of detail?

[00:40:29] Vik: Can you answer questions about this image? Um, there's a lot of images available with high quality captioning information, like COCO Captions, whatnot. There's, there's a bunch of datasets. And so you use a model like Mistral to transform it into the types of queries that you want your, um, VLM to be able to answer.

[00:40:51] Vik: Basically you take COCO, for example, COCO Captions information, and have the model convert those image captions into questions and answers about the image.
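As a rough illustration of the idea Vik describes (turning existing human captions into question-and-answer training text with a text-only model), here is a minimal sketch of a prompt builder. The function name and the prompt wording are hypothetical and are not Moondream's actual pipeline; the step that sends the prompt to a language model is deliberately left out.

```python
def build_qa_prompt(captions: list[str], num_pairs: int = 3) -> str:
    """Build a text-only prompt asking a language model to write Q&A pairs
    about an image it cannot see, using human-written captions as the source.

    The wording below is a hypothetical example, not the prompt used for Moondream.
    """
    caption_block = "\n".join(f"- {caption.strip()}" for caption in captions)
    return (
        "Here are human-written captions describing one image:\n"
        f"{caption_block}\n\n"
        f"Write {num_pairs} question-and-answer pairs about the image. "
        "Answer as if you are looking at the image directly, "
        "and do not mention the captions."
    )


if __name__ == "__main__":
    example_captions = [
        "A man petting a dog in a park.",
        "A brown dog sits next to a man on the grass.",
    ]
    print(build_qa_prompt(example_captions, num_pairs=2))
```

The resulting string would be sent to whichever text model generates the synthetic data, and its output paired with the original image to form one training example.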

[00:41:04] Alex Volkov: So how long did it take you to train the second version of Moondream? And, um, what else can we do that the previous one or what else can you do better?

[00:41:14] Vik: It took about a month to get the same level of performance from the new data collection pipeline. One of the things that was really hard was I think when you're generating synthetic data, life is just so much easier when you have a GPT 4 class model. But unfortunately, the terms of use don't allow you to train a competing model and it gets a little iffy.

[00:41:33] Vik: Um, and so just basic things like tone of the response, right? Like if you use Mixtral to generate the [00:41:40] data, your prompt is something like, hey, I'm going to give you five captions for this image, consolidate all the information in it, and generate a caption. But you want it to pretend that it's looking at the image, um, not say something like, hey, based on the five captions that you have provided, there is a dog and a man is petting and whatnot.

[00:41:58] Vik: So. Getting that tone right required a lot of work. Uh, I ended up using DSPy. It's a super cool

[00:42:06] Alex Volkov: Oh,

[00:42:06] Vik: framework for prompt optimization. Um, everyone should check it out. But basically you can do stuff like manually annotate 400 examples and then it uses Optuna to figure out like what's the best chain of thought few shot setup that you can get to optimize performance based on metrics you can define.

[00:42:25] Vik: Uh, but yeah, getting that tone right was a lot of work. The other thing I focused on a ton was reducing hallucinations. Uh, I don't know if anyone's dug into the LLaVA training dataset, but one of the reasons LLaVA style models hallucinate a lot is just because they're trained on bad data. And you'll notice that a lot of hallucinations are oriented around COCO objects, like it tends to hallucinate handbags, ovens, um, people.

[00:42:53] Vik: A lot in images when they're not present, and then coffee cups, very, very common. And that's mostly because of bad object annotations in COCO, so I spent a lot of time filtering those out. Um, currently the benchmarks are slightly better on Moondream 2 than Moondream 1. Um, but qualitatively, if you try it out, the model hallucinates a ton less, and a big part of that was just the data pipeline.

[00:43:15] Alex Volkov: Interesting how that's not part of the benchmarks or evals. Right. Just underlines how, um, how far we still have to go in, in terms of evaluations that, you know, qualitatively you feel that it hallucinates less, uh, but there's not a lot of, uh, benchmarking or evaluation for hallucinations, I guess. Um, and you said this is like,

[00:43:38] Vik: in the long form, right? Like, if you, there's POPE, which asks a bunch of yes, no questions about your image. And so you can use that to measure hallucinations in that sense. But, like, uh, how do you measure hallucinations when you ask the model to describe an image? It gives you a long

[00:43:57] Yam Peleg: form answer.
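The yes/no style of hallucination check mentioned here can be scored with very little code. The sketch below is an assumption-laden illustration, not the scoring code of any specific benchmark: each record says whether an object is really in the image and whether the model answered "yes", and the example records are made up.

```python
def yes_no_hallucination_stats(records: list[tuple[bool, bool]]) -> dict[str, float]:
    """Score yes/no object questions.

    Each record is (object_present, model_said_yes). A hallucination here means
    the model said "yes" for an object that is not in the image.
    """
    absent_answers = [said_yes for present, said_yes in records if not present]
    correct = sum(1 for present, said_yes in records if present == said_yes)
    return {
        "accuracy": correct / len(records),
        "hallucination_rate": (
            sum(absent_answers) / len(absent_answers) if absent_answers else 0.0
        ),
    }


if __name__ == "__main__":
    # Made-up results for four questions such as "Is there a handbag in the image?"
    example_records = [(True, True), (True, True), (False, True), (False, False)]
    print(yes_no_hallucination_stats(example_records))
```

This only covers the short-answer case; as the conversation points out, it does not measure hallucinations in long-form image descriptions.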

[00:44:01] Alex Volkov: That's awesome. Congrats on the work, Vik. Uh, can folks try it right now? You said this is now commercially viable, right? Like, folks can actually use

[00:44:08] Vik: Yep, it's open source. You can build it into your app. Uh, there's a demo on Hugging Face Spaces if you want to try it out before.

[00:44:14] Alex Volkov: Yeah,

[00:44:15] Vik: You start building on it. I'm going to get llama.cpp integration going here this week or early next week. So, uh, that'll unlock getting it into all the standard applications that people use, Ollama, LM Studio, Jan, etc.

[00:44:29] Vik: So it's going to get a lot easier, but the weights are available. The code is available. It's all open source, Apache 2.0. You can use it today.

[00:44:35] Alex Volkov: That's awesome. Vik, congrats on this. What is this, uh, Hugging Face Zero A100 Space thing that you got as well? I was looking at this, I think, did they, like, start giving A100s to demo Spaces now?

[00:44:50] Vik: Uh, yeah, so Zero is kind of like AWS Lambda, but for GPUs. So rather than having a provisioned GPU for your Space, anytime a user comes in, there's a pool of GPUs, and it pulls one and loads your model into it and runs it. Until recently, they had A10Gs, I think, available for this, but they switched to A100s.

[00:45:11] Vik: So, uh, there's a bit of latency if your model hasn't been tried out for a bit while it's loading it onto the GPU. But once it's on the GPU, it's super fast.

[00:45:22] Alex Volkov: Nice. Even for, for a tiny model like this, I wanna say an A100 is probably like just poof and it

[00:45:28] Vik: It's, yeah,

[00:45:31] Alex Volkov: Awesome. Uh, Vik, congrats on this and thanks for sharing with us, and folks, definitely give Vik, uh, a follow for Moondream. When the first one released, I tested this against significantly larger vision models and it performed very well.

[00:45:45] Alex Volkov: Especially now that it's like Apache licensed, you can build it into your own pipelines. Um. And, um, I think the one thing to not miss from what you said is that there are specific vision models like YOLO and different things. And, uh, we have, um, one of the YOLO masters, Skalski, uh, Piotr, is a friend of the pod and he trains these models and he, he has demos and videos of how to actually use them.

[00:46:10] Alex Volkov: Uh, it's more, significantly more complex than using a VLM like Vik said. Um, you have to learn this field, uh, it's like the, the very, like the standard machine learning in vision field as well. Uh, even though those models are tinier and probably run faster, some of them, I think YOLO can probably run in real time.

[00:46:29] Alex Volkov: Um, getting these tiny models, uh, to be able to talk to them, I think is significantly easier for many folks. And, uh, definitely, definitely check it out. Um,

[00:46:39] Vik: Yeah. Just to clarify, um, Moondream is great for vision tasks. If you ask it to write a poem about an image or roast you or something, it's not going to do as well. Because the sole priority I had was like make a model that's really, really good at computer vision. Um, and if you need more advanced reasoning, like you wanted to solve a math problem for you, like you take the outputs from Moondream and feed it into a bigger LLM.

[00:47:03] Vik: But Moondream is going to be great at vision tasks, other stuff, not so much.

[00:47:09] Alex Volkov: Absolutely. And, uh, if folks want to help, uh, the link is in the top of the space. Go into the GitHub, give it a star, and check it out and give, uh, Vik feedback. Um, moving on, uh, Vik, uh, feel free to stick with us and, and chat about the next stuff. Uh, speaking of folks who built and released things, uh, Yam, you have also news of your own, and hopefully, finally, your tech stuff is solved and you're now with us in the space.

[00:47:31] Alex Volkov: So let's do a sound check.

[00:47:34] Yam Peleg: Can you hear me?

[00:47:36] Alex Volkov: Um, you've been, you've been cooking, and we've been, we've been waiting, so you wanna, you wanna tell us the end result of this said cooking?

[00:47:45] Yam Peleg: Yeah, yeah, uh, I've, uh, I've grouped, uh, two different interesting models this week. Um, uh, first one is, uh, a little bit of a surprise to myself as well. Uh, one of the experiments, uh, ended up, uh, being the, the top 7B model on Hugging Face at the moment, Hugging Face leaderboard. Um, uh, I suspect it a little bit, so, uh, take it with a grain of salt.

[00:48:10] Yam Peleg: So, it's under investigation whether or not the model, uh, overfitted the leaderboard. Uh, I think that there's no attempt to overfit the leaderboard, but, um, I'm always, uh, suspicious when something like this happens. Uh, but, uh, yeah, it's out there. Experiment 26 if you are interested in trying it out.

[00:48:29] Yam Peleg: And, uh, maybe further fine tuning, uh, this model or merging with it. It's yours. Um, and another model, which is, uh, the Gemma fine, uh, that, um, the Gemma continuous pretrained that I'm, uh, working on for the past two weeks. Uh. Uh, it had been released, uh, this morning, uh, it's, it's a continuous pre train of, uh, GEMMA and extended from 7b to, uh, 11b, um, and then, uh, continuously pre trained on Hebrew and English multilingual.

[00:49:02] Yam Peleg: Um, there is, there are other tricks that went into, uh, into training this model. Uh, you're more than welcome to read, uh, the write up that I did summarizing the whole thing. Um, but, uh, benchmarks are coming soon, and I think that the model is really, really good, uh, for the Hebrew part, put that aside, but, uh, just on the English part, I used, uh, Cosmopedia from, uh, Hugging Face, the new, new dataset that is a replication of Phi, uh, based on, uh, Mixtral, from Hugging Face, really good dataset, I used it as the English part of the model, and, uh, that's about it, um, it was a long two weeks struggling with, uh, training Gemma, but, uh, it paid off and, uh, the model is yours now, so, uh, enjoy.

[00:49:48] Alex Volkov: let's talk about the struggles with Gemma, um, a little bit more, because definitely you were very, very vocal about this. What changed, like, uh, um, Did they [00:50:00] release anything else, or did the communities, like, figure out, or did you figure out some stuff that you wanna share?

[00:50:04] Yam Peleg: both, both, both. They, uh, first, uh, Gemma was trained, uh, using JAX on TPUs. Uh, makes sense, it's from Google. Um, and, but Google released, uh, two, I think even four different implementations of Gemma. Um, apparently, uh, on the Torch version, there were subtle, tiny details that were different. Um, but they are very hard to detect if you just follow the code.

[00:50:34] Yam Peleg: It's rounding errors, things that are done by default differently between PyTorch and JAX, and those things influence the training, um, just silently. They don't crash your code, but when you train with those things, the model is not 100 percent as it was trained initially. You're basically losing performance.
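One generic way a silent numerical mismatch of this kind can arise is when the same constant is computed in different precisions by default. The sketch below is only an illustration of that effect using the standard library: it rounds a scaling factor to bfloat16 and compares it with the float32 value. The hidden size of 3072 is a value chosen for the example, and nothing here is claimed to be one of the specific differences found in the Gemma implementations.

```python
import math
import struct


def to_float32(value: float) -> float:
    """Round a Python float (float64) to the nearest float32 value."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def to_bfloat16(value: float) -> float:
    """Round to bfloat16 (top 16 bits of float32, round-to-nearest-even).

    Intended for ordinary finite values; NaN and overflow are not handled.
    """
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    rounded = (bits + rounding_bias) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", rounded))[0]


# ILLUSTRATIVE value only: a scale factor of sqrt(hidden_size).
HIDDEN_SIZE = 3072
scale_fp32 = to_float32(math.sqrt(HIDDEN_SIZE))
scale_bf16 = to_bfloat16(math.sqrt(HIDDEN_SIZE))
relative_gap = abs(scale_bf16 - scale_fp32) / scale_fp32

if __name__ == "__main__":
    print(f"float32: {scale_fp32!r}  bfloat16: {scale_bf16!r}  relative gap: {relative_gap:.4%}")
```

Neither value crashes anything, which is the point being made in the conversation: a small constant-factor difference like this applies to every forward pass and only shows up as slightly worse training.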

[00:50:56] Yam Peleg: It's suboptimal. So, uh, it took, I think, two, I think two weeks, and it's still going on, for people to go meticulously through all the details to just clear everything out, um, since many people just felt a little bit confused that the model didn't work that well, even though on, on paper and, and in general, it's, it should be an extremely good model.

[00:51:28] Yam Peleg: It is trained for 6 trillion tokens, which is insane. People just didn't see the performance, the qualitative performance of the model. So it got people suspicious, and people are now investigating. For me, it is what it is. I started the training two weeks ago. So, uh, I, uh, I ended up with this suboptimal training, unfortunately.

[00:51:56] Yam Peleg: But I do continue and I plan to nudge the model a little bit once all the, all the bugs and issues are cleared out. I plan to just take the final architecture, my weights, and just nudge the model a little bit to clear out all the, all the issues and, and get you all a better model. But, uh, yeah. It was a rough, it was a rough two weeks.

[00:52:19] Alex Volkov: Two weeks, um, especially during, during the time Hugging Face went down and, um, you had to check on your other model. That

[00:52:28] Yam Peleg: oh yeah, that was hard. Very, very hard.

[00:52:30] Alex Volkov: We did spend a bunch of quality time together, all of us, while this happened. Uh, so Yam, how can folks, uh, try this out? And, uh, you mentioned something. You also have Hebrew GPT, uh, and this, this model was just trained with the Hebrew stuff, but with less knowledge as well, right?

[00:52:46] Alex Volkov: Can you talk about the difference there?

[00:52:49] Yam Peleg: Yeah, there are two models, uh, one of them is, uh, is called, uh, okay. Hebrew GPT is, is a model that is heavily trained for, uh, three, three, nearly four months straight, uh, on, uh, 300 billion tokens in Hebrew. Uh, it is, it is a heavy project. And, uh, yeah, it was, it was done in the summer, I think. Yeah, in the summer.

[00:53:15] Yam Peleg: Uh, but this one is basically because they have all the data and, and we just, we just detected, because people played with Gemma, and hours after it was launched, people already detected that the tokenizer probably was trained multilingually, without Google, uh, announcing anything about it, because, uh, many different people found out that the model is surprisingly good in, in languages that are not English, even though Google announced that the model is just English pre-trained.

[00:53:47] Yam Peleg: So, uh, just from, from our perspective, you know, me and my buddies, we were looking at this and just thought to ourselves, wait, we have, we have an opportunity here. If there are tokens in the model that are multilingual, and clearly the model has some basis, especially in Hebrew, we can just fine tune it just a bit and get an extremely good model in Hebrew,

[00:54:10] Alex Volkov: So it's missing just data. So it's, it's capable, but it's missing data, basically.

[00:54:16] Yam Peleg: Yep, because it was not specifically trained in Hebrew, it just saw a little bit, but you can clearly see that it has a basis in Hebrew. So what I did, I followed LLaMA Pro, which is, which basically says that you can extend the model, you can just stretch it out, add more layers, and freeze the base model such that you won't get catastrophic forgetting of what the model already learned before.

[00:54:43] Yam Peleg: So you just train the extended blocks. So, I literally just added blocks and trained another language into these blocks only. So, now I have a model that is, that, you know, has the same base scores as before, but also knows another language. So, that's the whole trick of this project, and, uh, it saves a lot of compute, pretty much.

[00:55:08] Vik: Hey, that's super cool. Can you talk a little bit more about, like, how the blocks were arranged?

[00:55:13] Yam Peleg: Yeah, sure. Uh, it is, if you follow the LLaMA Pro paper, they tried different configurations, like a mixture of experts and so on and so forth. They ended up, after experiments, finding that if you just copy a couple of the attention blocks, just like that, just copy them and stretch the model, deepen it, and train only the blocks that you copied, leaving also all the originals in place, that experimentally gets to the best performance, so I did exactly that, I just followed exactly what they said in the paper, and the result, it looks really good.
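The block-expansion recipe described above (copy some blocks, keep every original in place and frozen, train only the copies) can be sketched structurally without any deep learning library. Everything here is a simplified assumption for illustration: the `Block` class, the `group_size` parameter, and the choice to place a copy after every group are hypothetical, and details such as how the copied weights are initialized are omitted.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Block:
    """Stand-in for one transformer block; only the bookkeeping is modeled."""
    name: str
    trainable: bool


def expand_with_copies(blocks: list[Block], group_size: int) -> list[Block]:
    """Deepen a model by inserting a trainable copy after every `group_size` blocks.

    All original blocks stay in place and are frozen, so only the copies
    would receive gradient updates during continued pre-training.
    """
    expanded: list[Block] = []
    for index, block in enumerate(blocks, start=1):
        expanded.append(replace(block, trainable=False))  # freeze the original
        if index % group_size == 0:
            expanded.append(Block(name=f"{block.name}_copy", trainable=True))
    return expanded


if __name__ == "__main__":
    base = [Block(name=f"block_{i}", trainable=True) for i in range(8)]
    deeper = expand_with_copies(base, group_size=4)
    for block in deeper:
        print(block.name, "trainable" if block.trainable else "frozen")
```

In a real framework the same idea would translate to duplicating layer modules and disabling gradients on the originals; the sketch only shows which blocks end up frozen and which end up trainable.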

[00:55:57] Alex Volkov: That's awesome. Um, all right, so folks can check out the, the deeper dive that Yam usually writes up in the tweet that's been above, um, with, with a lot of detail as well, and definitely give Yam a follow because this is not the first time that Yam trains these things and then shares, very verbosely. So Yam, thank you.

[00:56:15] Alex Volkov: Uh, and it's great to see that the Gemma efforts that you have been cooking finally, finally turned into something. And we'll see, we'll see more from this. Uh, I want to acknowledge that we've been here for an hour. There's like one last thing that I want to talk about in open source stuff. And then we should talk about Claude 3 because like it's a big deal.

[00:56:33] Alex Volkov: So unless the rumors about today are true, Claude 3 will still be the biggest deal of the space. So let's quickly talk about this. I want to just, uh, find the, the, the, the thread and then kind of thread the needle. So there's a paper that was released. It's called tinyBenchmarks, uh, evaluating LLMs with fewer examples, from, from folks, uh, fairly familiar folks.

[00:56:54] Alex Volkov: Leshem Choshen is the, the most like standing out name there for me as well. Um, quick and cheap LLM evaluation. And the way I saw this, uh, this paper, is that Jeremy Howard, the same guy from AnswerAI that we've talked about, uh, before, he tweeted about this and says, hey, this looks like a really useful project that we can take, uh, tiny benchmarks and then make them run, uh, on our models significantly faster and spend significantly less GPU.

[00:57:19] Alex Volkov: And then he specifically, uh, Jeremy specifically tagged Far El here with us on stage about his project called Dharma. So Far El, let's talk about Dharma and let's talk about this tiny benchmarks thing and why like smaller benchmarks are important. Uh, and I think I will just say that, uh, the way I learned about this is LDJ showed me.

[00:57:37] Alex Volkov: Um, Weights & Biases. When we did like a Weights & Biases deep dive, he showed me Dharma there and it looked super cool. So let's talk about this just briefly and then we're going to talk about Claude afterwards.

[00:57:48] Far El: Yeah, for sure. Um, so about, like, about six, seven months ago, uh, I released Dharma. Basically, the idea was that we wanted, uh, we found that eval loss alone is not a really good, uh, indicator of model performance, um, throughout the training run. So, specifically within a training run, um, and we were trying to find, um, other [00:58:20] ways of evaluating the models throughout the training run.

[00:58:22] Far El: And, uh, one idea was, you know, let's take a statistically significant sample, uh, or sub sample of, uh, the benchmarks, uh, out there. Uh, MMLU, ARC-C, uh, AGIEval, BIG-bench, and so on. Um, and use those subsets as, um, markers of performance across these different downstream tasks. Of course, you know, like, uh, my, my opinion on benchmarks is that, you know, like, it's, it's a good indicator, but just on MCQ format and so on, so it's not the only way you want to evaluate your model, but, um, it's, uh, it's a really, um, it's a, it's a, it's just added information you can have, um, uh, basically collect the model's performance across different tasks and subjects, essentially quizzing it throughout the training.

[00:59:21] Far El: And the recent paper that Jeremy mentioned, it came out about two weeks ago or something, proves and validates this idea, which is awesome, because it does show that you can actually get a somewhat accurate picture of the performance on these benchmarks from a sample of 100 examples, which is very much in line with what we did with Dharma.
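To make the subsampling idea concrete, here is a minimal sketch of drawing a small, subject-balanced subset from a larger multiple-choice benchmark. It is only an illustration under stated assumptions: the `stratified_subsample` helper, the item fields (`subject`, `question`, `answer`) and the toy data are all hypothetical, and plain stratified random sampling is not necessarily how Dharma or the paper mentioned above selects its examples.

```python
import random
from collections import defaultdict


def stratified_subsample(items, total, seed=0):
    """Pick roughly `total` items, spread evenly across subjects.

    `items` is a list of dicts with a "subject" key (hypothetical schema).
    """
    rng = random.Random(seed)  # fixed seed so every checkpoint sees the same quiz

    # Group the benchmark questions by subject.
    by_subject = defaultdict(list)
    for item in items:
        by_subject[item["subject"]].append(item)

    subjects = sorted(by_subject)
    per_subject = max(1, total // len(subjects))

    sample = []
    for subject in subjects:
        pool = by_subject[subject]
        k = min(per_subject, len(pool))  # never ask for more than the pool holds
        sample.extend(rng.sample(pool, k))
    return sample


# Made-up toy benchmark: 4 subjects x 500 questions each.
benchmark = [
    {"subject": s, "question": f"{s}-q{i}", "answer": "A"}
    for s in ("math", "law", "physics", "history")
    for i in range(500)
]

subset = stratified_subsample(benchmark, total=100)
print(len(subset), "questions kept out of", len(benchmark))
```

Fixing the seed matters here: the same 100 questions get reused at every checkpoint, so changes in the score reflect the model and not a different draw of questions.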

[00:59:51] Far El: Um, so we're actually going to release a repo on GitHub for anyone to make their own Dharma datasets. It's been in the works for a few months, but we got sidetracked. But we're gonna have that in the next few days. It's already on GitHub, but it's just getting polished. So, hopefully anyone can easily make their own eval datasets and run them during their training runs.

[01:00:23] Alex Volkov: I want to stress how big a deal this seemed to me when LDJ showed this to me as well, because in your Weights & Biases dashboard, you can basically look at the loss curve and try to understand, to surmise. Many folks, like you guys and Yam, probably already have the instinct for, oh, something's going wrong with the loss curve.

[01:00:41] Alex Volkov: But then, after the model is finished, many folks only then start doing evaluation. Many folks don't even do evaluations after that. Um, but I think I saw the same thing also with OLMo from the Allen Institute for AI, that they released everything end to end. I think they also had evaluations, I actually don't know if as part of the training run or afterwards, but they definitely had this in the same view.

[01:01:04] Alex Volkov: And then LDJ, when you were showing me Dharma, Dharma actually does a subset of those evaluations, maybe not as precise, right? It's not exactly the same, but you can definitely see from checkpoint to checkpoint, as the model trains, how it could potentially respond on those evals.

[01:01:22] Alex Volkov: And then, um, it just adds a bunch of information for you. Which is, I think, great.

[01:01:30] Far El: Look, even just with training loss and eval loss alone, we can't really tell. There are some things we can grasp, but it's not the full picture. So having this added information from these benchmarks is interesting because, you know, it does add another kind of dimension to the evaluation itself.

[01:01:57] Far El: And then you can break it down by all the different subjects. So I can see if my model is generalizing well across all the different subjects. Sometimes you see, for instance, that the model gets better at math, but then it actually gets worse on, like, law, for instance. So you get all these different kind of tiny markers of whether the model is getting better at specific subjects or not.
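The per-subject breakdown described here can be sketched in a few lines. This is a hedged illustration, not the Dharma code: the function names `accuracy_by_subject` and `regressions`, the `(subject, is_correct)` record format, and the two sets of checkpoint results are all made up for the example.

```python
from collections import defaultdict


def accuracy_by_subject(records):
    """Turn (subject, is_correct) pairs into a {subject: accuracy} dict."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, is_correct in records:
        total[subject] += 1
        correct[subject] += int(is_correct)
    return {subject: correct[subject] / total[subject] for subject in total}


def regressions(before, after, tolerance=0.0):
    """List subjects whose accuracy dropped by more than `tolerance`."""
    return sorted(
        subject
        for subject in before
        if subject in after and before[subject] - after[subject] > tolerance
    )


# Made-up results for two checkpoints of the same training run.
checkpoint_1 = [("math", True), ("math", False), ("law", True), ("law", True)]
checkpoint_2 = [("math", True), ("math", True), ("law", True), ("law", False)]

acc_1 = accuracy_by_subject(checkpoint_1)
acc_2 = accuracy_by_subject(checkpoint_2)
dropped = regressions(acc_1, acc_2)
print("accuracy at checkpoint 1:", acc_1)
print("accuracy at checkpoint 2:", acc_2)
print("subjects that got worse:", dropped)
```

In this toy data the overall accuracy is identical at both checkpoints, which is exactly the case where a single aggregate number hides a math gain paired with a law regression.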

[01:02:29] Far El: Of course, you always have to take into consideration that these are benchmarks in the sense that they're MCQ based. So you do want to go beyond that if you want to get a full picture, but this is a good way to eval your models. Also, with the tool we're releasing, you're going to be able to control the types of subjects that you actually target.

[01:02:59] Far El: Because, you know, not every single training run is the same and you might be trying to achieve something very different than, let's say, a generalized model that's good at benchmarks, right? So with this tool, we're gonna basically allow you to customize those datasets for your training run.

[01:03:22] Alex Volkov: That's awesome. And I should say one thing that I remember is folks do eval on checkpoints, right? The model, as it trains, generates several checkpoints. Uh, the process there is, like, slow. And I think that's the benefit, let's say, from Weights & Biases, which I feel like is a good place to plug as well.

[01:03:39] Alex Volkov: And I think LDJ, you remember, you showed me: otherwise folks will SSH into the machine, download the weights, and start running a separate process. And the importance of tiny benchmarks like Dharma is significantly faster evals. They're able to run probably as part of your training as well, and get exposed in the same dashboard, so you don't have to deal with this, significantly improving everybody's life, which is what we're all about here at Weights & Biases.

[01:04:04] Alex Volkov: So definitely folks, Far El is going to release the Dharma toolkit you called it? What do you call this iteration of Dharma?

[01:04:12] Far El: It's just, uh, the repo is just called Dharma. I'll make a public post on Twitter. It's public right now, the repo, so you can use it. It just needs a bit of polishing, and some features are not fully implemented yet, but everything should be good to go in the next day or so.

[01:04:33] Far El: I'll make a post on my Twitter, so just follow me and you'll hear more about it there. Um, and also in parallel, we're going to release kind of Dharma 2, which is going to basically be a cleaner version of Dharma 1, using this new code. So you can actually just replicate it.

[01:04:56] Far El: We'll have the configs, like, examples, so you can just replicate it for yourself. Um, and yeah, hopefully if anyone wants to contribute to this, there's a lot of different paths we can take to improve this and make this a toolkit for even more than just the downstream benchmarks like MMLU and ARC-C and so on.

[01:05:23] Nisten: Yeah, I've posted, by the way, in the comments to this space and in the Jumbotron, the repo that Far El has up right now. And, uh, yeah, the main technique of it is that while the benchmarks are not great evaluators, they can be very good at telling incremental changes, or if you did something good in the model, you can spot that.

[01:05:47] Nisten: And, uh, with the Dharma technique, you only need to do about a hundred questions instead of running the entire 65,000 question benchmark, and you will get a relatively accurate, but very, very fast eval. So again, it's really good for people doing training and fine tuning.
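As a rough back-of-the-envelope check on "relatively accurate", here is a small sketch of how much statistical noise a 100-question accuracy score carries compared with a 65,000-question one. It assumes the questions are a simple random sample and uses the standard binomial standard error, sqrt(p(1-p)/n); that assumption and the helper names are mine for illustration, not something taken from Dharma or the paper.

```python
import math


def accuracy_standard_error(accuracy, n):
    """Binomial standard error of an accuracy measured on n questions."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n)


def confidence_interval(accuracy, n, z=1.96):
    """Approximate 95% interval (normal approximation), clipped to [0, 1]."""
    half_width = z * accuracy_standard_error(accuracy, n)
    return max(0.0, accuracy - half_width), min(1.0, accuracy + half_width)


# Worst case for noise is an accuracy of 50%.
se_small = accuracy_standard_error(0.5, 100)
se_full = accuracy_standard_error(0.5, 65_000)
low, high = confidence_interval(0.5, 100)

print(f"standard error with 100 questions:    {se_small:.4f}")
print(f"standard error with 65,000 questions: {se_full:.4f}")
print(f"approx. 95% interval at 100 questions: {low:.3f} to {high:.3f}")
```

Under these assumptions a 100-question score is only good to within several points in the worst case, so a point or two of movement between checkpoints can be noise, while a sustained trend across many checkpoints is the more trustworthy signal.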

[01:06:08] Alex Volkov: Alrighty folks, so we're coming up on an hour and a few minutes. Let's reset the space and then start talking about Claude. One second. Let's go.

[01:06:24] Alex Volkov: Hey everyone who recently joined us, we are now at the second hour of ThursdAI, today's March 7th. And the first hour we talked about open source LLMs, we talked about AnswerAI stuff, new techniques of training full huge models on consumer hardware, we even briefly mentioned, um, TinyBox and TinyCorp from George Hotz and AMD's response to it.

[01:06:47] Alex Volkov: And we've talked with two folks here who trained specific models, Vik with Moondream and Yam with Gemma, the Hebrew version as well. And now it's time for us to discuss the big world of big companies who spend millions and billions of dollars on AI. And I think, uh, there's two issues for us to discuss.

[01:07:07] Alex Volkov: We're probably going to start with Claude because it's going to take us a long time, but we will acknowledge, if we don't have time to fully discuss this, that Elon sued OpenAI, and OpenAI responded back. And as part of this response, Ilya was cited. And I don't know if you guys saw this, but in the response from OpenAI to Elon's, uh, things, Ilya Sutskever, the co-founder of OpenAI, previously chief scientist, was

[01:07:33] Alex Volkov: cited signing this, and I don't think somebody would sign in his name, I don't think. LDJ, you have comments on this before we talk about Claude?

[01:07:41] Nisten: I was going to say, I think, uh, unless you guys covered it already about an hour ago, there's some breaking news with Inflection releasing a new model.

[01:07:50] Alex Volkov: Yeah, yeah, so I definitely have this, uh, Inflection released Pi 2.5. We didn't cover this yet, let's cover this as well. And it is breaking news, but you know, I think it's dwarfed compared to Claude. So, this Monday, Anthropic, who we've all but discarded, I think, well, not actually discarded, but I regarded Anthropic as kind of the second best to OpenAI for a long time, especially because of the context windows. They had the biggest context window for a long time. Even with 128,000 tokens in the context window during Dev Day back in December, I want to say November, December.

[01:08:37] Alex Volkov: Um, even then, Claude still had 200,000 tokens. So up until Gemini released their million, et cetera, Claude, Anthropic, was still leading the chart for this. Um, slowly, slowly, they reduced our opportunity to use this, which was kind of annoying. Um, and then they just came out with three new models. So Claude 3 has three new models: Claude Opus, Claude Sonnet, and Claude Haiku.

[01:09:05] Alex Volkov: Haiku they didn't release yet, but they claim that for its speed and cost effectiveness, Haiku will be the fastest, most effective model of its size and ability, but they didn't release Haiku yet. Um, Sonnet is kind of the, I want to say, GPT-3.5 equivalent; they claim it balances intelligence and speed.

[01:09:26] Alex Volkov: Uh, and if you want, like, just speed as well, that's yours. And then Opus is the most intelligent model, setting new standards in AI capabilities. And I love that companies do this, uh, and I think it's kind of OpenAI's fault. Everybody compares themselves to OpenAI's GPT-4 release technical paper, and since then we know definitely that GPT-4 is significantly more performant on many of these benchmarks, but still the big companies say, hey, well, we can only compare ourselves to whatever you released publicly.

[01:09:58] Alex Volkov: And so everybody still compares themselves to, like, GPT-4 a year ago, um, which Opus beats. So, what else is very interesting here? Um, very close to, if not beating, GPT-4 on MMLU and different evaluation benchmarks, a competitive model. Finally, finally, multimodal from Claude. I think this is a big step; most of the top models now are multimodal, which is incredible.

[01:10:27] Alex Volkov: Excuse me.

[01:10:30] Alex Volkov: Uh, LDJ, go ahead. Clear my throat.

[01:10:33] Nisten: Yeah, I think, um, so if you look at the billboard, I just posted a post that shows a couple of polls that have been made, where, you know, a few thousand people have voted in these polls, and it seems like it's about a 5 to 1 ratio: for every one person saying GPT-4 Turbo is better at coding, there's 5 people saying Claude 3 is better at coding.

[01:10:55] Nisten: Um, so Claude 3 is winning 5 to 1 in that, and then another poll of, um, just straight up asking, is Claude 3 Opus better than GPT-4? And Claude 3 also won in that poll, 3 to 1, or sorry, um, 3 to 2.

[01:11:13] Alex Volkov: Felt like the timeline that I follow and the vibes check. And we also had some time, right? Usually these things happen as we speak.

[01:11:22] Nisten: I'm going to make a quick post. Claude 3 just went up on the LMSys arena too.

[01:11:27] Alex Volkov: Oh, yeah? Okay, tell us.

[01:11:29] Nisten: Yeah, it is, uh. Yeah, so here's the thing, just because people voted that way does not mean that's what they voted in double blind tests. In double blind tests, it's third, so it's above, it's better than Gemini Pro, but it's worse than GPT-4-0125.

[01:11:50] Alex Volkov: In the arena metrics, right?

[01:11:52] Nisten: Yeah, in the double blind tests, which are pretty hard to, uh, to beat, you know. Yes, there's a lot of role play type of things that people try to do, and also, like, summarization tasks and stuff in LMSys, and I just know that because I kind of went through their data when they released some of their stats before.

[01:12:14] Nisten: Um, and I think, from what I've gathered of what Claude 3 is specifically really good at, it seems like just high level, graduate level stuff. Like, if you wanted to review your paper or help review some literature for a very deep scientific concept or a PhD topic, it seems like Claude 3 is better at those types of things, and also just better at coding overall, where it seems like for other, maybe more nuanced things, like, you know, summarization or things like that, GPT-4 might be better. Also, I think it's good to keep in, oh, sorry, did I cut out or can you guys still hear me? Okay, you guys still can hear me? Okay. Um, I think it's also good to keep in mind the fact that people are maybe used to the GPT-4 style at this point, because it's one of the most used models for the past year. And so I think that might have something to do with the fact as well that even in the double blind tests, people might just end up preferring the style of the GPT-4 model, even though they don't know it's GPT-4. Like, they're just so used to that style that they end up having a preference for that, even though it's not objectively better, if that makes sense.

[01:13:31] Nisten: And, you know, that might be kind of skewing things a little bit.

[01:13:36] Alex Volkov: So, um, actually go ahead and then we're gonna cover some other stuff that we got from them because we did get a bunch of new