March madness... I know for some folks this means basketball or something, but since this is an AI newsletter, and this March was indeed mad, I am claiming it. This week seemed to get madder from one day to the next. And the AI announcements kept coming throughout the recording; I used the "breaking news" button a few times during this week's show!

This week we covered tons of corporate AI drama in the BigCO segment, from the Inflection → Microsoft move, to the Apple Gemini rumors, to the Nvidia GTC conference, but we also had a bunch of open source to go over, including an exciting glimpse into the 01 from Open Interpreter, whose founder Killian (of the ThursdAI mafia haha) joined to chat about it briefly after an all-nighter release push!

Another returning FOTP (friend of the pod), Matt Shumer, joined as we did a little deep dive into prompting Claude, and into how he went viral (seems to happen a lot to Matt) with a project of his that makes Claude write prompts for itself! Definitely worth a listen, it's the first segment after the TL;DR on the pod 👂 this week.

Btw, did you already check out Fully Connected? It's the annual Weights & Biases conference in SF next month, and tickets are flying. I'm going to be there and will actually run a workshop one day prior, and I would love for you to join as well!

TL;DR of all topics covered:

Open Source LLMs

Big CO LLMs + APIs

Nvidia GTC conference - Blackwell platform, NIMs and GR00T robotics

Jensen interviewed transformers authors

Apple rumored to look at a deal including Gemini

Apple releases a multimodal MM1 paper (X)

Inflection founders leave to head Microsoft AI

Google opens up Gemini 1.5 with 1M context access to all (X)

Vision & Video

NVIDIA + MIT release VILA (13B, 7B and 2.7B) (X, HuggingFace, Paper)

This week's BUZZ

Voice & Audio

Suno V3 launched officially (X, Blog, Play with it)

Distil-whisper-v3 - more accurate, and a 6x faster version of whisper large (X, Code)

AI Art & Diffusion & 3D

Tools & Others

Neuralink interview with the first Human NeuroNaut - Nolan (X)

Lex & Sama released a podcast, barely any news

Matt Shumer releases his Claude Prompt engineer (X, Metaprompt, Matt's Collab)

Open Source LLMs

Xai open sources Grok (X, Blog, HF, Github)

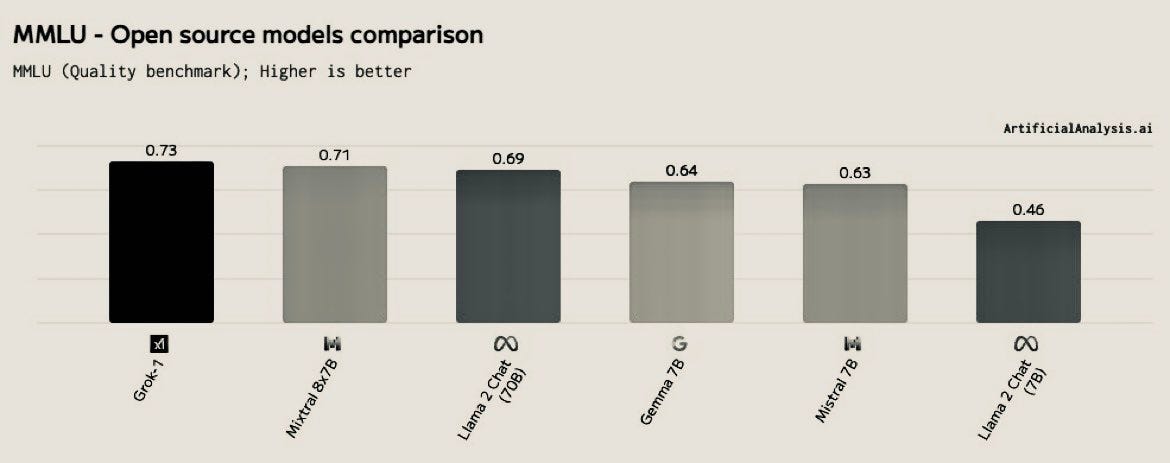

Well, Space Uncle Elon had a huge week, from sending Starship into orbit successfully to open sourcing an LLM for us, and a huge one at that. Grok is a 314B parameter behemoth with a mixture-of-experts architecture: two experts are active at the same time, which works out to roughly 80B active parameters per token.

It was released as a base model, and maybe that's why the initial excitement faded quickly: nobody in the GPU-poor category has the compute to run or fine-tune it!
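To put the "GPU poor" point in numbers, here is a back-of-the-envelope sketch. The 314B total comes from the release; the 25% active-weight share and the 2 bytes per parameter (16-bit weights) are assumptions made for illustration, and the function names are made up for this example.

```python
# Rough sizing for a large mixture-of-experts model.
TOTAL_PARAMS_B = 314     # total parameters, in billions (from the release)
ACTIVE_FRACTION = 0.25   # assumption: share of weights used per token
BYTES_PER_PARAM = 2      # assumption: 16-bit weights, no quantization


def active_params_b(total_b: float, active_fraction: float) -> float:
    """Parameters (in billions) that take part in computing one token."""
    return total_b * active_fraction


def weight_memory_gb(total_b: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in GB.

    Billions of parameters times bytes per parameter gives gigabytes.
    Every expert has to be loaded, even if only some are active per token.
    """
    return total_b * bytes_per_param


active = active_params_b(TOTAL_PARAMS_B, ACTIVE_FRACTION)
memory = weight_memory_gb(TOTAL_PARAMS_B, BYTES_PER_PARAM)

print(f"Active per token: ~{active:.1f}B parameters")
print(f"Weights alone:    ~{memory:.0f} GB")
```

Under these assumptions the weights alone need several 80 GB GPUs before you count activations or fine-tuning state, which is why a base model this size is mostly out of reach for hobbyists.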

In terms of performance, it barely beats out Mixtral while being roughly 7x larger (314B vs. Mixtral's ~47B total parameters), which just shows that... data is important, maybe more important than GitHub stars, as Arthur (CEO of Mistral) helpfully pointed out to Igor (founder of xAI). Still, big props to the team for training and releasing this model under the Apache 2.0 license.

Sakana AI launches 2 new models using evolutionary algo merging

Yeah, that's a mouthful. I'd been following Hardmaru (David Ha) for a while before he joined Sakana, and only when the founder (and a co-author of the Transformer paper), Llion Jones, talked about it on stage at GTC did things click. Sakana means fish in Japanese, and the idea behind this lab is to build things using nature-inspired methods like evolutionary algorithms.

The first thing they open sourced was 2 new SOTA Japanese LLMs that beat significantly larger models, built using model merging (which we covered with Maxime previously, and whom Sakana actually shouted out in their work).

Open Interpreter announces 01 Light - the Linux of AI hardware devices

Breaking news indeed. After we saw the release of the Rabbit R1 go viral in January, Killian (with whom we chatted previously in our most favorited episode of last year) posted that if someone wanted to build an open source version of the R1, it would be super cool and would fit the vision of Open Interpreter very well.

And then MANY people did (more than 200), and the 01 project got started. Fast forward a few months, and we now have a first glimpse of (and the ability to actually pre-order) the 01 Light, their first device: a button that communicates with your computer (and in the future, with their cloud) and interacts with a local agent that runs code and can learn how to do things with a skill library.

It's all very, very exciting. Seeing this idea go from an announcement on X to hundreds of folks collaborating and pushing it into the open has been incredible, and we'll definitely do a deeper dive into the capabilities and the whole project once the launch craziness dies down a bit (Killian joined us at the peak of the launch all-nighter haha).

This is poised to be the first open source AI device, complete with .stl files for 3D printing at home, chip designs, and the ability to run end to end locally on your Mac, and we really applaud the team for this release 🫡

Big CO LLMs + APIs

Nvidia GTC annual conference - New Blackwell platform, NIMs, Robotics and everything AI + a chat with the transformer avengers

This week Nvidia had their annual GTC conference, where Jensen announced a ton of stuff, but the highlights were the new Blackwell chip (the next iteration after the H100) and the GB200 racks with a whopping 720 PFLOPS of compute (to put this number in perspective: the first DGX that Jensen delivered to OpenAI in 2016 was 0.17 petaflops).
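For a sense of scale, the ratio between those two numbers can be worked out in a couple of lines. This is only a headline comparison: the two figures come from the keynote as quoted above and are most likely measured at different numeric precisions, so treat the result as illustrative and not as a like-for-like benchmark. The variable and function names are made up for this sketch.

```python
# Headline compute figures quoted above, both in petaflops.
GB200_RACK_PFLOPS = 720.0   # GB200 rack, as announced at GTC
FIRST_DGX_PFLOPS = 0.17     # first DGX delivered to OpenAI in 2016


def compute_ratio(new_pflops: float, old_pflops: float) -> float:
    """How many times more raw compute the newer system advertises."""
    return new_pflops / old_pflops


ratio = compute_ratio(GB200_RACK_PFLOPS, FIRST_DGX_PFLOPS)
print(f"One GB200 rack is roughly {ratio:,.0f}x the first DGX")
```

That is a jump of more than three orders of magnitude in about eight years.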

They also announced partnerships with pretty much everyone under the sun, a new way to deliver packaged AI experiences called NIMs (which we at Weights & Biases support as well), and a new foundation model for humanoid robots called GR00T, an effort led by Dr. Jim Fan.

Jensen also had the whole cast of original Transformer authors together on stage (and in the green room) for an hour, for the first time, to chat about, well... transformers. I really need to find the whole video and post it because it's hidden inside the Nvidia GTC website, but it was a very fun chat, where the team reminisced about the naming and shared their thoughts on the future of LLMs. They also covered each author's company (all of them have left Google since then) and what they all do. It was a great chat.

Microsoft buys Inflection (almost) and Apple considers buying Gemini

In other huge AI player news, 2 of the 3 founders of Inflection AI left to start Microsoft AI (together with some of the staff), most notably Mustafa Suleyman, who co-founded Inflection, helped raise 1.8B dollars, got up to 22K H100 GPUs, released Inflection 2.5, which comes close to GPT-4, and then decided to leave. Inflection also pivoted away from consumer (Pi was a very nice AI to chat with) into API services, and apparently Microsoft will pay Inflection $650M in the form of a licensing deal.

Meanwhile, there are rumors that Apple is eyeing Gemini to integrate into iOS, which is very weird given the recent bad press about Gemini (unless Apple doesn't want to deal with the same bad press itself?), and it's even weirder given the latest push from Apple into open source.

Folks at Apple this week released a new paper called MM1, outlining a new multimodal model they have trained (but not released), and they show that it beats Gemini on visual understanding.

It was also great to see that the authors of that model shouted out the Weights & Biases crew that helped them through their work on this paper 👏

Nolan - the first NeuralNaut (first human with a Neuralink implanted)

Just as I was summing up the notes for this week, Neuralink pinged that they were going to go live soon, and I tuned in to see a 29-year-old quadriplegic gamer getting interviewed by a Neuralink employee, being very cheerful while also playing a chess game, all with his brain. We've come a really long way since the monkey playing Pong, and Nolan described his experience of using Neuralink to control his Mac cursor as "it's like using The Force". It was all kind of mind-blowing, and even though brain implants are nothing new, the fidelity, the wireless connection and the very quick surgery made this demo feel so nonchalant that Nolan didn't even stop playing chess while being interviewed, probably not realizing that millions of people would be watching.

They have a bunch of ML decoding the signals that Nolan's implant sends from his brain wirelessly. This is very exciting, and Nolan is preparing to go as Professor X from X-Men this Halloween because, well, he is in fact a telekinesis-enabled human. Meanwhile, Elon claimed that their next target is Blindsight, a product aimed at restoring vision (and that it already works on monkeys), presumably via camera input that stimulates the visual cortex.

Back in November 2022, I watched the Neuralink keynote and geeked out so hard about this section, where Dan Adams, one of the neuroscientists at Neuralink, talked about how it's possible to stimulate the visual cortex to generate an image and so address blindness.

Well, this is it folks. We talked about tons of other stuff of course, but these are the main points that made the cut into the newsletter. As always, if you want to support this newsletter/podcast, please share it with friends ❤️ Hope to see you in SF in April (I'll be giving more reminders, don't worry) and see you here next ThursdAI 🫡

P.S. - I said Intel a bunch of times when I meant Nvidia, apologies, didn't notice until after publishing 😅