"...Happy birthday dear ThursdAIiiiiiiii, happy birthday to youuuuuu 🎂"

What a day! Today is π-day (March 14th), 2024. It's an important day, not only because it's the anniversary of GPT-4 and of Claude 1, or even because Starship flew to space, but also because 🥁 it's ThursdAI BirthdAI 🎉

Yeah, you heard that right. Last year, following the GPT-4 release, I hopped into a Twitter space with a few friends and started chatting about AI. While some friends came and went, I never stopped. In fact, I decided to leave my 15-year career in software and focus on AI, learning publicly and sharing my learnings with as many people as possible, and it's been glorious. And so today, I get to celebrate a little 💃

I also get to reminisce about the state of AI exactly a year ago. Context windows were tiny: GPT-4 came out with 8K (we now casually have models with 200K that cost $0.25/1M tokens). GPT-4 also showed unprecedented vision capabilities back then, and now we have 1.3B parameter models with a similar level of visual understanding. Open source was nascent (in fact, llama.cpp only had its first commit 4 days prior to the GPT-4 launch, and Stanford released the first Alpaca finetune of Llama just a day prior).

Hell, even the ChatGPT API only came out a few days before, so there were barely any products built with AI out there. Not to mention that folks were only starting to figure out what vector DBs were, what RAG is, how to prompt, and that it's possible to run these things in a loop and create agents!

Other fields evolved as well, just hit play on this song I generated for ThursdAI with Suno V3 alpha, I can’t stop listening to it and imagining that this was NOT possible even a few months ago

It's all so crazy and happening so fast that annual moments like these present a great opportunity to pause the acceleration for a sec, contextualize it, and bask in the techno-optimist glory of: aren't we lucky to live in these times? I sure am, and for me the ThursdAI birthday gift is being able to share my excitement with all of you!

TL;DR of all topics covered:

Open Source LLMs

Big CO LLMs + APIs

Anthropic releases Claude 3 Haiku (Announcement, Blog)

Cohere CMD+R (Announcement, HF)

This weeks Buzz

Early bird tickets for Fully Connected in SF are flying, come meet the Weights & Biases team. We're also going to be running a workshop a day before, come join us! (X)

Vision & Video

Deepseek VLM 1.3B and 7B (X,Announcement, HF)

Voice & Audio

Made a song with Suno v3 Alpha for ThursdAI, it's a banger (Song)

Hardware & Robotics (New)

Tools & Agents

Devin from Cognition Labs (Announcement, 47 minute demo)

Agents for your house and your Github tasks

Say hello to Devin from Cognition Labs (Announcement, Real world demo)

By far the most excited I've seen my X feed this week was about Cognition Labs' new agent called Devin, which they call the first AI software engineer.

You should really watch the video, and then watch a few other videos, because, well, only a few folks are getting access, and yours truly is not one of them.

It seems like a well-publicized launch, backed by tons of VC folks, and everybody kept highlighting the innovative UI that Devin has. It has a very polished UX/UI/dev experience, with access to a browser (where you can authenticate and it can pick up doing tasks) and a terminal (where you can scroll back and forth in time to see what it did when), but also a chat window, a planning window, and an IDE where it writes code, which you can scrub through as well.

Folks were also going crazy about the math ability and IOI gold medals of the founder (and team). This video went viral, featuring Scott, the founder of Cognition, obliterating this competition in his youth… poor Victoria 😅

Regardless of the team's incredible math abilities, Devin is actually pretty solid, specifically on the UI side. And again, like with the AutoGPT hype of yesteryear, we see the same issues: it's nice, but Cognition's hiring page is still looking for human software engineers. Tune into the last 30 minutes of the pod today, as we had tons of folks discuss the implications of an AI "software engineer" and whether or not coding skills are still required/desired. The short answer is yes: don't skip it, learn to code. Devin is going to be there to assist, but likely will not replace you.

🤖 OpenAI + Figure give GPT-4 hands (or give figure eyes/ears/mouth)

Ok, you really must see this demo before reading the rest of this section. OpenAI recently announced a partnership with Figure, a humanoid robotics company, and just this week they released a demo of this integration.

Using GPT4-Vision and text-to-speech capabilities (with a new, somewhat raspy voice and human-like intonations), the bot listens to the human giving it instructions, sees the world in front of it, and is able to perform tasks that the human has asked it to do via voice. This feels like a significant jump in capabilities for these bots, and while it was a given that the two technologies (actuator-based robotics and LLMs) would meet soon, this shows the first I, Robot-like moment.

It'll still be a while until you can have this one do your dishes or fold your laundry, but it does feel like an eventuality at this point, whereas before it just felt like sci-fi. Kudos on this integration, and I can't wait until Optimus from Tesla adds Grok brains and makes you laugh nervously at its cringe jokes 😅

This weeks Buzz

We're coming to SF in April, our annual Fully Connected conference will feature keynote speakers from foundational AI companies, industry, our founders and tons of Weights & Biases users. We'll also be running a workshop (I'm one of the workshop folks) a day before, so keep an eye on that, it'll be likely included in your ticket (which is still, 50% off for early bird)

Open Source LLMs

Nous Research gives us Tool Use with Hermes 2 Pro (Announcement)

Getting JSON structured output and giving models the ability to respond with not only text, but specific instructions for which functions to run (aka tool use), is paramount for developers. OpenAI first released this back in June, and since then I've been waiting for open source to catch up. And catch up they did, with Nous releasing their first attempt at continued training of the renowned Mistral-based Hermes 7B model, with tool use and structured output!

If you're building agents, or any type of RAG system with additional tools, you will definitely be very happy as well, give Hermes Pro a try!

This one is not a simple download and run, you have to do some coding, and luckily the folks at Nous provided us with plenty of examples in their Github.
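To make "tool use" a bit more concrete, here is a minimal sketch of the developer side of the loop: the model emits a JSON object naming a function and its arguments, and your code parses it and dispatches to a real function. The `<tool_call>` tag wrapper, the `get_stock_price` tool, and the price data are all assumptions made up for illustration, so check the Nous examples for the exact prompt and output format the model expects.

```python
import json
import re

# Hypothetical tool: the name, signature, and data are illustrative only.
def get_stock_price(symbol: str) -> float:
    fake_prices = {"ACME": 123.45}  # made-up data for the sketch
    return fake_prices[symbol]

# Registry mapping tool names (as the model would emit them) to Python callables.
TOOLS = {"get_stock_price": get_stock_price}

# Assumed wrapper format: <tool_call>{...json...}</tool_call>
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)

def run_tool_calls(model_output: str) -> list:
    """Find every tool call in the model's text, run it, and collect results."""
    results = []
    for match in TOOL_CALL_RE.finditer(model_output):
        call = json.loads(match.group(1))
        func = TOOLS.get(call["name"])
        if func is None:
            # The model asked for a tool we never registered.
            results.append({"name": call["name"], "error": "unknown tool"})
            continue
        results.append({"name": call["name"], "result": func(**call["arguments"])})
    return results

if __name__ == "__main__":
    sample = (
        "Let me look that up.\n"
        '<tool_call>\n{"name": "get_stock_price", "arguments": {"symbol": "ACME"}}\n</tool_call>'
    )
    print(run_tool_calls(sample))
```

In a real agent you would feed the collected results back to the model as a follow-up message so it can write the final answer.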

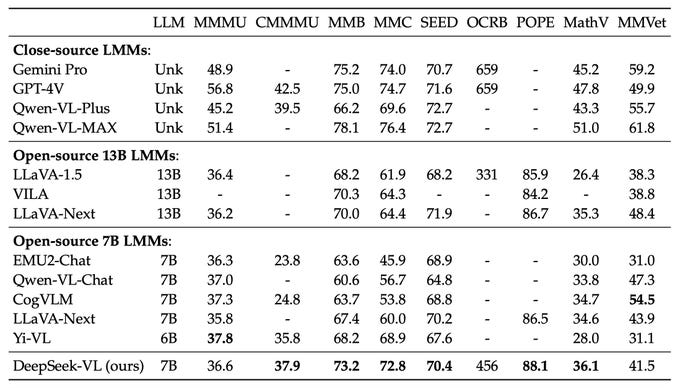

Deepseek gives us a new Vision model - Deepseek VL 1.3B & 7B (Announcement)

Absolutely punching above its weight, this very high quality vision model from the Deepseek folks is just a sign of what's coming: smaller models performing incredibly well on several tasks.

While the top is getting crowded with generic models like Claude, GPT4-V and Gemini, on specific tasks we're getting tiny models that can load fully into memory, run hell fast and perform very well on narrow tasks, even in the browser.

Big CO LLMs + APIs

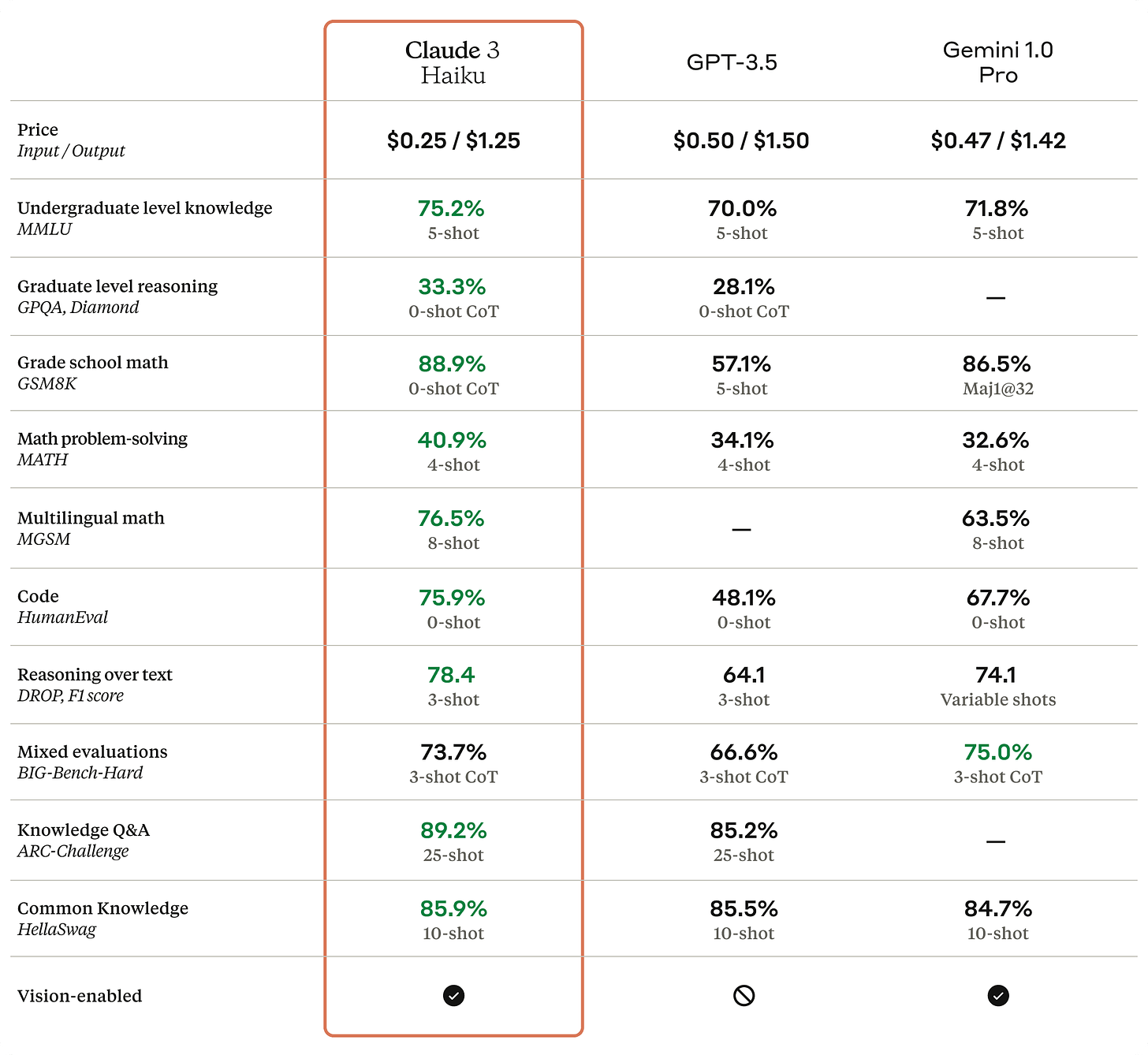

Anthropic gives the smallest/fastest/cheapest Claude 3 - Haiku

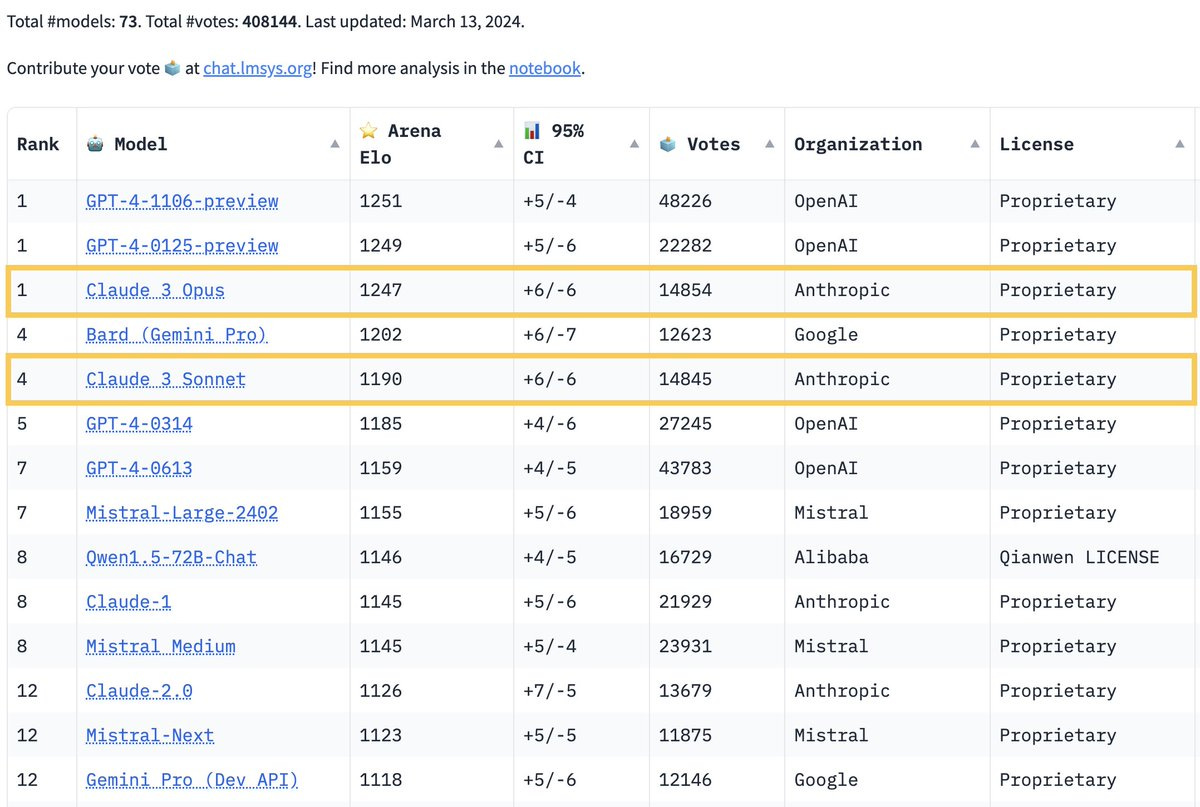

After releasing Opus and Sonnet earlier, Anthropic has reclaimed their throne as the leading AI lab we always knew them to be. Many friends of the pod prefer Opus for many things now, and I keep seeing this sentiment online, folks are even considering cancelling chatGPT for the first time since... well ever?

Meanwhile Sonnet, their middle model, has taken a significant and interesting place near the top of the LMSys Arena human-rated rankings, beating all GPT-4 versions besides the Turbo ones. And now Anthropic has given us Haiku, the smallest of the three Claudes, the fastest, and the cheapest by far.

With a 200K context window and vision capabilities, this model crushes GPT3.5 on many benchmarks and becomes the de-facto cheapest model to run. It only costs $0.25/1M input tokens, which is half the price of GPT3.5, but just look at the performance. One thing to note: Anthropic still doesn't support function calling/tool use.
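To put the price gap in numbers, here is a small back-of-the-envelope sketch. It uses only the input-token prices quoted in this issue ($0.25 per million for Haiku, and the $0.50 per million for GPT-3.5 mentioned on the pod); output-token pricing is not covered here, and the function name is just an illustrative choice.

```python
# Input-token prices as quoted in this issue (USD per 1M tokens).
HAIKU_INPUT_USD_PER_MTOK = 0.25
GPT35_INPUT_USD_PER_MTOK = 0.50

def input_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of sending `tokens` input tokens at a given per-million price."""
    return tokens / 1_000_000 * usd_per_million

if __name__ == "__main__":
    full_context = 200_000  # filling Haiku's whole 200K context window once
    print(f"Haiku, one full 200K prompt: ${input_cost_usd(full_context, HAIKU_INPUT_USD_PER_MTOK):.2f}")
    # Compare the two models on the same 1M input tokens.
    ratio = input_cost_usd(1_000_000, GPT35_INPUT_USD_PER_MTOK) / input_cost_usd(
        1_000_000, HAIKU_INPUT_USD_PER_MTOK
    )
    print(f"GPT-3.5 costs {ratio:.0f}x as much per input token")
```

So stuffing the entire 200K window costs about a nickel of input tokens per request, before counting whatever the model generates.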

Cohere releases a new model for retrieval and enterprise purposes - CMD+R

Cohere gets a second wind with a great release + open weights approach, releasing Command+R (pronounced Commander), a model focused on enterprise uses, scalability and tool use. It supports 10 languages and a 128K context window, and beats GPT3.5 and Gemini 1.0 on several tasks, namely on KILT (Knowledge Intensive Language Tasks). The tool use capabilities and the ability to ground information in retrieved context make this a great model specifically for RAG purposes.

The model is 35B and is available under a non-commercial license on the hub.

Together makes inference go BRRR with Sequoia, a new speculative decoding method

Together's Sequoia shows a way to speed up Llama2-70B by 8x and run it on a single consumer GPU.

Being able to run AI locally can mean a few things. One is making smaller models better, and we've seen this again and again over the past year.

Another way is... speculative decoding.

This means lowering the inference TBT (time between tokens) by improving decoding algorithms, using tiny draft models, and methods like offloading. The large model essentially remains the same, while a smaller (draft) model proposes tokens for it to verify, which makes inference much faster. These methods compound, and while Sequoia from Together is new, it shows great promise by speeding up Llama2-70B inference 8x on consumer hardware and up to 3-4x on dedicated hardware.

The compounding of these methods is the most exciting part to me, given that they will likely apply broadly once a new model / architecture comes out (for now, Sequoia only supports Llama).
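For intuition, here is a toy sketch of the basic draft-and-verify idea behind speculative decoding. To be clear about the assumptions: this is the simple linear, greedy variant, not Sequoia's tree-based method, and the "models" are made-up integer functions (`toy_target`, `toy_draft`) standing in for a big model and a tiny draft model. In a real system the verification step is a single batched forward pass of the large model over all drafted positions, which is where the speedup comes from; here we simply count it as one pass.

```python
from typing import Callable, List, Tuple

Token = int
NextToken = Callable[[List[Token]], Token]

def greedy_decode(model: NextToken, prompt: List[Token], max_new: int) -> List[Token]:
    """Plain autoregressive decoding: one model pass per generated token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out

def speculative_decode(
    target: NextToken, draft: NextToken, prompt: List[Token], max_new: int, k: int = 4
) -> Tuple[List[Token], int]:
    """Greedy speculative decoding; returns (tokens, number of target passes)."""
    out = list(prompt)
    target_passes = 0
    while len(out) - len(prompt) < max_new:
        # 1) The cheap draft model guesses the next k tokens one by one.
        proposal: List[Token] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2) The target checks every guess (one batched pass in a real system).
        target_passes += 1
        accepted: List[Token] = []
        for i, tok in enumerate(proposal):
            expected = target(out + proposal[:i])
            if tok == expected:
                accepted.append(tok)
            else:
                accepted.append(expected)  # keep the target's token, drop the rest
                break
        else:
            # Every guess was right, so the same pass yields one bonus token.
            accepted.append(target(out + proposal))
        out.extend(accepted)
    return out[: len(prompt) + max_new], target_passes

# Toy stand-ins (assumptions): the target counts upward modulo 10,
# and the draft agrees with it except right after a 2.
def toy_target(tokens: List[Token]) -> Token:
    return (tokens[-1] + 1) % 10

def toy_draft(tokens: List[Token]) -> Token:
    return 9 if tokens[-1] == 2 else (tokens[-1] + 1) % 10

if __name__ == "__main__":
    tokens, passes = speculative_decode(toy_target, toy_draft, [0], max_new=8)
    print(tokens, "target passes:", passes)
```

The output is identical to what the large model would produce on its own, since every kept token is one the target agrees with; the draft model only changes how many target passes it takes to get there.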

—

Show notes:

Swyx AI news newsletter got a shoutout from Andrej Karpathy

Anthropic metaprompt cookbook from Alex Albert

Folks who participated in the AI Agent discussion, Nisten, Roie Cohen, Junaid Dawud, Anton Osika, Rohan Pandey, Ryan Carson

Thank you for being a subscriber, and for sharing this journey with me, I hope you enjoy both the newsletter format and the podcast 🫡

See you here next week 🎂 I’m going to eat a piece of cake

Full transcript :

[00:00:00] Alex Volkov: Hey, you are on ThursdAI, March 14th, 2024, AKA Pi Day, AKA ThursdAI BirthdAI. I'm sorry for the pun. Uh, I promise I'm gonna, I'm gonna keep it contained as much as I can. My name is Alex Volkov. I'm an AI evangelist with Weights & Biases. Today on the show, a birthday celebration for ThursdAI Twitter Spaces.

[00:00:31] Alex Volkov: That's right. I started recording these exactly a year ago on GPT-4's announcement day, March 14th, 2023. In addition, everything important that happened in the world of AI for the past week that sometimes feels like a year, including open source LLMs, big companies and their APIs, hardware and robotics for the first time, agents, and more.

[00:00:59] Alex Volkov: We've talked about a lot of stuff. But first, as always, a recap of everything we discussed as I recorded it at the end of the show while everything was fresh in my mind after this little birthday song that AI created for us.

[00:01:12]

[00:02:39] Alex Volkov: that this is AI generated? Maybe at the end there it went a little bit off, but holy cow, this is, I really listened to this birthday celebration multiple times after I created it with Suno V3 Alpha. So get ready for AI music everywhere. And now, the recap of everything we talked about for this week.

[00:03:02] Alex Volkov: But definitely, Stick around and listen to the end of the show. And as always, you will have chapters on every podcast platform that you use, especially Apple Podcasts.

[00:03:13] Alex Volkov: And if you do use Apple Podcasts, why not? Give us a thumbs up and like a five star review. That really helps. That's how people discover us, believe it or not. Here's a recap of everything we talked about. And following that, a beautiful in depth conversation with many folks who shared this journey with me and been, in one way or another, the reason I kept going this year for ThursdAI.

[00:03:36] TL;DR - everything we talked about in 10 minutes

[00:03:36] Alex Volkov: Everyone, here's a recap of everything we've talked about on ThursdAI's anniversary for Twitter Spaces, March 14th, 2024, which is also Pi Day, which is also the anniversary of GPT-4, and the anniversary of Claude 1. We spoke about ThursdAI history, we spoke about how we got here, how now it's a podcast.

[00:03:56] Alex Volkov: And in open source, we had Together AI release something called Sequoia speculative decoding. Speculative decoding is not new, but their approach to speculative decoding, called Sequoia, is new. It is able to optimize inference for something like a Llama 70 billion parameter model on consumer hardware, up to 8 to 9 times faster, by just predicting a tree of next tokens and letting the model select between them.

[00:04:20] Alex Volkov: Speculative decoding is an additive technique to improve speed of inference of models. On top of models getting smaller and better, the bigger models are going to get faster on local hardware as well due to something like speculative decoding. It's very exciting to see. Together AI also announced like an extension of the round, and now they're a unicorn and definitely doing incredible things.

[00:04:40] Alex Volkov: We also, in open source, covered that our friends at Nous Research released

[00:04:44] Alex Volkov: Hermes Pro. If you followed us at any point before, you know that Hermes is one of the top finetunes for Mistral 7 billion parameters. This is the Pro version of Mistral 7 billion on top of the Hermes dataset. The Hermes dataset also, by the way, is open and you can go and download and use it. This version, the Pro version, is specifically focused on tool use and function calling.

[00:05:07] Alex Volkov: And we also covered what tool use is from the perspective of developers who build RAG apps, for example, or need structured output. This new version supports JSON mode and JSON output, which is a very welcome addition to the world of open source.

[00:05:19] Alex Volkov: It has OpenAI endpoint compatibility, and it's hosted on Fireworks, so you can actually try it out and just swap the OpenAI endpoint with that endpoint and see if your tasks are working with Hermes as well.

[00:05:31] Alex Volkov: On the border between open source LLMs and big company LLMs, we then moved to a conversation about Cohere. Cohere is a company that was co-founded by one of the authors of the original Transformers paper, Aidan Gomez, and some other folks. Incredible company, mostly focused on enterprise and use cases around RAG, retrieval augmented generation.

[00:05:50] Alex Volkov: Cohere had a bunch of models called Reranker and Embedding Models. And now they released something called Command R. And by release, I mean they released it via API, but also they dropped it on Hugging Face with open weights under a non-commercial license. So you'd be able to actually run and use this locally, but you cannot use it commercially yet.

[00:06:06] Alex Volkov: For that, they offer their API, and their API is definitely there. It performs very well on RAG applications and outperforms other scalable models. So it outperforms even Mixtral and Llama 70B. They're not comparing themselves to GPT-4 because this model, the Command R model, is definitely focused on enterprise use cases.

[00:06:25] Alex Volkov: It works very well with their cohere embedding and re rank models in tandem as well, it's focused on tool use. Like previously we said that Technium just added to open source. They're focused on tool use and external tools as well. And their Cohere API has a bunch of external tools that you can plug in into this one, like web search, like stock prices, like a bunch of other things.

[00:06:45] Alex Volkov: Optimized for 10 major languages, which is usually way more than other open models, and trained on 13 more, and has 128k context window.

[00:06:55] Alex Volkov: And in the same area of smaller models, we finally got the small model answer from Anthropic, the folks that just released Claude 3.

[00:07:06] Alex Volkov: Anthropic released the smallest, most performant version of Claude 3, called Haiku. They call it the fastest, most affordable model yet for enterprise applications.

[00:07:15] Alex Volkov: Claude 3 Haiku is 25 cents per million input tokens, where GPT-3.5, which is considered the cheapest one and the most performant one so far, is half a dollar for a million tokens. So it's half the price of GPT-3.5. However, it significantly outperforms GPT-3.5 on every metric that they've added, including HumanEval, which is 75 percent versus GPT-3.5's 48%.

[00:07:39] Alex Volkov: MMLU score is 75. And the kicker here is a 200K context window, like the major Claude Opus and Claude Sonnet. So Haiku has a 200K context window. Imagine a model that is only 25 cents per million tokens on input and also has a 200K context window. And it's available via the API, obviously, or Amazon and Google Cloud as well. And it's vision enabled, so you can actually send images. And we geeked out about how a year ago when we started ThursdAI, one of the reasons why we came to the space was because we were blown away by GPT-4's vision capabilities.

[00:08:14] Alex Volkov: And now we're getting... I'm not gonna say that Haiku is anywhere close to GPT-4 vision wise, but from what I've tested it's very decent. Given the price point, it's incredibly decent. Then I covered that in the Weights & Biases area we're coming to San Francisco. April 18th is our Fully Connected conference, with many big clients of ours coming, foundational model creators, et cetera, coming to speak on the stage.

[00:08:40] Alex Volkov: And we're also going to do a workshop a day before, so April 17th. If you're interested in this, please write to me, I'll definitely tell you when that's up. The tickets are early bird and you're more than welcome to join us in San Francisco. We will be very happy to see you.

[00:08:53] Alex Volkov: If you came from ThursdAI, come and give me a high five. I would love to, to, show my boss that this is actually pulling some folks. But also we covered continued things in ThursdAI around vision and video. So skipping from Weights and Biases stuff, we covered vision and video.

[00:09:06] Alex Volkov: We covered that DeepSeek released DeepSeek VLM, which is a tiny vision model. So again, in the realm of multimodality this year, we're now getting 1.3 billion parameter and 7 billion parameter models that on some tasks come close to GPT-4. It's quite incredible. So DeepSeek, the folks who released DeepSeek Coder before and a very impressive lineup of models, open sourced VLM 1.3 billion and 7 billion.

[00:09:30] Alex Volkov: Incredible, impressive on benchmarks, and the 1.3 billion parameter model is so tiny, you can run this basically offloaded on your CPU. And in that vein, we also covered, briefly, that Transformers.js, from our friend Xenova, is very soon to support WebGPU.

[00:09:47] Alex Volkov: WebGPU is the ability to run these models in your browser in your JavaScript environment on the GPU of your machine, either that's a Mac or a PC. And that's now landed fully in all major browsers right now.

[00:10:00] Alex Volkov: The song that you heard at the beginning of this was made with Suno v3 Alpha, and I did this specifically for ThursdAI. And I'm very impressed that a year after we started all this, we're now getting songs that sound like somebody actually went in the studio and sang it. We then mentioned that in the AI art and diffusion corner, we still don't have Stable Diffusion 3.

[00:10:20] Alex Volkov: We also had another corner today, which is a hardware and robotics corner. And we've covered several very exciting things.

[00:10:28] Alex Volkov: We've covered that Cerebras announced the fastest AI chip on Earth, with 4 trillion transistors and 900,000 AI cores, able to train up to 24... I don't use the words trillion parameters a lot here, but able to train 24 trillion parameter models on a single device. This sounds incredible, and once they put it in production, I think it's going to be a significant boost to the AI scene.

[00:10:52] Alex Volkov: We also covered Extropic. The folks that came from Google X, the secret lab, now announced Extropic. They're the folks behind the e/acc movement as well; that's their company. They're building a TPU, a Thermodynamic Processing Unit. It's a little complex, but basically they want to do natural physical embodiment of probabilistic learning, and they want to be considered the transistor of the AI era.

[00:11:17] Alex Volkov: And if you want to hear more about this, they have the full space Q&A that we'll link in the comments below. And so we covered Cerebras, we covered Extropic in the hardware, and then we've talked about how Figure, the humanoid robot company we covered before, announced a partnership with OpenAI. This week they released a demo video that's unedited, so end to end recorded at 1x speed, of this Figure humanoid robot standing in something that looks like a fake kitchen, and it basically talks to the human in front of it using OpenAI's text to speech technology and vision.

[00:11:52] Alex Volkov: So it actually understands what it sees based on GPT-4 vision, probably a custom version of GPT-4 vision, and also then is able to do some stuff. If you haven't seen this video, I'm going to put it in show notes on thursdai.news. Please feel free to subscribe. The video is mind blowing,

[00:12:07] Alex Volkov: but just the fact that the robot can see, talk about what it sees, and then perform tasks embodied in the real world, I think is a great way to see the future happening right now on Pi Day 2024. And I think this is most of the conversation that we've covered from the news perspective, besides this one last thing, where we covered that Cognition Labs released a video and actually started letting folks in to something they call Devin, the first fully autonomous AI software engineer.

[00:12:35] Alex Volkov: That's the tagline. And obviously, those of us who covered this, we remember the AutoGPT hype from last year. We remember multiple different agentic frameworks since then. Devin seems like it took that to the next level, not only from the perspective of just being able to execute long tasks, but also from the ability of the UI to show you what it does and being autonomous alongside your software engineer.

[00:12:59] Alex Volkov: So Devin actually has access to a full environment, probably with GPUs as well. It has access to a browser that you can log into your stuff with, and then Devin can, on your behalf, use the browser and go and search for some stuff.

[00:13:10] Alex Volkov: And we had one hell of a discussion following the Devin news, and I think it was started by Nisten saying, Hey folks, you have nothing to fear, still learn to code. This news, again, stoked fears of folks saying, Hey, should I even learn to code given these advancements? And we had a great discussion about coding, AI taking over coders, for example, replacing or not replacing, and positivity in the age of AI.

[00:13:34] Alex Volkov: And this discussion, I really suggest you listen, stick to the end of the podcast, if you're listening on the podcast, and listen to the whole discussion, because I think it was a great discussion.

[00:13:43] Alex Volkov: Hey everyone. My name is Alex Volkov. I'm the host of ThursdAI for the past year, which I can now say proudly, and I just want to welcome you, yet again, to another Thursday. Today's a big day, not only because we're celebrating, but also because some of us woke up early to see the largest man made object ever to break through the atmosphere and go to space, which was incredible.

[00:14:24] Alex Volkov: Very tech optimist like, but also today is an anniversary of multiple things. And I think ThursdAI is just one of them. So we're gonna, we're gonna actually talk about this real quick. And I just want to say that ThursdAI I'm very happy to still be here a year after with many people who joined from week to week, from month to month, whatever friendships that were shaped in the ThursdAI community.

[00:14:49] Alex Volkov: And I just want to say I'm very happy that Swyx here is here. Swyx was on the actual first ThursdAI episode a year ago. We jumped in to discuss GPT 4 and I think we're blown away by the vision stuff. So welcome Swyx. How are you? Thanks for waking up early for this.

[00:15:04] Swyx: Hey morning. Yeah, it's a big day. The year has felt like 10 years, but it it's definitely a big day to celebrate.

[00:15:10] Alex Volkov: Absolutely. So thanks for joining us. Swyx, for folks who don't follow for some reason, definitely give Swyx a follow, a host of Latent Space and the founder of Smol. And recently is being followed by Space Daddy as well. And I want to say also

[00:15:24] Swyx: Space Daddy!

[00:15:25] Alex Volkov: And I want to also say hi to Nisten who's been maybe the most consistent co host, Nisten.

[00:15:30] Alex Volkov: Nisten, welcome, joining us all the way from cold Canada, I think after visiting the doctor. How are you, Nisten?

[00:15:38] Nisten: I'm good. I'm good. It's good. I missed one, I

[00:15:42] Alex Volkov: Yeah. . Yes.

[00:15:43] Nisten: was about it. I thought I was gonna miss the day, and I was upset, but no, I

[00:15:48] Alex Volkov: I have a question for you. Was the doctor that you visited a human doctor or an AI doctor?

[00:15:53] Nisten: Yeah, he was human. He hadn't seen me in five years, so I was showing him all this stuff about medicine and the AI. It's funny.

[00:16:00] Alex Volkov: The, and I also wanna acknowledge Farouk, or Far El as we call him. Far El, how are you?

[00:16:07] Nisten: Hey, what's up?

[00:16:09] Alex Volkov: Welcome, welcome to the ThursdAI celebration. Far El is leading the Skunkworks crew and has been doing different incredible things in open source. Very staunch proponent of open source here on the ThursdAI stage. If anything gets released and it doesn't get released with the source, Far El will have words to say about this.

[00:16:25] Alex Volkov: So we're going to cover open source today as well. I also want to acknowledge LDJ. Yesterday I wrote the whole thread and acknowledged many people, and I didn't tag my good friend, Luigi. So LDJ, apologies for that. Welcome brother. How are you doing all the way from Florida?

[00:16:41] LDJ: Yeah, I'm doing good, thanks. I've been late to a lot of the ThursdAIs the past few months, but yeah, it's been good coming on and glad I was able to make it on time for this one.

[00:16:51] Alex Volkov: Yeah, welcome. Welcome. And I also want to acknowledge Roie. Roie is a DevX dev advocate at Pinecone, and Roie has been participating in many spaces. We had a lot of conversations about RAG versus long context. And I remember those well, a lot of like late night conversations as well. Welcome Roie.

[00:17:06] Alex Volkov: How are you?

[00:17:08] Roei Cohen: How's it going, everybody? Congrats, Alex, on this awesome anniversary. Yeah,

[00:17:16] Alex Volkov: there's a bunch of folks I see in the audience who are here from week to week, and it's so great to see the community shape up, and I really couldn't be prouder to be able to just talk about AI with friends and actually make a living out of this.

[00:17:29] Alex Volkov: I would be remiss if I didn't acknowledge that the anniversary today is of the spaces. So we started talking about AI in Twitter Spaces, back then Twitter Spaces, now X Spaces, exactly a year ago on Pi Day 2023. The reason why we started talking about AI is because GPT-4 was announced and Greg Brockman gave the incredible demo where he took a screenshot of a Discord.

[00:17:52] Alex Volkov: So if you remember this, the Discord, the famous Discord where we went to hunt the Discord Snapchat. Mhm.

[00:18:00] Swyx: a screenshot of the, I think the OpenAI Discord and it just transcribed every word in there and described every, like the position of every icon and like the framing of it. It was just like the best vision model we'd ever seen by like by a lot.

[00:18:14] Alex Volkov: By a significant margin, and it understood different like active states, etc. And to get to a point now where we're basically having open source models... We're going to talk about CogVLM today. We're going to talk about how DeepSeek released a new vision model today. To get to the point where we can basically recreate this with a tiny model that runs completely offloaded, it's crazy.

[00:18:36] Alex Volkov: Back then, no vision existed. So we got into space, started geeking out about this, and then we kept going. So this is the anniversary of the Twitter Spaces. The actual podcast, the ThursdAI podcast that I created and encourage you to subscribe to, didn't start until about four or five months afterwards.

[00:18:51] Alex Volkov: After we did this, the community started shaping up and people started coming in and actual guests started to arrive. So I see a few guests that became friends of the pod. So if you guys see Junyang here in the audience, on the technical team at Qwen, there's a great conversation that we had about Qwen and their models as well.

[00:19:10] Alex Volkov: We have a bunch of folks like this from time to time, just join and talk about the stuff they built. And I think this is the best thing that I get from ThursdAI is definitely this is the ability to talk with folks who are experts in their fields. And definitely I'm not an expert in many of the things we cover.

[00:19:25] Alex Volkov: And it's great to have folks from vision and from foundational model training and from open source. And we had a bunch of conversation with Nous Research folks. We're going to cover a few of those today as well, and it has been incredible so far. And so the birthday for the actual podcast, once we started recording and sending a newsletter is coming up in.

[00:19:44] Alex Volkov: in June. Meanwhile, if you want to support the space, if you're here and you're like, Oh, this is great. I learned so much. You're more than welcome to just interact with us. On the bottom right, there's like a little icon there, the message icon that says five. You're more than welcome to just send replies there and boost a little bit of the signal and retweet the space link.

[00:20:02] Alex Volkov: And so I think with this, I think with this... Oh no, a year ago, another thing happened, and it went under the radar because GPT-4 took over all the airwaves. Claude 1 was released exactly a year ago as well. Happy anniversary to the Claude team. They've been killing it lately. The past few weeks have been Anthropic weeks for sure.

[00:20:20] Alex Volkov: And definitely folks are looking at Claude and now considering cancelling their ChatGPT subscription. So that's been great to see. And so a year ago, there was Claude 1, and it was quickly hidden by the news. I also want to shout out that a year ago, open source was almost non-existent.

[00:20:36] Alex Volkov: So a year ago and four days, llama.cpp was first released. Georgi Gerganov released llama.cpp, a way to run the Llama model that was released a month before that on just your local hardware. And, uh, nobody knew about this necessarily until a few days later. Vicuna was just released.

[00:20:56] Alex Volkov: So if you guys remember Vicuna, that was a thing. So all of these things happened in that week. And it feels like this week, or at least the last few weeks, we have similar insanity weeks. Don't you guys think? Especially with Opus and the rumors about GPT-4.

[00:21:11] Alex Volkov: Do you guys remember anything else from that last week before we started like talking about this week?

[00:21:15] Far El: It's hard to remember what happened last week because this week felt like a century alone. That's that, that's the thing. Like we, we've

[00:21:22] Nisten: had so much just in the last week that I don't even remember what happened.

[00:21:25] Alex Volkov: Absolutely. That's why we write down. And honestly, I think Swyx, we talked about this where now that, every ThursdAI is now recapped and you have AI News, Newsletter Daily, or that covers everything. This is just for the historical record, it's very important. Just to be able to go a year back and see where we were.

[00:21:41] Alex Volkov: Because it's really hard to remember even last week, not to mention the last year. So I think it's very important. I do want to shout out, do you still call this Smol Talk? Or do you have AI News?

[00:21:50] Far El: It's just AI News. I'm reserving Smol Talk for the other products that I'm working

[00:21:55] Alex Volkov: I see.

[00:21:56] Far El: yeah. Yeah. AI news's,

[00:21:57] Alex Volkov: so talk to us about the AI news just briefly for folks who are not familiar with that specific newsletter.

[00:22:02] Swyx: Man, this week was fucking, it was crazy. Around December I was very overwhelmed by all the AI Discords, and I knew that all the alpha being dropped in Discords is no longer on Twitter, so I started making this bot to scrape Discords. It was mostly just serving myself, and then I shared it with some friends and it grew to like a couple hundred people, but one of them was Soumith Chintala from the Meta team, like he was the creator of PyTorch and still runs PyTorch.

[00:22:31] Swyx: And last week he shouted it out, saying that it was like the highest leverage 45 minutes every day that he spends reading this thing. Which was like a freaking huge endorsement from someone like him. So I didn't even know he

[00:22:43] Alex Volkov: from the guy who runs PyTorch. It's crazy. And of

[00:22:49] Swyx: so I, yeah, I didn't even know he was subscribed. Honestly, I don't even look at the newsletter subscribers. I think it's really good for mental health to just do your thing, right? Don't even look at who's on the list. And then two days ago, Andrej also, just like unsolicited, completely no notice, no warning, just said, oh yeah, I've been reading this thing for a while.

[00:23:06] Swyx: And I was like, what? And then I went back and looked through the emails and like his email's not there. His first name's not there. I eventually found his email, but yeah, it was just a shock that he was also getting utility out of it. And yeah, so far I think like 12, 13,000 people signed up in the past couple days, and we'll see where this goes. I think a newsletter is not the final form, and also people have legitimate concerns around how much is comfortable being scraped from Discord, what is the sort of privacy expectation on a public Discord that anyone can join, right?

[00:23:39] Swyx: So I'm taking some steps to basically protect people it's purely meant for utility, not for snooping on people's conversations. But I do think like there should be a new sort of Hacker News of AI, quote unquote, that pulls together, Local Llama, Twitter, Discord, YouTube, podcasts, whatever.

[00:23:55] Swyx: And yeah, I think that's what I'm making AI News go towards.

[00:24:02] Alex Volkov: is excited about, Elon is excited about as well. So Elon now is a follower of Latentspace, which is a big moment. I wanted to ask

[00:24:08] Swyx: Yeah, we're trying to, yeah, let's

[00:24:09] Alex Volkov: Local Llama, by the way? Is Local Llama part of the source as well

[00:24:13] Swyx: we the engineer that I'm working with is working on this. So not yet, but we are working on it. And

[00:24:19] Alex Volkov: Alright folks, so if you want not only high signal, but the full firehose of information from Discord and Twitter lists (I think you have a high-signal Twitter list in there as well), definitely subscribe to AI News, previously Smol Talk. The titans of the industry now follow this and are getting insight from it, so you should as well.

[00:24:40] Alex Volkov: But yeah. If that's too much for you, we're here every week to cover pretty much the very most important things.

[00:24:46] Open source - Function Calling model from NousResearch - Hermes Pro

[00:24:46] Alex Volkov: And so I think it's time for us to start with Open Source.

[00:25:09] Alex Volkov: Alright folks, so let's cover some open source stuff. I think the first thing we have to mention is that our friends from Nous Research announced a new model today, or I guess yesterday night. It's called Hermes Pro. I'm not really sure what Pro means here, so we have to ask some folks from Nous Research, but they announced the continued training of their Mistral model, their flagship model, that is fine tuned for tool use and function calling.

[00:25:40] Alex Volkov: And tool use and function calling are maybe, should I say, synonyms of each other at this point? I think it started with function calling from OpenAI that was released in June last year. And they gave us function calling in response to all of us wanting a JSON output. And since then, function calling became something called tool use.

[00:25:59] Alex Volkov: Basically, the ability of these models to not only predict the next word, or autocomplete, but also you could provide schemas for some of your functions, and these models will say, Hey, I actually want more information on this topic or that topic. And so here is what tool you should use.

[00:26:20] Alex Volkov: And you as a developer, you would get that response. You would go call this tool. You would then pass back the data from this tool into the model. And then the model will use its context and the user's request together to come up with an answer. So think about stock price, right? Stock price is something that changes often.

[00:26:37] Alex Volkov: You cannot train the model on stock price because it changes very often. So one example of a tool could be go check the stocks on the stock market or go check Bitcoin price, et cetera. And Mistral on its own is not able to do that. It's very obvious if you ask a Mistral 7B, Hey, what's the price of Bitcoin?

[00:26:55] Alex Volkov: It will give you something that will be 100 percent wrong, a hallucination. So with a model with tool use, if a developer provided the model in advance with tools like, hey, price of Bitcoin, price of stock, et cetera, the model will be able to decide that instead of hallucinating the answer, it'd actually return a reply to the developer and say, hey, go get me this information and then I'll be able to answer the user, right?
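To make the loop described here concrete, below is a minimal sketch in Python. It is not Hermes Pro's or OpenAI's actual API: `fake_model`, `get_price`, the `TOOLS` registry, the message shapes, and the hard-coded quotes are all hypothetical stand-ins, chosen only to show the round trip (model asks for a tool, developer runs it, result goes back to the model).

```python
import json

def get_price(symbol: str) -> float:
    """Hypothetical tool. A real one would call a live market API."""
    fake_quotes = {"BTC": 71000.0, "AAPL": 173.0}  # made-up numbers for the demo
    return fake_quotes[symbol]

# Hypothetical registry: each tool has a callable plus a JSON-schema description
# that would be shown to the model in advance.
TOOLS = {
    "get_price": {
        "function": get_price,
        "schema": {
            "name": "get_price",
            "description": "Get the latest price for a ticker symbol",
            "parameters": {
                "type": "object",
                "properties": {"symbol": {"type": "string"}},
                "required": ["symbol"],
            },
        },
    }
}

def fake_model(messages, tool_schemas):
    """Toy stand-in for an LLM: asks for a tool first, then answers from its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"content": f"The current price is {last['content']}."}
    return {"tool_call": {"name": "get_price", "arguments": json.dumps({"symbol": "BTC"})}}

def run_with_tools(user_prompt, model=fake_model, max_turns=5):
    """Developer-side loop: forward tool calls, feed results back, return the final answer."""
    messages = [{"role": "user", "content": user_prompt}]
    schemas = [tool["schema"] for tool in TOOLS.values()]
    for _ in range(max_turns):
        reply = model(messages, schemas)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # the model answered directly
        args = json.loads(call["arguments"])
        result = TOOLS[call["name"]]["function"](**args)
        messages.append({"role": "tool", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("model never produced a final answer")

print(run_with_tools("What's the price of Bitcoin?"))
```

The point of the design is that the model never executes anything itself. It only emits a structured request, and the developer's code decides whether and how to run it.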

[00:27:20] Alex Volkov: So this is what tool use and function calling basically is. And we haven't had a lot of that in open source. We had a little bit. We've talked about the tool use leaderboard from the folks at Gorilla. I think Stanford? I'm not sure. And now Nous Research released a continued training of their 7B model called Hermes Pro with the same general capabilities.

[00:27:39] Alex Volkov: So that's also very important, right? You keep training a model. You don't want something called catastrophic forgetting. You want the model to perform the same plus additional things as well. And now it's trained on new data with tool use plus JSON mode as well. So not only do we get the ability of the model to reply back and say, Hey, you should use this function.

[00:28:00] Alex Volkov: We also get JSON mode as well. It supports custom Pydantic schemas. Pydantic, for folks who don't write in Python, is a way to define objects in Python in a very clear way. And when you use this and you give the model the schema for your tool use, the model then knows what parameters to call your functions with.

[00:28:18] Alex Volkov: So your job as a developer is basically just to take this call and forward it to any API call that you want. It's available on the hub and it's announced with OpenAI endpoint compatibility, which is great. So I don't think we've seen this from Hermes so far directly. I think everybody who served Nous models gave us OpenAI compatibility, but definitely we know that the industry is coalescing around the same format, which is the OpenAI endpoint, where you can just replace the URL to either OpenRouter or Fireworks or whatever.

[00:28:49] Alex Volkov: I think the chat from Mistral as well is supporting OpenAI compatibility. Great to see that we're getting open source models for tool use because it's very important for agents and it's very important for basically building on top of these LLMs. LDJ, I saw you wave your hand a little bit.
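As a rough illustration of what "just replace the URL" means in practice, here is a small Python sketch that builds (but does not send) the same chat request against two different base URLs. The hosts, the API key, and the model name are placeholders invented for this example. The only thing assumed about real providers is the OpenAI-style `/chat/completions` path and JSON body shape.

```python
import json
import urllib.request

def build_chat_request(base_url, api_key, model, user_message):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

# Placeholder hosts (assumptions, not real providers): only the base URL changes,
# the request body and headers stay identical.
for base in ("https://provider-a.example/v1", "https://provider-b.example/v1"):
    req = build_chat_request(base, "sk-placeholder", "some-model", "Hello")
    print(req.get_method(), req.full_url)
```

Because the body and headers are identical across providers, switching back ends is mostly a configuration change rather than a code change.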

[00:29:07] Alex Volkov: Did you have a chance to look at Hermes Pro in tool use? And what are your general thoughts about open source tool use? Hey,

[00:29:16] LDJ: It's pretty much Hermes, but it also has much improved JSON and function calling abilities and things like that. And I was just waving my hand to describe that, but then you pretty much described it already. So I put my hand back down.

[00:29:29] LDJ: but

[00:29:30] LDJ: Yeah, you got a good description of it.

[00:29:32] LDJ: And I think that pretty much summarizes it.

[00:29:34] Alex Volkov: this is the anniversary of this ThursdAI, Birthday AI. So I did my homework this time. Usually sometimes these things get released like super fast and we actually don't have time to prepare. Comments on general availability of function calling and tool use from the stage before we move on?

[00:29:48] Alex Volkov: Anything that you guys want to shout out specifically that's interesting here?

[00:29:50] Nisten: It's probably the most commercially used part, I think, because every person that's using a 7B, they want a really fast model, and usually they want some kind of JSON returned for commercial uses. There are chat uses as well, but I think, I don't have any data on this, I'm just guessing, that probably the majority of the use is to return JSON.

[00:30:15] Alex Volkov: Yeah. And then there are tools like Instructor from Jason Liu that we've talked about that help you extract structured data from some of these. And those tools require function calling and Pydantic support as well. So it definitely supports more enterprise-y use.

[00:30:29] Alex Volkov: Maybe that's why Technium decided to call this Hermes Pro.

[00:30:32] Together.ai new speculative decoding Sequoia improves AI inference by 9x

[00:30:32] Alex Volkov: Moving on to Together and Sequoia. Together released something called Sequoia, which is speculative decoding. I actually wrote down an explanation of what speculative decoding is, and I'm going to try to run through this. So for folks who are not familiar with speculative decoding, basically, if you think about how we get bigger and better AI to run locally on our machines, one way is open source and smaller models getting better, right?

[00:30:58] Alex Volkov: So that's definitely something we've seen for the past year. We got Llama 70B and then we got 13B, and then different finetunes and different other foundational models started beating the Llama 70B, definitely Llama 1, and now even Llama 2 is getting beaten by tinier models. So the progress of throwing more compute and more techniques is shrinking down these models to us being able to run them locally, just because our hardware is, let's say, limited.

[00:31:23] Alex Volkov: That's one way that we get local open source models. They just keep improving and keep getting trained on. Another way is we're able to serve these bigger, larger models, like 70B models, on consumer GPUs, but then it's super slow. So you wait one minute or two minutes between each token prediction or each word that you see.

[00:31:44] Alex Volkov: So one additional way on top of just getting smaller models faster and smarter is improving inference. So we saw a bunch of attempts this year from folks like Modular releasing their MAX inference system, and improvements obviously in different places like FlashAttention and different inference engines as well.

[00:32:03] Alex Volkov: So we saw all of this and one such way that adds to all of this is called speculative decoding, which improves the inference speed, just inference speed. It basically tries to predict a few next tokens instead of just one, using a smaller model. And the key idea is to construct a tree of speculated future tokens for every potential token in the model's output.

[00:32:26] Alex Volkov: Sometimes they use, I think at least llama.cpp supports speculative decoding, sometimes they use a smaller model. For example, for Llama, they could use a small Llama to help you predict the tokens, and then the larger Llama to actually help select them. And Together, the folks who released a few things that we've covered before (they employ Tri Dao there, who released the Mamba architecture, and the Hyena architecture we've talked about previously, also the FlashAttention chief), they now released their own take on speculative decoding.

[00:32:56] Alex Volkov: They claim that on consumer GPUs, you'd be able to run something up to a 70 billion parameter Llama 2 with an RTX 4090. And they improve your ability to run this incredibly large model by almost 9x. On non-consumer GPUs like A100s, they also go up to 4x faster.

[00:33:17] Alex Volkov: Basically, by just predicting with a smaller model, like building a tree of all possible tokens, and then the larger model actually selects and predicts somehow based on those. They have a bunch of other things like offloading there, and very interesting things, but I just want to say that speculative decoding is a field that is entirely,

[00:33:39] Alex Volkov: how should I say? They only support Llama as far as I saw, but this whole field is entirely additive to the rest of the fields, right? So if speculative decoding helps to run Llama 70B 9x faster, it's probably going to work on smaller models as well. So it's really incredible to see how many different speed improvements we're getting across the board.
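The draft-then-verify idea can be sketched with two toy "models" in Python. This is a simplified linear-chain, greedy variant, not Sequoia's tree-based method, and `target_next` and `draft_next` are made-up deterministic functions standing in for a large and a small model. In a real system the target model checks all drafted tokens in one batched forward pass, so each round costs roughly one large-model call.

```python
def target_next(tokens):
    """Toy 'large' model: the next token is a deterministic function of the context."""
    return (sum(tokens) * 31 + len(tokens)) % 7

def draft_next(tokens):
    """Toy 'small' model: agrees with the target except when len(tokens) is a multiple of 4."""
    guess = target_next(tokens)
    return (guess + 1) % 7 if len(tokens) % 4 == 0 else guess

def greedy_decode(prompt, n_new):
    """Baseline: one large-model call per generated token."""
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(target_next(tokens))
    return tokens

def speculative_decode(prompt, n_new, k=4):
    """Draft k tokens cheaply, keep the prefix the target agrees with, repeat."""
    tokens = list(prompt)
    goal = len(prompt) + n_new
    rounds = 0  # each round stands for one (batched) large-model verification pass
    while len(tokens) < goal:
        rounds += 1
        # 1) The draft model proposes k tokens, each conditioned on its own guesses.
        draft = []
        for _ in range(k):
            draft.append(draft_next(tokens + draft))
        # 2) The target model checks the proposals position by position.
        accepted = []
        for tok in draft:
            expected = target_next(tokens + accepted)
            if tok != expected:
                accepted.append(expected)  # fix the first wrong guess, drop the rest
                break
            accepted.append(tok)
        else:
            # All k guesses matched, so the verification pass yields one extra token.
            accepted.append(target_next(tokens + accepted))
        tokens.extend(accepted)
    return tokens[:goal], rounds

baseline = greedy_decode([1, 2, 3], 20)
fast, rounds = speculative_decode([1, 2, 3], 20)
print(fast == baseline, rounds)
```

The output is identical to plain greedy decoding with the large model. The speedup comes only from needing fewer large-model passes when the small model guesses well, which is why the technique is additive to other optimizations.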

[00:33:58] Alex Volkov: And definitely for the stuff that we all love to talk about, which is open source models running locally, running faster, this is incredible. Yeah, LDJ, go ahead.

[00:34:09] Nisten: Yeah, I just wanted to direct people to I put, I pinned on the billboard a video that TogetherAI put out actually showing side by side Sequoia versus not Sequoia, and yeah, it's pretty insane the amount of speed up you're able to get.

[00:34:21] Alex Volkov: The amount of speed up on the same hardware and on the same model. So the model didn't improve, the hardware didn't improve. All they improved is the ability to help the model predict next tokens and spit them out, which is, I agree with you, insane. And multiple improvements across the board are going to get us where basically we want to go, which is these types of models, these sizes of models, running super fast on local hardware.

[00:34:44] Alex Volkov: They released it on GitHub. Folks can try. It only works for Llama. It doesn't work for any other bigger models yet. Definitely we'll see. I will just say that the thing that I'm most excited about is that all these techniques are, one, additive, and two, they're there for the next big model to get released and just support them.

[00:35:00] Alex Volkov: So when Llama 3 eventually releases, and we know it will release, this speculative decoding will start working, and llama.cpp will already be there. So we saw that the community efforts to support everything were just kicked into gear when Gemma was released.

[00:35:18] Alex Volkov: I'm just very excited that we have all these techniques to throw at the next big open source model. And just the concept of running a 70 billion parameter model is very exciting. Last week we covered something from Jeremy Howard and Jonathan Whitaker and Tim Dettmers, the folks with Answer.AI and QLoRA.

[00:35:36] Alex Volkov: They combined QLoRA with another technique to be able to train 70 billion parameter models, or at least fine tune them, on consumer hardware as well. So for the past week or two we're not only getting news about being able to fine tune 70 billion parameter models on consumer-ish hardware.

[00:35:53] Alex Volkov: We're also getting news about being able to run them with some, uh, some logical number of tokens per second and not a one token every like four minutes or something. Exciting news in open source.

[00:36:04] DeepSeek VLM 1.3 & 7B VLM that punches above its weight

[00:36:04] Alex Volkov: Maybe we'll cover DeepSeek VLM here as it's vision, but yeah, it's definitely released in open source and we don't want to miss it.

[00:36:10] Alex Volkov: So DeepSeek, the folks behind DeepSeek Coder, released DeepSeek VL, state of the art 1.3 billion and 7 billion parameter vision models. If you guys remember last week, we talked to Vikhyat of Moondream2, and that was a tiny vision model. And the whole point, and if you were here in the beginning, when Swyx and I got excited about a year ago about the vision capabilities of GPT 4.

[00:36:34] Alex Volkov: The whole point with these vision models is that their improvement this year definitely felt exponential, because now a model of 1.3 billion parameters is a tiny model that most Macs can now run very easily. And if our friend Xenova is going to join us, very soon with WebGPU we're going to be able to run it fully in the browser.

[00:36:53] Alex Volkov: These models now are able to perform very similarly to what we saw a year ago and just blew our minds, which is OCR without an OCR model built in, understanding objects, understanding graphs and charts, etc. And so it's very interesting that DeepSeek Coder, let me try to share this into the space. Yeah, it should be up there as well.

[00:37:13] Alex Volkov: Very interesting that DeepSeek released this and it is punching above its weight, significantly above its weight, and they actually try to compare themselves to GPT 4 vision, which is quite remarkable, on different tasks like evaluation, multi images, and in some of these tasks they get to half the performance of GPT 4 vision, which is still quite incredible, right?

[00:37:35] Alex Volkov: Like it's a 7 billion parameter model. GPT 4, we still don't know how many parameters it has. We still don't know if GPT 4 vision is a mixture of experts model or not. But DeepSeek Coder is actually coming close to the same performance as GPT 4 on common sense tasks and analysis tasks.

[00:37:55] Nisten: Yeah, and I just want to say llama.cpp supports these models. I don't know about DeepSeek, but they've supported all the other ones. And there's also a LLaVA CLI in there, which you can use with these ones. Also, when you run the server, you can run the models as well.

[00:38:12] Nisten: I think they just need a little bit more compute and engineering, and they can match GPT 4 when it comes to vision. I am quite surprised that it wasn't that big of a deal. In some ways, CogVLM, not DeepSeek, is a lot better than the rest, but it's also a larger model too. And I quickly wanted to say, because you mentioned Xenova before, I don't know if you're going to go more into that, but it turns out that people on the core Chrome team, or Chrome Canary, who implement WebGPU, they listen to ThursdAI, and stuff that we've been saying over the months, they've actually started implementing.

[00:39:00] WebGPU and Int8 support for quantized models

[00:39:00] Nisten: And the most exciting thing that I find now is that they are trying to implement int8 support natively in WebGPU. So that will be another savings of half the memory when you run stuff. Even if you have a GPU that doesn't necessarily support int8, I think there was a method to run at half the memory.

[00:39:23] Nisten: So remember, we went from only supporting float32 a few months back, I think it was September. And you needed a weird version of Canary started with a few commands to support float16. So now they're supporting int8, so the memory requirements of the browser have dropped down by 4x in the last 5, 6 months.
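The 4x figure follows directly from bytes per weight: 4 bytes for float32, 2 for float16, 1 for int8. A back-of-the-envelope sketch in Python, counting weights only (activations, caches, and runtime overhead are ignored, and the 1.3 billion parameter count is just borrowed from the small vision model discussed earlier):

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

def weight_bytes(n_params, dtype):
    """Memory needed just to hold the weights at a given precision."""
    return n_params * BYTES_PER_PARAM[dtype]

n_params = 1_300_000_000  # illustrative size, weights only
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_bytes(n_params, dtype) / 1e9:.1f} GB")
```

Each halving of precision halves the weight memory, so going from float32 to int8 is two halvings, or 4x.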

[00:39:44] Alex Volkov: I remember the days before WebGPU support even landed, like all of Transformers.js. And for folks who are not following us that closely, Xenova is a friend of the pod, the author of Transformers.js. We talked a lot on the pod, he actually announced him joining Hugging Face on the pod as well. And he created

[00:40:04] Alex Volkov: Transformers.js, which is a way in JavaScript, on Node, to run these models via the ONNX platform. And when we talked about this before, the only way to run these models in the browser was fully on CPU. And then we always talked about, okay, WebGPU is going to come at some point. WebGPU is the ability to tap into GPU inference from the browser environment, from the Chrome environment.

[00:40:26] Alex Volkov: And back then, WebGPU was still a spec that was announced, then it was released, and now it's fully supported everywhere. But Nisten, like you're saying, it only supported float32, right? Can you describe this part a little bit more? And now they're listening to us and actually landing support for quantized versions of these models, smaller versions, to be able to run even smaller models that perform the same.

[00:40:47] Alex Volkov: And,

[00:40:47] Nisten: Yeah, so now in Chrome you don't even need Canary, and it will support float16. And by default, if you only have a CPU, stuff can run now on the CPU in float32. But again, the biggest use for this so far has not actually been chatbots. Even though chatbots do work, it has been more the visual stuff and the effects.

[00:41:10] Nisten: All the diffusion-based stuff, some function calling stuff. That's where stuff gets pretty exciting, because it changes what kind of applications you can build. It's, again, it's the front end, like what are you going to put before they reach this big GPU cluster? So this is the part where we're going to see the most changes and progress, in my opinion.

[00:41:34] Nisten: It's going to be the visual stuff, making use of the Transformers.js library.

[00:41:40] Alex Volkov: And one example of that, which Xenova showed on his feed, is real time background removal from video. So you play a video, and then imagine a Chrome extension that's loaded or something, and then you're able to run AI transformer stuff on top of everything that you read or see. That's the kind of stuff we're talking about that, with access to GPU, I think is going to be possible.

[00:42:00] Alex Volkov: So super, super exciting to see how this performs. And obviously this means that the models that we talk about running locally will just get more use, because developers will be able to build them in. This will never get to the point of GPT 4 level for full generality, or I don't want to say never, but it's not quite there in terms of, okay, running a GPT 4 level model fully in your browser.

[00:42:23] Alex Volkov: But for some specific tasks like vision, as we just talked about, on several benchmarks CogVLM and this tiny new release from DeepSeek, DeepSeek VLM, are now getting there, right? So you'd be able to analyze images, for example, you'd be able to do all kinds of things fully in the browser. Without loading, without Python environments, without all of these things.

[00:42:42] Alex Volkov: I think it means a lot for user experience as well. I think we've covered open source a bunch. Do you guys have anything else worth mentioning in open source, briefly, before we move on to the big companies? And we're going to discuss agents as well.

[00:42:57] Cohere releases Command-R - a RAG focused model in API + open weights

[00:42:57] Alex Volkov: Yeah, so Command-R, interestingly, is both in open source and not, so maybe it's a good transition, right? Let's actually do this as a transitional topic. So Cohere, the company that, I don't know, raised a bunch of millions of dollars, and everybody expected it to be like the second Anthropic, and it wasn't for a while.

[00:43:18] Alex Volkov: Now it's back, and very impressively back. And so for a long time, I think Cohere refocused their efforts on something like RAG. They had the Cohere reranking model and they had the embedding models for a while. And I know that we at Weights & Biases use Cohere Reranker for our RAG bot, and that's improving our responses significantly.

[00:43:39] Alex Volkov: Reranking is basically receiving back from your vector database a few responses that are nearest neighbors to what your user has asked for, and then running another process of re-ranking them for higher, how should I say, accuracy. And so Cohere Reranker was for a long time one of the more standard ones that folks use.
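The two-stage shape of retrieve-then-rerank can be shown with a toy Python sketch. Neither scorer below is Cohere's: `cheap_score` is a crude stand-in for vector-database similarity, and `rerank_score` is a made-up stand-in for a reranker model that reads the query and document together. Only the pipeline structure is the point.

```python
def tokenize(text):
    return text.lower().split()

def cheap_score(query, doc):
    """Stage 1 stand-in for vector similarity: fraction of query words found in the doc."""
    q, d = set(tokenize(query)), set(tokenize(doc))
    return len(q & d) / len(q)

def rerank_score(query, doc):
    """Stage 2 stand-in for a reranker: counts query word pairs that appear adjacent, in order."""
    q, d = tokenize(query), tokenize(doc)
    doc_bigrams = set(zip(d, d[1:]))
    return sum(1 for pair in zip(q, q[1:]) if pair in doc_bigrams)

def retrieve_then_rerank(query, docs, k=3, top_n=1):
    """Fetch k rough candidates cheaply, then reorder them with the stricter scorer."""
    candidates = sorted(docs, key=lambda doc: cheap_score(query, doc), reverse=True)[:k]
    return sorted(candidates, key=lambda doc: rerank_score(query, doc), reverse=True)[:top_n]

docs = [
    "rate the learning curve of each model",
    "how to set the learning rate schedule",
    "cooking pasta at home",
    "rate limits and learning resources",
]
print(retrieve_then_rerank("learning rate", docs))
```

Three of the four documents look equally good to the cheap first stage because they contain both query words. The second stage is what separates the one that is actually about a learning rate, which is the accuracy gain described above.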

[00:43:58] Alex Volkov: And now Cohere actually stepped in and said, Hey, we're releasing a new model, it's called Command-R. It's a new generative model from Cohere aimed at production scale tasks, like RAG, Retrieval Augmented Generation, and using external tools and APIs. So here's this word again, external tools and APIs as well.

[00:44:16] Alex Volkov: As we previously discussed, tool use is important. We just got tool use in fully open source, thanks to Nous Research, and I haven't yet tested their tool use, but Cohere is definitely building this model. And I think Swyx, you also saw this release, and we both identified pretty much the same thing, where this is interestingly not getting compared to any GPT 4 or Claude Opus, right?

[00:44:40] Alex Volkov: They're not even trying. They have a very specific use case in mind, and I wanted to hear from you, Swyx, if you have any other comments on that, or how they're positioning themselves and specifically what world they're operating in.

[00:44:54] Swyx: For Command?

[00:44:55] Alex Volkov: For Command-R and Cohere in general, yeah.

[00:44:58] Swyx: simple answer is probably not as good as GPT

[00:45:01] Alex Volkov: Yep.

[00:45:02] Far El: They didn't include it, but I haven't tried it out personally myself. People seem to be talking about it for retrieval and RAG-type use cases, but I can't give my personal endorsement. Just in general, Cohere, I think, they've been more active in sort of enterprise use cases and finetuning, like talking about their finetuning capabilities, or long tail, low resource language use cases maybe. They also released Aya, I think, last month, which some people in the open source community were quite excited about. But yeah, seeing them do such a strong launch for a new model, I think, is like a second wind for Cohere, and I'm excited to see more coming out of them.

[00:45:43] Alex Volkov: Definitely feels like a second wind, and I don't know how much we covered Cohere here before, but the fact that they released the model also in open weights on Hugging Face, I think, gives them a lot of credibility from the community. LDJ, go ahead.

[00:45:58] Nisten: Yeah, I noticed they did actually post some benchmarks on the website comparing to Llama 2 70 billion, Mixtral, and GPT 3.5 Turbo, all comparisons on RAG benchmarks, and Command-R does seem to beat all of those three that I just mentioned. And of course, this is their own reporting, it's probably good to wait for third party benchmarks, but yeah, and it's apparently very good at multilingual abilities as well. I think I saw somebody, one of whose first languages is Portuguese, saying that Command-R was one of the best models that was able to do that actually very fluently and understand the nuances of the language.

[00:46:39] Nisten: So yeah, I think that's really interesting and it might just be really good overall model for open source.

[00:46:45] Alex Volkov: Yeah

[00:46:45] Nisten: I think it is open source, but just, sorry it's open source, but I think it's just non commercial license.

[00:46:51] Alex Volkov: Yeah, so they did an open weights release with a non commercial license. And they did say that if you're an enterprise and you want to build something cool with Command-R, talk to them and they'll figure out something. And Aidan Gomez, the CEO of Cohere, who is one of the founders and one of the authors on the Attention Is All You Need paper, recently unblocked and became friends with Nisten here in the Toronto community.

[00:47:16] Alex Volkov: He mentioned that this model is also optimized for 10 major languages for global business, and pre-trained on 13 more. It has a 128K context window, right? So if you do compare this to GPT 3.5 Turbo or Mixtral, for example (32K context, if I remember), this is 128K, and they specifically focus on speed in addition to everything else, right?

[00:47:39] Alex Volkov: And in RAG systems, in these systems, you may not need a model that's super, super smart. You may need a model that is able to retrieve everything that you want much faster, and significant speed improvements may outweigh smartness on MMLU tasks, right? So I think that's their game, they're playing it, and they compare it, like LDJ said, to 3.5

[00:48:02] Alex Volkov: and not GPT 4 or Opus, and they have results in something called KILT, Knowledge Intensive Language Tasks, and in retrieval and tool use specifically. And so they also have a bunch of stuff on their platform to be able to do tool use, and by tool, like I explained before, I mean go get me some news from the web, for example. So it's really focused on web integration, getting things from the web.

[00:48:22] Alex Volkov: Nisten, did you see the one line they posted where basically they said, hey, here's Perplexity based on Command-R? I think you replied to that. Do you want to cover this briefly? It was really fun as an example.

[00:48:36] Nisten: Yeah, I shared it in the Jumbotron, it's like the third thing. It looks like it's pretty easy to build a RAG pipeline with their code, but not all of it is open. There are a few things there which are unclear, and I haven't built that pipeline yet to say for sure. So I don't want to say anything that's incorrect, but it looks like they've made it really easy to build your own Perplexity in five lines of code.

[00:49:04] Alex Volkov: That was really funny. Like a little dig at Perplexity. Definitely the model is able to do the web search tool. That's what this model specifically excels at, but other tools as well. So shout out to Cohere, second wind, like Swyx said. We'll definitely keep you guys posted when some of us try this.

[00:49:21] Alex Volkov: An open weights model that you can run, but not commercially, though you can use it and train on it, and maybe this will help open source folks as well.

[00:49:29] Anthropic releases Claude Haiku - GPT3.5 competitor

[00:49:29] Alex Volkov: Moving on from Cohere I think in the same battlefield, actually Anthropic. gave us an announcement yesterday, and very smart release schedule from Entropic, I must say, right?

[00:49:40] Alex Volkov: So they announced Claude 3 a few weeks ago, in three versions. There's Opus, which is their flagship, and which many people now prefer over GPT-4, which is quite incredible. It's not taking over on LMSYS yet, so GPT-4 still leads on the LMSYS arena. But I think we've [00:50:00] been coming back here week after week and saying that more folks use Opus.

[00:50:04] Alex Volkov: Let me see, just by raising hands: did you guys use Opus in the past week, at least once? Do you have a thumbs up or thumbs down for Opus use?

[00:50:13] Swyx: Oh yeah, I use it every day.

[00:50:15] Alex Volkov: Every day. Wow. So you got the Pro thing, or are you using the API?

[00:50:20] Far El: I got Pro, but apparently I'm a chump, because I don't have to use Pro. Only B2C, non-developer types should use Pro. Every developer should just use the Anthropic Workbench, because you just pay per API call and you're probably using less than 30 worth.

[00:50:35] Alex Volkov: I will say this very quietly: with Anthropic, maybe you don't even have to pay unless you apply for production use, and then you have to put in a credit card. It's open and you get API calls for free. I will also say this: Tony Dinh released, like a year ago I think, something called TypingMind, which is basically a front end for ChatGPT, but on the back end you can put every model that you want.

[00:50:55] Alex Volkov: So basically you get the ChatGPT experience, including vision stuff. You can upload images as well. And I think that costs like 30 bucks. If you get that and plug in the API key that you get from Anthropic for free, you basically get the same experience, and you don't have to pay the 20 bucks a month.

[00:51:08] Far El: Do you use TypingMind every day? I hear some social media buzz about it, but I don't see any AI people, engineer type people

[00:51:15] Alex Volkov: I hadn't used it up until I had to try Claude 3 and I didn't want to pay the extra 20 bucks. Just remember in our subscription. So I just plugged it into TypingMind, and it's a nice experience. I still go to Workbench. Workbench is more for us, for engineers, right?

[00:51:30] Alex Volkov: In Workbench, everything that you get there, you can immediately export and continue via the API, for example. And the Workbench is annoying because, for every prompt that you have and every answer that the model gives you, you have to remember to click a button and put it back in the stack of messages, right?

[00:51:47] Far El: You can use keyboard shortcuts, but it's also meant for you to prototype prompts, right? So that's what you want to do. You want your conversations not to persist. You want to see the output and you're like, okay, throw away the output. I'll tweak the prompt again, generate the new output. So you don't want it to auto add to the conversation.

[00:52:04] Far El: That's the main difference.

[00:52:05] Alex Volkov: That's true. Definitely, the Workbench is great for prototyping prompts, and many folks use it for that, but it's also great just for chatting. So you've been using it, so what's your take on Opus so far?

[00:52:17] Far El: Oh, yeah. If you go to AI News, every day now I'm generating with Haiku, Opus, and, what's the other one, Sonnet. By the way, did you know that the names of these things basically hint at the model size?

[00:52:30] Alex Volkov: Yeah, let's talk about this. Opus is like a big

[00:52:32] Far El: Yeah. A haiku is three lines long, a sonnet is 14 lines long, and an opus is, interestingly, unbounded. So: 3B, 14B, and probably 8 times 220B. Yes. I think the Claude people thought they were very smart by just encoding the numbers in

[00:52:50] Alex Volkov: I gotta applaud them on the names, because I stopped saying Claude 3, I'm just saying Opus now, and everybody gets what we're talking about. Opus is a brand name that's built separately from Claude 3, which is, I think, very smart. 3.5, 4, 4 Vision, all these things are a little harder to say, and now they came out with actual names, and I gotta applaud the strategy.

[00:53:12] Alex Volkov: I think, just to connect the dots back to where we are today, yesterday Anthropic finally released Haiku, which they had already announced. And yeah, Swyx, you had another comment that I spoke over?

[00:53:22] Far El: Nothing, I was just going to say, you should be generating things side by side and seeing the model difference. Haiku is very bad at instruction following. Sonnet is actually surprisingly good enough. I would use Sonnet for most things, and then Opus is more powerful but slow and honestly not really worth it.

[00:53:42] Far El: And if you want to see side by side generations, just go to the last few issues of AI News. You'll see them side by side and you can decide for yourself which one you prefer. Yeah, so I run all the summaries through Sonnet and Opus and Haiku every day now, and I can see the difference.
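The side by side comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the AI News pipeline: `side_by_side`, `format_comparison`, and the stub generators are hypothetical names, and each stub stands in for a real call to the corresponding model's API.

```python
from typing import Callable, Dict


def side_by_side(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Run the same prompt through every model and collect the outputs by model name."""
    return {name: generate(prompt) for name, generate in models.items()}


def format_comparison(outputs: Dict[str, str]) -> str:
    """Render the outputs one after another under a header per model."""
    sections = [f"=== {name} ===\n{text}" for name, text in outputs.items()]
    return "\n\n".join(sections)


# Hypothetical stand-ins: in practice each callable would wrap a real API client.
stub_models: Dict[str, Callable[[str], str]] = {
    "haiku": lambda p: f"[haiku summary of: {p}]",
    "sonnet": lambda p: f"[sonnet summary of: {p}]",
    "opus": lambda p: f"[opus summary of: {p}]",
}

if __name__ == "__main__":
    outputs = side_by_side("Summarize today's AI news.", stub_models)
    print(format_comparison(outputs))
```

Keeping the generators as plain callables means the same harness works whether the models sit behind one provider's API or several.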

[00:53:56] Far El: I would say the general take is that Claude 3 in general is better at instruction following and summarization than GPT-4, which is huge. I can't believe I'm just saying

[00:54:08] Alex Volkov: It's crazy.

[00:54:08] Far El: of GPT-4. But it hallucinates more. There are very obvious inconsistencies in the facts that it picks up on, and they're just plain wrong.

[00:54:18] Far El: And anyone with any knowledge of the subject matter will spot that immediately. So Sumith, when he was talking about Claude 3, actually referenced some examples from AI News in his timeline, if you go check out Sumith's timeline on Claude 3. And yeah, I will say that is the problem with using Claude 3.

[00:54:35] Far El: It follows instructions very well, but then it will hallucinate things. Maybe because it doesn't have as good of a world model as GPT-4. Whatever it is, now I'm having to decide as a product creator: am I using Claude 3 because the vibes are better, but then do I have to build an anti-hallucination pipeline? Which I'm trying to build, but it's difficult, because what is truth?

[00:54:56] Alex Volkov: Yes. Let me ask you a question real quick. One second, Nisten, and then you go. Swyx, one question: did you change your prompt for Claude specifically from your GPT-4 prompt?

[00:55:08] Far El: I copied over some of it and I wrote some other parts from scratch. I understand that a lot of people say you should use XML for this stuff. I think that it's a little bit of mumbo jumbo, especially because I'm not doing structured output.

[00:55:22] Alex Volkov: I will say this: they have Alex Albert, who's now getting more of a highlighted role. He's the guy we've talked about who did the needle in a haystack analysis, where Claude Opus realized that it's getting tested, right? So you probably saw this famous tweet. So Alex is the prompt engineer there.

[00:55:38] Alex Volkov: He has a Colab that's called Metaprompt. You can find it, I'm probably going to put this in the show notes. You basically describe the task that you want, and then Opus comes up with the prompt for Opus itself. And the prompts that it comes up with work for me way better than the prompts that I've written myself.

[00:55:54] Alex Volkov: So it does use a little bit of XML. And I just want to say, to Diana, not necessarily to you but definitely to you as well, some different prompting is needed. These models do need different prompting; they've been trained differently. XML is one part of it, but it also feels like a little bit more prompting is needed, and folks can't just expect the same prompt that works for GPT-4 to work.
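As a rough illustration of what "a little bit of XML" in a prompt can look like, here is a minimal Python sketch that wraps the source material and the task in separate tags. The function name `build_xml_prompt` and the tag names `document` and `instructions` are assumptions chosen for this example; they are not taken from the Metaprompt Colab or prescribed by any API.

```python
def build_xml_prompt(instructions: str, document: str) -> str:
    """Wrap the source material and the task in separate XML-style tags.

    The tag names are illustrative only; the point is that the model can tell
    the material apart from the instructions about what to do with it.
    """
    return (
        f"<document>\n{document.strip()}\n</document>\n\n"
        f"<instructions>\n{instructions.strip()}\n</instructions>"
    )


if __name__ == "__main__":
    prompt = build_xml_prompt(
        instructions="Summarize the document in three bullet points.",
        document="Anthropic released Haiku, its fastest and most affordable Claude 3 model.",
    )
    print(prompt)
```

The resulting string can be sent as the user message to whichever model is being tested, which makes it easy to compare a tagged prompt against the untagged one that was written for GPT-4.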

[00:56:16] Alex Volkov: I think some of our intuition changes per model as well. Some models, like you said, hallucinate more but follow instructions better. Definitely, I saw this. Nisten, I cut you off before. If you still remember where I cut you off, please continue.

[00:56:29] Nisten: No, it was along the side. So I've used Sonnet, and I just open the Bing sidebar and quickly iterate through stuff with Sonnet. And yeah, I noticed the same thing. It does make up a lot of stuff. So then I need to drop it into Bing in precision mode and have it actually look up the stuff, and then it's still not quite ideal.

[00:56:52] Nisten: But this combination, I also use Mistral Large, just switching between Bing with internet mode and either Sonnet or Mistral Large to quickly iterate through, although Mistral Large is slow. So again, I really like the speed of

[00:57:09] Far El: Sonnet

[00:57:11] Alex Volkov: Yeah, so let's actually pick up on the news thing. So we covered Claude before, and now we've talked about folks like Swyx actually putting it in production and testing it. Anthropic released Haiku, which is their smallest model, and it doesn't compete with GPT-4; they go for the lowest price and the fastest execution.

[00:57:32] Alex Volkov: Fairly similar to the Command R area of the playground that we got, right? It's focusing on speed, on the best performance possible at the fastest speed and the cheapest price. And we definitely heard before from multiple folks who fine-tuned GPT-3.5, for example, and got better results from the fine-tuned GPT-3.5 than from GPT-4,

[00:57:51] Alex Volkov: and significantly faster as well. So Anthropic released Haiku, which is their fastest and most affordable model for enterprise applications. They stress enterprise because every token counts, every dollar counts, and you get to measure these models not only on how good they are, but also on how good they are compared to how much money you pay for them and how fast they respond to your users.

[00:58:14] Alex Volkov: And the main difference between Haiku and GPT-3.5, or even [00:58:20] Gemini 1.0 Pro, is price. It's priced at 25 cents per million input tokens, where GPT-3.5 is half a dollar per million tokens, so half the price. The output tokens are $1.25 per million output tokens, and enterprises usually do prompt engineering, so they shove a bunch of stuff in the prompt, but the response is not that long.
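To make the pricing concrete, here is a small Python sketch of the arithmetic, using only the Haiku prices quoted above ($0.25 per million input tokens, $1.25 per million output tokens). The function name and the 10,000-in / 500-out request shape are assumptions picked to mirror the "big prompt, short response" pattern.

```python
def request_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
) -> float:
    """Cost of one request in USD, given per-million-token prices."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


# Haiku prices as quoted in the episode (USD per million tokens).
HAIKU_INPUT_PER_M = 0.25
HAIKU_OUTPUT_PER_M = 1.25

if __name__ == "__main__":
    # Assumed request shape: a long prompt (10,000 tokens) and a short answer (500 tokens).
    total = request_cost_usd(10_000, 500, HAIKU_INPUT_PER_M, HAIKU_OUTPUT_PER_M)
    input_only = request_cost_usd(10_000, 0, HAIKU_INPUT_PER_M, HAIKU_OUTPUT_PER_M)
    print(f"total: ${total:.6f}")                      # total: $0.003125
    print(f"input share: {input_only / total:.0%}")    # input share: 80%
```

Even with output tokens priced five times higher, the prompt accounts for most of the bill in this shape of request, which is why the input price is the number to watch.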

[00:58:43] Alex Volkov: So usually you focus on the input tokens as well. It gets 75 on MMLU and 89 on GSM8K, which is significantly better than GPT-3.5. Now, they may have used the announced 3.5 metrics and not the actual metrics, which folks oftentimes do, but it's still very impressive. And it gets 75, almost 76

[00:59:09] Alex Volkov: percent on HumanEval, on code, which is quite impressive for a super fast model. But I think the highlight of the differences from GPT-3.5 or Gemini 1.0 Pro is that Haiku is vision enabled, right? So you can pass images. It's quite impressively vision enabled. So whatever we got excited about last year, Swyx, I think is now possible for like 25 cents per million tokens, which is quite incredible.

[00:59:34] Alex Volkov: You can use it everywhere, pretty much. A million tokens is a lot. And also it has 200, oh, sorry, go ahead, Swyx.

[00:59:43] Far El: No, one caveat or question: is the vision model in Haiku the same vision model as in Sonnet or Opus? Maybe it's dumbed down as well, and no one's really run any of the benchmarks on this stuff.

[00:59:56] Alex Volkov: Yeah, and I think it's worth calling out that now you get the same level of speed as 3.5, a significant improvement in performance, plus vision, plus a 200,000-token context window as well, where 3.5, I think, still is it 8? Yeah. So shout out to Anthropic, sorry, not Cohere, to Anthropic, for continuing to bring us the news.

[01:00:17] Alex Volkov: The release schedule was very well timed. They released the two biggest models and then followed up with this fast model. And for folks who are looking for how to use it, or maybe how to lower their costs with the same performance, it's very interesting. Anthropic promised us tool use and function calling and hasn't yet given us function calling.

[01:00:35] Alex Volkov: They said that these models are able to do function calling and tool use, but we still are not able to use this. For your preferences, you may go here.

[01:00:42] Hardware and Robotics

[01:00:42] Alex Volkov: Big companies and APIs. I think that's most of what we want to cover, but I think we're smoothly moving towards the next area, where we talk about hardware and robotics, because one big company joined another big company a few weeks ago, and now it's worth talking about OpenAI and Figure, the humanoid robot.

[01:01:01] Alex Volkov: Figure has been in the news; they've showcased the robot for a while. Their, I think, main competitor... It's funny how Boston Dynamics was the big name in robotics for a while, and now all these companies are leapfrogging Boston Dynamics in some fields.

[01:01:16] Figure + OpenAI integration shown on a video

[01:01:16] Alex Volkov: So Figure has this humanoid robot, it has ten fingers, it moves them very freely, it's very interesting.

[01:01:22] Alex Volkov: Recently they announced their integration with OpenAI, and I think OpenAI also announced the integration with Figure. And now they released a video, and that video is bonkers. It's really, folks, it's really bonkers.