This meme cartoon describes this week perfectly, and yes, this may be becoming a recurring theme, but this week was absolutely bonkers. For starters, for the first time ever, an open weights model (Command R+) jumped over GPT-4 (last year's versions) in the human rankings on LMSys. This is huge!

Then on Tuesday, it seemed like every company wanted to one-up the others. First, Google released Gemini 1.5 updates: it's now available in 180 countries, with a new audio mode, system prompts and tons of API improvements. Less than an hour later, OpenAI gave us a "majorly improved" GPT-4 Turbo version (2024-04-09) that is now back to being the BEST LLM IN THE WORLD. And to cap the day off, Mistral did the thing again, the thing being dropping a torrent link in a tweet with no explanation.

What was in that torrent is Mixtral 8x22B, an MoE (which we started calling Bigstral, a.k.a. Bixtral) that comes with an Apache 2.0 license and seems to be VERY good!

We also saw the first finetune from the HuggingFace/KAIST folks less than 48 hours later (the authors of said finetune actually came on the show 🎉).

Fully Connected is a week from today! If you haven't signed up yet, use the THURSDAI promo code and come hear from Richard Socher (You.com), Jerry Liu (LlamaIndex CEO), Karoly (Two Minute Papers), Joe Spisak (Meta), and leaders from NVIDIA, Snowflake, Microsoft, Coatue, Adobe, Siemens, Lambda and tons more!

TL;DR of all topics covered:

Open Source LLMs

🔥 Mistral releases Mixtral 8x22B, an Apache 2.0 licensed MoE model (Torrent, TRY IT)

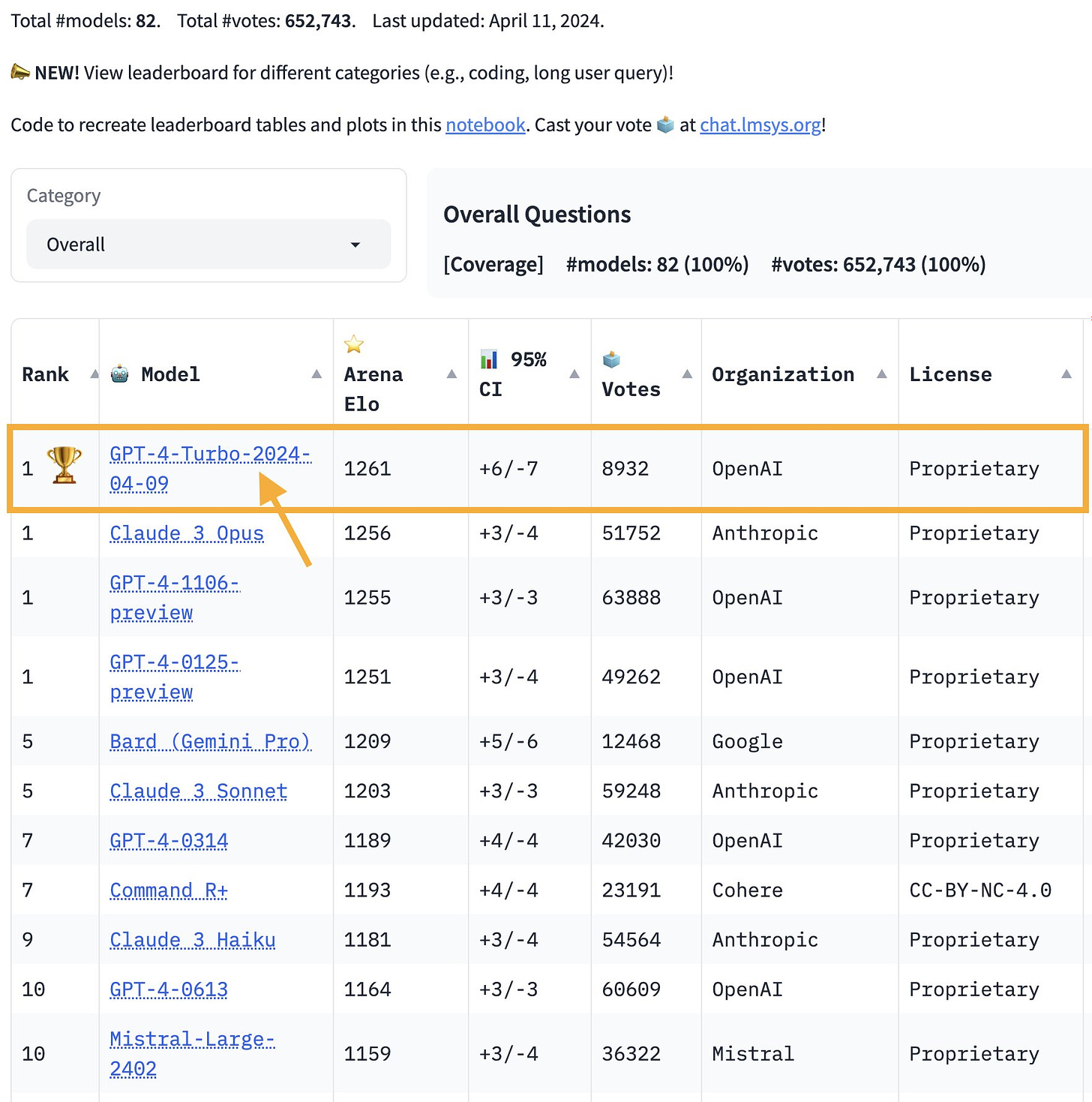

Cohere Command R+ jumps to No. 6 on LMSys and beats older GPT-4 versions (X)

CodeGemma, RecurrentGemma & Gemma Instruct 1.1 (Announcement)

AutoCodeRover gets 22% on SWE-bench (Announcement)

HuggingFace - Zephyr 141B-A35B - First Bixtral Finetune (Announcement)

Mistral 22B - a single expert extracted from the MoE (Announcement, HF)

This week's Buzz - Weights & Biases updates

FullyConnected is in 1 week! (Come meet us)

Big CO LLMs + APIs

🔥 GPT-4 Turbo is back to being the number 1 AI, with an 88.2% HumanEval score (X)

Gemini 1.5 Pro now understands audio, uses unlimited files, acts on your commands, and lets devs build incredible things with JSON mode (X)

Llama 3 coming out in less than a month (confirmed by Meta folks)

xAI Grok now powers news summaries on X (Example)

Cohere releases Rerank 3 (X)

Voice & Audio

HuggingFace trained Parler-TTS (Announcement, Github)

Udio finally launched its service (Announcement, Leak, Try It)

Suno has added explore mode (suno.ai/explore)

Hardware

Humane AI pin has started shipping - reviews are not amazing

Open Source LLMs

Command R+: the first open weights model to beat last year's GPT-4 versions

This is massive, a real milestone worth discussing even though tons of other news happened: it's the first time an open weights model beats GPT-4 not in a narrow domain (coding, medical) but in a general human evaluation on the Arena.

This happened just a year after GPT-4 first came out, and is really really impressive.
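For anyone wondering how pairwise human votes on the Arena become a ranking: LMSys reports Elo-style ratings (their published methodology has also used Bradley-Terry fits, so the real pipeline may differ). Below is a minimal sketch of the classic Elo update, assuming the textbook 400-point scale and a K-factor of 32; it is an illustration, not LMSys's code.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the classic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return updated (rating_a, rating_b) after one human vote.

    score_a is 1.0 if A won, 0.0 if B won, 0.5 for a tie.
    k = 32 is an assumed textbook K-factor.
    """
    e_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - e_a)
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Two equally rated models; the voter prefers model A.
    print(elo_update(1000.0, 1000.0, 1.0))  # (1016.0, 984.0)
```

Because the update scales with how unexpected the result was, beating a higher-rated opponent moves a rating more than beating an equal one.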

Command R+ has been getting a lot of attention from the community as well; folks were really surprised by its overall quality, not to mention its multilingual abilities.

Mixtral 8x22B MoE with 65K context and Apache 2 license (Bigstral)

Despite the above, Cohere's time in the sun (i.e., top open weights model on LMSys) may not last long if the folks at Mistral have anything to say about it!

Mistral decided to cap the crazy Tuesday release day with another groundbreaking tweet of theirs containing a torrent link and nothing else (they have since, of course, uploaded the model to the Hub), giving us what may well unseat Command R+ from the rankings.

The previous Mixtral (8x7B) ushered in the age of MoEs, and each of its experts was based on Mistral 7B; in this new, affectionately named Bixtral model, each expert is a massive 22B-sized model.
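To make the MoE idea concrete: in a Mixtral-style layer, a small router scores every expert for each token, only the top 2 experts actually run, and their outputs are mixed with softmax weights. That's why a model with 141B total parameters (per the Zephyr 141B-A35B name) only uses about 35B per token, and why 8x22B doesn't multiply out to 176B: the experts are the feed-forward blocks, while attention weights are shared. The toy, pure-Python sketch below assumes top-2 routing with a softmax over the selected experts' logits; the experts are hypothetical scaling functions, not real feed-forward networks.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def router_logits(x: Vector, gate_weights: Sequence[Vector]) -> Vector:
    """One logit per expert: dot product of the token with each router row."""
    return [sum(w * v for w, v in zip(row, x)) for row in gate_weights]


def top_k_gates(logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax over just their logits."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_layer(x: Vector, experts: Sequence[Expert],
              gate_weights: Sequence[Vector], k: int = 2) -> Vector:
    """Run only the selected experts and return their gate-weighted sum."""
    out = [0.0] * len(x)
    for idx, weight in top_k_gates(router_logits(x, gate_weights), k):
        y = experts[idx](x)  # unselected experts are never evaluated
        out = [o + weight * v for o, v in zip(out, y)]
    return out


if __name__ == "__main__":
    # 8 toy "experts": expert i simply scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * a for a in v] for i in range(8)]
    # Hypothetical router weights that strongly prefer experts 6 and 7.
    gate_weights = [[0.0, 0.0]] * 6 + [[5.0, 0.0], [5.0, 0.0]]
    print(moe_layer([1.0, 2.0], experts, gate_weights))  # [7.5, 15.0]
```

Only 2 of the 8 expert functions run per call, which is the whole point: compute per token scales with the active experts, while memory still has to hold all of them.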

We only got a base version, which is incredible in its own right, but it isn't instruction-finetuned yet, and the finetuning community is already cooking really hard! It's not easy, though, because this model requires a lot of compute to finetune, and not only GPUs: Matt Shumer came on the pod and mentioned that GPUs weren't actually the main issue; system RAM was, once the finetune finished.

The curious thing about it was watching the loss and the eval loss. It [Bixtral] learns much faster than other models - Matt Shumer

Matt was trying to run finetunes of Bigstral and had a lot of interesting stuff to share, so definitely check out that conversation on the pod.

Bigstral is... big, and it's not really feasible to run it on consumer hardware... yet! Nisten somehow got it running CPU-only 🤯 using Justine Tunney's LLM kernels (from last week) and llama.cpp at 9 tok/s, which is kinda crazy.
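Some back-of-the-envelope math on why "big" is an understatement, using the 141B total / 35B active parameter counts from the Zephyr 141B-A35B name. The bits-per-weight values are assumptions for common precisions (16-bit, 8-bit, 4-bit), and the estimate ignores quantization metadata, the KV cache and runtime overhead, so real file sizes and RAM usage will be somewhat larger.

```python
TOTAL_PARAMS = 141e9   # all experts plus shared weights (from the model name)
ACTIVE_PARAMS = 35e9   # weights actually used per token (from the model name)


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (10**9 bytes), ignoring all overhead."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):  # assumed precisions
        print(f"{bits:>2}-bit: ~{weight_gb(TOTAL_PARAMS, bits):.1f} GB to hold, "
              f"~{weight_gb(ACTIVE_PARAMS, bits):.1f} GB read per token")
```

Even at 4-bit, just holding the weights takes roughly 70 GB, more than typical consumer GPUs offer, which is one reason a CPU with lots of system RAM is an appealing target. And since each token only reads the active experts' weights, CPU-only decoding is less far-fetched than the total size suggests.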

HuggingFace + KAIST release Zephyr 141B-A35B (First Mixtral 8x22 finetune)

And that was fast: less than 48 hours after the torrent drop, we already saw the first instruction finetune from the folks at HuggingFace and KAIST AI.

They finetuned it using ORPO (Odds Ratio Preference Optimization), a technique from KAIST that folds preference alignment into supervised finetuning in a single step, without a separate reference model. They trained Bigstral on 7k Capybara samples for 1.3 hours on 4 nodes of 8 x H100s.
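For the curious, here's a minimal sketch of the ORPO objective as I understand it from the paper: the usual SFT negative log-likelihood on the chosen response, plus a weighted term that raises the odds of the chosen response relative to the rejected one, where odds(y|x) = P(y|x) / (1 - P(y|x)) and P is the length-normalized (per-token average) likelihood. It operates on scalar per-sequence average log-probabilities rather than a real model, and the weight `lam=0.1` is a hypothetical default, not the value used for Zephyr 141B-A35B.

```python
import math


def log_odds(avg_logp: float) -> float:
    """log odds(y|x) = log P - log(1 - P), with P = exp(avg_logp); needs avg_logp < 0."""
    return avg_logp - math.log1p(-math.exp(avg_logp))


def softplus(z: float) -> float:
    """Numerically stable log(1 + e^z); note -log(sigmoid(r)) == softplus(-r)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def orpo_loss(chosen_avg_logp: float, rejected_avg_logp: float, lam: float = 0.1) -> float:
    """SFT loss on the chosen answer plus lam * odds-ratio loss, for one preference pair."""
    sft = -chosen_avg_logp
    log_odds_ratio = log_odds(chosen_avg_logp) - log_odds(rejected_avg_logp)
    odds_ratio_loss = softplus(-log_odds_ratio)  # = -log sigmoid(log_odds_ratio)
    return sft + lam * odds_ratio_loss


if __name__ == "__main__":
    # When the model already prefers the chosen answer, the loss is lower...
    print(orpo_loss(chosen_avg_logp=-0.5, rejected_avg_logp=-2.0))
    # ...than when it prefers the rejected one.
    print(orpo_loss(chosen_avg_logp=-2.0, rejected_avg_logp=-0.5))
```

The single combined loss is what lets ORPO skip the separate SFT-then-DPO stages and the frozen reference model.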

They used the distilled Capybara dataset (from LDJ and Argilla) to give this model clearer answers and better instruction following.

You can find the model on the Hub, but now the question is: how would one run this? 😅

Btw, the authors of the finetune and of the ORPO paper from KAIST, Jiwoo Hong and Noah Lee, came on the pod to chat about this finetune and ORPO, which was awesome! Definitely check this conversation out.

Big CO LLMs + APIs

Gemini 1.5 Pro updates - Audio Mode, JSON, System prompts and becomes free

Google really pulled out all the stops for this updated release of Gemini 1.5 Pro, its flagship 1M context window model.

It's now available for free in over 180 countries and has a new audio mode where you can upload up to 9.5 hours of audio (which is crazy on its own). And it's not merely transcription: it seems they baked an audio encoder in there, so the model can understand some tonality and even dogs barking in the background!

In fact, instead of writing it all down, how about I show you Gemini itself extracting everything I said about it during the show? Here's a screenshot of me uploading 2+ hours of raw, unedited audio from today's show:

You can see Google AI Studio (which is a very clean product!), the new system message, the ability to turn the safety filters off (thank you!) and the audio mode. Not to mention the 250K tokens 😂 that my audio cost this model. Mind you, the next-largest context window after Gemini is Claude 3's, at 200K.
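The 250K figure lines up with a simple rate estimate. Assuming Gemini tokenizes audio at roughly 32 tokens per second (the rate I recall from Google's Gemini API docs; treat it as an assumption), here is a quick check against a hypothetical 2 hour 10 minute recording:

```python
TOKENS_PER_SECOND = 32  # assumed Gemini audio tokenization rate


def audio_tokens(hours: float, minutes: float = 0.0) -> int:
    """Estimate how many context tokens an audio clip of this length consumes."""
    seconds = hours * 3600 + minutes * 60
    return round(seconds * TOKENS_PER_SECOND)


if __name__ == "__main__":
    print(audio_tokens(2))              # 230400
    print(audio_tokens(2, minutes=10))  # 249600, roughly the 250K in the screenshot
```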

Google also significantly improved the APIs and gave access to a new file upload API that accepts files of up to 2GB (to support this amazing context and multimodality) 🔥
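For developers, here is a sketch of what a `generateContent` request body combining the new system prompt with JSON mode might look like. The field names (`systemInstruction`, `generationConfig.responseMimeType`) reflect my understanding of the v1beta REST API at the time and should be checked against Google's current docs; the helper function and prompt text are hypothetical, and nothing is sent over the network.

```python
import json


def build_gemini_request(system_prompt: str, user_prompt: str) -> str:
    """Hypothetical helper: serialize a generateContent body with a system prompt and JSON mode."""
    body = {
        "systemInstruction": {"parts": [{"text": system_prompt}]},
        "contents": [{"role": "user", "parts": [{"text": user_prompt}]}],
        # Ask the model to reply with JSON instead of free-form text.
        "generationConfig": {"responseMimeType": "application/json"},
    }
    return json.dumps(body, indent=2)


if __name__ == "__main__":
    print(build_gemini_request(
        "You summarize podcast transcripts.",
        "List every topic discussed, as a JSON array of strings.",
    ))
```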

OpenAI - GPT-4 Turbo, a new and "majorly improved" version

Remember when Gemini 1.5 was announced? You may not remember that specific day, because an hour later, OpenAI published Sora and blew our collective minds.

Well, OpenAI is at it again, though this time it didn't land quite the same way: an hour after the Gemini 1.5 updates came out, OpenAI released GPT-4 Turbo April 9 (gpt-4-turbo-2024-04-09), and basically all they said was that it was "majorly improved".

The technical stuff first: they combined the tool use (function calling) API with the Vision API, which brings feature parity with Anthropic.
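Here's a sketch of a Chat Completions request body that mixes an image input with a tool definition in one call, which is what the combined API allows. The `messages` and `tools` structure follows OpenAI's documented Chat Completions format; the `describe_chart` function, its schema and the image URL are hypothetical, and the body is only built and printed, not sent.

```python
import json


def build_vision_tool_request(image_url: str) -> dict:
    """Chat Completions body with an image input plus one hypothetical tool."""
    return {
        "model": "gpt-4-turbo-2024-04-09",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Extract the data from this chart."},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "describe_chart",  # hypothetical tool name
                    "description": "Record the title and data points of a chart.",
                    "parameters": {  # JSON Schema for the tool's arguments
                        "type": "object",
                        "properties": {
                            "title": {"type": "string"},
                            "values": {"type": "array", "items": {"type": "number"}},
                        },
                        "required": ["title", "values"],
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_vision_tool_request("https://example.com/chart.png"), indent=2))
```

If the model decides to call the tool, the response message carries a `tool_calls` entry whose arguments are a JSON string that should match the schema above.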

The vibes are currently good: folks are seeing improvements across the board in logic and code generation. Specifically, the folks at Cursor posted an example (and enabled this model in their IDE) where it writes higher-quality code.

As I’m writing these words, LMSys announced that this new model shot up to the top of the Arena, taking the mantle back from Claude 3 Opus as the best AI we have, and OpenAI confirmed that this model now powers the ChatGPT interface 👏

OpenAI also just open-sourced a repo with the evaluation code they used to get these exact scores for the new GPT-4, and the scores are impressive.

This week's Buzz (What I learned with WandB this week)

Final Call! Fully Connected, our very own annual conference, is about to commence

(hehe of course it's happening on a ThursdAI, I still have to think about how to record the show next week)

Please feel free to use the code THURSDAI to sign up and come see us.

As a reminder, we're also running a workshop the day before, where we're going to showcase Weave and give practical examples for LLM builders, and it's going to be a lot of fun! Looking forward to seeing some of you there!

Audio & Voice

Udio launches an AI music service to compete with Suno

For the past week+ I've seen tons of AI-plugged-in folks in SF post that "a new AI for music is coming and it's going to be amazing". Well, it's finally here: it's called Udio, and it gives Suno a run for its money for sure.

With the ability to create full tracks, create intros and outros, remix, plus much-needed AI-enhanced prompting, Udio looks very polished and sounds GOOD!

Here is an example of a classical music track that's been going viral:

I've played a few more examples on the show itself, and you can check out the trending creations on their page.

Interestingly, this is probably a diffusion model, and folks have been squeezing all kinds of non-musical stuff out of it, including stand-up comedy with a full laugh track.

Suno adds explore mode

Meanwhile, Suno is not going down without a fight and has released an amazing new page where they generated thousands of samples for hundreds of interesting/weird sound styles, letting you discover and learn about different musical styles. I really liked it, so I recorded a short reaction video:

Phew, somehow we made it: we managed to summarize this week's huge news in under two hours, plus a newsletter!

The one thing I haven't been able to do is actually try out much of the stuff I talked about, so after writing this, I'll take a little break and dig into some of the things I haven't tried yet 👀

See you guys next week in a limited capacity (maybe, we'll see), and until then, have a great week 🫡

📅 ThursdAI - Apr 11th, 2024 - GPT4 is king again, New Mixtral 8x22B + First finetune, New Gemini 1.5, Cohere beats old GPT4, more AI news