Hey y'all, welcome to this special edition of ThursdAI. This is the first one that I'm sending in my new capacity as the AI Evangelist at Weights & Biases (on the growth team).

I made the announcement last week, but this week is my first official week at W&B, and oh boy... how humbled and excited I was to receive all the inspiring and supporting feedback from the community, friends, colleagues and family 🙇♂️

I promise to continue my mission of delivering AI news, positivity and excitement, and to be that one place where we stay up to date so you don't have to.

This week we also had one of our biggest live recordings yet, with 900 folks tuned in so far 😮 and it was my pleasure to once again chat with folks who "made the news". We had a brief interview with Steve Ruiz and Lou from TLDraw about their incredible GPT-4 Vision enabled "make real" functionality, and I finally got to catch up with my good friend Idan Gazit, who's heading the GitHub Next team (the birthplace of GitHub Copilot), about how they see the future. So definitely check out the full conversation!

TL;DR of all topics covered:

Open Source LLMs

Nous Capybara 34B on top of Yi-34B (with 200K context length!) (Eval, HF)

Microsoft - Phi 2 will be open sourced (barely) (Announcement, Model)

HF adds finetune chain genealogy (Announcement)

Big CO LLMs + APIs

Microsoft - Everything is CoPilot (Summary, copilot.microsoft.com)

CoPilot for work and 365 (Blogpost)

CoPilot studio - low code "tools" builder for CoPilot + GPTs access (Thread)

OpenAI Assistants API cookbook (Link)

Vision

🔥 TLdraw make real button - turn sketches into code in seconds with vision (Video, makereal.tldraw.com)

Humane Pin - Orders are out, shipping early 2024, multimodal AI agent on your lapel

Voice & Audio

🔥 DeepMind (Youtube) - Lyria high quality music generations you can HUM into (Announcement)

EmotiVoice - 2000 different voices with emotional synthesis (Github)

Whisper V3 is top of the charts again (Announcement, Leaderboard, Github)

AI Art & Diffusion

🔥 Real-time LCM (latent consistency model) AI art is blowing up (Krea, Fal Demo)

Runway motion brush (Announcement)

Agents

Alex's Visual Weather GPT (Announcement, Demo)

AutoGen, Microsoft agents framework is now supporting assistants API (Announcement)

Tools

Gobble Bot - scrape everything into 1 long file for GPT consumption (Announcement, Link)

ReTool state of AI 2023 - https://retool.com/reports/state-of-ai-2023

Notion Q&A AI - search through a company Notion and QA things (announcement)

GPTs shortlinks + analytics from Steven Tey (https://chatg.pt)

This Week's Buzz from WandB (aka what I learned this week)

Introducing a new section in the newsletter called "This Week's Buzz from WandB" (AKA What I Learned This Week).

As someone who joined Weights and Biases without prior knowledge of the product, I'll be learning a lot. I'll also share my knowledge here, so you can learn alongside me. Here's what I learned this week:

The most important thing I learned this week is just how prevalent Weights & Biases is, and how much of a leader. W&B's main product is used by most of the foundation LLM trainers, including OpenAI.

In fact GPT-4 was completely trained on W&B!

It's used by pretty much everyone besides Google. And it's not only about LLMs: W&B products are used to train models in many, many different areas of the industry.

Some incredible examples: a pesticide dispenser on John Deere tractors that only sprays pesticides onto weeds and not the actual produce, and Big Pharma companies using W&B to help create better drugs that are now in trials. It's just incredible how much machine learning there is outside of LLMs. But I'm also absolutely floored by how ubiquitous W&B is in the LLM world.

W&B has two main products, Models and Prompts. Prompts is the newer one, and we're going to dig into both of these more next week!

Additionally, it's striking how many AI Engineers, API users such as myself and many of my friends, have no idea who W&B even is, or if they do, they've never used it!

Well, that's what I'm here to change, so stay tuned!

Open source & LLMs

In the open source corner, we have the first Nous fine-tune of Yi-34B, a great model that we covered in the last episode, now fine-tuned with the Capybara dataset by ThursdAI cohost LDJ! Not only is it a great model, it now tops the charts for our resident reviewer WolframRavenwolf on /r/LocalLLaMA (and X).

Additionally, Open-Hermes 2.5 7B from Teknium is now in second place on the HuggingFace leaderboards. It was released recently but we haven't covered it until now. I still think that Hermes is one of the more capable local models you can get!

Also in open source this week, guess who loves it? Satya (and Microsoft)

They love it so much that they not only created this awesome slide (although, what are SLMs? Small Language Models? I don't like it), they also announced that LLaMa and Mistral are coming to Azure as inference services!

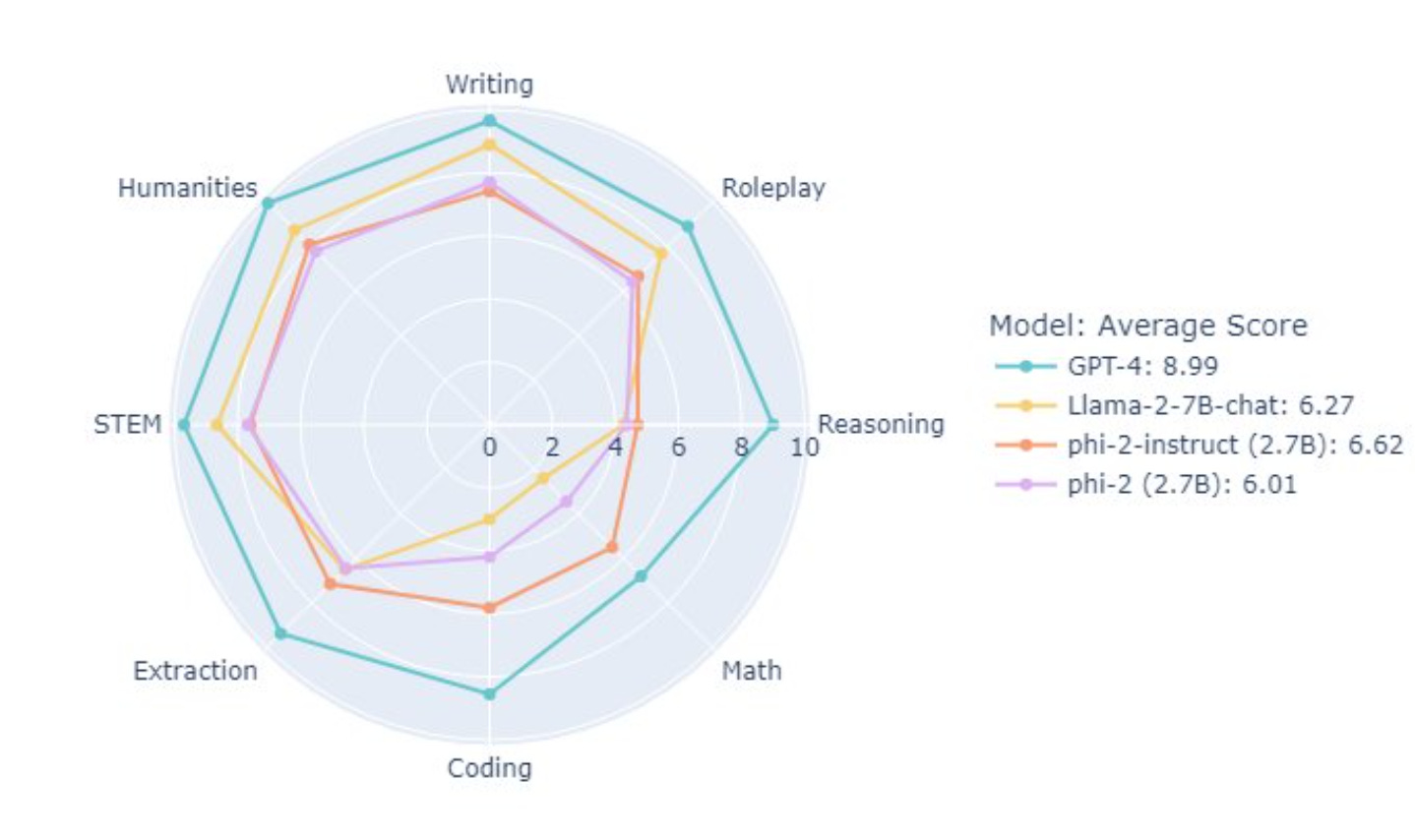

And they gave us a little treat: Phi 2 is coming. They said open source (but folks looking at the license saw that it's for research purposes only), and supposedly it's a significantly more capable model while having only 2.7B parameters (super super tiny).

Big Companies & APIs

Speaking of Microsoft, they announced so much during their Ignite event on Wednesday (15th) that it's impossible to cover all of it in this newsletter, but basically here are the main things that got me excited!

CoPilot everywhere, everything is CoPilot

Microsoft rebranded Bing Chat to Copilot and it now lives on copilot.microsoft.com. It's basically a free GPT-4, with vision and DALL-E capabilities. If you're not signed up for OpenAI's Plus membership, this is as good as it gets for free!

They also announced CoPilot for 365, which means that everything from Office (Word, Excel!) to your mail and your Teams conversations will have a CoPilot that can draw from your organization's knowledge and help you do incredible things. Things like helping book appointments, pulling in relevant people for a meeting based on previous documents, summarizing that meeting, scheduling follow-ups, and like a TON more stuff. DALL-E integration will help you create awesome PowerPoint slides.

(p.s. all of this will allegedly be data protected and won't be shared with MS or trained on)

They literally went and did "AI everywhere" with CoPilot, and it's kinda incredible to see how hard Microsoft is betting the farm on AI while Google... where's Google™?

CoPilot Studio

One of the more exciting things for me was the CoPilot Studio announcement: a low-code tool that lets your IT team extend your company's CoPilot for your organization. Think getting HR data from your HR system, or your sales data from Salesforce!

They will launch with 1100 connectors for many services, and will also allow you to easily build your own.

One notable thing: Custom GPTs will also be a connector! You will literally be able to connect your CoPilot with your (or someone's) GPTs! Are you getting this? AI Employees are coming faster than you think!

Vision

I've been waiting for cool vision demos since the GPT-4V API was launched, and oh boy did we get them! From friend of the pod Robert Lukoshko's auto screenshot analysis, which takes screenshots periodically and sends you a report of everything you did that day, to Charlie Holtz's live webcam narration by David Attenborough (which is available on GitHub!)

But I think there's one vision demo that takes the cake this week, by our friends (Steve Ruiz) from TLDraw, which is a whiteboard canvas primitive. They have added a sketch-to-code button that lets you sketch something out: GPT-4 Vision analyzes the sketch, GPT-4 writes the code, and you get live code within seconds. It's so mind-blowing that I'm still collecting my jaw off the floor here. It also handles behavior, so if you ask it nicely to add JS interactivity, the result will be interactive 🤯
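For the curious, here's a rough sketch of what a sketch-to-code request could look like. To be clear, this is not TLDraw's actual implementation; it only builds a payload in the shape of OpenAI's Chat Completions API with an image input. The function name `build_make_real_request`, the prompt wording, the `max_tokens` value, and the `gpt-4-vision-preview` model name are all my assumptions for illustration, and nothing is sent over the network.

```python
import base64
import json


def build_make_real_request(png_bytes: bytes, extra_instructions: str = "") -> dict:
    """Build a Chat Completions-style payload asking a vision model for HTML.

    Hypothetical helper: the prompt text and model name are assumptions,
    not taken from TLDraw's code.
    """
    # Images can be passed inline as a base64 data URL.
    image_b64 = base64.b64encode(png_bytes).decode("ascii")

    prompt = (
        "You are an expert web developer. Turn the attached wireframe sketch "
        "into a single self-contained HTML file. Reply with the HTML only."
    )
    if extra_instructions:
        # e.g. "Add JS interactivity." to ask for a working prototype
        prompt += " " + extra_instructions

    return {
        "model": "gpt-4-vision-preview",  # assumed model name
        "max_tokens": 4096,               # assumed limit
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{image_b64}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real PNG export of the canvas.
    payload = build_make_real_request(b"\x89PNG", "Add JS interactivity.")
    print(json.dumps(payload, indent=2)[:200])
```

You would POST a payload like this to the chat completions endpoint with your API key, then render whatever HTML comes back next to the original sketch.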

GPT-4V is truly as revolutionary as I imagined it to be when Greg announced it on stage 🫡

P.S - Have you played with it? Do you have cool demos? DM me with 👁️🗨️ emoji and a cool vision demo to be included in the next ThursdAI

AI Art & Diffusion & 3D

In addition to the TLDraw demo, one mind-blowing demo after another is coming out of the AI Art world this week, using LCM (Latent Consistency Model) + a whiteboard. This is yet another see-it-to-believe-it type of thing (or play with it).

(video from Linus)

Our dear friends from Krea.ai were the first to implement this insanity, which lets you see real-time AI art generation almost as fast as you type your prompts. The wizards at Fal then followed up by getting the generations down to several milliseconds (shoutout Gorkem!). The real-time drawing thing is truly, truly mind-blowing. So much so that folks are plugging in their webcam feeds and seeing almost real-time generations of them on the fly.

Meta announcing new Emus (Video & Edit)

Meta doesn't want me to relax, and during the space, announced their text-to-video and textual-editing models.

Emu Video produces great videos from a prompt, and Emu Edit is really interesting: it allows you to edit parts of images by typing. Think "remove the tail from this cat" or "remove the hat from this person".

They have this to say, which... dayum.

In human evaluations, our generated videos are strongly preferred in quality compared to all prior work– 81% vs. Google’s Imagen Video, 90% vs. Nvidia’s PYOCO, and 96% vs. Meta’s Make-A-Video. Our model outperforms commercial solutions such as RunwayML’s Gen2 and Pika Labs

It's really compelling, and I can't wait to see if they open source this. Video is coming, y'all!

Audio & Sound

DeepMind + YouTube announced Lyria (blogpost)

This new music model is pretty breathtaking, but we only got a glimpse, with not even a waitlist for this one. Still, check out the pre-recorded demos: folks at DeepMind have a model you can hum into or sing into, and it'll create a full-blown track for you, with bass, drums, and singing!

Not only that, it will also license vocals from musicians (à la Grimes) and will split the revenue between you and them if you post it on YouTube!

Pretty cool Google, pretty cool!

Agents & Tools

Look, I gotta be honest, I'm not sure about this category, Agents and Tools, and whether to put them into one or not, but I guess GPTs are kinda tools, so I'm gonna combine them for this one.

GPTs (My Visual Weather, Simons Notes)

This week, the GPT that I created, called Visual Weather GPT, has blown up, with over 5,000 chats opened with it and many, many folks using it and texting me about it. It's a super cool way to check out all the capabilities of a GPT. If you remember, I thought of this idea a few weeks ago when we got a sneak preview of the "All tools" mode, but now I can share it with you all in the form of a GPT that will browse the web for real-time weather data and create a unique art piece for that location and weather conditions!

It's really easy to make as well, and I fully expect everyone to start making their own versions very soon. I think we're inching towards the era of JIT (just in time) software, where you'll create software as you require it, and it'll be as easy as talking to ChatGPT!

Speaking of, friend of the pod Steven Tey from Vercel (whose dub.sh I use and love for thursdai.news links) has released a GPT link shortener called chatg.pt, and you can register and get your own cool short link like https://chatg.pt/artweather 👏 And it'll give you analytics as well!

Pro tip for Weather GPT: you can ask for a specific season or style in parentheses, and then send the results as greeting cards to your friends. Happy upcoming Thanksgiving everyone!

Speaking of Thanksgiving, we're not taking a break. Next ThursdAI, November 23, join us for a live discussion and podcast recording! We'll have many thanks, cool AI stuff, and much more!