Hey all, Happy New Year! What a great chat we had today, and even had 2! deep dives, had the honor to host VB from HuggingFace who dove deep into ASR and then we had Bo Wang who’s an expert in embeddings with a deep dive into new architectures like the one showed by E5.

This week (and this year) I figured I’d try something new, and did a vocal recap of everything we talked about, at the end of the TL;DR of all topics covered, and figured I’d share with you (if you don’t have time for a full two hours, this is exactly 5 minutes!)

Here’s a TL;DR and show notes links

Open Source LLMs

Big CO LLMs + APIs

Samsung is about to announce some AI stuff

OpenAI GPT store to come next week

Perplexity announces a $73.6 Series B round

Vision

Voice & Audio

Tools & Agents

Open Source LLMs

WizardCoder 33B reaches a whopping 79% on HumanEval @pass1

State of the art LLM coding in open source is here. A whopping 79% on HumanEval, with Wizard Finetuning DeepSeek Coder to get to the best Open Source coder, edging closer to GPT4 and passing GeminiPro and GPT3.5 👏 (at least on some benchmarks)

Teknium releases a Hermes on top of SOLAR 10.7B

Downloading now with LMStudio and have been running it, it's very capable. Right now SOLAR models are still on top of the hugging face leaderboard, and Hermes 2 now has 7B (Mistral) 10.7B (SOLAR) and 33B (Yi) sizes.

On the podcast I've told a story of how this week I actually used the 33B version of Capybara for a task that GPT kept refusing to help me with. It was honestly kind of strange, a simple request to translate kept failing with an ominous “network error”.

Which only highlighted how important the local AI movement is, and now I actually have had an experience myself of a local model coming through when a hosted capable one didn’t

Microsoft releases a new text embeddings SOTA model E5 , finetuned on synthetic data on top of Mistral 7B

We present a new, easy way to create high-quality text embeddings. Our method uses synthetic data and requires less than 1,000 training steps, without the need for complex training stages or large, manually collected datasets. By using advanced language models to generate synthetic data in almost 100 languages, we train open-source models with a standard technique. Our experiments show that our method performs well on tough benchmarks using only synthetic data, and it achieves even better results when we mix synthetic and real data.

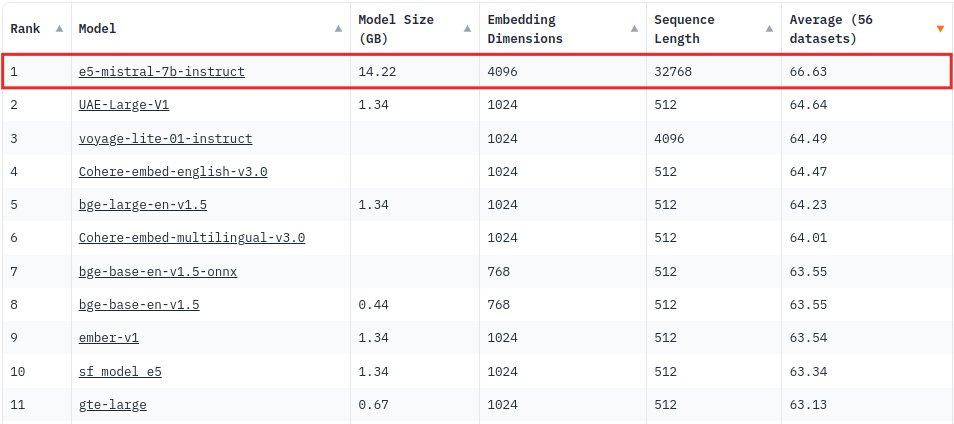

We had the great please of having Bo Wang again (One of the authors of the Previously SOTA Jina embeddings and a previous podcast gust) to do a deepdive into embeddings and specifically E5 with it's decoder only architecture. While the approach Microsoft researchers took here are interesting, and despite E5 claiming a top spot on the MTEB leaderboard (pictured above) this model doesn't seem to be super practical for most purposes folks use embeddings right now (RAG) for the following reasons:

Context length limitation of 32k, with a recommendation not to exceed 4096 tokens.

Requires a one-sentence instruction for queries, adding complexity for certain use cases like RAG.

Model size is large (14GB), leading to higher costs for production use.

Alternative models like bge-large-en-v1.5 are significantly smaller (1.35GB).

Embedding size is 4096 dimensions, increasing the cost for vector storage.

Big CO LLMs + APIs

OpenAI announces that the GPT store is coming next week!

I can't wait to put the visual weather GPT I created and see how the store prompts it and if I get some revenue share like OpenAI promised during dev day. My daughter and I are frequent users of Alice - the kid painter as well, a custom GPT that my Daughter named Alice, that knows it's speaking to kids over voice, and is generating coloring pages. Will see how much this store lives up to the promises.

This weeks Buzz (What I learned with WandB this week)

This week was a short one for me, so not a LOT of learnings but I did start this course from W&B, called Training and Fine-tuning Large Language Models (LLMs).

It features great speakers like Mark Sarufim from Meta, Jonathan Frankle from Mosaic, and Wei Wei Yang from Microsoft along with W&B MLEs (and my team mates) Darek Kleczek and Ayush Thakur and covers the end to end of training and fine-tuning LLMs!

The course is available HERE and it's around 4 hours, and well well worth your time if you want to get a little more knowledge about the type of stuff we report on ThursdAI.

Vision

SeeAct - GPT4V as a generalist web agent if grounded (X, Paper)

In June OSU NLP released Mind2Web which is a dataset for developing and evaluating web acting agents, LLMs that click buttons and perform tasks with 2350 tasks from over 130 website, stuff like booking flights, finding folks on twitter, find movies on Netflix etc'

GPT4 without vision was terrible at this (just by reading the website html/text) and succeeded at around 2%.

With new vision LMMs, websites are a perfect place to start training because of the visual (how website is rendered) is no paired with HTML (the grounding) and SeeAct uses GPT4-V to do this

SeeAct is a generalist web agent built on LMMs like GPT-4V. Specifically, given a task on any website (e.g., “Compare iPhone 15 Pro Max with iPhone 13 Pro Max” on the Apple homepage), the agent first performs action generation to produce a textual description of the action at each step towards completing the task (e.g., “Navigate to the iPhone category”), and then performs action grounding to identify the corresponding HTML element (e.g., “[button] iPhone”) and operation (e.g., CLICK, TYPE, or SELECT) on the webpage.

SeeAct achieves a 50% score on the Mind2Web evaluation task!

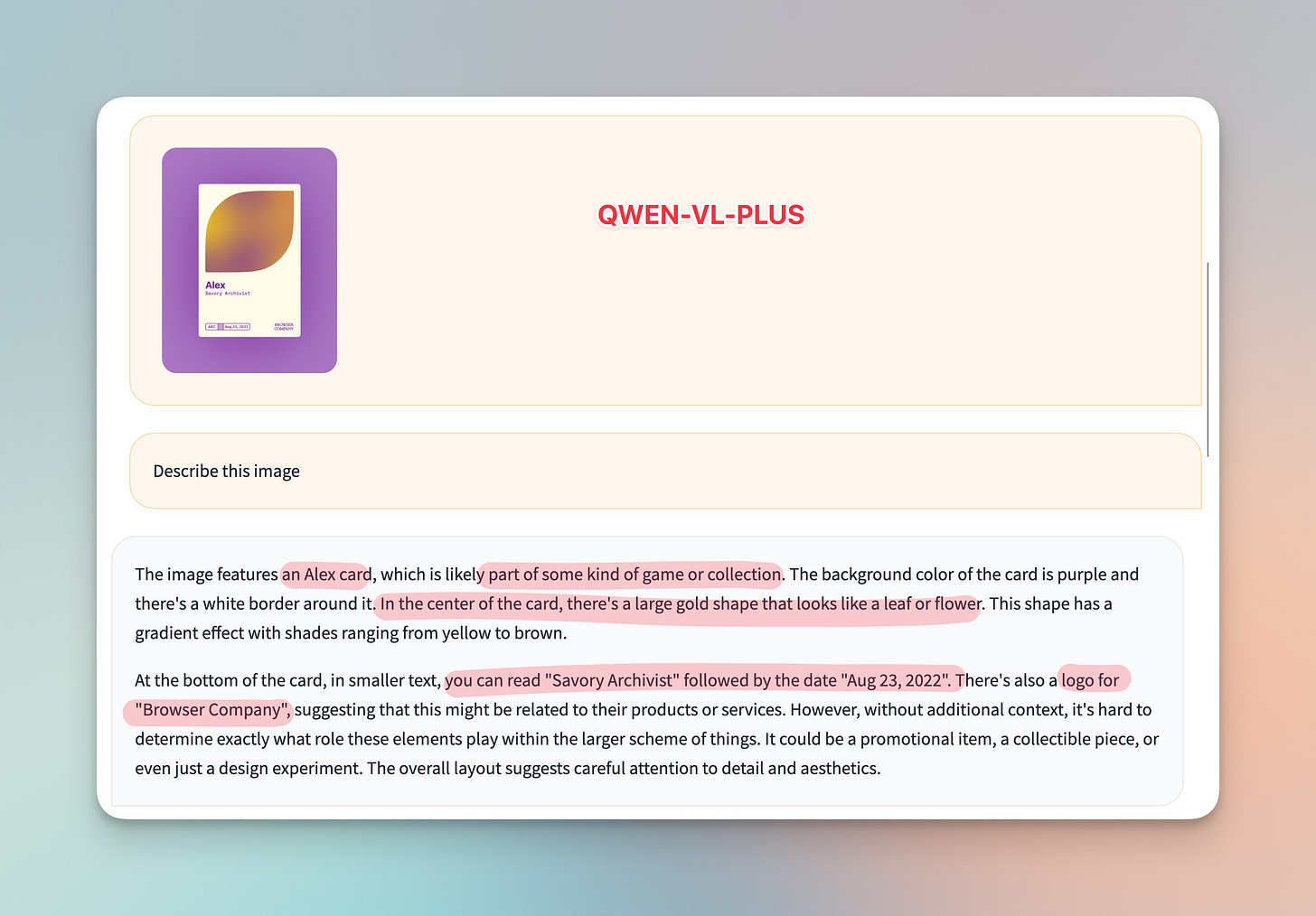

QWEN-VL was updated to PLUS (14B) and it's pretty good compared to GPT4V

Capabilities include: image captioning, visual question answering, visual grounding, OCR, visual reasoning. We had a chat with Junyang Lin, the tech lead for Qwen with Alibaba on the pod, and he mentioned specifically that they noticed that adding a larger "brain" (as in, LLM) to vision models, significantly increases the performance and vision understanding of the LMMs.

While this model is not yet released, you can demo it here, and Junyang told us that it is coming to a release, like the previous QWEN models did before.

I noticed the advanced OCR capabilities and understanding, this example was really spot on. Notice the "logo for Browser company" , the model understood that this text was in fact a logotype! (which even GPT4V failed at in my test)

Voice

Parakeet from NVIDIA beats Whisper on English with a tiny model (blog)

Brought to you by @NVIDIAAI and @suno_ai_, parakeet beats Whisper and regains its first place. The models are released under a commercially permissive license! The models inherit the same FastConformer architecture and come in 2 flavors: 1. RNNT (1.1B & 0.6B) 2. CTC (1.1B & 0.5B) Each model is trained on 65K hours of English data (40K private proprietary data by Suno & NeMo teams) over several hundred epochs. Key features of the parakeet model: 1. It doesn't hallucinate (if the audio sample has silence, the output is silent). 2. It is quite robust to noisy audio (if the audio sample has non-vocal sounds, it outputs silence).

We had the great please to have VB from the Audio team at HuggingFace, and he went in depth into the way in which Parakeet is better than Whisper (higher quality transcriptions while also being much much faster), it was trained on only 65K hours vs a few million with whisper, and we also covered that because of this different architecture, Parakeet is not able to receive any guidance for words that are hard for it to understand. For example, with whisper, I often provide ThursdAI in initial_prompt parameter to help guide whisper to know what it should say.

Regardless, having a model that's superfast, and can beat whisper, and is commercially licensed to build on top of is incredible! Here's a demo for you to try it out and it's available with the NVIDIA NeMO framework.

Coqui shuts down :(

We've had Josh from Coqui on our pod before, when they released XTTS, and they have been friends ever since. It's sad to see Coqui shut down, and we want to wish all the team an easy and great transition 👏 You guys did a great job and we're rooting for each and every one of you.

Coqui is closing down.

The team is praised for being small yet impactful, competing with big tech despite limited resources.

Coqui began as the Machine Learning Group at Mozilla, creating DeepSpeech, Common Voice, and TTS.

Spun out as Coqui in 2021 to accelerate their mission.

Major achievement: XTTS, with openly released model weights for versions 1 and 2.

2021: Coqui STT v1.0 released, Coqui Model Zoo and SC-GlowTTS launched.

2022: YourTTS became viral, numerous open-source releases, team expansion.

2023: Coqui Studio webapp and API launched, XTTS open release, first customers acquired.

Acknowledgment of the community, investors, customers, and partners for their support.

Partners include HuggingFace, Mozilla, Masakhane, Harvard, Indiana University, Google, MLCommons, Landing AI, NVIDIA, Intel, and Makerere University.

Future of generative AI in 2024 predicted to grow, with open-source playing a significant role.

Coqui TTS remains available on Github for further innovation.

Tools

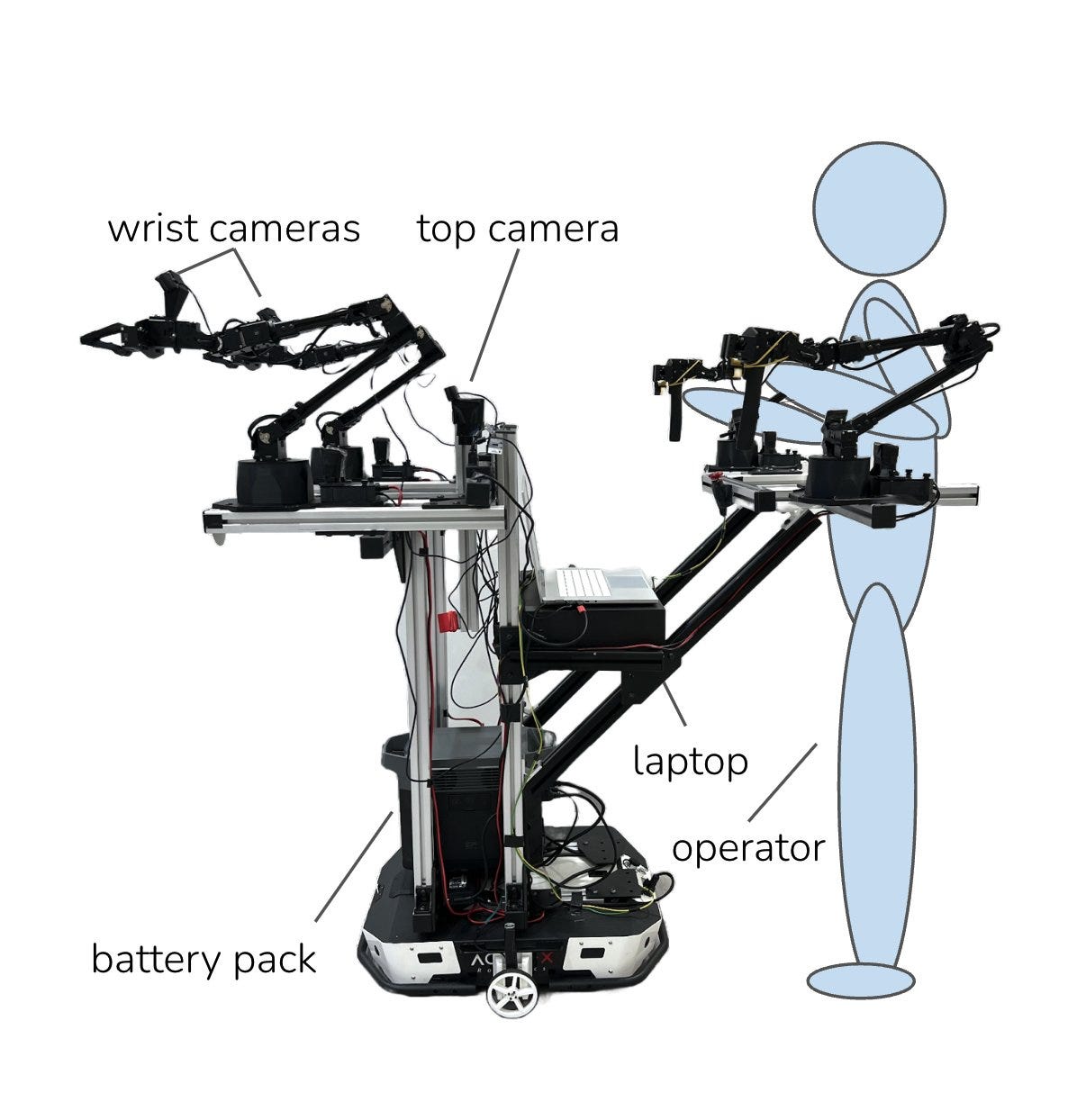

Stanford Mobile ALOHA bot open sources, shows cooking

Back in March, Stanford folks introduced ALOHA, (A Lowcost Open Hardware system for Bimanual Teleoperation)

Basically a 4 arm robot, that a human operator can operate tasks and do fine motor skills like break an egg or tie ziptie. Well now, just 10 months later, they are introducing the Mobile version. A mounted ALOHA gear, that uses the human to perform tasks like cooking, calling the elevator and is able to learn from those actions, and then perform them.

The operating gear can be easily detached for self operation, it's mobile so compute and battery pack are on the wheel base.

Recently Meta released a huge dataset of first person operations called Ego-Exo 4D which combines first person and third person perspective for a big variety of tasks, such as cooking, cleaning, sports, healthcare and rock climbing, and this open hardware from Stanford is an additional example of how fast robotics advances into the physical world

And just like that, the first ThursdAI of the year is done! 🫡 Thank you for being a subscriber, see you next week 👏