ThursdAI October 26th

Hey everyone, welcome to ThursdAI! This is Alex Volkov, and I'm very happy to bring you another weekly installment of 📅 ThursdAI.

TL;DR of all topics covered:

Open Source LLMs

JINA - jina-embeddings-v2 - First OSS embeddings model with 8K context (Announcement, HuggingFace)

Simon Willison's guide to embeddings (Blogpost)

Data Provenance Initiative - public audit of 1800+ datasets (Announcement)

Huge open source LLM comparison from r/LocalLLaMA (Thread)

Big CO LLMs + APIs

NVIDIA Research's new spin on robot learning (Announcement, Project)

Microsoft / Github - Copilot crossed 1 million paying users (X)

RememberAll open source (X)

HuggingFace Gradio-lite (X, Playground)

Voice

Gladia announces multilingual near real time whisper transcriptions (X, Announcement)

AI Art & Diffusion

Segmind releases SSD-1B - 50% smaller and 60% faster version of SDXL (Blog, Hugging Face, Demo)

Prompt techniques

How to use seeds in DALL-E to add/remove objects from generations (by Simon Willison - Thread)

This week was a mild one in terms of updates, believe it or not. We didn't get a new state-of-the-art open source large language model, but we did get a new state-of-the-art embeddings model from Jina AI, supporting an 8K sequence length.

We also had quite a quiet week from the big dogs. OpenAI is probably sitting on updates until Dev Day (which I'm going to cover for all of you, thanks to Logan for the invite). Google had some leaks about Gemini (we're waiting!) and another AI app builder thing. Apple is teasing new hardware coming soon (but nothing AI related). And Microsoft / GitHub announced that Copilot has 1 million paying users! (I tweeted this, and Idan Gazit, Sr. Director at GitHub Next, where Copilot was born, replied that "we're literally just getting started" and mentioned November 8th as... a date to watch, so mark your calendars for some craziness in the next two weeks.)

Additionally, we covered the Data Provenance Initiative, which helps sort and validate licenses for over 1800 public datasets. It is a massive effort led by Shayne Redford with assistance from many folks, including friend of the pod Enrico Shippole. We also covered another massive evaluation effort by a user named WolframRavenwolf on the LocalLLaMA subreddit, who evaluated and compared 39 open source models and GPT-4. Not surprisingly, the best model right now is the one we covered last week, OpenHermes 7B from Teknium.

We covered two additional updates. First, Gladia AI released their version of Whisper over WebSockets, and I covered it on X with a reaction video. It allows developers to stream speech to text with very low latency, and it's multilingual as well. If you're building an agent that folks can talk to, definitely give this a try. Finally, we covered Segmind's SSD-1B, a distilled version of SDXL that is 50% smaller in size and 60% faster in generation speed (you can play with it here).

This week I was lucky to host 2 deep dive conversations. The first was with Bo Wang from Jina AI, where we covered embeddings, vector latent spaces, dimensionality, and how they retrained BERT to allow for longer sequence lengths. It was a fascinating conversation, and it's well worth a listen even if you don't understand what embeddings are.

In the second part, I had the pleasure of hosting Abubakar Abid, head of Gradio at Hugging Face, to talk about Gradio and its effect on the open source community. We were then joined by Yuichiro and Xenova to talk about the next iteration of Gradio, called Gradio-lite, which runs completely within the browser, no server required.

It was a fascinating conversation if you're a machine learning engineer, an AI engineer, or just someone interested in this field. We covered a LOT of ground, including Emscripten, Python in the browser, Gradio as a tool for ML, WebGPU, and much more.

I hope you enjoy this deep dive episode with 2 of the authors behind this week's updates, and I hope to see you in the next one.

P.S - if you've been participating in the emoji of the week, and have read all the way up to here, your emoji of the week is 🦾, please reply or DM me with it 👀

Timestamps and full transcript for your convenience

## [00:00:00] Intro and brief updates

## [00:02:00] Interview with Bo Wang, author of Jina Embeddings V2

## [00:33:40] Hugging Face open sourcing a fast Text Embeddings

## [00:36:52] Data Provenance Initiative at dataprovenance.org

## [00:39:27] LocalLLama effort to compare 39 open source LLMs +

## [00:53:13] Gradio Interview with Abubakar, Xenova, Yuichiro

## [00:56:13] Gradio effects on the open source LLM ecosystem

## [01:02:23] Gradio local URL via Gradio Proxy

## [01:07:10] Local inference on device with Gradio - Lite

## [01:14:02] Transformers.js integration with Gradio-lite

## [01:28:00] Recap and bye bye

Full Transcription for your convenience:

[00:00:00] Alex Volkov: Hey, everyone. Welcome to ThursdAI. My name is Alex Volkov, and I'm very happy to bring you another weekly installment of ThursdAI. This week was actually a mild one in terms of updates, believe it or not. We didn't get a new state-of-the-art open source large language model this week. However, we did get a new state-of-the-art embeddings model, and we're going to talk about that. We got very lucky that one of the authors of this embeddings model, called Jina Embeddings V2, Bo Wang, joined us on stage. He gave us a masterclass in embeddings and shared some very interesting things about it, including some stuff they haven't shared yet. So it's definitely worth a listen. Additionally, we covered the Data Provenance Initiative, which helps sort and validate licenses for over 1800 public datasets. It's a massive effort led by Shayne Redford with assistance from many folks, including friend of the pod Enrico Shippole.

[00:01:07] We also covered the massive effort by another user, named WolframRavenwolf, on the LocalLLaMA subreddit. That effort evaluated and compared 39 open source models ranging from 7 billion to 70 billion parameters, and threw in a GPT-4 comparison as well. Not surprisingly, the best model right now is the one we covered last week from friend of the pod Teknium, called OpenHermes 7B.

[00:01:34] We covered two additional updates. The first is Gladia AI, a company that offers transcription and translation APIs. They released their version of Whisper over WebSockets, which means live transcription. I covered it on X with a reaction video, and I'll add that link in the show notes. It allows developers like you to stream speech to text with very low latency and high quality, and it's multilingual as well. So if you're building an agent that your users can talk to, definitely give this a try. And finally, Segmind, a company that just decided to open source a distilled version of SDXL, making it 50% smaller in size and, in addition to that, 60% faster in generation speed. The links to all these will be in the show notes.

[00:02:23] But this week I was lucky to host two deep dives. The first was with Bo Wang, whom I mentioned. We covered embeddings, vector latent spaces, dimensionality, and how they retrained the BERT model to allow for a longer sequence length. It was a fascinating conversation. Even if you don't understand what embeddings are, it's well worth the listen. I learned a lot, and I hope you will as well. In the second part, I had the pleasure of hosting Abubakar Abid, the head of Gradio at Hugging Face, to talk about Gradio: what it is, and its effect on the open source community. Then we were joined by Yuichiro and Xenova to talk about the next iteration of Gradio, called Gradio-lite, which runs completely within the browser. No server required. We also covered a bit of what's coming to Gradio in the next release, on October 31st.

[00:03:15] It's a fascinating conversation if you're a machine learning engineer, an AI engineer, or just somebody who's interested in this field. You've probably used Gradio even if you haven't written any Gradio apps, because every model on Hugging Face usually gets a Gradio demo.

[00:03:30] And we covered a lot of ground, including Emscripten, Python in the browser, Gradio as a tool for machine learning, WebGPU, and so much more.

[00:03:38] Again, it's a fascinating conversation. I'm humbled by the fact that the people who produced the updates we cover sometimes come to ThursdAI and talk to me about the things they released. I hope this trend continues, I hope you enjoy this deep dive episode, and I'll see you in the next one. And now I give you ThursdAI, October 26th. Oh, awesome. It looks like you joined us, Bo. Let's see if you're connected to the audience. Can you unmute yourself so we can check whether we can hear you?

[00:04:22] Bo Wang: Hi, can you hear me? Oh, you can hear me fine, awesome. This feature of Twitter...

[00:04:30] Alex Volkov: That's awesome. This usually happens: folks join, it's their first space, and then they can't leave us. So let me just do a little... Maybe, actually, maybe you can do it, right? Please introduce yourself.

[00:04:42] I think I followed you a while ago, because I've been mentioning embeddings and the MTEB leaderboard on Hugging Face for a while. Obviously, embeddings are not a new concept, right? We started with Word2Vec ten years ago. But now, with the rise of LLMs and AI tools, many people want to understand the similarity between a user query and something they stored in a database, and embeddings have seen a huge boom.

[00:05:10] We've also seen all the vector databases pop up like mushrooms after the rain. I think Spotify just released a new one, and my tweet was like, "Hey, do we really need another vector database?" But Bo, I think I started following you because you mentioned that you were working on something that's

[00:05:25] coming very soon, and it was finally released this week. So thank you for joining us, Bo, and thank you for doing your first ever Twitter space. Can we start with your introduction, who you are and how you're involved with this effort, and then we can talk about Jina?

[00:05:41] Bo Wang: Yes, sure. Basically, I have a very different background. I'm originally from China, and my bachelor's was more related to text retrieval. I would say I have a retrieval background rather than a pure machine learning background. Then I came to Europe, to the Netherlands, about seven or eight years ago as an international student.

[00:06:04] I was really, really lucky to meet my supervisor there. She guided me into the world of multimedia information retrieval, multimodal information retrieval, this kind of thing. That was around 2015 or 2016. I also picked up machine learning there, because when I was doing my bachelor's, it wasn't really hot yet.

[00:06:27] That was around 2013, 2014. Then machine learning became really good, and I was really motivated: how can I apply machine learning to search? That is my biggest motivation. So during my master's, I collaborated with friends in the US, in China, and in Europe, and we started a project called MatchZoo.

[00:06:51] At that time, embeddings for search were basically nothing. We built open source software that became, at that time, the standard for neural retrieval or neural search, this kind of thing. Then when BERT got released, our project basically got killed, because everyone's focus shifted to BERT. But it's quite interesting.

[00:07:16] Then I graduated and worked as a machine learning engineer for three years in Amsterdam. Then I moved to Berlin and joined Jina AI three years ago as a machine learning engineer. I have basically always worked on neural search, vector search, and how to use machine learning to improve search. That is my biggest motivation.

[00:07:37] That's it.

[00:07:38] Alex Volkov: Awesome. Thank you for sharing with us and coming up. Jina AI is the company you're now working at, and the embeddings model we're going to talk about is from Jina AI. There's one thing I missed in my introduction that I want to mention: the reason why embeddings are so hot right now.

[00:07:53] Vector DBs are so hot right now because pretty much everybody does RAG, Retrieval Augmented Generation. And obviously, for that, you have to store some information as embeddings, figure out how to chunk your text, figure out how to do the retrieval, all these things.

[00:08:10] Many people understand that even though in-context learning is this incredible thing for LLMs, and you can do a lot with it, you may not want to spend that many tokens of your allowance, right? Or you may not have enough room in the context window of some other LLMs. So embeddings enable one of the main ways to interact with these models right now, which is RAG.
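The retrieval step of RAG comes down to a few operations. You embed the query, compare it against the stored chunk embeddings, and hand the closest chunks to the LLM. Below is a minimal Python sketch under one loud assumption: `toy_embed` is a hypothetical hashed bag-of-words stand-in for a real embedding model. It only captures word overlap, not meaning.

```python
import hashlib
import math


def toy_embed(text: str, dim: int = 1024) -> list[float]:
    """Hypothetical stand-in for a real embedding model: a hashed bag of
    words, L2-normalized. It captures word overlap only, not meaning."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit length, so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Embed the query and every stored chunk, then return the k most similar chunks."""
    q = toy_embed(query)
    scored = [(cosine(q, toy_embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


chunks = [
    "jina embeddings support 8k context",
    "gradio lite runs in the browser",
    "ssd-1b is a distilled version of sdxl",
]
print(retrieve("which embeddings support 8k context", chunks, k=1))
```

A real pipeline would embed the chunks once, store the vectors in a vector database, and swap `toy_embed` for an actual embedding model. The sketch recomputes everything on each call for brevity.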

[00:08:33] I think we covered open source embeddings compared to OpenAI's Ada 002 embedding model a while ago on ThursdAI. It's been clear that models like GTE and BGE are the top ones on the Hugging Face MTEB (Massive Text Embedding Benchmark) leaderboard, at least before you guys released. And thank you, Hugging Face, for doing this leaderboard.

[00:09:02] They are great for open source, but recently people have been saying they're limited in context length. Bo, if you don't mind, please present what you guys released this week (I guess it's open source as well). Please talk through Jina Embeddings v2 and how it differs from everything else we've talked about.

[00:09:21] Bo Wang: Okay, good. We've been working on embeddings for, how can I say, maybe two and a half years. But previously we were doing it at a much smaller scale. We built all the algorithms and the whole platform, even a cloud fine-tuning platform, to help people build better embeddings. So there is a project, not open source but closed source, called Finetuner, which we built to help users build better embeddings.

[00:09:53] But then we found that maybe we were too early, because people were not even using embeddings yet. How could they fine-tune them? So we decided to make a move and scale up our, how can I say, ambition: we decided to train our own embeddings. Six months ago we started to train from scratch. Well, not really from scratch, because embedding training normally happens in two stages.

[00:10:23] In the first stage, you pre-train on a massive scale of text pairs. Your objective is to bring the two texts in each pair as close as possible, because they should be semantically related to each other. In the next stage, you fine-tune with carefully selected triplets, this kind of thing.
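The two stages Bo describes correspond to two common contrastive objectives. The Python sketch below is a generic formulation, not Jina's training code. It shows an InfoNCE-style loss with in-batch negatives for the pair stage and a triplet margin loss for the triplet stage. The `temperature` and `margin` values are illustrative assumptions.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(queries, positives, temperature=0.05):
    """Pair-stage style loss: query i should be most similar to positive i.
    The other positives in the batch act as negatives (in-batch negatives)."""
    total = 0.0
    for i, q in enumerate(queries):
        logits = [cosine(q, p) / temperature for p in positives]
        # Cross-entropy of the correct pair, using log-sum-exp for numerical stability.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]
    return total / len(queries)


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Triplet-stage style loss: the positive must beat the (hard) negative by `margin`."""
    return max(0.0, margin - cosine(anchor, positive) + cosine(anchor, negative))


queries = [[1.0, 0.0], [0.0, 1.0]]
positives = [[0.9, 0.1], [0.1, 0.9]]
print(info_nce(queries, positives))        # aligned pairs: low loss
print(info_nce(queries, positives[::-1]))  # swapped pairs: high loss
print(triplet_loss([1.0, 0.0], [0.9, 0.1], [0.1, 0.9]))
```

In real training these losses are computed on model outputs and minimized with gradient descent. Here they are only evaluated on fixed toy vectors to show what each one rewards.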

[00:10:43] So we started from scratch by collecting data. That was about six months ago: three to four engineers worked together, basically scouting every possible pair from the internet. From that we created about 1.2 billion sentence pairs, and we started to train our model based on T5.

[00:11:07] T5 is a very popular encoder-decoder model. But if you look at the MTEB leaderboard, or all the models on the market, the reason they only support a 512 sequence length is a constraint of the backbone itself. And after we released the V1 model, we figured out another reason.

[00:11:31] If you look at the MTEB (Massive Text Embedding Benchmark) leaderboard, the one Alex just mentioned... Sorry, but it's really bad, because everyone is overfitting to the leaderboard. That naturally happens. If you look at BGE and GTE, the scores would never be that high if they didn't add the training data into the, into the... That's really bad.

[00:12:00] So we decided to take a different approach. The biggest problem we wanted to solve first was improving the quality of the embeddings. The second was enabling users to use longer context lengths. To give users longer context lengths, we had to rework the BERT model, because basically every embedding model's backbone comes from BERT or T5.

[00:12:27] So we started from scratch and asked: why not just borrow the latest research from large language models? Every large language model wants a large context, so why not bring those research ideas into masked language modeling? We borrowed ideas such as rotary position embeddings and ALiBi, and reworked BERT.

[00:12:49] We call it JinaBERT, and it can now handle much longer sequences. So we trained BERT from scratch, and BERT became a byproduct of our embeddings work. Then we used JinaBERT to contrastively train the models on the semantic pairs and triplets, which finally allowed us to encode 8K of context.

[00:13:15] Alex Volkov: Wow, that's impressive. Just to react to what you're saying: pretty much everyone uses BERT, or at least used it, right? At least on the MTEB leaderboard. I've also noticed many other examples that use BERT or DistilBERT and stuff like this. If I'm understanding correctly, you're saying BERT was the sequence-length limitation

[00:13:36] for the other open source embedding models, right? And the OpenAI one, which is not open source, does have an 8,000 sequence length. If I'm explaining it correctly, sequence length is just how much text you can embed without chunking.
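Here is what "how much text you can embed without chunking" means in practice: any document longer than the model's maximum sequence length has to be split into pieces. The minimal Python sketch below treats whitespace-separated words as a stand-in for real tokenizer tokens, which is an assumption, since real tokenizers usually produce more tokens than words. The 6,000-token document is hypothetical.

```python
def chunk_tokens(tokens, max_len, overlap=0):
    """Split a token list into windows of at most max_len tokens,
    repeating `overlap` tokens between consecutive windows."""
    if max_len <= 0 or not 0 <= overlap < max_len:
        raise ValueError("need max_len > 0 and 0 <= overlap < max_len")
    step = max_len - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the document
    return chunks


doc = ["tok"] * 6000  # hypothetical 6,000-token document
print(len(chunk_tokens(doc, 512)))   # 512-token model: 12 chunks
print(len(chunk_tokens(doc, 8192)))  # 8K-token model: 1 chunk
```

The overlap is a common design choice: repeating a few tokens between windows keeps a sentence that straddles a boundary at least partly intact in both chunks.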

[00:13:51] Yes. And you're saying that you guys saw this limitation and retrained BERT to use rotary embeddings. We've talked about rotary embeddings multiple times here, and we've had the folks behind the YaRN paper on extending context windows. ALiBi comes from Ofir Press, whom we follow.

[00:14:08] I don't think Ofir has ever joined ThursdAI, but Ofir, if you hear this, you're welcome to join as well. ALiBi is another way to extend context windows, and I think the Mosaic folks used ALiBi, along with some others. Bo, could you say more about borrowing ideas from there, retraining BERT into JinaBERT, and whether JinaBERT is also open source?

[00:14:28] Bo Wang: Oh, we actually want to make JinaBERT open source, but I need to align with my colleagues. That's a decision still to be made. The idea is quite naive. I don't want to dive too deep into technical details, but basically ALiBi removes the position embeddings from large language model pre-training.

[00:14:55] The ALiBi technique allows us to train on shorter sequences but run inference on very long sequences. If I remember correctly, the author of the ALiBi paper trained models on 512 and 1,024 sequence lengths, but was able to run inference on 16K sequence lengths.

[00:15:23] You can't expand it further than that, because of the limitation of the hardware, the GPU. So he tested up to 16K sequence lengths. What we did was borrow this idea from autoregressive models and bring it into masked language models: we removed the position embeddings from BERT and added the ALiBi slopes and all the other ALiBi pieces into it.
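As described above, ALiBi replaces learned position embeddings with a fixed, head-specific penalty proportional to the distance between two tokens. This penalty is added to the attention scores. Because it is a formula rather than a learned table, it can be computed for any sequence length, which is what makes "train short, infer long" possible. The Python sketch below uses the slope schedule from the ALiBi paper for a power-of-two number of heads. The symmetric |i - j| distance for a bidirectional encoder like BERT is an assumption here and may not match JinaBERT's exact variant.

```python
def alibi_slopes(num_heads):
    """Geometric slopes 2^(-8/n), 2^(-16/n), ..., 2^(-8) for n heads (n a power of two)."""
    if num_heads & (num_heads - 1) != 0:
        raise ValueError("this sketch only handles a power-of-two head count")
    return [2 ** (-8 * (h + 1) / num_heads) for h in range(num_heads)]


def alibi_bias(seq_len, num_heads):
    """Return bias[head][i][j] = -slope_head * |i - j|.
    The bias is added to the attention logits before the softmax, so nothing
    positional is learned and any seq_len works."""
    slopes = alibi_slopes(num_heads)
    return [
        [[-slope * abs(i - j) for j in range(seq_len)] for i in range(seq_len)]
        for slope in slopes
    ]


# The same function serves a short "training" length and a longer "inference" length.
short = alibi_bias(4, 8)
longer = alibi_bias(16, 8)
print(alibi_slopes(8)[:3])
```

Heads with large slopes focus on nearby tokens, while heads with small slopes can still attend far away. This mix is part of why the bias extrapolates gracefully to longer inputs.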

[00:15:49] We also borrowed things from how BERT, or rather RoBERTa, was trained, and retrained BERT. I never imagined BERT could be a byproduct of our embedding model, but it happened. We could open source it, but I have to discuss it with my colleagues.

[00:16:09] Alex Volkov: Okay. So when you talk to your colleagues, first of all, tell them you already said on the ThursdAI stage that you may do this.

[00:16:15] Your colleagues are welcome to join as well. And when you open source it, you're welcome to come here and tell us about it. We love open source: the more you guys do, the better, and of course the more of it happens on the ThursdAI stage, the better. Bo, you guys released Jina Embeddings Version 2, correct?

[00:16:33] Jina Embeddings Version 2 has a sequence length of 8K tokens. Just for folks in the audience, 8,000 tokens is, I want to say, roughly 6,000 words in English, right? It differs for other languages as well. Could you talk about multilinguality? Is it multilingual, or only English?

[00:16:53] How does that play out within the embedding model?

[00:16:57] Bo Wang: Okay, actually, our Jina Embeddings V2 is English only, so it's a monolingual embedding model. If you look at the MTEB benchmark, all the public multilingual models are, well, multilingual. But to be frank, I don't think that's a fair solution.

[00:17:18] I think at least every major language.

[00:17:24] We decided to take another, harder path. We will not train a multilingual model; we will train bilingual models. Our first targets will be German and Spanish. At Jina AI, we basically keep our English embedding model fixed as it is, while continuously adding German data and Spanish data into the embedding model.

[00:17:51] Our embedding model cares about two things. First, we make it bilingual, so it's German-English, Spanish-English, Japanese-English, whatever. And we want it to work well monolingually. So imagine you have a German-English embedding model.

[00:18:12] If you search in German, you get German results. If you search in English, you get English results. But we also care about the cross-linguality of this bilingual model. Imagine you encode two sentences with the same meaning, one in German and one in English: we want these two vectors to be mapped to a similar place in the semantic space.
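One way to check the cross-lingual property described above is bitext retrieval. You embed sentence pairs that mean the same thing in two languages, then test whether each sentence's nearest neighbor in the other language is its translation. The Python sketch below shows the idea. The vectors are made-up placeholders, not outputs of any real model.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def bitext_accuracy(src_vecs, tgt_vecs):
    """Fraction of source sentences whose most similar target vector is
    the aligned translation (row i of src should match row i of tgt)."""
    hits = 0
    for i, s in enumerate(src_vecs):
        sims = [cosine(s, t) for t in tgt_vecs]
        best = max(range(len(sims)), key=sims.__getitem__)
        hits += best == i
    return hits / len(src_vecs)


# Hypothetical toy vectors: row i of `english` should align with row i of `german`.
english = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.2]]  # e.g. "milk", "bread"
german = [[0.9, 0.2, 0.0], [0.1, 0.9, 0.3]]   # e.g. "Milch", "Brot"
print(bitext_accuracy(english, german))
```

A good bilingual model should score close to 1.0 on a large set of real sentence pairs. A model that only works monolingually will score much lower.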

[00:18:36] I'm a foreigner myself. Sometimes when I buy things at the supermarket, I have to use Google Translate to translate, for example, milk into Milch in German, then put that into the search box. I really want this bilingual model to happen. And I believe every major language, at least, deserves such an embedding model.

[00:19:03] Alex Volkov: Absolutely. And thanks for clarifying this. As the founder of Targum, which translates videos, one of the things I often talk about here on ThursdAI is how much language barriers prevent folks from talking to each other. And embeddings are definitely... the way people extend memories, right?

[00:19:21] So it's a huge thing you guys are working on, and especially helpful. On sequence length, I think we have a question from the audience: what does a longer sequence length actually allow people to do? I've worked with some other folks in the embedding space too. Could you talk about what longer sequence lengths now unlock for people who want to use open source embeddings?

[00:19:41] Obviously, my answer here would be: well, OpenAI's embeddings model is the most widely used one, but with that one you have to go online and send your data to OpenAI, you have to have a credit card with them, blah, blah, blah, and you have to be in a supported country. Could you talk a little bit about what longer sequence length unlocks once you release something like this?

[00:20:02] Bo Wang: Okay, actually, we didn't think too much about applications. For most vector embedding applications, you can imagine search and classification: you build another layer on top, say a classifier, to classify items based on the representation. You can build clustering. You can do anomaly detection on NLP text.
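As an example of building on top of the representation, a nearest-centroid classifier averages the embeddings of each labeled class. It then assigns a new embedding to the class whose average is most similar. The Python sketch below uses hypothetical 2-D vectors as placeholders for real embeddings, and the class names are invented for illustration.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def centroid(vectors):
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def fit_centroids(labelled):
    """labelled: dict mapping a class name to a list of embedding vectors."""
    return {label: centroid(vecs) for label, vecs in labelled.items()}


def classify(vec, centroids):
    """Pick the class whose centroid has the highest cosine similarity to vec."""
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))


train = {
    "sports": [[0.9, 0.1], [0.8, 0.2]],
    "finance": [[0.1, 0.9], [0.2, 0.8]],
}
centroids = fit_centroids(train)
print(classify([0.7, 0.3], centroids))
```

The same similarity scores support simple anomaly detection. An item whose best similarity to every centroid is unusually low can be flagged for review.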

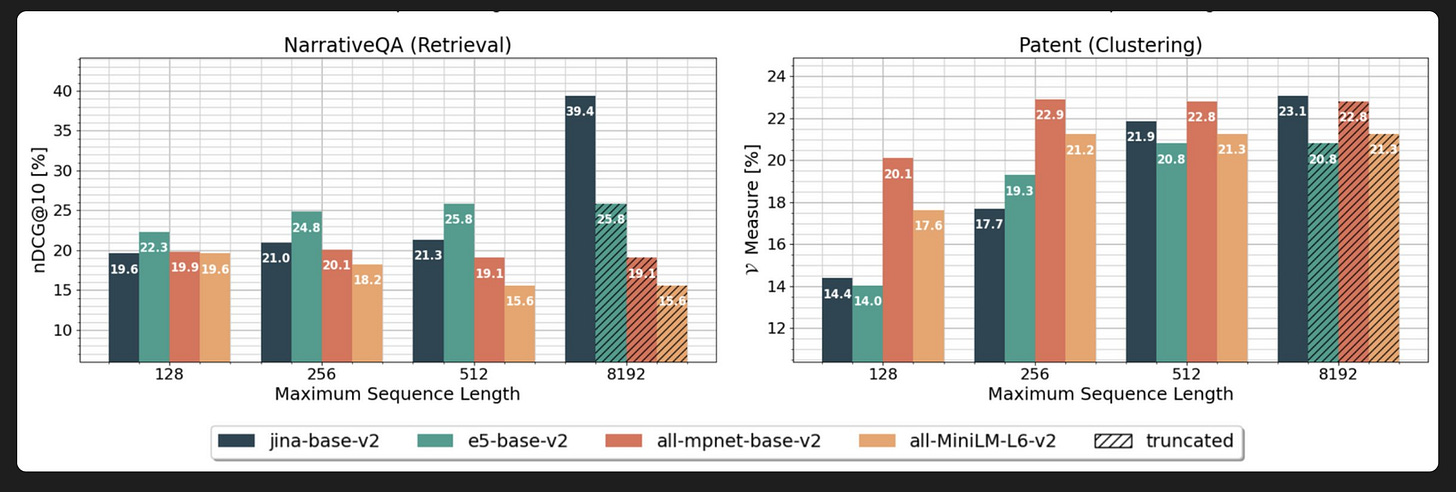

[00:20:22] Those are the applications I can imagine. But the most important thing, and I have to be frank with you because we are also writing a technical report, something like a paper we may submit to an academic conference, is this: longer sequence lengths don't always help. If the important message is at the front of the document you want to embed, it makes the most sense to encode just, let's say, 256 tokens

[00:20:53] or 512. But if the answer sits in the middle or at the end of the document, you will never find it once the text is truncated. Another situation we find very interesting is clustering tasks. Imagine you want to visualize your embeddings: for clustering tasks, a longer sequence length almost always helps.

[00:21:21] And to be frank, I don't care too much about the application. What we're offering is, how can I say, like a key: we unlock the 512 sequence length limit so people can explore beyond it. If you only need, let's say, 2K, then you just set the tokenizer's max length to 2K

[00:21:44] and embed based on your needs. I just don't want people to be limited by the backbone, by the 512 sequence length. I think that's the most important thing.
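Setting a maximum length amounts to keeping only the first N tokens. The Python sketch below shows the failure mode Bo describes: if the relevant text sits past the cutoff, it never reaches the embedding. It uses whitespace "tokens" and a made-up document, both assumptions chosen for illustration.

```python
def truncate(tokens, max_len):
    """Keep only the first max_len tokens, as a tokenizer with truncation enabled would."""
    return tokens[:max_len]


# Hypothetical document: 600 filler tokens, then the sentence that answers the query.
document = ["filler"] * 600 + ["the", "answer", "is", "42"]

for max_len in (512, 2048):
    kept = truncate(document, max_len)
    print(max_len, "answer" in kept)  # is the answer still visible to the model?
```

With a 512-token limit the answer is cut off. With 2048 it survives. This is why the right maximum length depends on where the important content tends to appear in your documents.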

[00:21:55] Alex Volkov: That's awesome. Thank you for that, and thank you for your honesty as well. I love it, I appreciate it. There's research and there's application, and you don't necessarily have to be limited by having an application in mind.

[00:22:07] You do research because it opens up doors, and I love hearing that. Bo, the last thing I would love to talk to you about, as the expert here, is dimensions. I think dimensionality is very important for embeddings, and OpenAI's is one of the highest.

[00:22:21] What they give us is something like 1,200 dimensions.

[00:22:26] And I think Jina is around 500 or so, is that correct? Could you talk about that concept in broad strokes for people who may not be familiar? And also, why is OpenAI, the state of the art, so far ahead?

[00:22:39] And what will it take for open source embeddings to catch up in dimensionality?

[00:22:46] Bo Wang: You mean the dimensionality of the vectors? Okay, we follow a very standard BERT size. The only things we modified are the ALiBi part and some of the training.

[00:22:58] Our small model's dimensionality is 512, and the base model's is 768. We also have a large model, which hasn't been released because training is too slow: even if the model size is small, we have so much data to train on. The large model's dimensionality is 1,024. And if my memory is correct, OpenAI's Ada 002

[00:23:23] has a dimensionality of 1,536, something like that, which is a very strange dimensionality, I have to say. I would say higher dimensionality might be better, or more expressive, but lower dimensionality means vector search is going to be much faster.

[00:23:48] So it's something you have to balance, depending on whether query speed or retrieval speed matters more to you. And if I know correctly, some vector databases make money by dimensionality: they charge you by the dimensionality, so it's actually quite expensive if your dimensionality is too high.

[00:24:13] So it's a balance between expressiveness, speed, and the cost you want to invest. It's very hard to determine, but I think 512, 768, and 1,024 are very common, following BERT.
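Here is a rough way to see the cost side of this trade-off. Storing raw float32 vectors takes 4 bytes per dimension, and a brute-force search does one multiply and one add per dimension for every stored vector. The Python sketch below is a back-of-the-envelope calculation for a hypothetical collection of one million vectors, and it ignores index overhead and compression. One million 512-dimensional vectors come to about 2 GB, and 1,536 dimensions triple that.

```python
BYTES_PER_FLOAT32 = 4


def index_size_gb(num_vectors, dim):
    """Raw storage for float32 vectors, in gigabytes (10^9 bytes)."""
    return num_vectors * dim * BYTES_PER_FLOAT32 / 1e9


def brute_force_flops(num_vectors, dim):
    # One multiply and one add per dimension for each stored vector, per query.
    return 2 * num_vectors * dim


for dim in (512, 768, 1024, 1536):
    size = index_size_gb(1_000_000, dim)
    print(dim, f"{size:.2f} GB", brute_force_flops(1_000_000, dim), "FLOPs per query")
```

Both numbers grow linearly with dimensionality. Approximate nearest-neighbor indexes reduce the per-query work, but storage and memory still scale with the dimension count.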

[00:24:34] Alex Volkov: So great to hear that a bigger model is also coming, but it hasn't been released yet. So there's like the base model and the small model for embeddings, and we're waiting for the next one as well.

[00:24:46] I wanted to ask you to simplify the concept of dimensionality for the audience. What is the difference between embeddings stored with 512 dimensions and, say, 1,536 or whatever OpenAI uses? What does it mean for quality? You mentioned speed, right? It's easier to look up nearest neighbors in a 512-dimensional space. But what does it mean for the quality of lookups, for the different ways strings can be compared? Could you simplify the whole concept, if possible, for people who don't speak embeddings?

[00:25:19] Bo Wang: Okay maybe let me quickly start with the most basic version.

[00:25:24] Imagine you type something into a search box right now. The matching actually uses an embedding too. In a simplified version, it's a binary embedding. Imagine there are 3,000 words in English (there are definitely many more). Then the vector has 3,000 dimensions.

[00:25:48] Current search or matching solutions work like this. If your document contains a token, that token's entry is one. If your query contains the same token, it matches the document token. But it's also about how frequently the token appears and how rare it is.
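The keyword matching Bo describes starts from a vocabulary-sized vector that marks which words occur. It then weights each word by how often it appears in the document and how rare it is across documents, which is essentially TF-IDF. The Python sketch below uses a made-up three-document corpus. The smoothed IDF formula is one common choice among several, assumed here for illustration.

```python
import math
from collections import Counter


def tf_idf_vectors(docs):
    """Return (vocabulary, per-document sparse vectors as dicts word -> weight).
    Storing only non-zero entries in a dict reflects how sparse these vectors are."""
    tokenized = [d.lower().split() for d in docs]
    vocab = sorted({w for doc in tokenized for w in doc})
    n_docs = len(docs)
    doc_freq = Counter(w for doc in tokenized for w in set(doc))
    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        vec = {}
        for word, count in counts.items():
            tf = count / len(doc)                                       # how often it appears
            idf = math.log((1 + n_docs) / (1 + doc_freq[word])) + 1     # how rare it is (smoothed)
            vec[word] = tf * idf
        vectors.append(vec)
    return vocab, vectors


docs = ["the milk is fresh", "the bread is fresh", "the cheese is old"]
vocab, vecs = tf_idf_vectors(docs)
print(len(vocab), vecs[0])
```

In the first document, "milk" (unique to it) gets a higher weight than "fresh" (shared by two documents), which in turn outweighs "the" (in every document).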

[00:26:12] So the current solution basically matches by English word. Neural networks work differently. Take ResNet or other classification models: their output is the class of the item. But if you chop off the classification layer, the model gives you a vector.

[00:26:36] This vector is the representation of the information you want to encode. It's a compressed version of that information in a certain dimensionality, such as 512 or 768. So it's a compressed list of mostly non-zero numbers, which we normally call dense vectors.

[00:26:57] They're called dense because, how can I say it in English, they are dense, right? The traditional way we store vectors is much more sparse. It's mostly zeros and ones: zero means the word doesn't exist, and one means it exists. When a word exists in both the query and the document, there is a match, and you get a search result.

[00:27:16] So these dense vectors capture more of the semantics. If you match only by the occurrence of a token or a word, you might lose the semantics.
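Binary occurrence vectors make the limitation Bo points out easy to see. Two texts that mean the same thing but share no words get an overlap of zero, however closely related they are. The Python sketch below uses made-up example phrases.

```python
def binary_vector(text, vocab):
    """1 if the vocabulary word occurs in the text, else 0."""
    words = set(text.lower().split())
    return [1 if w in words else 0 for w in vocab]


def overlap(a, b):
    # Dot product of binary vectors = number of shared words.
    return sum(x * y for x, y in zip(a, b))


query = "buy a car"
document = "purchase an automobile"
vocab = sorted(set(query.split()) | set(document.split()))
print(overlap(binary_vector(query, vocab), binary_vector(document, vocab)))  # 0: no shared words
```

A dense embedding model trained on semantically related pairs is meant to place these two phrases close together even though they share no words.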

[00:27:31] Alex Volkov: Thank you. So, if I'm saying it correctly, more dimensions basically give more aspects of similarity: more things two strings or tokens can be similar on, which means a higher match rate across more kinds of similarity. I think the basics are covered in Simon Willison's post, the first pinned tweet here. Simon did a basic intro to what embedding dimensions mean and why they matter.

[00:28:00] I specifically love that you can do arithmetic with them. I think someone wrote a paper on this even before the whole LLM thing. If you take the embedding for Paris, subtract the embedding for France, and add the embedding for Germany, you get something close to Berlin, for example, right?
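Analogy arithmetic works by adding and subtracting vectors and then finding the nearest remaining word. The Python sketch below runs the classic king - man + woman example on hand-made 3-dimensional toy vectors. The numbers are invented so the example is easy to follow. Real word2vec vectors have hundreds of learned dimensions.

```python
import math

# Hypothetical toy vectors; the dimensions loosely mean (royalty, maleness, femaleness).
vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def sub(a, b):
    return [x - y for x, y in zip(a, b)]


def nearest(target, vocab, exclude):
    """Most similar word to target, skipping the words used to build the query."""
    candidates = [w for w in vocab if w not in exclude]
    return max(candidates, key=lambda w: cosine(target, vocab[w]))


target = add(sub(vectors["king"], vectors["man"]), vectors["woman"])
print(nearest(target, vectors, exclude={"king", "man", "woman"}))
```

The input words are excluded from the search, as is standard for this kind of evaluation. Otherwise the nearest vector is often one of the inputs itself.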

[00:28:19] So there's like concepts in, inside these things that are they're even arithmetic works and if you take like King and you subscribe male, then you get something closer to Queen and stuff like this. It's really, really interesting. And also Bo, you mentioned visualization as well. It's really impossible to visualize.

[00:28:36] 1,024 dimensions, et cetera, right? We humans perceive maybe three dimensions, maybe three and a half, or four with time, whatever. So what usually happens is those many dimensions get downscaled to 3D in order to visualize neighborhoods. And I think we've talked with folks from Arize; they have software called Phoenix that allows you to visualize embeddings for clustering and semantics.
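Tools like Phoenix and Atlas reduce high-dimensional embeddings to 2D or 3D before plotting. Which reduction each tool uses (PCA, UMAP, t-SNE, ...) isn't stated here, so the sketch below simply uses PCA, implemented with power iteration in pure Python so it runs without dependencies. For real workloads you would reach for NumPy or scikit-learn instead.

```python
import math
import random

def pca_project(vectors, k=3, iters=100, seed=0):
    """Project n d-dimensional vectors onto their top-k principal components.

    Uses power iteration with deflation: simple and dependency-free, but slow for large data.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    X = [[v[j] - mean[j] for j in range(d)] for v in vectors]  # center the data
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        w = [rng.gauss(0.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            # One power-iteration step: w <- X^T (X w), i.e. covariance times w (up to a constant).
            xw = [sum(a * b for a, b in zip(row, w)) for row in X]
            w = [sum(X[i][j] * xw[i] for i in range(n)) for j in range(d)]
            # Deflation: remove the directions of components already found.
            for c in components:
                dot = sum(a * b for a, b in zip(w, c))
                w = [a - dot * b for a, b in zip(w, c)]
            norm = math.sqrt(sum(a * a for a in w))
            if norm == 0.0:
                break  # no variance left in the remaining directions
            w = [a / norm for a in w]
        components.append(w)
    # Coordinates of each centered vector along each component.
    return [[sum(a * b for a, b in zip(row, c)) for c in components] for row in X]
```

The first returned coordinate carries the most variance, which is why 3D plots of embeddings can still show meaningful neighborhoods.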

[00:29:02] Nomic AI's Atlas does this as well, right? You can provide embeddings and see clusters of concepts, and it's really pretty cool. If you haven't played with this, if you've only used vector DBs and stored your stuff after chunking but never visualized how it looks, I strongly recommend you do. And Bo, thank you so much for joining us, explaining the internals, and sharing some exciting things about what's to come. Jina BERT is hopefully coming, a retrained version of BERT. BERT is, how should I say, it's hard for me to find the word, but I see it everywhere: it's the backbone of a lot of NLP tasks. It's great to see that you guys are retraining it for longer sequences, using tricks like ALiBi in place of, I think you said, positional embeddings, and I'm hoping to see some open source action from this. A Jina Embeddings large model with more dimensions is also coming, and I'm waiting for that; hopefully you guys didn't stop training it. And I just want to tell folks why I'm excited about this, which will take us to the next

[00:30:08] point as well, because while I love OpenAI, I honestly do, and I'm going to attend their Dev Day and report all the interesting things OpenAI announces, we've been talking, and we'll be talking today, about local inference, about running models on the edge, about running models of your own.

[00:30:28] Nisten is here; he even works on some bootable stuff that you can run completely off the grid. And so far we've been focused on open source LLMs, right? I see Far El from Skunkworks in the audience, and many other fine-tuners, like Teknium and Alignment Lab. All these folks are working on local LLMs, and they haven't gotten to GPT-4 level yet.

[00:30:51] We're waiting for that, and they will get there. But the whole point of them is that you run them locally, they're uncensored, you can do whatever you want, and you can fine-tune them on whatever you want. However, the embeddings part is the glue that connects them to an application, because there's only so much context window, and context is expensive. Even if, theoretically, the YaRN paper, whose authors we've talked with, allows you to extend the context window to 128,000 tokens, the hardware requirements for that are incredible, right?

[00:31:22] Everybody in the world of AI engineering has switched to retrieval-augmented generation. Basically, instead of shoving everything into the context, they said: hey, let's use a vector database. Let's say Chroma, or Pinecone, or Weaviate, all of those; Vectorize from Cloudflare, and the other one from Spotify, I forget its name; even Supabase has one now.

[00:31:43] Everybody seems to have a vector database these days, and the reason is that all the AI engineers now understand that you need to turn text into embeddings and store them in some database. And many pieces of that still required internet access, OpenAI API calls, credit cards, all these things.
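Before text goes into a vector database it is usually split into chunks, and each chunk is embedded separately. A minimal sketch of one common approach: fixed-size word windows with some overlap, so text near a chunk boundary also appears in the neighboring chunk. The default sizes are arbitrary illustrations, not recommendations from the show.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 50) -> list:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks

print(chunk_words("a b c d e f g", size=4, overlap=1))  # ['a b c d', 'd e f g']
```

Each chunk would then be embedded and stored alongside its text, so a search hit can be handed back to the LLM as context.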

[00:32:03] And I think it's great that we've finally gotten to a point where, first of all, there are embeddings that match whatever OpenAI has given us, and now you can run them locally as well. You don't have to go to OpenAI. If you don't want to host them on a server, you can probably run them on your own machine. I think Jina Embeddings base is very tiny, though.

[00:32:20] Like, the small model is 770 megabytes, I think. Maybe a little bit more, if I'm looking at this correctly.

[00:32:27] Bo Wang: Sorry, it's half precision. So you need to double it to get the FP32 size.

[00:32:33] Alex Volkov: Oh yeah, it's half precision. So it's already quantized, you mean?

[00:32:37] Bo Wang: Oh no, it's just stored as FP16.

[00:32:39] Alex Volkov: If you store it as FP16.
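Bo's point is about storage precision: each FP32 weight takes 4 bytes and each FP16 weight takes 2, so an FP16 checkpoint is half the size of the same weights in FP32. That is different from what people usually mean by quantization (int8 or 4-bit formats). A tiny helper makes the arithmetic explicit; the parameter count in the example is hypothetical, not the actual size of any Jina model.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

def weights_size_mb(num_params: int, dtype: str) -> float:
    """Approximate size of the raw weights in megabytes (10**6 bytes), ignoring file-format overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6

params = 100_000_000  # hypothetical parameter count, for illustration only
print(weights_size_mb(params, "fp16"))  # 200.0
print(weights_size_mb(params, "fp32"))  # 400.0
```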

[00:32:43] Oh, if you store it as FP16. But the whole point is, the next segment of ThursdAI today is going to be less about updates and more about very specific things. We've been talking about local inference, and these models are tiny: you can run them on your own hardware, on the edge via Cloudflare, let's say, or on your computer.

[00:32:58] And now you can do almost the whole application end to end: from the point of your user inputting a query, to embedding that query, to running a match, a vector search, KNN or whatever nearest-neighbor search you want for that query, and retrieving all of it with local open source tools. You can basically go offline.
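The query side of that pipeline is: embed the query, then find its nearest neighbors among the stored vectors. Vector databases use approximate indexes to do this at scale; the brute-force sketch below is the exact version, which is fine for a few thousand local vectors. Producing the vectors (for example with a locally run embedding model) is assumed to happen elsewhere, and the toy index in the example is hypothetical.

```python
import heapq
import math

def _normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def knn(query_vec, index, k=3):
    """Brute-force k-nearest-neighbor search by cosine similarity.

    index: list of (doc_id, vector) pairs, e.g. chunk ids and their embeddings.
    Returns up to k (doc_id, score) pairs, best match first.
    """
    q = _normalize(query_vec)
    scored = []
    for doc_id, vec in index:
        score = sum(a * b for a, b in zip(q, _normalize(vec)))
        scored.append((score, doc_id))
    return [(doc_id, score) for score, doc_id in heapq.nlargest(k, scored)]

index = [("doc-a", [1.0, 0.0, 0.0]), ("doc-b", [0.9, 0.1, 0.0]), ("doc-c", [0.0, 0.0, 1.0])]
print(knn([1.0, 0.0, 0.0], index, k=2))  # doc-a first, then doc-b
```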

[00:33:20] And this is what we want in the era of upcoming regulation of what AI can and cannot be, and in the era of open source models getting better and better. We talked last week about how Zephyr and, I think, OpenHermes Mistral from Teknium are matching GPT-3.5 on some things. All of those models you can download, and nobody can tell you not to run inference on them.

[00:33:40] Hugging Face open sourcing a fast Text Embeddings Inference Server with Rust / Candle

[00:33:40] Alex Volkov: But the actual applications still required the web, or they used to. And now I'm loving this new move where even the application layer, the RAG (retrieval-augmented generation) systems, the vector databases, and even the embeddings are coming to open source, coming to your local computer.

[00:33:57] And this will just mean more applications, either on your phone or on your computer, and I absolutely love that. Bo, thank you for that, and thank you for coming up to the stage and talking about the things you guys open sourced. Hopefully we'll see more open source from Jina, and everybody should follow you and Jina as well.

[00:34:13] Thank you for joining. I think the next thing I want to talk about is in this vein as well. Let me go find it. Of course, we love Hugging Face, and if you look at the last tweet that's pinned, it's a tweet from Jerry Liu from LlamaIndex, obviously.

[00:34:33] We're following Jerry and whatever they're building over at LlamaIndex, because they implement everything super fast; I think they also added support for Jina extremely fast. He talks about this thing where Hugging Face open sourced something for us in Rust and Candlestick?

[00:34:51] Candlelight? Something like that? I forget the name of their framework on top of Rust. Basically, they open sourced a server called Text Embeddings Inference that you can run on your own hardware, on your Linux boxes, and basically get the same thing you get from OpenAI Embeddings.

[00:35:07] Because embeddings are just one thing, but they come from a model. You could use this model with transformers, but it wasn't as fast. And as Bo previously mentioned, there are latency considerations for user experience, right? If you're building an application, you want it to be as responsive as possible.

[00:35:24] You need to look at every place in your stack and ask: what slows me down? For many of us, the actual inference, say waiting for GPT-4 on OpenAI to respond and stream that response, is what slows applications down. But for many people who do embeddings, say you have a chat or search interface, you need to embed every query the user sends you.

[00:35:48] And one source of slowness there is how you actually embed it. So it's great to see that Hugging Face is working on that and improving it. You previously could do this with transformers, and now they've released a dedicated server for embeddings called Text Embeddings Inference.

[00:36:04] And I think it's four times faster than the previous way to run this, and I absolutely love it. So I wanted to highlight this in case you're interested. You don't have to use it; you can use OpenAI Embeddings, like we said, we love OpenAI, and it's very cheap. But if you're interested in doing embeddings locally, if you want to go completely end to end offline and build an offline application, using their inference server is a good idea, I think.
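Talking to a locally running Text Embeddings Inference server is a plain HTTP call. The sketch below assumes a server listening on 127.0.0.1:8080 and the `/embed` route taking an `{"inputs": ...}` JSON body, as shown in the project's README; check the docs for the version you deploy. It uses only the Python standard library, and `build_embed_request` and `embed` are illustrative helper names, not part of TEI.

```python
import json
import urllib.request

# Assumption: a TEI server running locally on port 8080 (adjust to your deployment).
TEI_URL = "http://127.0.0.1:8080/embed"

def build_embed_request(texts, url=TEI_URL):
    """Build a POST request with a JSON body of the form {"inputs": [...]}."""
    inputs = [texts] if isinstance(texts, str) else list(texts)
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )

def embed(texts, url=TEI_URL, timeout=10.0):
    """Send the texts to the server and return the decoded JSON (expected: one vector per text)."""
    with urllib.request.urlopen(build_embed_request(texts, url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `embed(["What is Deep Learning?"])` should return a list containing one embedding vector. Because every user query goes through this call, keeping the server local removes a network round trip from each search.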

[00:36:29] It also shows what Hugging Face is doing with Rust, and I really need to remember what that framework is called, but it's definitely a great effort from Hugging Face, and I just wanted to highlight it. Let's see. Before we're joined by the Gradio folks, and I think there are some folks from Gradio in the audience who are ready

[00:36:48] to come up here and talk about local inference in about 15 minutes,

[00:36:52] Data Provenance Initiative at dataprovenance.org

[00:36:52] Alex Volkov: I also wanted to mention the Data Provenance Initiative. Let me find this announcement and quickly paste it here. I was hoping that Enrico could be here. There's a guy named Shayne Longpre,

[00:37:05] and he released this massive, massive effort together with many people. It's called the Data Provenance Initiative, and it now lives at dataprovenance.org. Hopefully somebody can send me the direct link to the tweet so I can add it.

[00:37:23] It's a massive effort to take 1,800 public instruction and alignment datasets and go through them to identify multiple things. You can filter them, exclude them, look at their creators, and, most importantly, look at their licenses. Why would you do this? I don't know if somebody who builds an application necessarily needs this, but for everybody who wants to fine-tune models, data is the most important key, and building datasets and running them through your fine-tuning efforts is basically the number one thing many people in the fine-tuning community do, right?

[00:38:04] Data wranglers. And now, thank you, Nisten, thank you so much, it's pinned to the top of the space, the nest, whatever it's called. Enrico Shippole, a friend of the pod, who we've talked with previously about extending, I think, Llama first to 16k and then to 128k context,

[00:38:24] and who I think is also on the team behind the YaRN paper, joined this effort, and I was hoping Enrico could join us to talk about it. But basically, if you're doing anything with data, this is a massive, massive effort. It covers many datasets, from LAION, which we've talked about, to Alpaca, GPT4All, Gorilla, all these datasets.

[00:38:46] When you release your model as open source, it's very important that you have the license to actually release it. You don't want legal exposure, you don't want to get sued, whatever. And if you're finding datasets and creating different mixes to fine-tune different models, this is a very important resource.
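The practical payoff of provenance metadata is being able to filter a data mix by license before fine-tuning. A minimal sketch, assuming you have exported records with a name and a license field: the record type, field names, dataset names, and the allow-list are all hypothetical, and deciding which licenses actually permit your use is a legal question the code can't answer.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DatasetRecord:
    name: str
    license: str  # hypothetical field holding a license identifier, e.g. "apache-2.0"

# Hypothetical allow-list; adjust it to your own legal review.
ALLOWED_LICENSES = {"apache-2.0", "mit", "cc-by-4.0"}

def filter_by_license(records, allowed=ALLOWED_LICENSES):
    """Keep records whose license is on the allow-list; unknown or missing licenses are excluded."""
    return [r for r in records if r.license.strip().lower() in allowed]

mix = [
    DatasetRecord("dataset-a", "Apache-2.0"),
    DatasetRecord("dataset-b", "CC-BY-NC-4.0"),
    DatasetRecord("dataset-c", "unknown"),
    DatasetRecord("dataset-d", "MIT"),
]
print([r.name for r in filter_by_license(mix)])  # ['dataset-a', 'dataset-d']
```

Excluding unknown licenses by default is the conservative choice: a dataset only enters the mix once someone has confirmed its terms.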

[00:39:03] And I want to shout out Shayne Longpre, Enrico, and everybody who worked on this, because I just love these efforts for the open source community. It makes it easier to fine-tune and train models, it makes it easier for us to advance and get better and smaller models, and it's worth celebrating, and ThursdAI is the place to celebrate this, right?

[00:39:27] LocalLLama effort to compare 39 open source LLMs + GPT4

[00:39:27] Alex Volkov: On the topic of, how should I say, extreme efforts by the community, I want to add another one, and I think I have a way to pull it up, so give me just a second. Yes. A Twitter user named WolframRavenwolf, who participates in the LocalLLaMA community on Reddit and whose post is now pinned to the top of the nest, did this massive effort of comparing open source LLMs: he tested 39 different models ranging from 7 billion to 70 billion parameters, and also compared them to ChatGPT and GPT-4.

[00:40:06] And I just want to circle back to something we said in the previous space as well, and I welcome folks on stage to jump in here. I've seen the same kind of point from Hugging Face folks, too; I think Glenn said the same thing. It's really unfair to take an open source model like Mistral 7B and start comparing it to GPT-4.

[00:40:26] It's unfair for several reasons, and I think it can also obscure, for some people making this comparison, just how far open source models have come over the past year. OpenAI has the infrastructure, they're backed by Microsoft, and they have the pipelines to serve these models way faster.

[00:40:47] Also, those models don't run on local hardware; they don't run on one GPU. It's a whole amazing MLOps effort to bring you that speed. When you're running open source models locally, they're small, and there are drawbacks and trade-offs that you have to bake into your evaluation.

[00:41:09] So comparing them to GPT-4, which is super general and good at many, many things, will just lead to disappointment. However, and we've been talking about this with other open source models, if you have a different benchmark in your head, if you're comparing open source to open source, then it's a completely different ballgame.

[00:41:26] And then you start noticing things like: hey, this 7 billion parameter model is beating a 70 billion one. We're noticing that size is not necessarily king, because if you guys remember, I want to say three months ago, we talked about Falcon 180B. 180B was like three times the size of the next largest model.

[00:41:47] And it was incredible that Falcon open sourced this, but then nobody was really able to run 180B, because it's huge. And once we did run it, we saw that the difference between it and Llama is not great at all, maybe a few percentage points on the evaluations.

[00:42:04] However, the benefits we see come from tinier and tinier local models, like Mistral 7B, for example, which is the one the fine-tuners of the world now prefer to everything else. So when you're about to evaluate whatever next model comes up that we're going to talk about, please remember that comparing it to models from big companies backed by billions of dollars, running on multiple split hardware, is just going to lead to disappointment.

[00:42:34] However, when you do comparisons like the ones this guy did, which are now pinned to the top, this is the way to actually do it. However, on specific tasks, say coding... Go ahead, Nisten.

[00:42:46] Nisten Tahiraj: I was going to say, we're still a bit early to judge. For example, Falcon could have used a lot more training.

[00:42:53] There are also other areas where larger models have a big effect. For example, if you want to do very long-context summarization, then you want to use the 70B. As far as I understand it, and this is probably inaccurate right now, the more tokens you have, the more meat you have in there, and the larger the thoughts can be.

[00:43:23] So that's the principle I'm going by. Mistral will do extremely well in small analytical tasks and in benchmarks, and it's amazing as a tool, but that doesn't necessarily mean it'll be good at thinking big. You still need the meat there, the amount of tokens, to do that. Now, you could chop it up and do it one piece at a time, but anyway, it's something to keep in mind, because lately we also saw the announcement of Llama 2 Long 70B, which got really good at summarization.
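Nisten's "chop it up and do it one at a time" alternative is usually called map-reduce summarization: summarize each chunk, then summarize the joined summaries until the text fits in one call. A minimal sketch; `summarize` is a placeholder for whatever model call you use (local or hosted), and the word-based chunk size is a rough stand-in for a real token budget.

```python
from typing import Callable

def map_reduce_summarize(text: str, summarize: Callable[[str], str],
                         chunk_words: int = 1000, max_rounds: int = 5) -> str:
    """Summarize text too long for one call: summarize chunks (map), then the
    joined partial summaries (reduce), repeating until the text fits one chunk."""
    for _ in range(max_rounds):
        words = text.split()
        if len(words) <= chunk_words:
            break
        chunks = [" ".join(words[i:i + chunk_words]) for i in range(0, len(words), chunk_words)]
        text = "\n".join(summarize(chunk) for chunk in chunks)
    return summarize(text)
```

The trade-off Nisten points at is that each chunk is summarized without seeing the others, which is where a bigger long-context model may still do better.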

[00:44:04] So again, there's one particular area, summarization, where it looks like you need bigger models. I've tested it myself with Falcon and such, and it's pretty good at summarization, so I want to give them the benefit of the doubt that there's still something that could be done there.

[00:44:28] I wouldn't just dismiss them outright.

[00:44:31] Alex Volkov: Yeah, absolutely, and I want to join this non-dismissal. Falcon fully open sourced Falcon 40B for commercial use before, and that was the biggest open source model at the time. Then they gave us 180B; they didn't have to, and we appreciate the open sourcing.

[00:44:46] We're not going to say no. Bigger models have more information, maybe more of a world model in them, and there's definitely a place for that. And the next thing you mentioned, Nisten, which I strongly connect to, thank you, is that GPT-4, for example, is very general. It does many, many, many things well.

[00:45:08] It's kind of impressive. And then there's whatever Gemini from Google is going to be, hopefully soon. We're always waiting for breaking news to land on ThursdAI: we'll be talking about something else and then Google suddenly drops Gemini on us. There are also other rumors about other Google stuff.

[00:45:22] Whatever OpenAI's Arrakis was before they stopped training it, and whatever comes next from OpenAI, will probably blow everything we expect in terms of generality out of the water. The open source models as they currently are, on the other hand, are really great at focused tasks, right? Take the coder models: Glaive Coder, for example, recently released by Anton Bakaj, is, I think, doing very well on code evaluations.

[00:45:51] However, on general stuff it's probably less good. And I think expecting open models to match GPT-4's level of generality is going to lead to disappointment. But for specific tasks, I think we're getting close to things that seemed state of the art a year ago. If you guys remember, it's not even a year since ChatGPT was released, right?

[00:46:14] I think ChatGPT was released in November, not even as an API, just the UI, at the end of November. So we're coming up on one year; I think Dev Day will land right around that mark. That was 3.5. Many people use 3.5 for applications now, but you want to go for 4. If you're paying for ChatGPT Plus and you have a task to solve, you're not going to use 3.5

[00:46:35] just because you feel like it. You know that 4 is better. But now we have open source models that are way smaller and are actually getting to some levels of 3.5, and the effort above is an attempt to figure out which ones. So I strongly recommend, first of all, getting familiar with the LocalLLaMA subreddit.

[00:46:54] If you don't use Reddit, I feel you; I was a Reddit user for a long time, and I stopped. Some parts of Reddit are really annoying, but this is actually a very good one, where I get a bunch of my information outside of Twitter. And I think Andrej Karpathy also recommended it recently, which then became a post on that subreddit.

[00:47:12] It was really funny. And this massive effort was done by this user: he did a full comparison of 39 different models, and he outlined his testing methodology as well. We've talked about testing and evaluation methodology among ourselves; it's not easy to evaluate these things. A lot of it is gut feeling.

[00:47:31] A lot of the evaluation, and Nathan and I have our own prompts that we try on every new model, right? For many people a lot of this is gut feel. And many people also talk about the problem with evals, and I think Bo mentioned the same thing about the embedding leaderboards: you know,

[00:47:48] it becomes a sport for people to fine-tune and release models just to put their name on the board, overfitting on whatever metrics and evaluations are used there. And then there's a whole discussion on Twitter about whether the new model that beats that model on some score was actually trained on the evaluation data.
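One common way to probe the "was it trained on the eval data?" question is to look for long n-gram overlaps between benchmark items and the training corpus. A minimal sketch; the n-gram length and whitespace tokenization are illustrative choices, and a real check needs access to the (often unpublished) training data plus proper text normalization.

```python
def word_ngrams(text: str, n: int) -> set:
    """Set of lowercase, whitespace-tokenized word n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def contamination_rate(eval_items, training_text: str, n: int = 8) -> float:
    """Fraction of eval items sharing at least one word n-gram with the training text."""
    if not eval_items:
        return 0.0
    train_grams = word_ngrams(training_text, n)
    flagged = sum(1 for item in eval_items if word_ngrams(item, n) & train_grams)
    return flagged / len(eval_items)
```

A high rate doesn't prove the score is meaningless, but it is a signal to treat a leaderboard jump with suspicion.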

[00:48:09] But gut-feel evaluation is definitely important, and having different things to test for is important. Those of you who come to ThursdAI know my specific gut checks are about translation and multilingual abilities, for example, and instruction following. Other people, like Jeremy Howard from fast.ai, have their own approach.

[00:48:29] Everybody has their own approach. I think what's interesting there is that the community provides, right? We're this decentralized brain evaluating every new model. And for now, the community has definitely landed on Mistral as the top model, at least in the 7B range, while Falcon, even though it's huge and can do some tasks well, like Nisten said, is less favored, and Llama was there before. So if you start following the community responses to open source models, you get a better sense of what does what. This guy also outlined his methodology, and I want to shout out friends of the pod: Teknium, the go-to for many, many things, because OpenHermes, which we've talked about before and which was fine-tuned from Mistral 7B, is probably at the top of that leaderboard, which also matches my own experience, right?

[00:49:22] We talked last week about how you can run OpenHermes on an M1 or M2 Max with LM Studio, which, shout out to LM Studio, they're great. I've tested it, and it seems to be a very, very well-rounded model, especially for one you can run yourself; compared to GPT-4 and other stuff, this model is really great for specific things.

[00:49:45] It's good for coding, but it's not the best for coding; I think there's a coding equivalent. And I really encourage you to check this out if you're trying to figure out what to use. We've talked about this before: what to use is an interesting question, because if you come to these spaces every week and hear, oh, this model is now state of the art, no, that model is state of the art,

[00:50:05] you may end up not building anything, because you'll always be chasing the latest and greatest. The differences are not vast from week to week; we're just seeing slightly better scores. But this effort is well worth checking out for its methodology, and to confirm the impressions you already have.

[00:50:21] Let's say you felt that Mistral is better; now you can actually see why. Also, shout out to another friend of the pod, Jon Durbin: his Airoboros model is really great and it's up there too. And what Nisten highlighted, that bigger models sometimes excel at different things, like summarization or just more knowledge,

[00:50:38] is outlined there as well. You can also see, in that effort, models that are not that great: models that maybe look good on the leaderboards but don't perform as well.

[00:50:49] So maybe actually let me reset the space. Everybody who joined in the middle of me speaking is like, why is this guy speaking?

[00:50:56] And what's going on here? Welcome! You're in the ThursdAI space. At ThursdAI we meet every week to talk about everything that happens in the world of AI. If you're listening to this and enjoying it, you're the target audience. Generally we talk about everything from open source LLMs to, now, embeddings.

[00:51:13] We talk about big company APIs. There aren't a lot of updates from OpenAI this week; I think they're quiet and are going to release everything in a week and a half at their Dev Day. Anthropic, obviously, and Claude, and Microsoft and Google: we cover all these things as much as possible.

[00:51:29] We also cover voice and audio, and in that vein I want to shout out friends from Gladia; let me just pin this right now. Gladia just released streaming Whisper, and I've been waiting for something like this to happen. Sometimes, as an AI engineer, you don't want to host everything yourself, and you want to trust that the WebSocket infrastructure is going to be there when you don't want to build it out. And I'm not getting paid for this.

[00:51:53] This is my personal take: if I had to implement something like the ChatGPT voice interface, I would not build it myself; I would not trust my own MLOps skills for that. I've been following Gladia since I wanted to implement some of their stuff, and they just implemented WebSocket

[00:52:11] Whisper transcription streaming. It's multilingual and quite fast, and I definitely recommend folks check it out, or check out my review of it, try the demo, and use it if you want. We talked last week about the voice-based interface for ChatGPT, where you can actually have a FaceTime-style call with ChatGPT, and that's incredible.
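Streaming transcription over a WebSocket generally means the client sends audio in small, fixed-duration chunks rather than as one file. The framing step is sketched below for raw 16 kHz, 16-bit mono PCM, which is an assumed format; the actual connection setup and message format are defined by Gladia's API docs and are not shown or guessed here.

```python
def pcm_frames(pcm: bytes, sample_rate: int = 16_000, sample_width: int = 2,
               channels: int = 1, frame_ms: int = 100):
    """Yield consecutive frames of raw PCM audio, each covering `frame_ms` milliseconds
    (the last frame may be shorter)."""
    bytes_per_frame = sample_rate * sample_width * channels * frame_ms // 1000
    if bytes_per_frame <= 0:
        raise ValueError("frame too small")
    for start in range(0, len(pcm), bytes_per_frame):
        yield pcm[start:start + bytes_per_frame]
```

At these settings one second of audio is 32,000 bytes, so it goes out as ten 3,200-byte frames; each frame would be sent over the open WebSocket in whatever encoding the service expects, letting partial transcripts come back while the user is still talking.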

[00:52:30] And I think we're moving more and more toward taking the screen out of talking to your AI agents. With the latest releases in text to speech too, like ElevenLabs and XTTS, which we've covered as well, and with advances in speed, you can actually start getting interfaces where you talk, and the AI listens and answers back to you very fast.

[00:52:52] Worth checking out, and definitely an update. Thank you.

[00:52:57] Nisten Tahiraj: Okay. So this is a complete product. I was,

[00:53:00] Alex Volkov: Yeah, this is a full product: pay a little bit, get a WebSocket, and then use this WebSocket to stream, and you can embed it into your applications very fast. Setting that up, I think you can do this with Coqui, which we also covered.

[00:53:13] Gradio Interview with Abubakar, Xenova, Yuichiro

[00:53:13] Alex Volkov: Alright, I think it's time to reset the space again. This is ThursdAI. I want to thank Bo, who is still on stage. Bo, you're welcome to stay with us a little bit, and now we're moving on to the second part of this.

[00:53:30] Welcome, Abubakar. Welcome, Xenova, Joshua. Welcome to some folks from Hugging Face in the audience. It's great to see you here on ThursdAI. Well, Xenova is always here, hopefully, but Abubakar, I think this is your first time.

[00:53:41] I'll do a brief intro, and then we can, we can go and talk about Gradio as well.

[00:53:45] The first inference I ran on a machine learning model was a year and something ago, and it was via Gradio, because I got this weights file and thought, okay, I can probably run something with the CLI, but how do I actually visualize this? Back then, Gradio was the way. And I think by then you guys were already part of Hugging Face, and everybody who visited a model page and tried a demo has probably experienced Gradio, even without knowing that it's what is behind all the demos. So welcome, please feel free to introduce yourself.

[00:54:17] Give us maybe two or three lines on how you explain Gradio to folks, and then we can talk about some exciting stuff you guys released this week.

[00:54:25] Abubakar Abid: Awesome. Yeah, first of all, thank you again for, for having me and for having several folks from the Gradio team here. I've known you, Alex, for a long time.

[00:54:32] I think you were one of the early users of Gradio, or at least one of the early users of Gradio Blocks and some of these viral demos. So I've seen this podcast develop over time, and it's a real honor to be able to come here and talk about Gradio.

[00:54:45] Yeah. Hi everyone, I'm Abubakar, and I lead the Gradio team at Hugging Face. The way we describe Gradio is that it's the fastest way to build a GUI or an app from a machine learning model. Traditionally, taking a machine learning model to production, or at least letting

[00:55:01] users try it out, has meant that you need to know a lot of front end. You need to know how to set up a server and do web hosting. You have to figure all of these things out so that other people can play around with your machine learning model. Gradio lets you do all of that with just a few lines of Python, as I think Joshua was mentioning earlier.

[00:55:18] And Gradio has been used by a lot of people. We're very lucky with our timing. We started Gradio a few years ago, in late 2019. It grew out of a project at Stanford, then spun out to be a startup, then we got acquired by Hugging Face, and we've been growing Gradio within that ecosystem.

[00:55:32] But we're very lucky because this period has coincided with a lot of real developments in machine learning. I come from an academic background; before 2019 I was doing my PhD at Stanford. Everyone's been doing machine learning for a while now, but...

[00:55:45] with the types of machine learning models people used to build, you built one, you published a paper, and that was it. Recently, though, people are building machine learning models that other people actually want to use and play around with, and things have gotten very exciting. That's led to a lot of people building Gradio demos. I was looking at the stats recently, and more than three or four million Gradio demos have been built since we started the library.

[00:56:09] And yeah, so recently we released something called Gradio Lite, which lets you run...

[00:56:13] Gradio effects on the open source LLM ecosystem

[00:56:13] Alex Volkov: Wait, before Gradio Lite, Abubakar, if you don't mind, I just want to highlight how important this is to the ecosystem, right? I'm originally a front-end engineer. I do component libraries for breakfast, and basically, I don't want to build them from scratch; it's really nice to have a component library, maybe Tailwind UI or shadcn/ui, all these things. So even front-end engineers don't like building things from scratch.

[00:56:35] Switching to machine learning folks, who build the model, let's say, and want to run some inference: that's not their cup of tea at all. Just thinking about installing JavaScript packages, running npm, all these things, that's not where they live at all. And Gradio allows them to do this in Python.

[00:56:51] And I think that's where to start. That on its own is incredible, and it led to so many demos happening in Gradio. And you guys built out pretty much everything else they would need. I think recently, before we get to Gradio Lite, you've added components like chat, because you noticed that many people talk to LLMs and need a chat interface, right?

[00:57:10] There's a bunch of multimodal stuff for video and so on. Could you talk about the component approach, and how you think about providing tools for people who don't have to be designers?

[00:57:20] Abubakar Abid: Yeah, absolutely. That's exactly right. Most of the time, when you're a machine learning developer, you don't want to be thinking about writing front-end components. Coupled with that, we had an interesting insight about machine learning models.

[00:57:31] The components for machine learning models tend to be much more reusable than in other kinds of applications, right? One thing I want to be clear about: Gradio is actually not meant for building general web apps in Python. That's not our goal. We're heavily optimized toward building machine learning apps.

[00:57:50] What that means is that the types of inputs and outputs people tend to work with are a little more contained. So right now we have a library of about 30 different types of inputs and outputs. What does that mean? Things like images, image editing, video inputs and outputs, chatbots as outputs, JSON, data frames: various input and output components that come prepackaged with Gradio.

[00:58:15] Then when you build a Gradio application, you basically say, hey, this is my function, these are my inputs, and these are my outputs. And Gradio takes care of everything else: stringing everything together, sending messages back and forth, and pre-processing and post-processing everything in the right way.

[00:58:29] So yeah, you just have to define your function in the backend, and your inputs and your outputs, and then Gradio spins up a UI for you.
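For readers who haven't used Gradio, the "function plus inputs plus outputs" pattern described above looks roughly like the sketch below. It assumes a recent Gradio release installed with `pip install gradio`; `word_stats` is a made-up stand-in for a real model, and the component pairing (`gr.Textbox` in, `gr.JSON` out) is one plausible choice, not something from the episode.

```python
def word_stats(text: str) -> dict:
    """Hypothetical stand-in for a model: plain Python in, plain Python out."""
    words = text.split()
    return {"words": len(words), "characters": len(text)}


def build_demo():
    """Declare the function, its input component, and its output component.

    Gradio is imported lazily so that word_stats can be used (and tested)
    without Gradio installed.
    """
    import gradio as gr

    return gr.Interface(
        fn=word_stats,
        inputs=gr.Textbox(label="Text"),
        outputs=gr.JSON(label="Stats"),
    )
```

Calling `build_demo().launch()` would then serve the generated UI locally. The function itself never touches the front end: Gradio turns the textbox value into a `str` before the call and renders the returned `dict` in the JSON view afterwards.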

[00:58:36] Alex Volkov: I really find it funny, and I sent the laughing emoji, when you said that Gradio was not meant for building full-scale web apps. I think the first time we talked, you reached out because I had joined whatever open source project was running Stable Diffusion, this was before Automatic, I think, and you told me, hey Alex, you did some stuff that we didn't mean for you to do. I injected a bunch of JavaScript and a bunch of CSS; I had to go full-on front-end developer because I was limited by this thing. Even despite the limitations, we did a bunch of stuff with just raw JavaScript injection. And since then, it's very interesting: you're mentioning Gradio demos.

[00:59:16] AUTOMATIC1111, which for most people is maybe the only way they know how to run Stable Diffusion, is now getting investment from NVIDIA, and I saw a bunch of stuff that Automatic does. So it's very interesting how you started and how the community picked it up. Can you talk about the bigger projects, like Automatic and others, that are taking Gradio and pushing it to the absolute limit?

[00:59:37] Abubakar Abid: Yeah, absolutely. I'm perpetually shocked by AUTOMATIC1111 every time I see a plugin. Like you said, NVIDIA, and now IBM or something, released a plugin for AUTOMATIC1111? It's crazy. But basically, ever since we started Gradio, we've noticed that Gradio seems to work for 90 percent of use cases, but in the last 10 percent, people are pushing the limits of what's possible with Gradio.

[01:00:06] So we've progressively increased what's possible. In the early days of Gradio, there was actually just one class, called Interface, which allowed you to specify some inputs, some outputs, and a single function. And we quickly realized, okay, people are trying to do a lot more.

[01:00:20] So about a year and a half ago, we released Gradio Blocks, which lets you have arbitrary layouts. You can have multiple functions, string them together, and connect inputs and outputs in different ways. That is what allowed these very, very complex apps like AUTOMATIC1111 and SD.Next, and the equivalents in other domains as well.

[01:00:36] Of course, there's oobabooga's text-generation-webui as well, and similarly complex demos in the audio space and in music generation. These super complex apps with multiple tabs, all of that is possible with this new architecture that we laid out, called Gradio Blocks.

[01:00:55] Gradio Blocks is this whole system for specifying layouts and functions, and it's defined in a way that's intuitive to Python developers. A lot of web frameworks in Python have popped up, and one thing I've noticed, as someone who knows Python but not much JavaScript, is that they very much come from the perspective of a JavaScript engineer: React-inspired frameworks and stuff like that.

[01:01:21] And that's not very intuitive to a Python developer, in my opinion. So we've defined this whole thing where you can build these arbitrary web apps, but still in a Pythonic way. And we're actually about to take this a step further, and maybe I can talk about this at some point, but next week we're going to release Gradio 4.0.

[01:01:38] It takes this idea of being able to control what's happening on the page to the next level. You can have arbitrary control over the UI/UX of any of our components. You can build your own components, use them within a Gradio app, and get all of the features that you want in a Gradio app.

[01:01:52] Like the API usage, pre-processing, post-processing: everything just works out of the box, but now with your own level of control. Yeah. Awesome.
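The step from Interface to Blocks that Abubakar describes can be sketched as follows. This is a minimal example assuming the Gradio Blocks API (`gr.Blocks`, `gr.Row`, `gr.Column`, and a button's `.click` event); `first_sentence` and `count_words` are hypothetical placeholders for real models. The point is that one page can hold several functions, each wired to its own inputs and outputs, in a layout you choose.

```python
def first_sentence(text: str) -> str:
    """Hypothetical 'model' #1: return the first sentence, or '' for blank input."""
    head = text.strip().split(".")[0].strip()
    return head + "." if head else ""


def count_words(text: str) -> int:
    """Hypothetical 'model' #2: a simple word count."""
    return len(text.split())


def build_blocks_demo():
    """Arbitrary layout plus multiple functions: what Blocks adds over Interface."""
    import gradio as gr  # lazy import keeps the helpers usable without Gradio

    with gr.Blocks() as demo:
        with gr.Row():
            source = gr.Textbox(label="Input", lines=4)
            with gr.Column():
                summary = gr.Textbox(label="First sentence")
                n_words = gr.Number(label="Word count")
        with gr.Row():
            summarize_btn = gr.Button("First sentence")
            count_btn = gr.Button("Count words")
        # Each event listener connects one function to its own inputs and outputs.
        summarize_btn.click(first_sentence, inputs=source, outputs=summary)
        count_btn.click(count_words, inputs=source, outputs=n_words)
    return demo
```

Compared with a single `gr.Interface` call, the extra nesting is what buys multi-column, multi-function layouts, the kind of structure the episode credits for apps like AUTOMATIC1111.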

[01:02:01] Alex Volkov: And honestly, it's been great to see how much enablement something as simple as Gradio gives folks who don't necessarily want to install npm and CSS packages.

[01:02:11] There's so much enablement this gave the open source community, because people release, like you said, significant things, and many of them you may not even be aware of, right? They're running in some Discord, in some Reddit. It's not like you guys follow everything that happens.

[01:02:23] Gradio local URL via Gradio Proxy

[01:02:23] Alex Volkov: One additional thing I want to mention that's very important: when you run Gradio locally, you guys can actually expose my local machine via your server. That's been a blast, a very, very important feature for people who may be sitting behind a proxy or whatever.

[01:02:39] You can share your local instance with some folks, unfortunately only for 72 hours.

[01:02:44] Abubakar Abid: But actually, that's about to change in 4.0. We've been very lucky, because Gradio has been developed along with the community. Like you said, oftentimes we don't know what people are using Gradio for until they come to us and tell us that something doesn't work, and then they link to their repo, and it's this super complex Gradio app, and we're like, what?

[01:03:01] Okay, why are you even trying that? That's way too complicated. But then we realize the extent of what people are building. And you mentioned these share links, which I want to briefly touch upon. One of the things we released in the early days of Gradio came from realizing that people don't want to worry about hosting their machine learning apps.

[01:03:19] Oftentimes you want to share your machine learning app with a colleague. Let's say you're the engineer and you have a colleague who's a PM or something who wants to try it out. Or if you're in academia, you might want to share it with fellow researchers or your professors, whatever it may be.

[01:03:33] So why do all this hosting stuff if you're just building an MVP, right? So we built this idea of a share link. When you launch your Gradio app, you just say share=True, and it uses something called Fast Reverse Proxy (frp) to expose your local port to an FRP server running on a public machine.

[01:03:53] That server forwards any request from a public URL to your local port. Long story short, it makes your Gradio app available on the web for anyone to try. The link runs for 72 hours by default.

[01:04:08] But now, as part of 4.0, and we'll announce this, you can actually build your own share servers. We have instructions for how to do that very easily, and you can point your Gradio instance to that share server. So if you have an EC2 instance running somewhere, just point to it, and then you can keep that share link running for as long as you want. You can also share your share servers with other people at your company or organization, whatever it may be, and they can use those share links, and again, they can run for as long as they want.
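In Gradio itself, all of this is triggered by passing `share=True` to `launch()`. To make the forwarding idea concrete, here is a deliberately simplified toy model of the decision a share server makes for each request: which registered link a public hostname belongs to, whether that link has outlived its lifetime (72 hours by default, per the episode), and which local address to forward to. It is not frp's or Gradio's actual code, and it leaves out the real mechanism's persistent tunnel from your machine to the public server. The `ShareServer` class, the `share.example.com` domain, and the ports are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Optional
from urllib.parse import urlsplit, urlunsplit

DEFAULT_TTL = timedelta(hours=72)  # default share-link lifetime mentioned in the episode


@dataclass
class Tunnel:
    local_port: int
    created_at: datetime
    ttl: timedelta = DEFAULT_TTL


class ShareServer:
    """Toy routing table: public subdomain -> a local port on someone's machine."""

    def __init__(self, domain: str) -> None:
        self.domain = domain
        self.tunnels: Dict[str, Tunnel] = {}

    def register(self, subdomain: str, local_port: int, now: datetime,
                 ttl: timedelta = DEFAULT_TTL) -> str:
        """Record a new link and return the public URL that maps to it."""
        self.tunnels[subdomain] = Tunnel(local_port, now, ttl)
        return f"https://{subdomain}.{self.domain}"

    def resolve(self, public_url: str, now: datetime) -> Optional[str]:
        """Return the local URL to forward to, or None if unknown or expired."""
        parts = urlsplit(public_url)
        host = parts.hostname or ""
        suffix = "." + self.domain
        if not host.endswith(suffix):
            return None
        tunnel = self.tunnels.get(host[: -len(suffix)])
        if tunnel is None or now >= tunnel.created_at + tunnel.ttl:
            return None  # no such link, or it has outlived its lifetime
        # Keep the path and query; only the scheme and host change.
        return urlunsplit(("http", f"127.0.0.1:{tunnel.local_port}",
                           parts.path, parts.query, ""))
```

Running your own share server, as described for 4.0, amounts to operating the public side of this mapping yourself; in the toy, that corresponds to registering links with whatever `ttl` you choose instead of the 72-hour default.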

[01:04:30] Nisten Tahiraj: Wait, wait, wait, is this out? Which branch is this? Is this going to be out?

[01:04:34] Abubakar Abid: This is going to be out on Tuesday with Gradio 4.0. We're going to launch on Tuesday.

[01:04:41] Nisten Tahiraj: It's the most useful feature of Gradio, I'd say, especially when you make a Google Colab notebook that you want people to run in one click.

[01:04:49] Otherwise, how are they even going to use this model? You just throw the entire Gradio interface in there and set share=True, and then they can just give the link to their friends and stuff. It makes it really easy, especially with Google Colab. But now that you can host your own, this is huge.

[01:05:09] This takes it to another level. I have more questions for...

[01:05:14] Alex Volkov: Nisten, thank you. I just want to touch on the Google Colab thing. I think at some point Google started restricting how long you can run a Colab, and I think you guys are the reason, because of this exact thing that Nisten said.

[01:05:30] People just kept running Gradio with the share URL inside Google Colab and exposing Stable Diffusion. They didn't build Colab for that, and I think they quickly had to figure out how to deal with this.

[01:05:41] Abubakar Abid: Yeah. And their approach is literally blacklisting the names of specific GitHub repos, and I completely understand where Colab is coming from, right?

[01:05:50] They're giving these GPUs away for free; they have to prioritize certain use cases. But we're working with the Colab team to see if there are other ways. Right now it's a blacklist on AUTOMATIC1111 and some other repos, so we're hoping we can find another way that's not so restrictive.

[01:06:05] Nisten Tahiraj: No, but it still works. You can just fork the repo. It works for everything else; it works for LLMs. So if anybody really needs it, Gradio works on Colab, at least as far as language stuff goes. I haven't done that much beyond that.

[01:06:18] Abubakar Abid: Yeah, Gradio works on Colab for sure. And early on, one of the decisions we actually had to make was...

[01:06:25] Should we use the default Python runtime, or should we change the interpreter and stuff like that? Because building GUIs is not necessarily Python's strength: oftentimes you want to re-render everything, and you want to do certain things that Python may not be suited for.

[01:06:42] But early on we decided to stick with the default Python runtime. One of the reasons was things like Colab: we wanted people to be able to run Gradio wherever they normally run Python, without having to change their workflows. And Gradio works in Colab.

[01:06:56] We had to do a lot of trickery to make it work, but it works. It's just certain very, very specific apps that have become too popular and apparently consume too many resources that are blacklisted by Colab right now.

[01:07:10] Local inference on device with Gradio-Lite

[01:07:10] Alex Volkov: All right, thank you for this intro to Gradio. To continue, we have Xenova on stage, who introduced himself, the author of Transformers.js. We've also been talking with Bo in the audience, who just recently open sourced the embeddings model with Jina. A lot of what we love to cover on ThursdAI is about being as open source and as local as possible, for different reasons, including not getting restricted.

[01:07:36] And you guys just recently launched Gradio-Lite, and we actually have Yuichiro here on stage as well. So I'd love to have you, Abubakar, introduce it, and maybe have Yuichiro follow up: what is Gradio-Lite, and how does it relate to running models on device and open source?

[01:07:52] And yeah, please, please introduce it.

[01:07:54] Abubakar Abid: Yeah, absolutely. Like you mentioned, one of the things we think about a lot at Gradio is the open source ecosystem, and right now, for example, where open source LLMs can really shine.

[01:08:06] And one of those places is on device, right? On device or in the browser, open source has a huge edge over proprietary models. So we were thinking about how Gradio could be useful in this setting, and about the in-browser application in particular. And we were very, very lucky to have Yuichiro actually reach out to us.

[01:08:25] Yuichiro has this fantastic track record. If you don't already know him, he built stlite, which is a way to run Streamlit apps in the browser. He reached out to us with the idea of doing something similar with Gradio, and he almost single-handedly refactored much of the Gradio library so that it could run.