Happy leap year day everyone, very excited to bring you a special once-every-4-years edition of ThursdAI 👏

(Today is also Dune 2 day (am going to see the movie right after I write these here words) and well.. to some folks, this is the bull market ₿ days as well. So congrats to all who weathered the bear market!)

This week we had another great show, with many updates and a deep dive. Once again I was able to cover most of the news AND bring you a deep dive into a very interesting concept called Matryoshka Representation Learning (aka 🪆 embeddings), with two of the paper's authors joining to chat with me on the pod!

TL;DR of all topics covered:

AI Art & Diffusion & 3D

Playground releases a new diffusion foundational model Playground V2.5 (DEMO)

Alibaba teasing EMO - incredible animating faces (example)

Ideogram 1.0 announced - SOTA text generation (Announcement)

Open Source LLMs

Gemma update - hard to finetune, not better than 7B mistral

Llama 3 will release in June 2024, not anytime soon

Starcoder 2 + stack V2 (Announcement)

Berkeley Function-Calling Leaderboard (Announcement)

Argilla released OpenHermesPreferences the largest open dataset for RLHF & DPO (Announcement)

STORM from Stanford to write long documents (Thread)

Big CO LLMs + APIs

Mistral releases Mistral Large & Le Chat (Announcement, Le Chat)

Microsoft + Mistral strike a deal (Blog)

Google teases GENIE - model makes images into interactive games (announcement)

OpenAI allowing fine-tune on GPT 3.5

WordPress & Tumblr preparing to sell user data to OpenAI & Midjourney

Other

Modular (the company behind Mojo) releases their MAX inference engine, compatible with PyTorch, TensorFlow & ONNX models (Announcement)

Interview with MRL (Matryoshka Representation Learning) authors (in audio only)

AI Art & Diffusion

Ideogram 1.0 launches - superb text generation!

Ideogram, founded by ex-Google Imagen folks, which we reported on before, finally announces 1.0, and focuses on superb text generation inside images. It's really great, and I generated a few owls already (don't ask, hooot) and I don't think I will stop. This is superb for meme creation, answering in multimedia, and is fast as well, I'm very pleased! They also announced an investment round from A16Z to go with their 1.0 release, definitely give them a try!

Playground V2.5

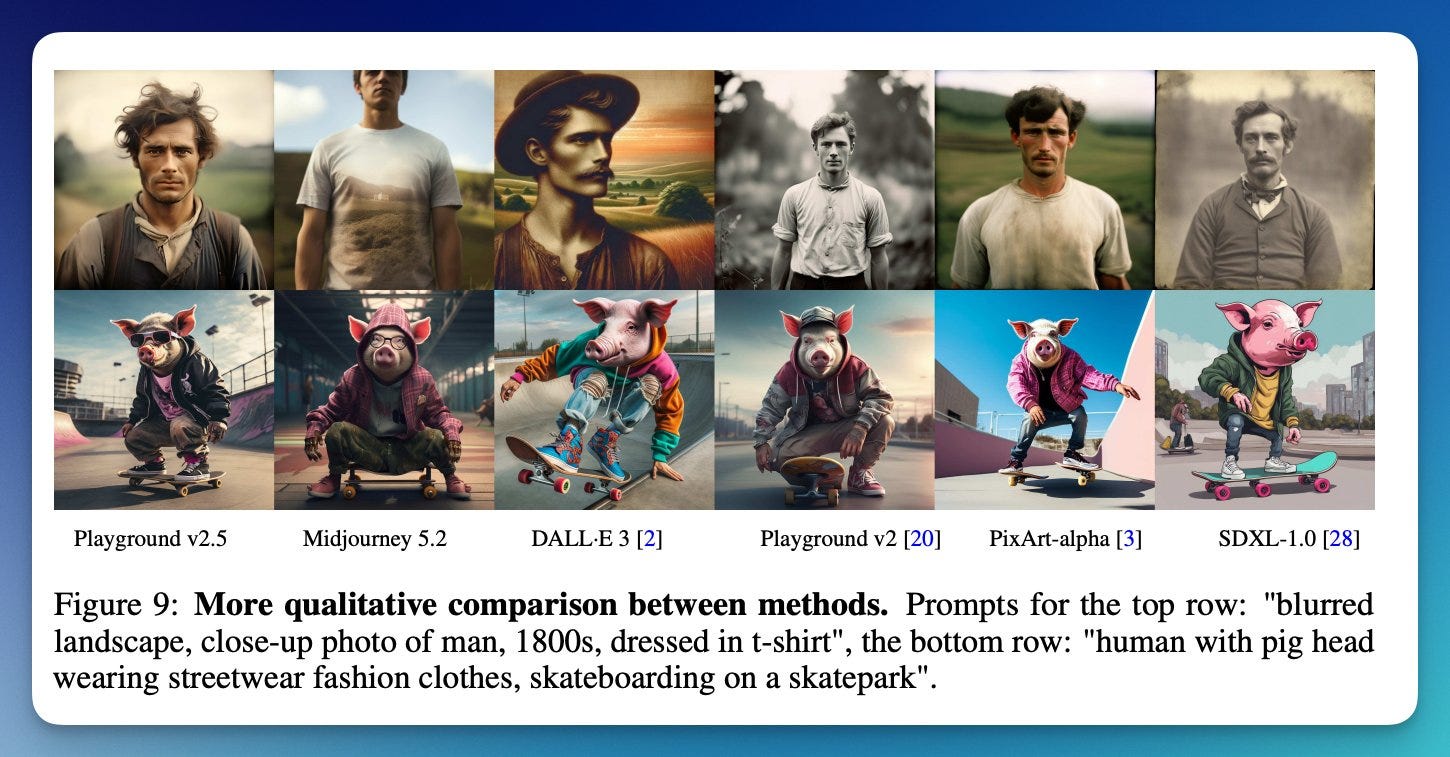

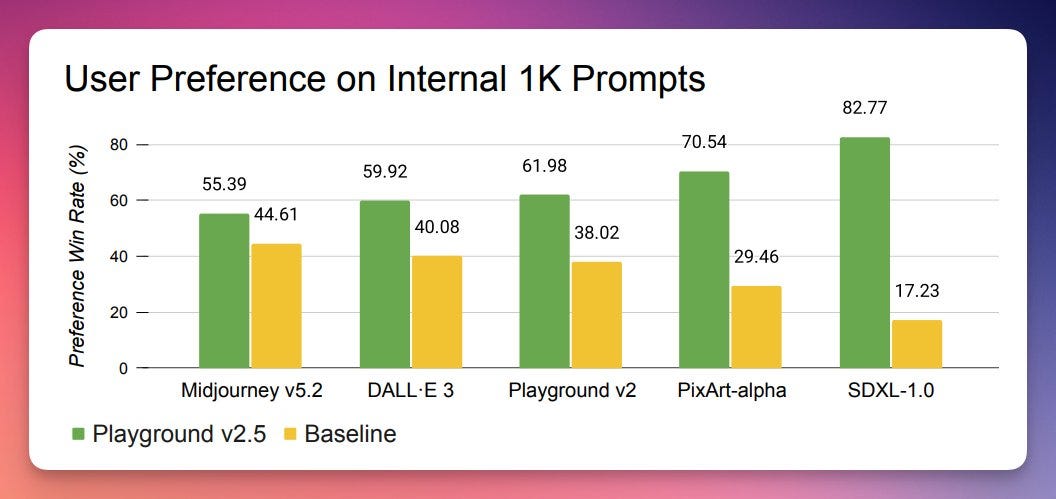

Suhail Doshi and Playground release a new foundational image model called Playground v2.5 and it looks awesome, very realistic and honestly looks like it beats MJ and DALL-E on many simple prompts.

They also announced that this model received higher user preference scores based on 1K prompts (which we didn't get to see). They have released this model into the wild, so you can download it, or play with a free demo provided by the Modal folks.

Another SORA moment? Alibaba teases EMO 🤯 (website)

Ok this one has to be talked about. Alibaba released quite a few preview videos + a paper about something called EMO, a way to animate talking/singing avatars from just 1 image. It broke my brain, and I couldn't stop staring at it. Honestly, it's quite something. This model animates not only the mouth: eyes are blinking, there are emotions, hair moves, even earrings, and most impressive of all, the whole larynx muscle structure seems to be animated as well!

Just look at this video, and then look at it again.

The GitHub repo was created but no code has been released. I really hope we get this code at some point, because animating videos with this fidelity + something like SORA can mean so many possible creations!

I wrote this tweet only two weeks ago, and I'm already feeling that it's outdated and we're farther along on the curve to there with EMO, what a great release!

And just because it's so mind-blowing, here are a few more EMO videos for you to enjoy:

Open Source LLMs

Starcoder 2 + The Stack V2

Folks at Hugging Face and BigCode have unleashed a beast on us: StarCoder 2 ⭐️, the most complete open Code-LLM 🤖. StarCoder 2 is the next iteration of StarCoder and comes in 3 sizes, trained on 600+ programming languages and up to 4+ trillion tokens from The Stack v2. It outperforms StarCoder 1 by a margin and has the best overall performance across 5 benchmarks 🚀🤯.

TL;DR;

🧮 3B, 7B & 15B parameter version

🪟 16384 token context window

🔠 Trained on 3-4T Tokens (depending on size)

💭 600+ Programming languages

🥇 15B model achieves 46% on HumanEval

🧠 Grouped Query Attention and Sliding Window Attention

💪🏻 Trained on 1024 x H100 NVIDIA GPUs

✅ commercial-friendly license

🧑🏻‍💻 Can be used for local Copilots

The Stack v2 is a massive (10x) upgrade on the previous stack dataset, containing 900B+ tokens 😮

Big CO LLMs + APIs

🔥 Mistral announces Mistral-Large + Le Chat + Microsoft partnership

Today, we are releasing Mistral Large, our latest model. Mistral Large is vastly superior to Mistral Medium, handles 32k tokens of context, and is natively fluent in English, French, Spanish, German, and Italian.

We have also updated Mistral Small on our API to a model that is significantly better (and faster) than Mixtral 8x7B.

Lastly, we are introducing Le Chat, a chat interface (currently in beta) on top of our models.

Two important notes here. One, they now support function calling on all Mistral models in their API, which is a huge deal. Two, the updated Mistral Small being a "significantly better and faster" model than Mixtral 8x7B is quite the hint!

I also want to highlight Arthur’s tweet clarifying their commitment to Open Source, because it's very important. They released a new website which again had mentions of "don't train on our models" (which they removed). The new website had also dropped the section that committed them to open weights, and they quickly put a much bigger section back up!

This week's Buzz (What I learned with WandB this week)

I mentioned this before, but this may shock new subscribers: ThursdAI isn't the only (nor the first!) podcast from Weights & Biases. Our CEO Lukas has a long standing podcast that's about to hit 100 episodes, and this week he interviewed the CEO of Mayo Clinic - John Halamka

It's a fascinating interview, specifically because Mayo Clinic just recently announced a multi-year collaboration with Cerebras about bringing AI to everyone who googles their symptoms and ends up on Mayo Clinic websites anyway. Apparently John has been in AI for longer than I've been alive, so he's incredibly well positioned to do this and bring us the AI medicine future!

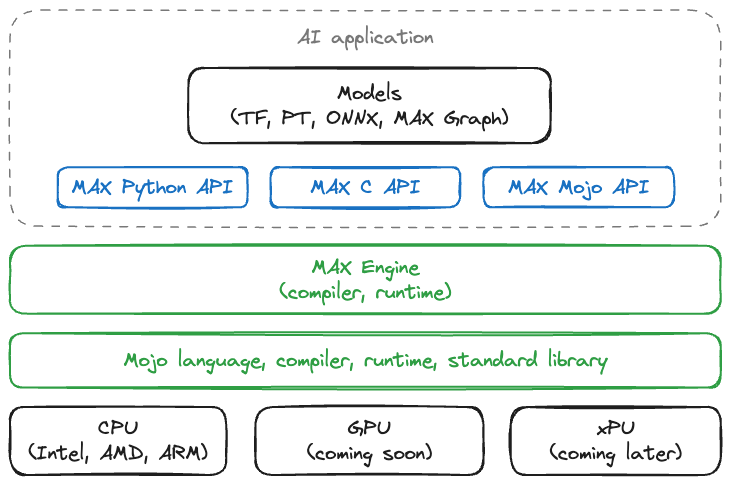

Modular announces MAX (Modular Accelerated Xecution) Developer Edition Preview (blog)

Modular, the company that created Mojo Lang from Chris Lattner, has now announced the second part of their stack, coming to all of us, and it's called MAX. It's an inference engine that has Mojo built in, supports PyTorch, TensorFlow and ONNX, and is supposedly going to run the same AI models we run now, significantly faster. MAX is a unified set of tools and libraries that unlock performance, programmability and portability for your AI inference pipelines.

Right now they support only CPU inference, and significantly boost performance on CPU. However, they are planning GPU support soon as well, and promise up to 5x faster AI inference for most models like Mistral, Llama etc.

I personally think this is a huge development, and while it's still early, it's definitely worth taking a look at the incredible speed improvements that we are seeing lately. From Groq (as we chatted with them last week) to Modular, we're very well on our way to running huge models faster, and small models instantly!

🪆 MRL (Matryoshka Embeddings) interview with Aditya & Prateek

OpenAI recently released 2 new embedding models that replaced their ada-002 embeddings, and when they released them, they mentioned a new way of shortening dimensions. Soon after, on X, the authors of the 2022 paper MRL (Matryoshka Representation Learning) spoke out and said that this new "method" is actually MRL, the concept they came up with and presented at NeurIPS.

Since then I saw many folks explore Matryoshka embeddings, from Bo Wang to Connor Shorten, and I wanted to get in on the action! It was quite exciting to hear from Aditya and Prateek about MRL: how they are able to significantly reduce embedding size by packing the most important information into the first dimensions, the implications of this for speed of retrieval, the significant boost in use-cases after the ChatGPT LLM boom, and more! Definitely give this one a listen if you're interested, the interview starts at 01:19:00 on the pod.
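If you want a feel for what "packing the most important information into the first dimensions" buys you, here is a minimal sketch in plain Python. It assumes the embedding came from a model trained with MRL (truncating an ordinary embedding this way generally won't preserve quality). The vectors are made-up toy values, and `truncate_embedding` and `cosine` are hypothetical helper names, not part of any library or of the authors' code.

```python
import math


def truncate_embedding(vec, dims):
    """Keep the first `dims` values of an embedding and L2-normalize them.

    This is only meaningful for embeddings trained Matryoshka-style, where
    the leading dimensions are trained to work as a standalone embedding.
    """
    if not 0 < dims <= len(vec):
        raise ValueError("dims must be between 1 and len(vec)")
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return list(head)
    return [x / norm for x in head]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Toy 8-dimensional "embeddings" (invented values, front-loaded on purpose
# to mimic what MRL training encourages).
doc = [0.9, 0.4, 0.1, 0.05, 0.02, 0.01, 0.01, 0.0]
query = [0.8, 0.5, 0.2, 0.0, 0.03, 0.0, 0.02, 0.01]

full_score = cosine(query, doc)
short_score = cosine(truncate_embedding(query, 4), truncate_embedding(doc, 4))

print(f"full (8 dims):  {full_score:.4f}")
print(f"short (4 dims): {short_score:.4f}")
```

With vectors shaped like this, the half-size comparison lands very close to the full-size one, which is the whole appeal: store and search the short prefix for speed, and keep the full vector around for re-ranking if you need it.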

Thank you for reading, I really appreciate you coming back here week to week, and if you enjoy this content, please share with 1 friend and give us a ⭐ rating on Apple Pod? Here's a nice Ideogram image as a preemptive thank you!

As always, here’s the full transcript

[00:00:00] Intro and welcome


[00:00:00] Alex Volkov: Hey, you're on ThursdAI. This is Alex. Happy Leap Year Special Edition. Today's February 29th. We had a great show today. So great that I got carried away during the recap, and it's almost twice as long as it usually is. The recap, not the show. But no worries. As always, if you're short on time, the first 25 minutes or so of this almost two hour podcast will catch you up on everything that happened in AI this week.

[00:00:29] Alex Volkov: If you're using Apple Podcasts, or any other modern podcatcher, you can also skip to the chapters, that I'm outlining every week and listen to the part that interests you, and only to that part.

[00:00:39] Alex Volkov: This week, after the newsy updates, we also had a deep dive into something called Matryoshka Embeddings, with the authors of the MRL paper, Aditya and Prateek.

[00:00:49] Alex Volkov: Thank you guys, I really enjoyed chatting with them both. We geeked out on why OpenAI decided to release something they came up with two years ago, and how it affects the AI industry in the post LLM explosion world. So definitely give them a listen at the end of this episode.

[00:01:05] Alex Volkov: A brief TLDR, then the full news conversation you're used to, broken down into chapters, and then a deep dive, after this brief message from Weights & Biases.

[00:01:15] AI teams are all asking the same question. How can we better manage our model development workflow? The path to production is increasingly complex, and it can get chaotic keeping track of thousands of experiments and models. Messy spreadsheets and ad hoc notebooks aren't going to cut it. The best AI teams need a better solution and better tools.

[00:01:38] They need Weights & Biases, the AI developer platform, to unlock their productivity and achieve production ML at scale. Replace messy spreadsheets with an automated system of record for experiments.

[00:01:57] Communicate about model evaluation and collaboratively review results across the team. Clean up disorganized buckets of models with a unified registry. Automatically capture full model lineage: all the data and code used for training and testing. Seamlessly connect to compute to scale up training. And run large scale sweeps efficiently to optimize models.

[00:02:24] Analyze the performance of large language models. And monitor LLM usage and costs with live, customizable dashboards. Get your team on the same page to bridge the gaps from ideation to production. Use Weights & Biases to build, manage, and deploy better models, faster.

[00:02:51] Alex Volkov: folks, here we go.

[00:03:10] Alex Volkov: Welcome, everyone. Welcome. This is ThursdAI, leap year of 2024. Today is February 29th. Don't get to say this often, February 29th. And this is ThursdAI, your weekly AI news update show and deep dive. We'll see a lot of it. My name is Alex Volkov. I'm an AI evangelist with Weights & Biases. And I get to do this and bring you all the AI updates that we've collected for the past week.

[00:03:43] Alex Volkov: And I'm joined here from week to week on stage with guests and experts and co-hosts. I have Yam Peleg with me and Nisten Tahiraj, and we're gonna have a few more guests later in the show today. And on this very happy leap year, very special day, we're going to talk about a bunch of updates from the AI world, including big company updates, open source stuff.

[00:04:07] TL;DR for ThursdAI - February 29th

[00:04:07] Alex Volkov: Alright, so here's everything that we've talked about on ThursdAI for February 29th. This was a great once in a four year show. I just want to shout out before I recap everything that, as always, I'm very happy when folks who build the stuff that we talk about join and talk about that stuff. And this also happened today, so we had a deep dive, which I'm going to cover at the end.

[00:04:33] Alex Volkov: And also I will shout out that we're coming up on one year of ThursdAI, which is March 14th. So in two weeks, we're going to have a one year celebration. I'm not quite sure what we're going to do with this. Maybe we'll do a giveaway of GPU credits. Maybe I'll do some other musical stuff, but yeah, that's coming.

[00:04:50] Alex Volkov: I'm very excited. It's been a year and it's been crazy, a year of AI. Maybe we'll do a full recap. So with that, everything that we've talked about in ThursdAI for February 29th. We've started with open source LLMs, our coordinator, and we've talked about Google's Gemma update. So last week we covered that Gemma was just released and how the whole community got to start using Gemma and start to think about fine tuning and support in LM Studio and Ollama and all these things. It's been a week or so since Gemma was out there, and we've tried to identify from the vibes perspective and from the finetuners' perspective whether or not Gemma is this replacement for the top running Mistral 7B models that we had. Even though on evaluations Gemma looks a little better and performs a little better than Mistral, we covered that it's not really 7B, it's like 8.5 billion parameters, they just counted this differently.

[00:05:40] Alex Volkov: And we also saw that for multiple attempts from friends of the pod, Eric Hartford, Teknium, Yam who was here, it's really hard to fine tune. The loss curve goes crazy and we haven't seen like great fine tunes yet. Something from Hugging Face, from Philipp Schmid, but definitely.

[00:05:57] Alex Volkov: The finetuners community didn't yet take this model and make it significantly better, as we expected that they would, and they're still working on this, so expect to hear more about this soon. And we also highlighted how Mistral 7B set a very high bar in open source LLMs, and it's really hard to beat, even if you're Google, even if you have a huge amount of TPUs.

[00:06:19] Alex Volkov: We then covered briefly an unfortunate announcement from The Information about Meta: Llama 3 will not be breaking news on ThursdAI this week or next week. The Llama 3 release is probably scheduled for June 2024, so not anytime soon. And it doesn't look like there's any information as to why that is, only speculation.

[00:06:39] Alex Volkov: So we definitely covered that this news happened. We then moved and talked about StarCoder 2, plus The Stack version 2 as well. StarCoder 2 is from, I think, Hugging Face and the StarCoder team, and they released a new model that pretty much beats DeepSeek Coder, which before this was the best coding model in this area, in the 15B and 7B parameter sizes. StarCoder 2 now beats those quite significantly, and together with this they also released The Stack v2. The Stack is just a huge dataset of code from GitHub and other places, and this dataset is 10x the previous one.

[00:07:16] Alex Volkov: And it also includes opt out, so if you don't want your code to be trained on and put into The Stack, this Stack v2 includes opt out requests as well. Definitely a great contribution to open source. It's 900 plus billion tokens in The Stack, which is crazy.

[00:07:33] Alex Volkov: And I think there's deduplication, so it reduces a huge dataset, and it supports 600 programming languages. Quite impressive. We then also mentioned that Berkeley, the folks from Berkeley, Gorilla, previously released work on making AIs retrieve and call functions. And now they released what's called a function calling leaderboard, and the function calling leaderboard is very cool because it comes in addition to the MTEB embeddings leaderboard that we've mentioned

[00:08:02] Alex Volkov: today, and obviously the open source LLM leaderboard on Hugging Face that we all look to and see what's the best performing models. Now we also have something that measures the ability of models to do function calling. Function calling started with OpenAI, and then Anthropic added support, and now Mistral added support.

[00:08:18] Alex Volkov: So we covered this effort as well, and links will be in the show notes. We then moved and covered Argilla (I'm never sure how to pronounce this). They used the OpenHermes dataset. OpenHermes is the dataset from Nous Research that is fully open. And you can use this in production without being afraid of being sued.

[00:08:37] Alex Volkov: And OpenHermesPreferences is the new largest open dataset for RLHF and DPO, so Direct Preference Optimization. Argilla used their distilabel library to actually take every instruction in that dataset and turn it into a preference instruction, where the model would basically learn which one of the responses is preferable.

[00:08:59] Alex Volkov: So both could be correct, but one could be more preferable. So this is basically a very short version of DPO. And Argilla released the largest open source DPO dataset, according to them. And interestingly, they used another Nous model based on Yi-34B to actually create those pairs and those preferences, which is super cool.

[00:09:18] Alex Volkov: I love how now open source uses other open source in order to rank and improve itself, which is really cool. So this is everything we covered in open source. And then we moved into big companies, LLMs and APIs. And in the big companies, the biggest news from this week was Mistral. If you guys remember, we talk about Mistral's open weights models in the open source and open weights LLMs section, but Mistral is also now an API provider, and they have this platform called La Plateforme (pardon my very bad French). They released a huge model for us called Mistral Large, which we only speculated about whether that's coming at some point, plus they also released something called Le Chat.

[00:09:59] Alex Volkov: And Mistral Large, based on some MMLU stuff, is actually the second best performing model in the world, getting 81.2 percent on, I think, MMLU, second only to GPT-4. So it beats Claude 2 and Gemini Pro. They didn't add Ultra here, so I'm actually not sure how it compares to Ultra, but it's definitely now available over API from Mistral.

[00:10:20] Alex Volkov: One highlight that we've talked about: it handles 32,000 tokens of context. And because Mistral is trying to position themselves as the leader, at least in Europe, this model is native in French and German and Spanish and Italian. And it's definitely well performing in those languages as well.

[00:10:39] Alex Volkov: In addition to this, all of the models on La Plateforme now support function calling as well. It's really cool that we now have multiple providers that support function calling. Plus, we have a leaderboard for function calling, so definitely a lot of highlights from what happens in this area.

[00:10:56] Alex Volkov: And also, they introduced Le Chat, which is a chat interface, currently in beta, on top of their models. So you can actually go and use this: if you don't pay for, let's say, GPT-4, and you only get access to 3.5, you can go to Le Chat and try their models out. Shout out to Mistral. They also announced a partnership with Microsoft, and for the open source community

[00:11:15] Alex Volkov: this sounded like: hey, they're releasing models, but they're not dropping torrent links anymore. Are they still proponents of open source? And they came out and said, yes, we're still proponents of open source. It's very important for us. And give us some time, we'll give you some more models. That, basically, was the response from Arthur Mensch from Mistral.

[00:11:31] Alex Volkov: We also talked about Google teasing Genie, which is a model that makes images into interactive games. And that was really cool to see. I'll add this link to the show notes. It's quite remarkable to see this video: from one image of a character in the world, it creates a full world. Imagine a full Mario just created from one image of Mario.

[00:11:52] Alex Volkov: It's quite remarkable. Google has been in the news lately for the past week or so. We've talked about this, but basically, following up on what we talked about: the Gemini release was celebrated in some areas because Gemini Ultra beats GPT-4 on different things, but it also drew a lot of responses online in terms of how it reacts to certain prompts, and it potentially also affected their stock price.

[00:12:15] Alex Volkov: I'm not sure if that was the one thing, but definitely Sundar Pichai, the CEO of Google, sent an email to the whole company talking about how this release was not quite received as well as they hoped, and I'm using choice words here. He actually talked about structural changes and a potential review of the whole process of releasing this. They took down the ability to generate people from the image version of the Gemini model, but they also talked specifically about the Gemini model itself refusing different things.

[00:12:45] Alex Volkov: This is in addition to them delivering very well and giving us Gemini 1.5 Pro, which has 1 million tokens in the context window, which I played with this week, and I definitely think it's a great thing from Google. So this week from Google: released open weights Gemma models, and Gemini 1.5 doing crazy new things, but also the Gemini release at large probably did not go as expected.

[00:13:01] Alex Volkov: Potentially that's the reason why Google took their time to release something for us. We then covered that OpenAI is allowing fine-tuning on GPT-3.5, and also the OpenAI response to the New York Times, which said: hey, we actually did not do the things that you're accusing us of, and also that the New York Times did some trickery in prompts to get the model to respond this way. So the saga between OpenAI and the New York Times continues, and that's going to be interesting to follow along. And OpenAI was also featured in another piece of news, actually two pieces of news.

[00:13:37] Alex Volkov: One of them is that there's now a conversation that WordPress and Tumblr, both daughter companies of Automattic, will prepare to sell their user data. So basically everybody who had a blog on wordpress.com and everybody who had a Tumblr account. Most of this information probably was already scraped and already featured in datasets from OpenAI, but now they're preparing to sell this information to OpenAI and Midjourney.

[00:14:00] Alex Volkov: And similar to the Reddit Google deal for 200 million dollars recently announced, WordPress and Tumblr are now preparing to sell to OpenAI and Midjourney as well. And also OpenAI and Figure, the robotics company, announced a collaboration as well. Brett Adcock's company will integrate with OpenAI's models as well.

[00:14:23] Alex Volkov: Then we moved on to AI Art and Diffusion, which had an incredible week this week with two foundational models, or I guess big new models, that are not Stable Diffusion or DALL-E or Midjourney. So the first one was Playground. Playground was an interface. At first it was an interface for DALL-E and Stable Diffusion.

[00:14:41] Alex Volkov: And they built a very nice, very simple interface that's super fast. You can inject styles. So they used all this data to actually release a new foundational model called Playground V2.5. And in user preference, this Playground V2.5 beats Midjourney and beats Stable Diffusion XL and beats the previous Playground model and DALL-E.

[00:14:56] Alex Volkov: It looks really cool. And specifically, they talk about their ability to generate photorealistic images very well. And also specifically different ratios of images. So if you think about the standard 1024 by 1024 image for Stable Diffusion XL, for example, or different other sizes, their ability to generate other nonstandard ratio images looks very cool.

[00:15:21] Alex Volkov: And in the internal user preference study, where they're showing two images for the same prompt, their V2.5 beats Midjourney 5.2 and DALL-E by a 9 percent difference, and the previous model and SDXL by a significant margin as well. It looks really cool and definitely worth checking this out.

[00:15:40] Alex Volkov: I'll put a link in the show notes. And the other news that's not Stable Diffusion, Midjourney or DALL-E related (it's quite a mouthful to say): Ideogram, which we've covered before, announced version 1.0. Ex-Google folks, who worked on Google's Imagen, built a website called Ideogram.

[00:15:56] Alex Volkov: And their approach is very participatory. I think Instagram is the source of their name, like Instagram for ideas. And they announced a version 1.0 and investment from A16z. And specifically it's state of the art on text generation. Text generation is something that we know other models have a hard time with, and their model is able to put

[00:16:19] Alex Volkov: text very well inside images. So if you want like reactions or memes or if you're doing presentations, for example I had multiple creators and characters hold like ThursdAI spaces. I think we had some folks even react as I was talking with with ideogram generated text images in in the comments as well.

[00:16:36] Alex Volkov: So this is all we covered in AI Art and Diffusion, until we got to this jaw dropping thing called EMO from Alibaba, which is a tease. It's not a model they released yet, but there is a bunch of videos that were, to me, as jaw dropping as Sora from a couple of weeks ago. EMO is a way to animate faces: to take an image and create a singing or talking face, and it's not only the face, the shoulders move and everything, so it animates an avatar based on one image. I will not be able to do it justice, because I'm still collecting my jaw from the floor, but I will definitely add some links and some videos. The coherence with which these models generate talking faces is just incredible.

[00:17:17] Alex Volkov: It's not only about animating the mouth, they animate eyes and eyebrows movement and even different other things like hair and earrings . And one, one last thing that I noticed that really took me a second was they even animate the vocal cords and the muscles in the throat where somebody sings, for example.

[00:17:35] Alex Volkov: And when I saw this, I was like. This is another Sora moment for being able to create with these tools. It's really incredible and I really hope they release this in open source so we'd be able to animate whatever we created with Sora.

[00:17:47] Alex Volkov: And we covered all of this. And then we had a deep dive with Aditya Kusupati and Prateek Jain, the authors of the MRL paper, Matryoshka Representation Learning, and they talked to us about how OpenAI recently released a new version of their embedding model where you're able to specify the number of dimensions you want, and many folks didn't understand what this is and how it works.

[00:18:08] Alex Volkov: And apparently, even though OpenAI built all of this from scratch, it was based on the paper that they released almost two years ago called MRL, Matryoshka Representation Learning. We had a very nice chat and deep dive into how this actually works and how they pack the embedded information from later dimensions into some of the first dimensions.

[00:18:30] Alex Volkov: If you're interested in this area, and this area is very hot, I definitely recommend you check out this conversation. It was really great. And thank you, Aditya and Prateek and the rest of the Matryoshka team, for joining and talking to us about this new and exciting field.

[00:18:42] Alex Volkov: And I think we started already chatting a little bit, and I see some folks from Hugging Face in the audience sending sad emojis.

[00:18:48] Alex Volkov: And I want to send hugs to the Hugging Face MLOps team for yesterday, because for many of us who now work with

[00:18:57] Hugging Face was down, we were sad and thankful

[00:18:57] Alex Volkov: Hugging Face, and by work I mean our code actually includes a bunch of imports from Hugging Face, there's transformers as well. Yesterday was a realization of how big a part of many of our lives Hugging Face now is.

[00:19:11] Alex Volkov: I think for the first time for many of us, this was such a big realization, because those imports stopped working and the downloads didn't actually work. And so we actually had a long space yesterday pretty much throughout the whole downtime as we were holding each other's hands. It reminded me, I don't know Yam, if you want to chime in, but it reminded me of previously when GitHub was down. Basically, you know, you could work, but if you can't commit your code,

[00:19:34] Alex Volkov: What does it help? And I wanted to hear from you, because I think you had some models queued up for some stuff, and then you were waiting for them?

[00:19:42] Yam Peleg: Yeah, look, Hugging Face is really the hub today. For most people, I think it's because they cannot fork or clone models from Hugging Face, so they cannot do many things that they do, because your code relies on getting the model from Hugging Face. This is why, by the way, they tweeted, just for anyone that doesn't know: you can work offline.

[00:20:05] Yam Peleg: If you ever cloned a model from Hugging Face, you probably have it already on your computer, so you can just use the offline version. So there is a command for that. But for many people, it's cloning the models, and for many other people, it's also the feedback that you get from Hugging Face. I can tell you, some of us, some other people here on the stage, we submit models to the leaderboard and try to fine tune better and better models, and for us it's also the feedback of what is going on, where our models shine, and where we need to make them even better.
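Editor's sketch of the offline mode Yam refers to, for readers who want to try it. This is a minimal illustration rather than the exact command from the Hugging Face tweet: it assumes the `HF_HUB_OFFLINE` environment variable and the `local_files_only` argument described in the Hugging Face documentation, and `load_cached` is a hypothetical helper name. It only works for models that are already in your local cache.

```python
import os

# Assumption: HF_HUB_OFFLINE=1 tells the Hugging Face libraries to skip all
# network calls and use only what is in the local cache. It is read when the
# libraries are imported, so set it before importing them.
os.environ["HF_HUB_OFFLINE"] = "1"


def load_cached(model_id: str):
    """Load a previously downloaded model from the local cache only.

    `load_cached` is a hypothetical helper for illustration. It requires the
    `transformers` package and raises an error if `model_id` was never
    downloaded on this machine.
    """
    from transformers import AutoModel  # imported lazily, after the env var is set

    return AutoModel.from_pretrained(model_id, local_files_only=True)
```

Calling `load_cached` with the id of a model you have pulled before should then work without touching the Hub.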

[00:20:41] Yam Peleg: And for me at least, I had four models that I was waiting on results for, and many other people as well. And just shout out to Hugging Face for actually doing it. I'm running evals locally, and I know how heavy it is to actually run them and how much compute it takes for how long.

[00:21:01] Yam Peleg: And it's amazing to see that they have such a leaderboard with so many models. It's amazing. It's hundreds of thousands of dollars of compute to actually create such a leaderboard. So it's amazing to see. And they provide it literally for free, where the community is growing every day.

[00:21:18] Yam Peleg: So it does cost, so huge shout out to them,

[00:21:22] Alex Volkov: I was trying to prepare

[00:21:23] Yam Peleg: are all addicted much.

[00:21:25] Alex Volkov: Absolutely, addicted. I was trying to prepare yesterday for this space, and part of my preparation is reading X and Twitter, but definitely part of my preparation is going to Hugging Face, reading the model cards, reading the leaderboards, for example. I was trying to count in my head how much stuff we're getting for free from Hugging Face, and one such example is just their blog, which was also down, which I read specifically to prepare for the Matryoshka conversation today.

[00:21:50] Alex Volkov: And, That's just like a huge resource on its own. There's the whole conversation piece where, there's the hub, but there's also the conversations. AK posts papers, for example, they post them on Hug Face, and then there's a whole discussion threads about them as well. That wasn't accessible.

[00:22:04] Alex Volkov: Leaderboards themselves weren't accessible. And just the amount of compute, like you're saying, that they throw at us for free to be able to support this open source is definitely worth a shout out, and definitely shout out to engineers there that brought the hub back. Nisten, what are your thoughts on this?

[00:22:22] Nisten Tahiraj: Yeah, without Hugging Face, this place turned into a flea market for models. People were asking, does anyone have Qwen 72B? And I was like, no, I have the fine-tune. And then the dev lead of Qwen 72B pointed us to some Chinese site where they can download it. It was pretty

[00:22:39] Alex Volkov: Wait. ModelScope is not just some Chinese site. ModelScope is where I think most of the Chinese folks are posting their models. I think modelscope.cn is the alternative in the Chinese area. So there is at least a backup for some Chinese models. Although I think you have to translate that website, right?

[00:22:59] Alex Volkov: But yeah, I don't know. We had a conversation yesterday, and Far El was also talking about datasets, where many folks just upload the dataset and don't keep a local copy of it, and then running evaluations, or doing different things like this, was also prevented yesterday.

[00:23:14] Alex Volkov: Definitely yesterday we discovered how big a part of many of our lives Hugging Face has become, and it was a sobering realization. But, I don't know, I saw people complain online, and I get it, folks. I get it. Sometimes you complain. But honestly, as far as I understood, the downtime wasn't even their fault.

[00:23:32] Alex Volkov: There was like a Mongo thing in AWS. I'm not sure. I didn't dive in deep. When this happens, in my head, having dealt with downtimes before in my professional career, it's nothing but appreciation for the team working hard. And I think, Yam, Clem, the CEO, even responded to you when you said Hugging Face is down, right?

[00:23:55] Yam Peleg: To many people, not just to me, but yeah they are responsive.

[00:23:59] Alex Volkov: Responsiveness and like being in the community and saying, Hey folks, we understand, we're sorry about this. I think that's basically, besides having folks work on this actively, which we know they had, this is all we can basically ask for. So I'm just sending positive vibes and appreciation. I saw some people getting salty.

[00:24:17] Alex Volkov: I saw some people saying Oh, this sucks. And we need a backup. And I was like, yes, but also, this doesn't mean that, you can ignore everything for free that we've got so far from this incredible organization. So shout out. And I don't work there, but I do have many friends who do.

[00:24:33] Alex Volkov: I think, yeah, Nisten, go ahead. And then we'll move on to actual recap of everything we're going to talk about.

[00:24:39] Nisten Tahiraj: Yeah, and same for the leaderboard. We give Hugging Face so much crap when things don't work, and I really appreciated that it's actually the CEO that responds directly to your complaints and tickets, and it's not just some support person. No, it's Clem. He's the actual CEO. They'll respond. They're the first ones to respond.

[00:25:01] Nisten Tahiraj: So that's pretty amazing. You don't really see it in other companies. Like, we don't expect the president of Microsoft, Brad Smith, to ever respond to a GitHub issue. Could you imagine that? So

[00:25:12] Alex Volkov: He is not your favorite. I would love Satya, though, to chime in on the discourse, but not Brad. Yeah, absolutely cannot imagine this, and kudos to them for the participation in the community.

[00:25:23] Open Source AI corner

[00:25:23] Alex Volkov: And I guess we should start with our usual thing, open source. Alright folks, this is our regular update every week for the Open Source Corner. Interestingly, Mistral is not featured in the open source corner today, but we'll mention them anyway. From last week, if you guys remember, Gemma was released. It wasn't open source, it was open weights, but definitely Google stepped in and gave us two models to run, and since then, I just wanted to mention that many folks started using these models. And there's quite a bit of stuff that, Yam, I'm actually wanting to hear from you about, because we talked about this: the Gemma models are not necessarily seven billion parameters, right?

[00:26:24] Gemma from Google is hard to finetune and is not as amazing as we'd hoped

[00:26:24] Alex Volkov: This was a little bit of a thing. And also about fine-tuning. Could you give us a brief outline of how the last week was in terms of Gemma acceptance in the community?

[00:26:32] Yam Peleg: Oh, wow. Gemma is giving me a hard time. This is for sure. I've been fine-tuning Gemma, or at least struggling with fine-tuning Gemma, for a week at the moment. Okay, so starting from the beginning, Gemma is not exactly 7B. The way it is referred to in the paper is that the parameters in the model itself, apart from the embeddings, are exactly 7 billion parameters.

[00:27:01] Yam Peleg: But then you add the embeddings and you're a little bit over 8.5, if I remember correctly. Which is fine. I don't think anyone has any problem with a bigger model. I just think that it'll be more genuine to just say it's an 8B-parameter model. It's fine. That's first.
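
The arithmetic behind "a little bit over 8.5" can be sketched in a few lines. None of the figures below were stated in the episode: the vocabulary size, hidden size, and non-embedding count are assumptions recalled from the Gemma technical report, so double-check them against the report before relying on them. If they are right, the non-embedding part is closer to 7.75 billion than to exactly 7, and the embeddings add most of another billion.

```python
# Assumed figures, recalled from the Gemma technical report (not from the episode).
VOCAB_SIZE = 256_128                   # assumption: tokenizer vocabulary entries
HIDDEN_SIZE = 3_072                    # assumption: embedding width of the "7B" model
NON_EMBEDDING_PARAMS = 7_751_248_896   # assumption: reported non-embedding parameters

# One embedding vector of HIDDEN_SIZE floats per vocabulary entry.
embedding_params = VOCAB_SIZE * HIDDEN_SIZE
total_params = embedding_params + NON_EMBEDDING_PARAMS

print(f"embedding parameters: {embedding_params / 1e9:.2f}B")
print(f"total parameters:     {total_params / 1e9:.2f}B")
```

The large vocabulary is what makes the embedding table so heavy here; with a vocabulary an eighth of that size, the same model would land much closer to its advertised name.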

[00:27:23] Yam Peleg: Second, it behaves differently than what we're used to with Mistral and Llama. I'm not sure why. Maybe someone can tell me, but I'm not sure why it behaves differently. And many people are currently working and struggling to fine-tune it better. This is where it is at the moment. I've seen already Orca.

[00:27:54] Yam Peleg: Someone fine-tuned on Orca and didn't get great results. I also heard that someone fine-tuned on Hermes, I think from Nous. I'm not sure, but I think. Also, results are not great. I'm continuing pre-training and the loss is doing whatever it wants. It goes down and then out of the blue it starts to jump.

[00:28:16] Yam Peleg: I'm not sure exactly why. It might be because the architecture is slightly different. There are slight modifications. So maybe that or maybe something else, but yeah, I think we're still exploring the model. We don't have an answer yet.

[00:28:35] Alex Volkov: Yeah, that's what I got as well. I pinned a few examples. Eric Hartford, of Dolphin fame, who I think now works at Abacus, and Technium as well, tried to do some stuff, and all these losses look crazy. All these losses look like they're jumping around, up and down. I saw a tweet from Philipp Schmid from Hugging Face where they were able to fine-tune some stuff, and the conversation between Eric and Wing Lian from Axolotl.

[00:29:00] Alex Volkov: And there looks to be an effort to try and hone this thing and see if fine-tuning this actually works on some stuff. The Hermes fine-tune was not really an official Nous Research thing. It looked like somebody just took the dataset, and folks weren't able to actually get it to run or perform well, as far as I saw. I haven't seen an update on this, but I'll definitely follow up with Nous.

[00:29:22] Alex Volkov: So I would just remind folks, last week we talked about how Gemma was well received.

[00:29:26] Alex Volkov: Everybody hopped on board super quick and added support. LM Studio and Ollama added support super quick. Wing started adding support to Axolotl for fine-tuning. Hugging Face added support in, I think, Transformers. There's a bunch. Tri Dao added support for Flash Attention. There's a whole community effort to receive Gemma as well as possible.

[00:29:47] Alex Volkov: And they also released some quantized versions from Google. So a very good effort from Google, and then very big acceptance from the community. But since then, what I'm trying to highlight is that a lot of the way we judge whether models are good or not is whether they're fine-tunable, for example, but also whether they're instruction following, whether it's easy to converse with them. I haven't seen any of this come across my timeline at all. I will be frank, I only interacted with the 2 billion parameter model, and wasn't impressed. It's great that they released it.

[00:30:20] Alex Volkov: I would not be using this for any of my workloads. Nisten, do you have any other feedback as well? Specifically around how Mistral 7B seems to still be a good alternative, even though it's performing less well on evaluations.

[00:30:34] Nisten Tahiraj: Yeah, I feel like we have been spoiled by just how high of a bar Mistral 7B has set for everyone, that it even made Mistral Large feel somewhat unimpressive, although it was answering everything perfectly well. But, yeah, not only has it set a very high bar, but it was also very easy to work with. So the amount of innovation that came from the community just building off of the initial weights has made this class of models so extremely competitive that even Google has a hard time cracking through that.

[00:31:15] Nisten Tahiraj: Yeah, our expectations now for a 7B model are extremely high. It has to run on my phone. It has to do what I want. It has to respond. It has to summarize stuff, has to carry forward the conversation. Oh, and it has to score high on the benchmarks too. And this pace of innovation that the community has set is just very hard to match, and it's also incredibly interesting to see that Google is having a very hard time matching or getting close.

[00:31:46] Alex Volkov: Specifically because, in the land of GPU poor and GPU rich, in the original article that defined the two categories, Google is the GPU slash TPU rich, right? They could and have thrown a bunch of compute at these models and still the folks from Mistral, a team that's less than 30 people that started eight months ago released a model.

[00:32:06] Alex Volkov: 6 months ago? I think Mistral 7B is around 6 months ago, right? September? A model that Google, 6 months after, with all the GPU richness, is just barely able to match, not to mention beat significantly. Which is unlike any pace that we're used to. We're used to a 7B model beating a 70B model week after week.

[00:32:25] Alex Volkov: And here's a huge company coming out and saying, hey, here's our best attempt at the 7B model, which Yam doesn't even consider a 7B model, and at least in our attempts to play around with this, it's not beating Mistral significantly, which is strange. But it's also not able to get fine-tuned very easily.

[00:32:43] Alex Volkov: Very interesting, and a highlight of how much quality the Mistral model had. I will also say that Arthur Mensch (we'll cover this in the Mistral section afterwards) came out and basically said, we can only do so much with 1,500 H100s. 1,500 H100s! Just by contrast, Meta announced a few months ago, famously, Zuckerberg came out and said that by the end of this year they're going to have the equivalent of 600,000 H100s' worth of compute to train and host and probably do inference on Meta and Llama.

[00:33:19] Alex Volkov: And this is like 1,500 H100s that Mistral was able to use to train and fine-tune a model that Google cannot wipe off the board completely.

[00:33:29] LLama 3 won't be released until June 2024

[00:33:29] Alex Volkov: It's very crazy. Moving on to basically another news update that's not a news update. We've been waiting for Llama 3 every week. I've been saying, hey, it could get released here, et cetera.

[00:33:41] Alex Volkov: There was a leak from The Information. I actually don't know if it was a leak or not, but The Information came out, and then a bunch of other companies followed with this news, that Llama 3 will be released, I think, in June. This was the update. Llama 3 will not get released for us anytime soon.

[00:34:00] Alex Volkov: We were hoping for a one-year anniversary. Llama 1 was released in February 2023. And now we're not gonna see Llama 3, even though it has finished training, as far as I understood, or as far as the updates went. And while Zuckerberg goes and eats at McDonald's, Llama 3 will not get released to us. I wanted to hear folks here on stage react to this, because it's surprising news, isn't it?

[00:34:23] Alex Volkov: Ha,

[00:34:24] Nisten Tahiraj: I'm gonna say that I called it, just based on how censored and unwilling to answer anything Code Llama 2 was. So yeah, if Code Llama 70B wouldn't answer anything, I figured it would be pretty, it would be around the 3. So now they either have to go way back in the training, to when they started doing a lot of this, and retrain it to be a lot more obedient, but still not horrible or anything. Because we see from Mistral's team that it does obey you and respond to stuff, but it still won't tell you, like, how to kill your cat and stuff. So yeah, the public backlash from it.

[00:35:12] Nisten Tahiraj: People giving it to Gemini and Google has completely affected the Llama 3 release, which is just very interesting.

[00:35:19] Alex Volkov: Interesting, because they did release Llama 1, and then nothing bad happened in the world. And then they released Llama 2 with a commercial license, so people can actually use it, which kickstarted a bunch of open source stuff. And now they're waiting with Llama 3. I heard some stuff that it could potentially be a GPT-4-matching model that we could run.

[00:35:40] Alex Volkov: But we don't know until it's released. Just a very interesting update. And I gotta wonder if, by the time they decide to release this, other open source will catch up or not. Usually when Llama comes out with a big model, it's impressive. But for example, Code Llama was already beaten by the time it came out, right?

[00:35:57] Alex Volkov: If I'm not mistaken, DeepSeek Coder and other models achieved the same score on coding that Code Llama was released with. Maybe waiting a little bit. I gotta wonder what goes into this decision. Which, on the topic of code,

[00:36:10] StarCoder 2 and Stack V2 open source from Hugging Face

[00:36:10] Alex Volkov: I think we're moving to the next thing. StarCoder 2 and The Stack v2 were released, in collaboration with Hugging Face.

[00:36:17] Alex Volkov: The Stack v2 is the second iteration of The Stack dataset, which was just an insane amount of code collected.

[00:36:25] Alex Volkov: I think The Stack v2 now includes opt-outs. So you could say, hey, I want my code to be opted out from The Stack v2. And this new dataset, I think, is 60-something terabytes, I want to believe 10x more than the first Stack. And StarCoder, the 15 billion parameter model, beats Code Llama 13B on pretty much every benchmark: HumanEval+, DS-1000, GSM8K.

[00:36:49] Alex Volkov: Very impressive. It beats, obviously, the previous StarCoder, which was a very significant model. Based on the evaluations, DeepSeek Coder, we know, was one of the best code models so far. And it looks like StarCoder competes with it on a few benchmarks, but on everything else it beats DeepSeek Coder as well, for the 7B model.

[00:37:09] Alex Volkov: But it's a model twice the DeepSeek size as well. So they released three models: 3 billion, 7 billion, and 15 billion parameter versions. 15 billion parameters is a very interesting place, where you could potentially still run this on your Mac, if your Mac is stacked up, and get a decent result back.

[00:37:26] Alex Volkov: It has a 16K context window, a very weird one, like 16,384. It was trained on up to 4 trillion tokens, depending on the size of the model. It includes 600-plus programming languages, which is great. All we care about probably is Python and JavaScript, and maybe some folks care about Rust, but 600-plus programming languages, I honestly didn't even know there were that many.

[00:37:51] Alex Volkov: It gets 46 percent on HumanEval, which is okay. I've seen models that get way better than 46%, so that's interesting. And what else is interesting here? It's a commercial-friendly license, so you can use this for commercial stuff. It can be used for local copilots, which is something we're waiting for.

[00:38:06] Alex Volkov: And the more of this, the better. And yeah, StarCoder 2. But I also want to shout out The Stack v2: the more data we get, the better it is for everybody else and other models as well. And The Stack v2 is definitely a great achievement that we should shout out.

[00:38:23] Nisten Tahiraj: Yeah, this is crazy. The full dataset is 67.5 terabytes for The Stack v2, and you can just have it for free. It's the amount of work. So it's 900 billion tokens extra that went on top of what was actually an excellent coding model to begin with. So this is huge, not just beneficial from the model itself, but also because you can just,

[00:38:47] Nisten Tahiraj: I don't know, fine-tune one for TypeScript, if you want.

[00:38:50] Alex Volkov: Yep. Yeah, go ahead.

[00:38:53] Yam Peleg: Yeah, I think it's worth mentioning that, as far as I understand (I haven't looked at it in depth, because Hugging Face was down), it's a base model. When we compare HumanEval of a base model to a model that was specifically fine-tuned to obey instructions, and we see a result that is, okay, not the same, but somewhere in the ballpark,

[00:39:18] Yam Peleg: it's amazing, because it just means that as soon as you fine-tune it, it's going to be incredible. Moreover, from what I've seen in the paper: I heard about it, and I was sure that I was going to open the paper and what I was going to see is something like, hey, we did the same thing, but huge, 4 trillion tokens, enjoy.

[00:39:38] Yam Peleg: But no, what you see over there is that they really went in depth into the benchmarks themselves and checked, for each benchmark, what exactly it measures and how it correlates to real-life usage. They went over there and benchmarked different packages, each and every one, like how good is it with Matplotlib?

[00:39:59] Yam Peleg: How good is it with SciPy? It's a very detailed and high-quality work. It's very hard to say which is better as a base model, DeepSeek or StarCoder, because there are so many benchmarks in the paper, like I've never seen before. Even DeepSeek has, I think, six benchmarks. StarCoder, I didn't even count, there are so many. I think it's great work. I suppose that the model is really good, at least on the level of DeepSeek, although I don't know, I need to check. But just the paper alone is such a huge contribution, the paper alone and the datasets. So yeah, it's amazing.

[00:40:40] Yam Peleg: And it just, it went a little bit silent. People just released models that were trained on 4 trillion tokens and it goes silent nowadays. It's amazing that we got numb to something that's insane.

[00:40:53] Yam Peleg: And on the same week, on the same week, NVIDIA released a model. I don't think they actually released the model, but they just trained the model on 8 trillion tokens.

[00:41:03] Yam Peleg: And we don't even talk about it. It's just insane.

[00:41:06] Alex Volkov: Let's talk about it. I saw the NVIDIA stuff, but I don't see a release. I saw an announcement, right?

[00:41:12] Yam Peleg: Yeah, it was a paper and I think that's about it. NVIDIA is showing they got the best hardware because they got the best hardware. So they can train on a lot of tokens really fast. And the model is really good at the end because of the tokens. But yeah, I'm just saying that it's increasing: the amount of data is increasing, the size of the models that we actually use is increasing, and it's worth noting the trend: there is a trend of things getting more and more powerful.

[00:41:45] Alex Volkov: Absolutely. And I would just say this is partly what we're here for, to highlight things like this in the open source and shout out the folks who worked hard on releasing this, and make sure that this doesn't go silent, because this effort is very well appreciated. If it's a base model, then we'll get local copilots performing way better.

[00:42:04] Alex Volkov: And this is great, especially the dataset that they released, The Stack v2, 10 times the size of the previous one, and folks would be able to use this to fine-tune other models. And that's obviously also great.

[00:42:15] Argilla releases OpenHermesPreferences

[00:42:15] Alex Volkov: And on the topic of datasets, if you guys remember, we've talked about Argilla multiple times at this point. Shout out Argilla folks, and if you want to come up and talk about Argilla, your place is here.

[00:42:27] Alex Volkov: They released a DPO conversion of Technium's Hermes dataset; it's called OpenHermesPreferences. And as we've talked about Nous Research and Hermes multiple times, this is one of the datasets with, I think, a million rows, compiled from different other datasets as well.

[00:42:45] Alex Volkov: And Argilla is an open source tool that allows you to make datasets better by converting them to preferences for DPO. So they released the DPO version (DPO is direct preference optimization), where basically they take a regular RLHF dataset, with one instruction in a conversation, and turn it into kind of a preference dataset, where they show a few instructions and they actually have information about which would be the more preferable

[00:43:12] Alex Volkov: instruction. That's a very poor explanation of DPO. Yam, if you want to chime in here and clean this up, feel free. And Argilla released OpenHermesPreferences, which is a 1 million preferences dataset on top of Technium's. And it's pretty remarkable, because we know that even at Nous Research, when there are DPO versions of their models, they perform better than regular SFT fine-tuned models on pretty much every benchmark.

[00:43:40] Alex Volkov: And now they've converted all of that dataset into a preference dataset. They've created the responses with another Hermes model, which is pretty cool, right? So they're not using OpenAI, because scraping from OpenAI is, as we saw in the lawsuit with OpenAI, against the terms of service.

[00:44:02] Alex Volkov: But you can actually create these preferences with another model. So they're using Nous Research's Hermes 2 Yi, on top of Yi 34B, with what's called distilabel, to make those instructions a little better. And this dataset is open. So unlike the regular Hermes dataset, this dataset is open for you to also go and fine-tune your models, which is pretty cool.

[00:44:24] Alex Volkov: And shout out to OpenHermesPreferences. I'm gonna pin this to the top of the space and I will also definitely add this to the show notes.
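
To make the "preference dataset" idea a bit more concrete, here is a minimal sketch of how one prompt with several candidate responses can be turned into a chosen/rejected pair, which is the kind of row DPO-style training consumes. It is not Argilla's or distilabel's actual code: the `PreferencePair` class, the `to_preference_pair` function, and the hard-coded judge scores are all hypothetical, standing in for responses generated and rated by other models.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # response the judge preferred
    rejected: str  # response the judge liked least


def to_preference_pair(prompt: str, scored: List[Tuple[str, float]]) -> PreferencePair:
    """Keep the best- and worst-scored responses for one prompt.

    `scored` holds (response_text, judge_score) tuples. In a real pipeline the
    scores would come from a judge model; here they are hard-coded.
    """
    if len(scored) < 2:
        raise ValueError("need at least two responses to express a preference")
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    return PreferencePair(prompt=prompt, chosen=ranked[0][0], rejected=ranked[-1][0])


example = to_preference_pair(
    "Explain what an embedding is.",
    [
        ("It is a thing models use.", 3.0),
        ("An embedding maps text to a vector so similar texts land close together.", 8.5),
    ],
)
print("chosen:  ", example.chosen)
print("rejected:", example.rejected)
```

The difference from a plain instruction dataset is the extra signal: each row says not only what a good answer looks like, but also which answer it was better than.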

[00:44:32] Function calling leaderboard from Berkeley

[00:44:32] Alex Volkov: Now, okay. Let's move on in our conversation. I wanna talk about the function calling leaderboard because I think it's pretty cool. Lemme just go and find this switch real quick. This is from, oh, it was actually, yeah.

[00:44:44] Alex Volkov: There was an effort before called Gorilla, and now the same folks from Berkeley released a leaderboard called the Berkeley Function-Calling Leaderboard. Essentially, function calling, for those who don't use any open source model but use something like OpenAI: OpenAI, during last summer, I think, answered everybody's request to give us structured outputs in the form of JSON with, hey, we're going to introduce something called function calling, where you call our model and you provide one function or several functions in your code, and the model will respond and say, hey, you should call this function, with these parameters.

[00:45:23] Alex Volkov: And basically, instead of getting JSON mode, we got function calling back then. Now we have both, we have a way to get just structured JSON, but also we get models to respond with which functions we should call. And this is great for agents, this is great for folks who are building with these models.
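
A rough sketch of that loop, for folks who haven't built with it: you describe your functions to the model, the model replies with the name of a function and its arguments, and your code does the actual call. Everything here is illustrative. The spec format, the `dispatch` helper, and the hard-coded `fake_reply` are assumptions rather than any provider's real API, and the triangle-area function is borrowed from the Berkeley example mentioned later in this episode.

```python
import json


def calculate_triangle_area(base: float, height: float) -> float:
    """The local function the model is allowed to ask for."""
    return 0.5 * base * height


# Description sent to the model alongside the prompt. Real providers each have
# their own schema; this shape is only illustrative.
FUNCTION_SPECS = [
    {
        "name": "calculate_triangle_area",
        "description": "Area of a triangle from its base and height.",
        "parameters": {"base": "number", "height": "number"},
    }
]

# Maps function names the model may return to real Python callables.
REGISTRY = {"calculate_triangle_area": calculate_triangle_area}


def dispatch(model_reply: str) -> float:
    """Parse the model's structured reply and run the function it picked."""
    call = json.loads(model_reply)
    func = REGISTRY[call["name"]]  # KeyError if the model names an unknown function
    return func(**call["arguments"])


# Hypothetical model output; a real one would come back from an API call.
fake_reply = '{"name": "calculate_triangle_area", "arguments": {"base": 10, "height": 5}}'
result = dispatch(fake_reply)
print(result)
```

The model never executes anything itself. It only picks the function and fills in the arguments, which is why a leaderboard can score it on whether it picked the right one with the right parameters.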

[00:45:38] Alex Volkov: And I think during the summer, because OpenAI came up with this concept, OpenAI was the only model that was supporting this. And then quickly, open source started catching up. And I think, Nisten, correct me if I'm wrong, but I think Jon Durbin's Airoboros has a bunch of function calling instructions in it.

[00:45:54] Alex Volkov: So this model, and then models that were trained on Airoboros, were also fairly okay with function calling. Mistral just released their update, so Mistral supports function calling.

[00:46:05] Nisten Tahiraj: They had about a thousand function calling entries in the Airoboros 2 dataset, or I forgot. Just look up Jon Durbin, J O N Durbin, and the Airoboros, A I R O B O R O S, dataset. And, yeah, apparently there's about a thousand entries in there for function calling. That, by accident, helped a lot of the other models be better at function calling too.

[00:46:29] Alex Volkov: Yeah, so every other model that was trained on Airoboros, which is a lot (Hermes includes the Airoboros dataset). I don't know if this is by accident or this is now how things work in the merging world, and in fine-tuning on top of datasets that were built on top of other datasets, right?

[00:46:44] Alex Volkov: But definitely other open source models now support at least the notion of function calling, and then eventually we get to the point where there's now a leaderboard, like we like. So if we're going to talk about embeddings later, there's the MTEB leaderboard for different embedding models, even though I see Bo in the audience and he's not very happy with how easy it is to game this leaderboard.

[00:47:07] Alex Volkov: We obviously look at the open source LLM leaderboards, and Yam was talking about submitting a few things there and seeing how they perform, and that's been exploding in popularity, and merging too. So it's great to have a function calling leaderboard as well. The folks at Berkeley test models, I think API only, I don't know if they're supporting open source at this point, and look at

[00:47:28] Alex Volkov: how much performance you could expect on different function calling tasks, and I think for folks who are building with this it's very cool. So, some of the models that are leading this leaderboard: GPT-4, the latest preview from January, is leading this. They have something called OpenFunctions v2, which I think is from the organization that put this up, Gorilla LLM, and it has an Apache 2 license, and they have an average score and different scores for Simple Function, Multiple Functions, Parallel Functions, all of these tasks.

[00:48:08] Alex Volkov: And I just want to highlight this and add this to the show notes, because more and more we see Mistral Medium entering there, Claude from Anthropic, and open source models. And I think for many folks building agents, building with these models, this type of interaction with the model is very important, where it's not only a textual prompt and you get something back, you actually need to do something with it. And I think a shout out to the folks for building and maintaining this leaderboard.

[00:48:34] Alex Volkov: And I think they also released the Gorilla model as well. Let's move on, I think this is it, folks. I think this is everything we have to talk about in the open source LLMs.

[00:48:42] Alex Volkov: And given that, Connor, given that STORM is in the area of open source-ish, let's cover STORM a little bit.

[00:48:49] Alex Volkov: I think this is a good time, because it also dances in the area of interest that we talked about last time. Do you want to present STORM and talk about this and see how cool this is?

[00:48:58] Connor Shorten: Yeah, cool. I guess maybe let me say one more thing on Gorilla. I think it's fascinating going through the functions that they have. If you go through the OpenFunctions blog post from Berkeley, you have calculate triangle area, and then you give it the base and the height. And I think that kind of super specific functions, having a massive dataset of that,

[00:49:16] Connor Shorten: it's fascinating, seeing this next evolution of that. But okay, so with STORM, yeah, there's definitely some intersection between DSPy and the function calling models. With DSPy, one of the built-in signatures is that ReAct one, where with ReAct you have thought, action.

[00:49:33] Connor Shorten: And so it's one way to interface tools. Yeah, the tool thing is pretty interesting. I think it's also really super related to the structured output parsing and the "please output JSON" and Jason, our favorite influencer of the function calling

[00:49:47] Alex Volkov: I just wanna make sure that folks don't miss this. Jason Liu is the guy who you're referring to, and he's our favorite influencer in forcing these models to output JSON. I find it really funny that a guy named Jason is the guy who's leading the charge of getting these models to output JSON formatted code.

[00:50:04] Alex Volkov: I just find it really funny. Didn't wanna skip this. I wanted to plug that joke somewhere, but please go ahead and let's talk about STORM. Oh, and a shout out: on both Weights & Biases and Connor's show at Weaviate, Jason appeared talking about the Instructor library and how to get these models to give a structured output.

[00:50:21] Alex Volkov: So definitely shout out for Jason for this, check out his content on both platforms.

[00:50:29] Connor Shorten: Yeah, awesome. Yeah, it's such a huge part of these LLM pipelines. I know Bo is going to speak in a bit, who's someone I consider one of the experts in information retrieval. And one of these big things is, you will retrieve and then you'll re-rank and then you'll generate. And if it doesn't follow the output exactly, you can't parse it in the database.

[00:50:47] Connor Shorten: So it's such a massive topic, but okay.

[00:50:50] Stanford introduces STORM - long form content grounded in web search

[00:50:50] Connor Shorten: So starting with STORM, I guess I can tell a funny story about this. Erica and I were hacking on this and we came up with this plan: you start off with a question, and then you do retrieval, so you're looking at the top five contexts as well as the question, and you use that to produce an outline.

[00:51:06] Connor Shorten: And again, structured output parsing: that outline better follow the comma-separated list format, so that you can parse it, and then you'll loop through the topics. And then we have a topic-to-paragraph prompt, where you're doing another retrieval, now with the topics. And then we have the proofreader and then the blog-to-title.

[00:51:26] Connor Shorten: So that's the system that we got our hands on. And I could probably talk about that better than the STORM system, but it's very similar. With STORM, the difference is: we're retrieving from a Weaviate index with Weaviate blog posts (let's make it as much Weaviate as we can), but they replaced the specific retriever with a web search retriever. And so I was playing with that a bit on the weekend as well, using the You.com API as the web search, and it's pretty cool: web search as well as a private thing that you curate. I think that's definitely one of the big topics.
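
The four steps described here (outline, topic-to-paragraph, proofreader, blog-to-title) can be sketched as a plain Python pipeline. This is a reading of the description, not the code from Weaviate Recipes and not DSPy: `write_blog`, `parse_outline`, the prompt strings, and the stub `fake_retrieve` and `fake_llm` functions are all hypothetical, so the example runs without a model or a search index.

```python
from typing import Callable, Dict, List


def parse_outline(raw: str) -> List[str]:
    """Parse the comma-separated outline the model is asked to produce."""
    return [topic.strip() for topic in raw.split(",") if topic.strip()]


def write_blog(question: str,
               retrieve: Callable[[str], List[str]],
               llm: Callable[[str], str]) -> Dict[str, object]:
    # Layer 1: question + top five contexts -> outline.
    contexts = retrieve(question)[:5]
    topics = parse_outline(llm(f"OUTLINE\nquestion: {question}\ncontexts: {contexts}"))
    # Layer 2: one more retrieval per topic, then topic -> paragraph.
    paragraphs = []
    for topic in topics:
        topic_contexts = retrieve(topic)[:5]
        paragraphs.append(llm(f"PARAGRAPH\ntopic: {topic}\ncontexts: {topic_contexts}"))
    # Layer 3: proofread the assembled draft. Layer 4: blog -> title.
    blog = llm("PROOFREAD\n" + "\n\n".join(paragraphs))
    title = llm("TITLE\n" + blog)
    return {"title": title, "topics": topics, "blog": blog}


def fake_retrieve(query: str) -> List[str]:
    """Stub retriever standing in for a vector index or web search."""
    return [f"doc about {query} #{i}" for i in range(8)]


def fake_llm(prompt: str) -> str:
    """Stub model: answers based on the task tag on the first prompt line."""
    task, _, rest = prompt.partition("\n")
    if task == "OUTLINE":
        return "intro, how it works , use cases"
    if task == "PARAGRAPH":
        topic = rest.split("topic: ", 1)[1].split("\n", 1)[0]
        return f"Paragraph on {topic}."
    if task == "PROOFREAD":
        return rest
    return "A stub title"


result = write_blog("What are cross encoders?", fake_retrieve, fake_llm)
print(result["title"])
print(result["topics"])
```

The fragile spot is `parse_outline`: if the model answers with a numbered list or a sentence instead of a comma-separated list, every later step gets the wrong topics, which is why the structured output point keeps coming up.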

[00:51:56] Connor Shorten: Okay, so then the interesting thing is once you've got this, in our case, as a four layer system, now you use DSPy to compile it. So what compiling it entails in DSPy is tweaking the task description as well as producing input output examples. So you have in the prompt, you slightly change it from, you'll take a topic and write it into a blog post.

[00:52:19] Connor Shorten: Typically, that ends up resulting in a blog post about software documentation, right? So that's what that ends up looking like. And then the input outputs end up being, like, an example of what are cross encoders. Here's a blog about cross encoders. So you can use that input output to then reason about the new inference, so hopefully that's a good description of what it means to compile these programs, where you optimize the prompts for each layer in the task as you decompose this task into its subtasks.

[00:52:45] Connor Shorten: STORM then introduces something that I think is pretty novel, which is how you do that research loop. So we naively just went from question to outline and then instantly fleshed out the outline, whereas they instead go from question to perspectives about the topic. And you retrieve from each of the perspectives about the topic, and then you'll write it, and then, I'm not sure how it all gets resolved, but it's almost like a multi-agent system in my view, this kind of perspective-guided approach of adding personas or background.
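A rough sketch of the perspective-guided step as described in the conversation: instead of retrieving once for the question, propose several perspectives and retrieve separately for each. This is not the STORM implementation. The function names and the query template are hypothetical, and how the per-perspective notes get merged into an article is deliberately left out, since that part is not covered here.

```python
from typing import Callable, Dict, List


def research_by_perspective(
    question: str,
    propose_perspectives: Callable[[str], List[str]],  # hypothetical: question -> personas
    retrieve: Callable[[str, int], List[str]],         # hypothetical: (query, k) -> results
    k: int = 3,
) -> Dict[str, List[str]]:
    """Collect retrieval results separately for each perspective on the question."""
    notes: Dict[str, List[str]] = {}
    for perspective in propose_perspectives(question):
        # Each perspective gets its own query, so the results are not all
        # dominated by the most obvious reading of the question.
        query = f"{question} (from the perspective of {perspective})"
        notes[perspective] = retrieve(query, k)
    return notes


# Offline stand-ins; a real system would use an LLM and a web search API here.
def demo_perspectives(question: str) -> List[str]:
    return ["a historian", "an engineer"]


def demo_search(query: str, k: int) -> List[str]:
    return [f"result {i} for: {query}" for i in range(k)]
```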

[00:53:18] Connor Shorten: So I think that's probably the key differentiator between STORM and the kind of blog post system that I described. But we have open source code on Weaviate Recipes if you want to see what our four layer program looks like, and compiling that with the Bootstrap Optimizer.

[00:53:35] Connor Shorten: What the Bootstrap Optimizer does is you just run a forward pass through the system with a super high capacity model like GPT-4 to get the input-output examples, and then you hope that Turbo, or one of the cheaper or open source models, can look at those input-output examples and then copy the system behavior.
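The bootstrapping idea can be illustrated without any framework. The sketch below is not DSPy's API and does far less than its optimizers; it only shows the core move described above: run a strong "teacher" over some inputs, keep the outputs that pass a check, and paste them into the prompt for a cheaper model. The names `bootstrap_demos` and `build_prompt`, and the prompt layout, are hypothetical.

```python
from typing import Callable, List, Tuple

Demo = Tuple[str, str]


def bootstrap_demos(
    teacher: Callable[[str], str],       # stands in for the high-capacity model
    inputs: List[str],
    metric: Callable[[str, str], bool],  # keeps only outputs judged good enough
    max_demos: int = 2,
) -> List[Demo]:
    """Run the teacher on inputs and collect up to max_demos accepted examples."""
    demos: List[Demo] = []
    for x in inputs:
        y = teacher(x)
        if metric(x, y):
            demos.append((x, y))
        if len(demos) == max_demos:
            break
    return demos


def build_prompt(task_description: str, demos: List[Demo], new_input: str) -> str:
    """Assemble a few-shot prompt for the cheaper model from the collected demos."""
    lines = [task_description, ""]
    for x, y in demos:
        lines += [f"Input: {x}", f"Output: {y}", ""]
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)
```

The cheaper model then receives the output of `build_prompt(...)` and, ideally, imitates the teacher's behavior from the examples.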

[00:53:51] Connor Shorten: There's a lot of other interesting things about this, like multi-model systems. Even in the STORM paper they compare GPT Turbo, and then they use Mistral 7B Instruct as the judge. Another thing is, like earlier, talking about re-ranking: you might want to have the long context models do re-ranking, because with re-ranking you typically try to give it a lot, because you're trying to put a band-aid on the search.

[00:54:13] Connor Shorten: So you probably want to have 20 to a hundred results that go into the re-ranker rather than five to 10. And it's probably also not really a task for LLMs anyway. I think that's another opportunity for a task-specific model. But overall, to conclude this thing about STORM, I think for me the big exciting thing is that DSPy is making it super clear how to build more than chatbots or just simple question answering.
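A small sketch of the retrieve-then-re-rank shape being discussed: pull a wide candidate set from the first-stage search, score each candidate against the query, and keep only the best few for generation. The word-overlap scorer is a toy stand-in for whatever task-specific re-ranking model would be used in practice, and all names here are hypothetical.

```python
from typing import Callable, List


def retrieve_then_rerank(
    query: str,
    first_stage: Callable[[str, int], List[str]],  # cheap search: (query, n) -> candidates
    score: Callable[[str, str], float],            # re-ranker: (query, doc) -> relevance
    n_candidates: int = 50,                        # wide net, in the 20-100 range mentioned
    top_k: int = 5,                                # what actually reaches the generator
) -> List[str]:
    candidates = first_stage(query, n_candidates)
    ranked = sorted(candidates, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


def overlap_score(query: str, doc: str) -> float:
    """Toy relevance score: number of distinct words shared by query and document."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))


CORPUS = [
    "weaviate release notes",
    "what are cross encoders",
    "cross encoders for re ranking",
    "cooking pasta",
]


def demo_first_stage(query: str, n: int) -> List[str]:
    # Stand-in for a vector or keyword search that returns a loosely ordered candidate list.
    return CORPUS[:n]
```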

[00:54:40] Connor Shorten: I think we're probably within a few months of a point where, anytime you have a pull request, the documentation will be written for you automatically. Probably you could even have an idea and have a pull request created by the model. I'm personally biased by coding applications, but yeah, this kind of long form content generation by breaking down each task and then optimizing each part of the task.

[00:55:05] Connor Shorten: It's all just really interesting.

[00:55:07] Alex Volkov: Very interesting. And I added STORM, from Yijia Shao, to the show notes as well, and folks, it's definitely worth checking out, because it writes Wikipedia-length articles and uses the You.com API or different search APIs to give perspectives and references. Very interesting. For the sake of time I want to move on, so just to reset the space: we've been at this for almost an hour. You guys are on ThursdAI.

[00:55:33] Alex Volkov: ThursdAI is the weekly podcast and newsletter that's recorded live on X Spaces. And I'm here with several friends and guests and experts in different fields. And we've been covering open source LLMs until now. And I think we're going to move into big companies because we need to cover this. And soon we're going to have some folks to do a deep dive about embeddings.

[00:55:51] Alex Volkov: And let me just make sure that the folks know that they can come up. The big companies, LLMs and APIs: this is the segment where we chat about OpenAI and Microsoft and Google and whatnot. Not the models that they released for us in open weights and open source that we can run ourselves; this is the segment where we talk about APIs and developments and different updates.

[00:56:13] Alex Volkov: So let's run through them.

[00:56:14] Mistral releases Mistral Large & Le Chat interface

[00:56:14] Alex Volkov: The biggest one from this Monday was Mistral releasing Mistral Large, which we've been waiting for and getting excited about. And also they released a chat version of their models called Le Chat. And it's very impressive, folks. Mistral Large, based on at least some metrics that they released, is second only to GPT-4, and beats Anthropic's Claude and Gemini Pro on the MMLU score.

[00:56:43] Alex Volkov: And Mistral Large is vastly superior to Mistral Medium, handles 32k tokens of context, and is natively fluent in English, French, Spanish, German, and Italian. It highlights how much Mistral is focusing on becoming the OpenAI alternative from Europe, because you can go to Le Chat and there execute every chat that you have with their models.

[00:57:09] Alex Volkov: And basically, maybe you don't have to have an OpenAI subscription. I think that's what they want to do. But also, this model is available in the API, and it shows significant performance, on top of everything else, in the other languages. And they're aiming for the five top languages in Europe, obviously, and I think it's a very important move of theirs that they're establishing themselves as this big company.

[00:57:32] Alex Volkov: This was why we moved them to the big company APIs as well. The announcement also includes something interesting. They said, we have also updated Mistral Small in our API to a model that's significantly better and faster than Mixtral 8x7B.

[00:57:45] Alex Volkov: If you remember, when we talked about Mistral releasing API access, we said that whatever Mistral Next is, it's probably going to be medium. So now we have a large model that outperforms pretty much every model besides GPT-4 on different tasks, according at least to them, but also the small model that's faster and better.

[00:58:06] Alex Volkov: They upgraded this behind the scenes. They haven't released any of this in open weights. And the response from the community was partly this: Mistral is releasing a bunch of stuff, and none of it is the stuff we expected. No torrent links this time, no open models that we can start fine-tuning.

[00:58:22] Alex Volkov: And I think so first of all, kudos on this release. I've used some of the stuff in the chat, and I'm very happy with the responses. They're fairly quick, but definitely giving good responses. Nisten, I think your perspective from before, from the open source segment is very interesting where they spoil us so much with the open models, with the Mixtral models, and even the 7B, that even large doesn't seem that significantly better.

[00:58:45] Alex Volkov: However, just on the metrics, it looks like we just got another competitor in the ring. Now there's Google with Gemini Pro; Anthropic, whose Claude keeps releasing models that are less performant, at least on LMSys, than the previous models; and now Mistral, not only doing fully open weights, open source, but also in the API.

[00:59:03] Alex Volkov: And if folks want to build on top, they can. An additional thing to this: they also announced a partnership with Microsoft, and these models are also going to be distributed through Azure. And I think this is a big deal for companies who maybe don't want to trust a startup from Europe that's less than one year old, for example. Maybe their servers are in Europe, maybe the companies don't want to trust their ability to stay up because there's only 30 people, or they're enterprises that need more stuff like ISO and different things.

[00:59:34] Alex Volkov: And so I think it's a big deal that Microsoft is now also supporting and giving us access to these models through Azure, especially for companies that want stability. Not Stability the company, just stability in general. I want to just mention that, if you guys remember, after Dev Day OpenAI went down for a week, or not a week, but there was a whole period where OpenAI had a lot of issues in production, and the Azure version of OpenAI stayed stable.

[01:00:00] Alex Volkov: Obviously Microsoft wants to sell their cloud, and I do believe this is a very big deal that Mistral is now supported through Azure as well. In addition, Microsoft also announced a small stake in Mistral, and Arthur, the CEO of Mistral, went and clarified. So first of all, their new website with these announcements, again, didn't include some stuff, or included a note that you shouldn't train on this, right?

[01:00:22] Alex Volkov: And then our friend Far El here for the second time called them out publicly and for the second time, Arthur Mensch, the CEO of Mistral came and said, whoops, gone. And so it does seem like an omission rather than something they put on purpose and then they remove after Twitter calls them out.

[01:00:38] Alex Volkov: Far El, thank you for noticing that. But also some other folks noted that their commitment to open source, which we discussed before, was gone from the website. And they put it back. And so now, even though this time they didn't release any open source, any open weights for us, their commitment to open source is prominently featured at the top of their website.

[01:00:59] Alex Volkov: And now there's two segments there. One of them is optimized models, they call them. And one of them is open weights models that they released for the community. As we talked about previously in the open source segment, their models from six months ago are still competing with something like the new and cool Gemma, at 8 billion parameters.

[01:01:15] Nisten Tahiraj: It's still a 32k context window, by the way. I measured, and after that it completely forgot. And also, it was okay. I was expecting it, as a chat model, to be way more chat optimized, but it does feel more like a base model. And yeah, again, as I said in the comments before, we're too spoiled by all the 7B and Mixtral fine-tunes and merges.

[01:01:43] Nisten Tahiraj: Now, this is extremely good and is very utilitarian. And if your business needs it, you should use it because it provides reliable answers. It's just that we were expecting more.

[01:01:56] Alex Volkov: So one thing definitely to note as well, and we mentioned this a little bit, but it's definitely worth mentioning: the smaller model is now upgraded and better. So if you play with this, they also updated the pricing for it. And I would also caution folks, the tokenizer they use is a different tokenizer.

[01:02:10] Alex Volkov: So sometimes when you measure tokens they may look different. Our friend Xenova, here in the audience, has a tokenizer playground on Hugging Face, which by the way, with the rest of Hugging Face, also went down yesterday. So I went to check just the length of a string and I wasn't able to. It was sad, but now it's back.

[01:02:24] Alex Volkov: So that tokenizer, I think, measures OpenAI token lengths, and Mistral, I think, has a different one. So when you calculate pricing for use, definitely make sure that you're calculating the right thing. Xenova, you're welcome to come up and tell us about this. So one last thing on Mistral is that it supports function calling as well, which I think is a big deal.
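On the tokenizer caution above: the same text can come out to a different token count under different tokenizers, and the bill follows the count of whichever tokenizer the provider uses. The sketch below makes that concrete with two toy tokenizers and a made-up price. None of these are real tokenizers or real prices; they are placeholders to show where the count plugs into the estimate.

```python
import math
from typing import Callable, Tuple


def estimate_cost(
    text: str,
    count_tokens: Callable[[str], int],  # must be the tokenizer of the model being billed
    usd_per_million_tokens: float,       # hypothetical price, not a real quote
) -> Tuple[int, float]:
    """Return (token_count, estimated_cost_in_usd) for the given text."""
    n_tokens = count_tokens(text)
    return n_tokens, n_tokens * usd_per_million_tokens / 1_000_000


# Two toy tokenizers that disagree on the same input.
def whitespace_tokens(text: str) -> int:
    return len(text.split())


def four_char_tokens(text: str) -> int:
    return math.ceil(len(text) / 4)
```

Measuring a prompt with one provider's tokenizer and pricing it with another provider's rate mixes the two, which is the mistake being warned about.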

[01:02:41] Alex Volkov: And we mentioned this before with the function calling leaderboard. And now Mistral models can also respond to your RAG applications or whatever with the actual functions that you should call, which I think is super cool. The industry is moving there, and it shows again that OpenAI can come up with something
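For readers who have not used function calling, here is a sketch of the application side in the OpenAI-style format: the app advertises a tool as a JSON schema, the model replies with the name of a function and a JSON string of arguments, and the app parses that and runs the real function. The `get_weather` tool and the sample tool call are invented for illustration, and whether a given provider matches this shape field for field is an assumption to check against its documentation.

```python
import json
from typing import Callable, Dict


def get_weather(city: str) -> str:
    """Hypothetical local function the model is allowed to request."""
    return f"Sunny in {city}"


# Tool description sent along with the chat request (OpenAI-style schema).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

REGISTRY: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def dispatch(tool_call: dict) -> str:
    """Run the function named in a tool call; the arguments arrive as a JSON string."""
    function = tool_call["function"]
    name = function["name"]
    if name not in REGISTRY:
        raise KeyError(f"model requested an unknown function: {name}")
    arguments = json.loads(function["arguments"])
    return REGISTRY[name](**arguments)


# Example of what a model's tool call might look like (invented for this sketch).
sample_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": "{\"city\": \"Paris\"}"},
}
```

This is also where the earlier structured output point bites: if the arguments string is not valid JSON, `json.loads` raises and the call cannot be executed.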

[01:02:57] Alex Volkov: a year ago and basically set the standard for how things should look. I actually don't know if the Assistants API is going to be like this, but I do know that, for example, we talked about Groq, and Groq supports the OpenAI standard. I don't know if Mistral does, but many of these, like the Together API and others, I think Perplexity as well, all have their own version of their API, but you can also just replace whatever code you wrote for OpenAI with a different proxy URL.

[01:03:24] Alex Volkov: And then you basically use the same structure that OpenAI innovated on, so that I think is pretty cool. Moving

[01:03:32] Nisten Tahiraj: Yeah,

[01:03:33] Connor Shorten: Also, just a note: the OpenAI pip package allows you to actually call any URL that uses that standard, it doesn't matter if it's OpenAI or not. It is very easy to drop in any replacement to the OpenAI

[01:03:49] Alex Volkov: Yeah, including local ones. If you use LM Studio, our friends at LM Studio, shout out Yags, or Ollama, I think both of them will expose a local server when you run the open source models. And then you can put in your code your local URL that runs the server with the local model. And then your code will also work, which is, yeah, thanks for all.
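To make the "just swap the URL" point concrete, here is a sketch using only the Python standard library. It builds the same chat completions request for two different base URLs without sending anything. The local address is an assumption (LM Studio's local server is commonly reached at `http://localhost:1234/v1`, but check your own setup), and `local-model` is a placeholder name. The official `openai` package offers the same switch through its `base_url` argument.

```python
import json
from urllib.request import Request


def build_chat_request(
    base_url: str,
    model: str,
    user_message: str,
    api_key: str = "not-needed",  # local servers typically ignore the key
) -> Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Same code path, different server: only the base URL (and model name) changes.
hosted = build_chat_request("https://api.openai.com/v1", "gpt-3.5-turbo", "Hello", api_key="YOUR_KEY")
local = build_chat_request("http://localhost:1234/v1/", "local-model", "Hello")

# To actually send one of these: urllib.request.urlopen(local) with the server running.
```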