Hey dear ThursdAI friends, as always I’m very excited to bring you this edition of ThursdAI, September 21st, which is packed full of good updates, great conversations with experts, breaking AI news, and not one but two interviews!

TL;DR of all topics covered

AI Art & Diffusion

🖼️ DALL-E 3 - High-quality art with a built-in brain (Announcement, Comparison to MJ)

Microsoft - Bing will have DALL-E 3 for free (Link)

Big Co LLMs + API updates

Microsoft - Windows Copilot 🔥 (Announcement, Demo)

OpenAI - GPT-3.5 Turbo Instruct (Link)

OpenAI - Finetuning UI (and finetuning your finetunes) (Announcement, Link)

Google - Bard has extensions (twitter thread, video)

Open Source LLM

Glaive-coder-7B (Announcement, Model, Arena)

Yann LeCun testimony in front of the US Senate (Opening Statement, Thread)

Vision

Leak: OpenAI GPT-4 Vision is coming soon + Gobi multimodal? (source)

Tools & Prompts

Chain of Density - a great summarizer prompt technique (Link, Paper, Playground)

Cardinal - AI infused product backlog (ProductHunt)

Glaive Arena - (link)

AI Art + Diffusion

DALL-E 3 - High-quality art with a built-in brain

DALL-E 2 was the reason I went hard into everything AI. I have a condition called aphantasia, and when I learned that AI tools could help me regain a part of my brain that’s missing, I was in complete AWE. My first “AI” project was a Chrome extension that injects prompts into the DALL-E UI to help with prompt engineering.

Well, now not only is my extension no longer needed, but prompt engineering for AI art itself may die a slow death with DALL-E 3, which is going to be integrated into the ChatGPT interface. ChatGPT will be able to help you… chat with your creation, ask for modifications and alternative styles, and suggest different art directions!

In addition to this incredible new interface, which I think is going to change the whole AI art field, the images are of mind-blowing quality: coherence of objects and scene elements is top-notch, and the ability to tweak tiny details really shines!

They also really fixed hands and text! Get ready for SO many memes coming at you!

Btw, I built conversational image generation into my Telegram ChatGPT bot (with Stable Diffusion, before there was an API), and I still remember how addictive it was! So did my friends from Krea :) so y’know… where are our free DALL-E credits, OpenAI? 🤔

Just kidding. Another notable thing: DALL-E 3 will be integrated into the ChatGPT Plus subscription (and Enterprise), will refuse to generate art in the style of living artists, and has a very, very strong bias towards “clean” imagery.

I wonder how fast it will come to the API, but this is incredible news!

P.S. If you don’t want to pay for ChatGPT, conversational DALL-E 3 is apparently already rolling out for free in Bing Chat 👀 It’s only for a certain percentage of users for now, but it will be free for everyone going forward!

Big Co LLM + API updates

Copilot, no longer just for code?

Microsoft announced some breaking news during #thursdai: they confirmed that Copilot is now part of the new Windows and will be just a shortcut away for many, many people. I think this is absolutely revolutionary: just last week we chatted with Killian from Open Interpreter, and having an LLM run things on my machine was one of the main reasons I was so excited about it!

And now we have a full-on, baked-in AI agent inside the world’s most popular operating system, running for free on all the mom-and-pop Windows computers out there, just a shortcut away!

Copilot will be a native part of many apps, not only Windows; here’s an example of Copilot in PowerPoint!

As we discussed on the pod, this will put AI into the hands of so, so many people for whom opening the ChatGPT interface is out of reach, and I find it an incredibly exciting development! (I will not be switching to Windows for it, though. Will you?)

Btw, shoutout to Mikhail Parakhin, who led the Bing Chat integration and is now in charge of the whole Windows division! It shows how dedicated Microsoft is to AI, and it really seems that they don’t want to “miss” this revolution like they did with mobile!

OpenAI releases GPT-3.5 Turbo Instruct!

For many of us who used the GPT-3 APIs before it was cool (who has the 43-character API key 🙋♂️), we remember when the “instruct” models were all the rage, and then OpenAI basically told everyone to switch to the much faster and more RLHF’d chat models.

Well, now they’ve brought GPT-3.5 back in an instruct, turbo flavor: it’s no longer a chat model, it’s a completion model, and it’s apparently much better at chess?

Another interesting thing: it includes logprobs in the response, so you can build much more interesting software (e.g., by asking for several responses and comparing their log probabilities). For example, if you’re asking the model for a multiple-choice answer to a question, you can rank the answers by their logprobs!
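To make the ranking idea concrete, here's a minimal sketch. It assumes you've already pulled, for each candidate answer, the per-token logprobs the completion API returned; the `candidates` values are made up, and `rank_by_logprob` is a hypothetical helper, not part of any SDK. It sums each answer's token logprobs and softmaxes the totals so the scores are comparable across answers.

```python
import math


def rank_by_logprob(candidates: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Rank candidate answers by joint log probability, normalized across candidates."""
    # Joint log probability of each answer = sum of its per-token logprobs.
    totals = {answer: sum(lps) for answer, lps in candidates.items()}
    # Softmax over the totals; subtracting the max keeps the largest weight at 1,
    # so the exponentials can't all underflow to zero.
    best = max(totals.values())
    weights = {answer: math.exp(total - best) for answer, total in totals.items()}
    norm = sum(weights.values())
    scores = [(answer, w / norm) for answer, w in weights.items()]
    # Highest-probability answer first.
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical logprobs for single-token answers "A", "B", "C".
    example = {"A": [-0.1], "B": [-2.5], "C": [-4.0]}
    for answer, prob in rank_by_logprob(example):
        print(f"{answer}: {prob:.3f}")
```

Summing logprobs favors shorter answers, so this works best when every option is a single token like "A"/"B"/"C"; for longer answers you might average per token instead.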

Listen to the pod; Raunak explains this really well!

Finetune your finetunes

OpenAI also released a UI for finetuning GPT-3.5, upped the number of concurrent finetunes to 3, and now lets you finetune your finetunes, i.e., continue finetuning already-finetuned models!
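For the API side of this, here's a minimal sketch of building training data in the chat-format JSONL that GPT-3.5 finetuning accepts (one `{"messages": [...]}` object per line). Per OpenAI's finetuning docs, continuing a finetune means starting a new job with your existing finetuned model's name as the base `model`. The helper name `to_chat_jsonl` and the example pairs are hypothetical.

```python
import json


def to_chat_jsonl(pairs: list[tuple[str, str]], system_prompt: str) -> str:
    """Serialize (user, assistant) pairs as chat-format finetuning JSONL, one example per line."""
    lines = []
    for user_text, assistant_text in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }
        # json.dumps emits a single line, which is what JSONL requires.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical examples; a real dataset needs many more than two.
    data = [("What's ThursdAI?", "A weekly AI news space."), ("When?", "Every Thursday.")]
    print(to_chat_jsonl(data, system_prompt="Answer briefly."), end="")
```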

Bard Extensions are like ChatGPT plugins, but more native.

While we wait for Gemini (c’mon Google, just drop it!), the upcoming multimodal LLM that will allegedly beat GPT-4, Google is shoving new half-baked features into Bard (remember Bard? It’s like the 5th most used AI assistant!)

You can now opt in and @-mention stuff like Gmail, YouTube, Drive, and many more Google services, and Bard will connect to them, do a search (apparently not a vector search, just a keyword search), and show you the results (or summarize your documents) inside the Bard interface.

The @ UI is really cool and reminded me of Cursor (where you can @ different files or documentation), but in practice, in my two checks, it really didn’t work at all and was worse than just a keyword search.

Open Source LLM

Glaive-coder-7B reaches an incredible 63% on HumanEval

Friends of the pod Anton Bacaj and Sahil Chaudhary have open-sourced a beast of a coder model, Glaive-coder-7B. With just 7B parameters, it achieves an enormous 63% pass@1 on HumanEval, which is higher than Llama 2, Code Llama, and even GPT-3.5 (based on their technical reports) 🔥 (the table from the Code Llama release is included for reference; that table is now meaningless 😂)
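For context on that number: HumanEval scores are usually reported as pass@k, using the unbiased estimator from the Codex paper that introduced HumanEval (Chen et al., 2021, "Evaluating Large Language Models Trained on Code"): generate n samples per problem, count the c that pass the unit tests, compute 1 - C(n-c, k) / C(n, k), and average over problems. For k = 1 this reduces to c/n. Here's a minimal sketch; we don't know exactly how Glaive's 63% was computed (greedy decoding vs. sampling), and the sample counts below are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n generated samples, c of which pass the tests."""
    if n - c < k:
        # Every possible set of k samples contains at least one passing sample.
        return 1.0
    # 1 - P(all k drawn samples fail), drawing k of the n samples without replacement.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems; results holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Made-up sample counts for three problems, 10 samples each.
    results = [(10, 9), (10, 5), (10, 0)]
    print(f"pass@1 = {benchmark_pass_at_k(results, k=1):.3f}")
```

Python's integers are arbitrary-precision, so `math.comb` stays exact even for large n; the float division at the end is the only rounding step.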

Yann LeCun testimony in front of the US Senate

Look, we get it: the meeting of the CEOs (and Clem from Hugging Face) made more waves, especially with that huge table. Who wasn’t there? Elon, Bill Gates, Sundar, Satya, Zuck, IBM, Sam Altman…

But IMO the real government AI moment belonged to Yann LeCun, chief AI scientist at Meta, who came in hot with a very pro-open-source opening statement and was very patient with the very surprised senators on the committee. The opening statement is worth watching in full (I transcribed it with Targum, cause… duh), and Yann actually retweeted it! 🫶

Here’s a little taste, where Yann literally says “make progress as fast as we can” 🙇♂️

He was also asked what happens if the US over-restricts open-source AI and our adversaries… don’t? Will we be at a disadvantage? Good questions, senators. I like this thinking; more of this, please.

Vision

Gobi and GPT-4 Vision are incoming to beat Gemini to the punch?

According to The Information, OpenAI is gearing up to release the vision model of GPT-4 because of the hinted upcoming release of Gemini, a multimodal model from Google (which is also rumored to be released very soon; I’m sure they will release it on the next ThursdAI, or the one after that!)

This seems to explain both DALL-E 3 and the GPT-4 Vision leak: apparently Gemini is multimodal on the input (it can take images and text) AND on the output (it can generate text and images), and OpenAI may want to get ahead of that.

We’ve seen leaked images of GPT-4 Vision in the ChatGPT UI, so it’s only a matter of time.

The most interesting thing from this leak was the model codenamed GOBI, which is going to be a “true” multimodal model, unlike GPT-4 vision.

Here’s an explanation of the difference from Yam Peleg, ThursdAI expert on everything language models!

Voice

Honestly, nothing major happened with voice since last week 👀

Tools

Chain of Density

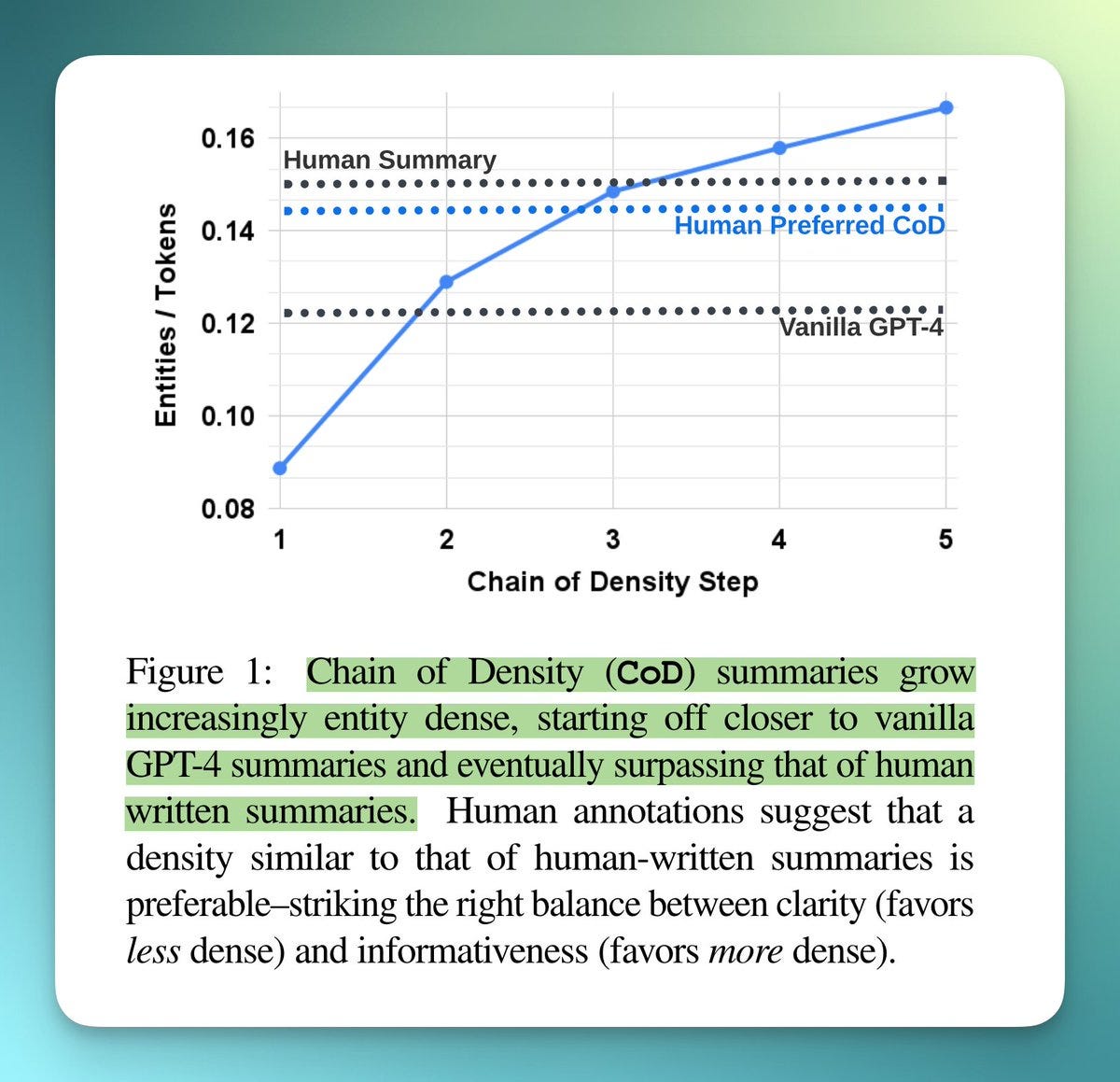

The Salesforce AI team has developed a new technique for improving text summarization with large language models. Called Chain of Density (CoD), this prompting method allows users to incrementally increase the informational density of a summary.

The key insight is balancing detail against the main ideas when summarizing text. With CoD, you can prompt the model to keep adding detail until an optimal summary is reached, which gives you more control over the summary output.
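Here's a minimal sketch of the idea, assuming you send the resulting string to whatever chat model you use. The prompt text is our paraphrase of the technique (repeatedly find 1-3 missing entities and rewrite the summary to include them without making it longer), not the paper's verbatim prompt; `chain_of_density_prompt`, `entity_density`, and the default word count are hypothetical choices. The paper tracks density as entities per token; here whitespace-split words stand in for tokens, and you supply the entity list yourself.

```python
def chain_of_density_prompt(article: str, steps: int = 5, words: int = 80) -> str:
    """Build one prompt asking for `steps` successively denser summaries (a paraphrase of CoD)."""
    return (
        f"Article:\n{article}\n\n"
        f"You will write {steps} increasingly concise, entity-dense summaries of the article.\n"
        f"Repeat the following two steps {steps} times:\n"
        "Step 1. Identify 1-3 informative entities from the article that are missing "
        "from the previous summary.\n"
        f"Step 2. Write a new, denser summary of about {words} words that keeps every entity "
        "from the previous summary and adds the missing ones, compressing wording to make room.\n"
        "Never drop an entity that appeared in a previous summary.\n"
        'Answer with a JSON list of objects with keys "missing_entities" and "denser_summary".'
    )


def entity_density(summary: str, entities: list[str]) -> float:
    """Entities per token; whitespace-split words are a rough stand-in for model tokens."""
    tokens = summary.split()
    if not tokens:
        return 0.0
    # Case-insensitive substring match: crude, but enough to compare summary iterations.
    found = sum(1 for entity in entities if entity.lower() in summary.lower())
    return found / len(tokens)


if __name__ == "__main__":
    print(chain_of_density_prompt("Salesforce AI introduced Chain of Density ...", steps=3))
    print(entity_density("Salesforce's CoD densifies GPT-4 summaries", ["Salesforce", "CoD", "GPT-4"]))
```

Because each step keeps the length fixed while adding entities, `entity_density` should rise across the returned summaries; you can then pick the iteration whose density reads best for your use case.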

The Salesforce researchers tested CoD against vanilla GPT summaries in a human preference study. The results showed people preferred the CoD versions, demonstrating the effectiveness of this approach.

Overall, the Salesforce AI team has introduced an innovative way to enhance text summarization with large language models. By tuning the density of the output, CoD prompts can produce higher quality summaries. It will be exciting to see where they take this promising technique in the future.

RememberAll - extend your LLM context with a proxy

We had Raunak from RememberAll on the pod this week, and that interview is probably coming out on Sunday, but I wanted to include this in Tools because it’s super cool.

Basically, with a two-line code change, you can send your API calls through the RememberAll proxy, which extracts the key information, embeds it and stores it in a vector DB for you, and then injects it back when you make further calls.
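To illustrate the mechanism described above (store, embed, retrieve, inject), here's a toy, in-memory sketch. It is not RememberAll's implementation: `MemoryProxy` is hypothetical, a bag-of-words counter stands in for a real embedding model, a Python list stands in for the vector DB, and the "extract the key information" step is skipped by storing each fact verbatim.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryProxy:
    """Hypothetical memory layer sitting between an app and an LLM API."""

    def __init__(self, top_k: int = 2) -> None:
        self.top_k = top_k
        # (original text, vector) pairs: a list standing in for a vector DB.
        self.store: list[tuple[str, Counter]] = []

    def remember(self, fact: str) -> None:
        """Embed and store a fact (a real system would first extract key info from the chat)."""
        self.store.append((fact, embed(fact)))

    def augment(self, user_message: str) -> str:
        """Prepend the most similar stored facts to the outgoing message."""
        query = embed(user_message)
        scored = sorted(((cosine(query, vec), fact) for fact, vec in self.store), reverse=True)
        memories = [fact for score, fact in scored[: self.top_k] if score > 0]
        if not memories:
            return user_message
        context = "\n".join(f"- {fact}" for fact in memories)
        return f"Relevant memories:\n{context}\n\nUser: {user_message}"


if __name__ == "__main__":
    proxy = MemoryProxy(top_k=1)
    proxy.remember("My dog is named Biscuit")
    proxy.remember("I live in Denver")
    print(proxy.augment("What is my dog called?"))
```

In a real proxy, something like `augment` would run on each outgoing request before it's forwarded to the LLM API, which is presumably why switching over only takes pointing your client at the proxy.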

It’s a super clever way to extend memory. Here’s a preview from Raunak (demo); the full interview is coming soon!

Cardinal has launched on ProductHunt, from my friends Wiz and Mor (link)

Quick friendly plug: Wix and Mor are friends of mine, and they have just launched Cardinal, an AI-infused product backlog that extracts features, feature-request discussions, and more from customer feedback across tons of sources.

Go give them a try if you’re looking to make your product backlog work better; it’s really, really slick!

Hey, if you’ve made it this far, do me a quick favor? Send me a DM with this emoji 🥔, and then share this newsletter with one friend who, like you, loves AI?

Thanks, I expect many potatoes in my inbox! See you next ThursdAI 🫡

Here’s the full transcript (no video this time: I’m finishing this up at 10:30, and the video would take me at least 3 more hours, apologies 🙇♂️)

[00:10:21] Alex Introduces Yam Peleg

[00:10:57] Alex Introduces Nisten Tahiraj

[00:11:10] Alex Introduces Far El

[00:11:24] Alex Introduces Xenova

[00:11:44] Alex Introduces Roie S. Cohen

[00:11:53] Alex Introduces Tzafrir Rehan

[00:12:16] DALL-E 3 - An AI art model with a brain, coming to chatGPT plus

[00:20:33] Microsoft launches Windows Copilot

[00:30:46] OpenAI leaks: GPT-4 Vision, Gobi

[00:38:36] 3.5 instruct model from OpenAI

[00:43:03] Raunak intro

[00:43:25] Bard Extensions allow access to Gmail, YouTube, Drive

FULL transcript:

[00:00:00] Alex Volkov: So, ThursdAI is this wonderful thing that happened, and it happened organically as well.

[00:00:26] And basically what happens is we have this live recording every Thursday, every ThursdAI, on Twitter Spaces. I'm very grateful to share the stage with experts in their fields, and we all talk about different things, because AI updates are so multidisciplinary right now. It's really hard even for experts in their own field to follow everything.

[00:00:51] I find this mixture-of-experts type model on stage very conducive, because we all go and find the most up-to-date things from the last week. And then we have folks for whom it's their specialization to comment on them, for example. And you guys in the audience get the benefit of this. And it just happened organically through many conversations we had on Spaces since GPT-4 was launched.

[00:01:16] Literally the day, March 14th, 2023, aka Pi Day, was the first day we started these Spaces, and since then the community has grown to just... an incredible number of people who join: quality experts, top-of-their-field people. I'm just so humbled by all of this. And since then, many folks, like Roie here in the audience, told me, hey, Alex, you're doing this at the weirdest hour.

[00:01:42] Thursday a.m. in San Francisco, nobody's gonna come. It's really hard to participate in the actual live recording. And so, I started a newsletter and a podcast for this. So if you aren't able to make it, you're more than welcome to subscribe to the newsletter. You know what? Even if you are here every week, subscribe to the newsletter, because why not?

[00:02:03] And share it with your friends. We're talking about everything AI-related. Hopefully, hopefully no hype. And I have friends here to reduce the hype when I'm getting too hypey. Definitely none of the "Hey, here's a new AI tool that will help you fix the thing you don't need fixing."

[00:02:18] And I think that's been resonating with the community. And so, as you're now here, you're also a participant in this community. I welcome everybody to tag ThursdAI on their AI news, or use #thursdAI, or just tag the ThursdAI pod, so people get some more visibility. But you are part of the community now. Those of you who come back, those of you who listen in, those of you who share: all of these things are very helpful for the community to grow and for us to just know about more stuff.

[00:02:49] It's actually an incredible signal when two or three or more of you react under a piece of news and say, hey, we probably should cover this on ThursdAI. It really helps, truly. I think with that, yeah, this intro is enough intro. Welcome. What's up, Tzafrir? How are you?

[00:03:06] Tzafrir Rehan: All's well. Thank you very much. I wanted to strengthen your point about the time factor. Let me expand: think of anyone here who is even a little bit interested in generative technologies and breaking news, who has other things to do in the meanwhile, and who is also looking to actually build something cool from all of this.

[00:03:31] Time is the limiting factor here. That's the hardest resource. Having this group, with everyone exploring everything together, is a lifesaver. It's an order-of-magnitude improvement in our ability to move forward, each one individually and as a group together. Just to give examples:

[00:03:53] I'm interested in generative images, videos, and audio. And for each of these, there are hundreds of models available right now, with the ability to make fine-tunes on specific datasets. For some of these, generating a single asset like a video can take hours. Training takes hours. If you want to explore a little bit, like the effect of different prompts, just generating hundreds of samples takes hours.

[00:04:26] So without this group, it would be impossible to even know where to go and where to invest my time. And the name of the game right now is to choose where you invest your time, to actually get things done and keep up. So thank you, thank you, thank you, to you and to this group. And let's have fun.

[00:04:46] Alex Volkov: Thank you. Thank you, everyone. I definitely feel super-powered by the people in this group who can back me up when I read one tweet and see some people react to it, but I didn't have the time or the capability or the experience to dive in.

[00:05:00] And then there are folks here who did, and we complete each other. I haven't shared this since we started, but our motto is: we stay up to date so you don't have to. And "have to" is the operative word. You want to stay up to date, and you're welcome to stay up to date and to tag us and talk with us and leave comments here in the chat as well, but you don't have to anymore, because there's a newsletter that will update you and there are folks on stage who will talk about this.

[00:05:26] I want to briefly cover one tiny thing I did on the podcast that I think I will keep doing. So far, editing the hour and a half, two hours that we have here live was a pain, but I just decided to lean into this, because the conversation we're having here is so much more informative and interesting that any type of summary I want to do or wanted to do is not going to do it justice.

[00:05:50] And so I had different feedback from different folks about the length of the podcast. Some people said, yeah, 25 minutes of just the updates is the right spot. But the podcast is moving towards being the live recording. I'm going to edit it, don't worry.

[00:06:04] But besides that, the podcast will be this conversation going forward, as much as I'm able to edit it and ship both the newsletter and the podcast in time on Thursday. But with that, Tzafrir, thank you for the kind words, man. I appreciate you being here and sharing your expertise with us.

[00:06:20] I want to say hi to Xenova and Arthur.

[00:06:22] We'll start with Xenova. Welcome, Josh. How are you?

[00:06:27] Xenova: Yeah, hey. Yeah, pretty good. Been busy, busy, busy.

[00:06:33] For those who don't know, I'll just quickly introduce myself. I am the creator of Transformers.js, which is a JavaScript library for running Hugging Face Transformers directly in the browser, or Node, or Deno, or maybe Bun soon.

[00:06:49] Who knows when that gets sorted out properly, but any JavaScript environment you're looking for. And yeah, I recently joined Hugging Face, which is exciting. Now I'm able to work on it basically full time. And yeah, lots of exciting things are in the pipeline.

[00:07:06] Alex Volkov: It's been incredible to have you here and see your progress with Transformers.js,

[00:07:10] and then you joining Hugging Face, man. I appreciate the time here.

[00:07:13] Arthur, thank you for joining. Please feel free to introduce yourself.

[00:07:18] Arthur Islamov: Okay. So, my name is Arthur, and I'm fixing WebAssembly and making it work with big models.

[00:07:25] So soon you will be able to run anything huge in the browser. I'm particularly interested in diffusion models, so right now I'm making Stable Diffusion 2.1 work in the browser, and then I have some plans for SDXL, and maybe Llama and other models too, once all that work is done.

[00:07:50] Alex Volkov: That's awesome. Thank you for joining.

[00:07:52] Far El: Yo, what's up? Yeah, my name is Farouk. I'm the founder of Nod.ai, where we build autonomous agents, and I'm also working on Skunkworks.ai, which is an open-source group where we are pushing the boundaries of what we can do with LLMs and AI as a whole, really.

[00:08:10] Our first major project is this open-source MoE architecture that we've been tinkering with for the last couple of months. We're also exploring even more exotic AI architectures to try to get to GPT-4-level capability for open source.

[00:08:28] Alex Volkov: Awesome. Awesome. Awesome. And Nisten, welcome, brother.

[00:08:33] Nisten Tahiraj: Yeah. Hey everyone, I'm Nisten Tahiraj, and I'm terminally online. That's the introduction. Thank you. Yeah, I'm also a dev in Toronto. I worked on the first doctor wrapper, which is still doing pretty well. Like, no complaints so far, six months later, knock on wood. And yeah, recently I started doing a lot more open-source stuff.

[00:09:03] Put out a bunch of open-source doctor models on Hugging Face, which I still need to write a benchmark for, because there are no public safety benchmarks. And yeah, lately I've been working with Farouk to make the whole Skunkworks AI mixture-of-experts model more usable, because it's not even bleeding edge.

[00:09:26] This one is more like hemorrhaging-edge technology. It takes like three people to get it to work. And yeah, I've been extremely interested in the WebGPU side ever since Xenova, in a random tweet, just gave me the command to start Chrome Canary properly. And then I was able to load a whole 7B model.

[00:09:48] And yeah, for the future, if things go okay, the goal I've set myself is to have some kind of distributed mixture of experts running via WebGPU, and then have Gantt. js encrypt the connections between the different nodes and experts. And we'll see how that plays out, because everything is changing so quickly.

[00:10:14] But yeah, it's, it's good to be here. And I'm glad I found this Twitter space randomly way back in

[00:10:21] Alex Introduces Yam Peleg

[00:10:21] Alex Volkov: Yeah, for a long time. I just want to welcome Yam to the stage. Yam doesn't love introducing himself, but I can do it for you this time, Yam, if you'd like.

[00:10:31] All right. So I will just run through the speakers on stage real quick. Yam, thank you for joining us. Folks, Yam is our, I could say, resident machine learning engineer extraordinaire: everything from datasets and training large language models to understanding the internals of how they work and baking a few of his own. Definitely the guy who, if we find an interesting paper, will be able to explain it to us.

[00:10:57] Alex Introduces Nisten Tahiraj

[00:10:57] Alex Volkov: Nisten, I call you the AI engineer hacker type. The stuff that you sometimes do, like running things on CPU and taking approaches nobody thought of before, we're all in awe of.

[00:11:10] Alex Introduces Far El

[00:11:10] Alex Volkov: Far El, you're doing great community organizing, and we're waiting to see what comes out of the MoE and Skunkworks.

[00:11:15] And folks should definitely follow Far El for that and join the Skunkworks OS efforts in the Discord. (It's really hard for me to say "Skunkworks OS.")

[00:11:24] Alex Introduces Xenova

[00:11:24] Alex Volkov: Xenova is our run-models-on-the-client guy: Transformers.js, everything related to ONNX, and everything related to quantization and making the models smaller.

[00:11:35] All of that. All models, all modalities, but I think the focus is on the browser, after you're new, and obviously, as you introduced yourself, WebGPU stuff.

[00:11:44] Alex Introduces Roie S. Cohen

[00:11:44] Alex Volkov: We have Roie, who's a DevRel at Pinecone. He didn't say it, but for Pinecone, vector DBs, context windows, and discussions about RAG, all of these things, Roie is our go-to.

[00:11:53] Alex Introduces Tzafrir Rehan

[00:11:53] Alex Volkov: And Tzafrir also introduced himself: everything vision, audio, and excitement. So a very well-rounded group here, and I definitely recommend everybody follow them. And now that we are complete, let's please start with the updates, because we have an incredible, incredible Thursday, literally every week, right, folks?

[00:12:12] Literally every week we have an incredible Thursday

[00:12:16] DALL-E 3 - An AI art model with a brain, coming to chatGPT plus

[00:12:16] Alex Volkov: So we'll start with two big ones. I want to say the first big update was obviously DALL-E 3. I will just briefly share my story with DALL-E, and then I would love folks on stage to chime in. Please raise your hand so we don't talk over each other. When the announcement came out for DALL-E 2, I want to say it was a year ago, a year and a half ago maybe, in January, February or something, it blew me away.

[00:12:47] I have something called aphantasia, where, I don't know if you saw this, but I don't have the visual mind's eye, so I can't visually see things, and it's been a thing with me all my life. And then here comes the AI tool that can draw. Very quickly after that I noticed Stable Diffusion, for example, and it just

[00:13:04] took off from there. Everything I have, all my interest in AI, started from DALL-E, basically. And DALL-E 3 seems like the next step in all of this. And the reason I'm saying this is because DALL-E 3 is visually incredible, but that is not actually the biggest part of it, right? We have Midjourney.

[00:13:22] I pinned somebody's comparison between DALL-E and Midjourney. And Midjourney is beautiful and gorgeous, and a way smaller team. DALL-E 3 has this beautiful thing where it's connected to ChatGPT. So not only is it not going to be separate anymore, you're going to have the chat interface into DALL-E 3.

[00:13:41] ChatGPT will be able to help you as a prompt engineer, and you'd be able to chat with the creation process itself. So you will ask for an image, and if you don't know how to actually define what you want in this image, which types, you'd be able to just chat with it. You will say, you know what, actually make it darker, make it more cartoony, whatever.

[00:14:01] And then ChatGPT itself, with its brain, is going to be your prompt engineer buddy in the creation. And I think, quality aside, and the quality is really, really good, the thing they're highlighting for DALL-E 3 is the ability to have multiple objects and subjects from your prompt in one image, because it understands them.

[00:14:23] But also, the piece where you can keep talking to an image is definitely changing the image creation UI significantly, whereas Midjourney, with all the love we have for it, is still stuck in Discord. They're still working on the web. It's taking a long time, and we've talked about Ideogram coming at them from the side.

[00:14:44] We know that Google has multiple image models, like Imagen and different ones. They have like three, I think, at this point that they haven't yet released. And DALL-E, I think, is the first multimodal-on-the-output model that we'll get, right? So multimodal on the output means that what you get back is not only text generation, and we saw some other stuff, right?

[00:15:06] We saw some graphs, we saw that Code Interpreter can run code, etc. But this is multimodal on the output. And very exciting. DALL-E 3 news took Twitter by storm. Everybody started sharing this, including us. We can't wait to play with DALL-E 3. I welcome folks on stage to share what we think about this; I want to start with Tzafrir's reaction.

[00:15:26] And the last thing I'll say is that now that the community is growing, suddenly people DM me. So first of all, you're all welcome to DM me about different stuff. I see somebody in the audience who DMed me, I think she's still here, so shout-out, about joining the beta test for DALL-E 3, which they're now able to share about. Funny tidbit: right now it's baked into the UI.

[00:15:48] So DALL-E 3 is going to be baked into the ChatGPT and ChatGPT Enterprise UIs. However, when they tested this, they tested it via a plugin. So OpenAI actually built a plugin and had restricted access to it. And for folks who talked with this plugin, the plugin ran the DALL-E ChatGPT version behind the scenes.

[00:16:06] And we don't have access to it yet. I don't know if anybody on stage has access; please tell me if you do. Access is coming soon, which is interesting from OpenAI. And I think that's most of the DALL-E stuff I had. And I want to hear from Tzafrir, please.

[00:16:23] And please raise your hand. I really need us to not talk over each other.

[00:16:30] Thank you.

[00:16:31] Tzafrir Rehan: So yeah, DALL-E 3 is looking amazing. I did see some examples that people with early access were generating, and it's far more detailed and coherent than the things we are used to seeing from Stable Diffusion. And much less randomness, I would say. And what's exciting here is a few changes in the paradigm of how it works.

[00:16:56] For example, like you said, it doesn't expect you to know all the intricacies. You can describe in natural language what you want to see, and it will use GPT, however much they are powering it with, to generate a prompt for the whole image. That's the one thing. The other thing is that it's not text-to-image.

[00:17:21] It's more a conversation. Similar to how ChatGPT is a conversation between you and the assistant, DALL-E 3 is a chat. You can see it in the video that they released: you generate one image, and then you discuss whether you want to make changes to it or more variations, and it will be very interesting to see that flow.

[00:17:44] From the AI artist's perspective, I think it will be met with a little bit of hesitation, at least until we know how much fine control they are providing, and whether they are letting us influence all the various parameters that the model uses. That is a lot of the workflow for generating AI art.

[00:18:06] And when you want to make a piece for release as an artist, you spend a lot of time fine tuning it.

[00:18:13] And today, with Stable Diffusion and with Midjourney, we have a lot of fine-grained control: changing the parameters by a little bit, adding one more word. That's one thing. Another thing is that artists usually want to have control over the prompt. For example, this week I saw an interesting example, I'll try to find it for you, where the artist adds the words "event horizon" to an image.

[00:18:44] Now, the image is not of space, but the model does take the idea of the event horizon shape and makes the image more shaped like an event horizon. Those are the kinds of tricks prompt engineers use right now to make very specific changes in the image. So I'm interested in knowing whether DALL-E 3 will allow that kind of control.

[00:19:08] And most of all, finally: we had DALL-E 2 very early in the game, before Stable Diffusion even gave us the first clunky models, before everything. And then there was so much work, and Midjourney, and so many interesting things coming out in image generation, and OpenAI was always kind of hanging back.

[00:19:30] We had this very basic DALL-E 2, which sometimes works and usually gives you very weird results. So yeah, it's good to see that they are still working on actually innovating and thinking of the next step, and how we can combine all of these technologies to make something that's much more fun in terms of user experience.

[00:19:53] Alex Volkov: Absolutely. And I will remind folks about the internals behind diffusion models like Stable Diffusion, et cetera. OpenAI actually made the whole field happen, I think, with some, was it ViT? The Vision Transformer that they released, and

[00:20:05] Yam Peleg: They released the first diffusion... the first diffusion model.

[00:20:08] Alex Volkov: Yes. And so the whole field owes a lot to OpenAI, and it's great. Tzafrir, I join you: it's super great to see them innovate and give us some new UIs for this, because I heard from multiple people who have access that you can get lost in just chatting to a picture, in the creation process.

[00:20:26] It's like a whole new creation process, basically, like prompting, but chatting. I'm very excited about this, very excited.

[00:20:31] So we'll definitely talk more about this.

[00:20:33] Microsoft launches Windows Copilot

[00:20:33] Alex Volkov: I want to move on to the next thing, which is exciting. Until today, basically, the word Copilot meant GitHub Copilot, at least for those of us with VS Code, those of us who write code. GitHub Copilot, obviously, is the autocomplete engine that gives you code completions.

[00:20:50] Many of us use it, many of us don't. But today Microsoft, which owns GitHub and is very close with OpenAI, announced Copilot for Windows. It's coming soon with the Windows update, and we've seen some previews of it in some discussions. I find it very interesting that Microsoft is innovating in AI while we're still waiting for Google to come up with Gemini.

[00:21:18] We're waiting for Google; we're going to talk about Bard updates as well. But Copilot for Windows will be just a shortcut away. I think Win+C is the new shortcut, and you'll be able to ask it for different things. For those in the audience who didn't join us in previous ThursdAIs,

[00:21:40] we talked with Killian from the open-source project Open Interpreter. One of the things we all like about Open Interpreter is that it runs on my machine and generates code, and some of that code can be AppleScript. It's very easy to run stuff on the Mac using AppleScript: you can open Calendar, send emails, do a bunch of stuff.

[00:21:58] So it was beautiful to see that even an open-source agent like Open Interpreter is able to run code and then activate stuff on your computer. And I think Killian mentioned that Microsoft's Copilot was coming. Exactly a week after that discussion, we now have Windows Copilot.

[00:22:16] It's going to be able to run Windows for you. It's going to be able to open and close apps. It's going to be like ChatGPT, but living inside Windows. I think it's going to be based on GPT-4; that only makes sense given the Microsoft and OpenAI collaboration. And I can't overstate this.

[00:22:38] GPT-4 was released in March, right? ChatGPT was released less than a year ago, in November. And now the next version of probably the world's most common operating system, Windows, is going to have AI built in as a companion. How insane is this, folks? I have a Windows machine, because I have an NVIDIA GPU, blah, blah, blah, so I'm not only on the Mac, and I'm really excited to play with this.

[00:23:09] An additional thing they announced together with this update connects to what we said earlier: Bing Chat and Windows Copilot will both have DALL-E 3 built in for free. DALL-E 3 is going to be available to ChatGPT Plus subscribers, the ones of us who pay the 20 bucks.

[00:23:32] However, through Bing you'll be able to get it for free, and it's going to be part of Windows. So my mom, who probably doesn't use Windows, okay, her husband, my mom's husband, uses Windows; he'd be able to use GPT-4 to run his Windows and also generate images. I think that's incredible, and only Microsoft can give it out for free.

[00:23:52] I think that's mostly it for the Microsoft update. However, this is breaking news: they literally released the tweet once we started the space, so I'm sure more will come out. I invite folks on stage to chime in with Windows Copilot news. What do you think about this? Is it going to change many people's usage of Windows or not?

[00:24:16] Nisten Tahiraj: I mean, the whole using-software thing is all up in the air now, right? Everyone's in creative mode. It's pretty hard to predict what the better interface is going to be; voice is getting really good. Open Interpreter showed that it can do a whole bunch of stuff. You can also accidentally delete all the JSON files on your computer, but I think those issues will be worked out.

[00:24:43] Yeah, it's hard to call, because again, Bing is still a free beta service. They haven't quite figured out how to fully monetize it, and it's not cheap to run, especially considering that it's the multimodal image one. So I don't have that much of an opinion.

[00:25:05] I think it's still too early to call as to how interfaces will change.

[00:25:09] Alex Volkov: I agree. I'm just excited that the AI we've come to know in less than a year is now baked into an operating system for everyone, right? Even going to a website like ChatGPT and registering is not for everyone, and this will definitely lower the bar for usage. What's up, Yam?

[00:25:28] Yam Peleg: Hi. I just want to say that, because everything is so early, we've seen really great infrastructure for RAG, but we haven't seen a product using RAG at this wide a scale. And it makes sense in the end.

[00:25:47] I mean, you have a lot of information scattered around different software and different devices. I think it's the perfect idea to merge everything with RAG and let you chat with whatever information you have, everywhere. Microsoft is perfectly positioned to do that, and I'm looking forward to it.

[00:26:13] I think it's a great idea. I don't know if the implementation will be great; we need to see. I think it will, but we need to see. As a concept, it's a great concept.
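A minimal sketch of the retrieval-augmented generation (RAG) idea Yam describes, for readers new to it: find the snippets most relevant to a question among scattered personal data, then place them in the prompt so the model answers from them. This is a toy under stated assumptions: it ranks with bag-of-words cosine similarity instead of a learned embedding model, the documents are made up, and `retrieve` and `build_prompt` are hypothetical helpers, not anything Microsoft has described for Windows Copilot.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a real system would use an embedding model instead.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two word-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    # Rank document names by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda name: cosine(q, Counter(tokenize(docs[name]))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    # Stuff the retrieved snippets into the prompt as grounding context.
    context = "\n".join(f"[{name}] {docs[name]}" for name in retrieve(query, docs, k))
    return f"Answer using only the context below.\n{context}\n\nQuestion: {query}"


# Made-up personal data scattered across "apps".
docs = {
    "email_flight": "Your flight to San Francisco departs Tuesday at 9am.",
    "doc_notes": "Meeting notes about the quarterly product backlog.",
    "calendar": "Dentist appointment on Friday afternoon.",
}
print(build_prompt("When is my flight to San Francisco?", docs, k=1))
```

In a production system the word counts would typically be replaced by embeddings and a vector index, but the shape of the pipeline (score, take the top k, put them in the prompt) stays the same.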

[00:26:26] Alex Volkov: Something I saw from a person who's very close with the Microsoft team: the guy behind Bing, his name is Mikhail Parakhin, and for some reason he has this very non-branded Twitter account that barely has an avatar image.

[00:26:43] He's been doing customer support, basically, on Twitter. People will say, oh, Bing has this or that, and he's been very, very responsive. Two things he did say: first of all, DALL-E 3 is already part of Bing for some percentage of the population.

[00:27:00] So if you use Bing (and we've talked about Bing before regarding images and vision), go try to generate images with it. It used to be DALL-E 2, but if you get good ones, you may be getting DALL-E 3, which is incredible. You may already have this. And the second thing: I saw somebody comment that he is now head of Windows, right?

[00:27:17] So the guy behind Bing, the guy who pushed AI into Bing, is now moving to be head of Windows. I think this, together with this release, shows just how serious Microsoft is about AI everywhere, and how determined it is not to miss this new wave the way it missed the mobile wave. Everybody says that Apple overtook Microsoft and Microsoft was late to mobile.

[00:27:37] And it just goes to show how much they're investing in this whole thing. I find it very, very good, because for many people even going to a website is a barrier to entry. When it's just one click in their operating system of choice, it's going to shove AI into way more people's faces.

[00:27:54] I also want to say that, out of the big ones, Microsoft is fairly based in terms of safety and regulation, which we usually don't talk about (maybe in the next space), but we could do worse than Microsoft. That's surprising for me, because I used to hate on Internet Explorer most of my life.

[00:28:12] And now Microsoft is very based. Any last comments on Windows Copilot here, folks? Then we can move on to the next stuff, from OpenAI actually.

[00:28:22] Nisten Tahiraj: So my last one is I've started using Edge Canary as my daily browser just because of the sidebar and the splitting. So if you have a widescreen monitor, it's actually very handy because you can have code interpreter on one side, and I'll show an image of it very quickly.

[00:28:39] And I have Bing, which has excellent back-and-forth voice. It has really good voice generation, which would normally be very expensive if you were paying for it, but it's in beta. And then I have the actual work, and on the sidebar you can have... anyway, this interface is a bit convoluted and the Edge browser is still a little bit clunky, but overall it's been working pretty well for me.

[00:29:06] So I don't know. I sort of see the browser becoming more and more important; that's your operating system. Some people disagree. Shawn (swyx), for example, is trying to do more OS-native stuff with his tool that lets you run multiple ones. But yeah, you can see the screenshot of how I started using it with voice.

[00:29:28] In general, I see it as: you'll just talk to it back and forth. I think that's, at least that's what I want.

[00:29:32] Alex Volkov: Were you referring to swyx's GodMode app, where you can run all the LLMs in one window?

[00:29:39] Nisten Tahiraj: Yes, but that one, for example, on the Mac is right, there's an icon right beside the clock. And you just click that and it pops up, so it's unintrusively there.

[00:29:49] It adds to your experience instead of getting in the way. I do like that part, because it uses screen real estate efficiently. But if you use a wider monitor, so does Edge, with all of its right-sidebar shortcuts, because you can add your Discord and your Outlook there too, right next to where I use the Code Interpreter window, and it even has some completion and document-writing stuff now.

[00:30:19] So that's how I see it. Again, it's up in the air what people will find most helpful.

[00:30:25] Alex Volkov: Absolutely. I've been using Bing somewhat as well, and yes, the sidebar can also read from the page, right? The Bing Chat sidebar has access to the page if you allow it.

[00:30:37] For summarization and different things, that's really excellent as well; it complements your browsing experience. So I'm assuming they're doing some of that with Copilot.

[00:30:46] OpenAI leaks: GPT-4 Vision, Gobi

[00:30:46] Alex Volkov: All right, folks, we're moving forward because we have much to cover. And, there's more news from OpenAI.

[00:30:52] These actually came before DALL-E, and we were supposed to talk about them first, but then DALL-E 3 came out. So let's cover some news from OpenAI. It feels like the theme behind all of this news is that OpenAI is trying to rush stuff out the door, or at least announce it, because they know, or they heard, or they saw the information leaking from Google about Gemini, the multimodal,

[00:31:19] huge model from Google that is potentially GPT-4-like, can take images as input, and is multimodal on the output as well. We don't know much about Gemini so far, but we do know that The Information, the publication, reported that Gemini is coming very soon.

[00:31:40] And we see the response from OpenAI in multiple places, right? DALL-E 3 is one of them. The Information also reported that OpenAI is gearing up to give us vision. Those of you who have been here know that in pretty much every space since March, we've talked about GPT-4 also being multimodal on the input, and we can probably go into the details of whether or not it's fully multimodal versus Gobi; I would love for you to participate in this. But basically, when they announced GPT-4, they showed a demo where they gave it a screenshot, a sketch of a website, and it was able to code that. And then we never got that feature; the multimodality of GPT-4 didn't ship to us.

[00:32:20] The only people who got it (and Nisten and I interviewed their CEO) are Be My Eyes, an app for blind folks; they put GPT-4 Vision in there to help people with eyesight issues. And it seems that now that Google is finally stepping into the arena, sorry for the pun, we may get GPT-4 Vision very soon.

[00:32:42] I actually saw some screenshots of how it looks inside the ChatGPT GPT-4 interface. And the additional exciting thing is that they have a different model, code-named Gobi, that is apparently in the works at OpenAI, and that one is going to be fully multimodal. So Yam, I would love it if you could repeat what we talked about last night, about the differences, and how GPT-4 is multimodal but not fully.

[00:33:06] I would love for you to expand on this.

[00:33:09] Yam Peleg: Yeah. First, it's important to understand that there is a huge difference in infrastructure between the two companies, and the infrastructure dictates what is possible or not, what is hard or not. From the rumors (nothing is confirmed), the structure and size of GPT-4

[00:33:34] were chosen to fit the hardware, the infrastructure that actually runs the model. It doesn't matter if you have the best model in the world if you cannot serve it. Google is using its own hardware, which it doesn't share with anyone else, and it's important to understand this. So when we see, according to the rumors, that Google is doing

[00:33:58] an insane training run, or preparing to ship or serve an insane model that is multimodal on the input and the output... I think the reason OpenAI didn't release GPT-4 with the image head is simply that it's probably expensive. It's not that easy to deploy something like this, especially not with the number of people who use OpenAI's services.

[00:34:31] And I think this is the reason for what we see at the moment. Now, it's important to understand that according to the rumors (again, nothing is confirmed, take it with a grain of salt, but it makes sense), GPT-4 is first a language model. It was trained as a language model, just a language model.

[00:34:53] And once it was trained, they added an image head to the frozen model. This basically reduced the risk of something going wrong with full end-to-end multimodality. Moreover, it allows you to use the model on its own, and if you want, you can plug in the head.

[00:35:14] It's flexible: you can use the model with or without the head. The thing is that you do pay a price. Again, with a grain of salt, there are caveats to this, but we have already seen multiple times that multimodality, when done right, benefits both modalities.

[00:35:36] So GPT-4 allegedly did not benefit from the multimodality, and this is the difference between GPT-4 and the new rumored model. According to the rumors, the rumored model was trained end-to-end on images and text throughout the whole training. So if everything is true, we should expect a better model even if you just use it for text, because the images influence the text, the text influences the images, and so on and so forth.
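The rumored design Yam describes (train a text-only model, freeze it, then attach a trainable image head that can be plugged in or left out) can be illustrated with a toy sketch. Everything here is hypothetical: the module names, the parameter counts, and the single "soft token" image prefix are assumptions chosen for readability. It shows the general frozen-backbone-plus-adapter pattern, not GPT-4's confirmed architecture.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    n_params: int
    trainable: bool


def trainable_params(modules: list[Module]) -> int:
    # Only unfrozen modules would receive gradient updates when training the adapter.
    return sum(m.n_params for m in modules if m.trainable)


def project(weights: list[list[float]], features: list[float]) -> list[float]:
    # Linear map from image-feature space into the LM's token-embedding space.
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def build_inputs(text_embeddings, adapter_weights=None, image_features=None):
    # Without an image (or without the head), the frozen LM sees only the text sequence.
    if adapter_weights is None or image_features is None:
        return list(text_embeddings)
    # With an image, one projected "soft token" is prepended to the text sequence.
    return [project(adapter_weights, image_features)] + list(text_embeddings)


# Hypothetical sizes: 4-dim token embeddings, 3-dim image features.
lm = Module("language_model", n_params=1_000_000, trainable=False)  # frozen after text pretraining
head = Module("image_adapter", n_params=4 * 3, trainable=True)      # the pluggable image head
print(trainable_params([lm, head]))  # 12

text = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
W = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]
print(len(build_inputs(text)))                        # 2 (text only)
print(len(build_inputs(text, W, [0.5, 0.25, 0.25])))  # 3 (image token + text)
```

The trade-off Yam points out shows up in the structure: the language model's weights never change, so text-only quality cannot benefit from the images, whereas an end-to-end multimodal model would update every parameter on both modalities.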

[00:36:12] Alex Volkov: That's great. I have one follow-up question. You spoke about the benefits of training on both text and vision, and I remember Ilya Sutskever also talked about this, I think with Jensen Huang, the CEO of NVIDIA, and in other places. Could you speak to some of those potential benefits, and how multimodal training on text and images is actually better?

[00:36:37] Yam Peleg: If I remember correctly, Ilya gave the perfect example for this. If you really want, you can describe with text what the color red means, or which objects are red. All of this will be nothing like just seeing the color red. So there is a difference between actually training on images

[00:37:04] versus training on text that describes the images; it's just a different sensation. You could say the world model inside the language model is influenced by the images. I think color is just a great example, and if I remember correctly, that was the example he gave in this interview.

[00:37:27] Alex Volkov: Yeah, absolutely. And I think the other one he mentioned is that it's obviously better at stuff like math or physics, where it's able to actually read the graphs and everything, so it arrives at the answer faster. But also, as Yam correctly pointed out, the world model of this model is way better because it's basically able to see.

[00:37:50] So we have potentially exciting news. One thing I will add: Yam, I think you're correct that OpenAI just didn't want to spend those GPU cycles on the vision model, and being able to attach a vision head is exciting. I do want to highlight that Microsoft likely has

[00:38:08] the bandwidth for that, because Bing has vision. Now, I don't know if it's the full model or if they did some work on it, because the examples I tested with Bing vision gave lower-quality responses on images than I expected from the GPT-4 examples.

[00:38:25] So maybe they did some optimization for speed. But yeah, it definitely feels like the infrastructure was gearing up for this, and hopefully we'll see it soon from OpenAI.

[00:38:36] GPT-3.5 Instruct model from OpenAI

[00:38:36] Alex Volkov: Another thing we saw from OpenAI, and I think this is the last of this bunch of OpenAI updates, is the

[00:38:42] GPT-3.5 Instruct model. Unlike the ChatGPT model, 3.5 Instruct is very similar to how OpenAI's APIs were working before the ChatGPT explosion, right? Before you were able to have a back-and-forth conversation, before it was RLHF'd for conversation purposes. And I saw many, many folks get very excited about

[00:39:05] 3.5 Instruct, because it's very similar to what we had before ChatGPT, but it's much faster. Now, we don't know if it's faster because way fewer people use it since it's new, or because they actually did some Turbo magic on it. We'd love to invite folks on stage, maybe Roy, maybe

[00:39:21] Mr. Yang, to talk about Instruct and the difference between this endpoint in the API and the regular chat endpoint. If you have anything to add here from what you read, please feel free to share.

[00:39:36] Nisten Tahiraj: I used it in the playground to write an agreement for the site, like a privacy agreement.

[00:39:41] It was pretty good for that. It's just annoying that the context window is so small. It's only a 4K context window, and it's more like 3.5K because some of it will be your prompt. I think it has some other very good uses, which we haven't experimented with yet.

[00:40:02] Like, one person got it to play chess very well. And I think it's really worth looking at for stuff like automation, or continuing some work on your desktop, for example with Open Interpreter, where it'll be able to keep generating in that regard. So there are quite a few things to explore there.

[00:40:26] I'm just glad it's cheap and it's good. That's what we want at the end of the day.
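To make the difference Alex asked about concrete: the Instruct model is served through the older Completions endpoint, which takes one raw `prompt` string, while chat models take a list of role-tagged `messages`. The sketch below only builds request bodies and sends nothing. The field names follow OpenAI's public API as we understand it, the 4,096-token window is our understanding of `gpt-3.5-turbo-instruct`'s limit, `prompt_tokens` is assumed to come from a tokenizer such as tiktoken, and the helper names are hypothetical.

```python
CONTEXT_WINDOW = 4096  # assumed window for gpt-3.5-turbo-instruct, shared by prompt and completion


def instruct_request(prompt: str, prompt_tokens: int, top_logprobs: int = 5) -> dict:
    """Body for POST /v1/completions: a single raw prompt, no roles."""
    if prompt_tokens >= CONTEXT_WINDOW:
        raise ValueError("prompt alone fills the context window")
    return {
        "model": "gpt-3.5-turbo-instruct",
        "prompt": prompt,
        # Prompt and completion share the window, so the answer gets what is left.
        "max_tokens": CONTEXT_WINDOW - prompt_tokens,
        # Ask for the top-N alternative tokens (with log-probabilities) at each step.
        "logprobs": top_logprobs,
    }


def chat_request(user_message: str) -> dict:
    """Body for POST /v1/chat/completions: a list of role-tagged messages."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": user_message}],
    }


# 600 is an assumed token count for illustration, not a measured one.
req = instruct_request("Write a short privacy agreement for a website.\n\n", prompt_tokens=600)
print(req["max_tokens"])                           # 3496
print(chat_request("Hi!")["messages"][0]["role"])  # user
```

The budget arithmetic is Nisten's point in numbers: a 600-token prompt leaves at most 3,496 tokens for the answer.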

[00:40:34] Alex Volkov: Yeah, it's cheap. I think many folks were surprised by the chat interface; they had to switch to ChatGPT to get the benefits and the speed, and now they're happy to have the Instruct model of old back. They also added logprobs.

[00:40:47] So I would love to ask folks on stage, because I'm not entirely sure what logprobs are in the API response, and I saw Alex Graveley and some other folks getting excited about logprobs. But first, I want to say hello to Raunak, if I'm pronouncing this correctly. We're going to talk about Remembrall in a few minutes, but if you have comments on the Instruct API and logprobs, feel free to share.

[00:41:18] Raunak Chowdhuri: Yeah, I do. Logprobs are awesome. They basically give you token-level probability distributions from the model. Normally, when you're querying GPT-4 or GPT-3, you just get words back. What logprobs let you do is see the probability distribution output by the model, the one that normally gets sampled according to, like, the temperature parameter.

[00:41:43] You can use that to do a lot of really interesting things. For example, if you're asking GPT to solve a multiple-choice question, it's really useful to understand the model's confidence in whether it's A, B, C, or D, and you can get that directly from the model by examining the probability distribution in the logprobs.
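A minimal sketch of the multiple-choice trick Raunak describes: take the top alternatives reported for the first answer token, turn the log-probabilities back into probabilities, and renormalize over the answer letters. The sample numbers are invented for illustration and the helper name is hypothetical. Tokenizers often produce variants like `" B"` with a leading space, so the sketch merges those into the same letter.

```python
import math


def choice_confidence(top_logprobs: dict[str, float], choices=("A", "B", "C", "D")) -> dict[str, float]:
    probs = {c: 0.0 for c in choices}
    for token, logprob in top_logprobs.items():
        letter = token.strip()  # treat "B" and " B" as the same answer
        if letter in probs:
            probs[letter] += math.exp(logprob)  # log-probability back to probability
    total = sum(probs.values())
    if total == 0:
        raise ValueError("none of the answer letters appear in the top logprobs")
    # Renormalize over the letters only; any other candidate tokens are ignored.
    return {c: p / total for c, p in probs.items()}


# Invented top-5 logprobs for the first generated token.
sample = {"B": -0.2, "A": -2.5, " B": -3.5, "C": -4.0, "D": -5.0}
confidence = choice_confidence(sample)
best = max(confidence, key=confidence.get)
print(best)  # B
```

A low winning probability is a useful signal to re-ask, add context, or flag the answer for review.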

[00:42:05] So it actually provides a lot more insight into what the model is thinking, and I think that's a pretty useful technology. You can do a lot of clever things with it. For example, someone built something called JSONformer, which is basically a tool that, if you have a model that exposes logprobs, lets you sample only the tokens

[00:42:24] that are valid JSON tokens and construct a response that is very much aligned with the format you want. So I think that's a pretty powerful tool.
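The JSONformer idea can be sketched like this: the program writes the fixed JSON structure (braces, keys, commas) itself and, for each value slot, keeps only the candidate tokens that are valid there before picking the most likely one. This is a simplified illustration, not JSONformer's code. The per-slot candidate logprobs are invented, `schema` maps each key to a hypothetical set of allowed value tokens, and a real implementation works over the model's full vocabulary and multi-token values.

```python
import json


def constrained_pick(candidates: dict[str, float], allowed: set[str]) -> str:
    # Mask: keep only tokens the format allows at this position.
    valid = {tok: lp for tok, lp in candidates.items() if tok in allowed}
    if not valid:
        raise ValueError("no allowed token among the model's candidates")
    # Greedy decoding over whatever survives the mask.
    return max(valid, key=valid.get)


def fill_json(schema: dict[str, set[str]], slot_logprobs: dict[str, dict[str, float]]) -> str:
    # The program emits the structure; the model only fills each value slot.
    fields = [f'"{key}": {constrained_pick(slot_logprobs[key], allowed)}' for key, allowed in schema.items()]
    return "{" + ", ".join(fields) + "}"


schema = {"is_flight": {"true", "false"}, "seats": {"1", "2", "3"}}
# Invented candidate logprobs per slot; the unconstrained favorites would break the JSON.
slot_logprobs = {
    "is_flight": {"Sure": -0.2, "true": -1.9, "false": -2.6},
    "seats": {"maybe": -0.4, "2": -1.1, "1": -2.0},
}
result = fill_json(schema, slot_logprobs)
print(result)              # {"is_flight": true, "seats": 2}
print(json.loads(result))  # {'is_flight': True, 'seats': 2}
```

Without the mask, greedy decoding would have emitted "Sure" and "maybe", which is exactly the chatty, unparseable output that format-constrained sampling avoids.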

[00:42:36] Alex Volkov: Thank you, Raunak. I remember JSONformer and didn't know they used logprobs for that. So here you have it, folks.

[00:42:43] There's a new endpoint for your use cases that now exposes token probabilities, so you can use it to build better tools and different types of tools. And Raunak, would you care to introduce yourself briefly? I will ask again once we record your section, but feel free to introduce yourself.

[00:43:03] Raunak intro

[00:43:03] Raunak Chowdhuri: Yeah, absolutely. I'm a senior at MIT, graduating in a couple of months. My background is in machine learning and artificial intelligence, and I've been doing research in this area for quite a few years now. I'm working on some interesting projects that we'll dive into later, but basically, I'm building long-term memory for language models.

[00:43:25] Bard Extensions allow access to Gmail, YouTube, Drive

[00:43:25] Alex Volkov: Awesome, thank you. Thank you for coming up and for explaining logprobs as well. All right, the next thing I want to talk about, really briefly because it's not that great, is Bard from Google. Before we get to Gemini, before we hear about Google's explosive GPT-4-competing model, right now we have Bard. For some reason we also have Google Assistant, and I'm not sure how LLMs are involved there. But Bard is something that some folks on stage here use, and for some reason I was never very excited about it.

[00:44:00] However, they just released a few updates to Bard, and they say this is the best Bard ever. It feels very Googly, very product-manager-y to me, at least. What they released is something called Extensions. So if you used Bard before and haven't touched it in a while, like me, if you go to Bard right now you'll have the chance to...

[00:44:22] update it with Extensions. Those extensions can access all of your Gmail, all of your Google Drive, YouTube, and some other ones I'm trying to remember. The cool thing about this, which I actually like, is the UI: you can type an @ sign, like when you mention somebody on Twitter.

[00:44:38] And then you have access to those extensions. It's a different take on plugins than ChatGPT's, where with ChatGPT plugins you have to be in that mode, it decides for you, blah, blah, blah. Here you can actually say @Gmail and then ask it questions, and it will go and search your Gmail account and give you back answers in natural text.

[00:44:56] So, conceptually pretty cool, right? We all use Gmail, or at least most of us do, so being able to get summaries of the latest emails, blah, blah, blah, is conceptually very cool. Google Docs as well: you can tag Google Docs, you can do Google Drive. Oh, Google Maps is the other one.

[00:45:10] So you can say, hey, what are some things to do in San Francisco, Seattle, whatever, and it will give you answers. The thing I was really surprised by is just how bad it is, honestly. If there are folks in the audience who work on Bard, I apologize. Sometimes we say these things, but there are so many people working on this stuff.

[00:45:31] And they work nights and weekends, they don't see family. So I apologize. Just from a comparison standpoint, in my experience, I was really disappointed that Google, this huge company that created Transformers for us, isn't afraid to release something this bad.

[00:45:50] And by bad, I mean specifically: I literally used two of the extensions. One is Gmail. I asked it about my upcoming flight to San Francisco, which I told you guys about; I'm going to be at the AI Engineer event as a media person. It couldn't find any information about this flight and just gave me flights from the past.

[00:46:07] I literally asked, give me flights in the future, give me my upcoming flights, and it gave me flights from the past. It also gave me two trips to the Denver museum, which are not flights. So yeah, we know LLMs hallucinate, blah, blah, blah. But if you put your brand behind this, you're Google, you put Gmail in there, and you cannot do a basic search, that's upsetting.

[00:46:30] So I said, all right, I'll give it another try. I tried YouTube, and I asked: what does MKBHD (Marques Brownlee; if you guys don't follow him, he's a great tech reviewer) think about the latest iPhone? It went to YouTube, searched, and gave me Marques's videos from last year about the iPhone 14. I literally took the same string I pasted into Bard, went to the YouTube interface, pasted it into YouTube search, and got his latest videos about the iPhone 15.

[00:46:58] So I was thinking, why would I ever use this if the first two searches didn't work, when this is the whole promise of it? Again, not to be negative; I don't love being negative. It's just from a comparison standpoint. I really have to wonder how many folks at Google are trying to rush through the LLM craze.

[00:47:19] We remember Sundar Pichai saying AI, AI, AI, AI on stage like 48 times, right? And they're shoving AI in everywhere. For me, it just wasn't that useful. Tzafrir, I see your hand up. I would love to hear from folks on stage about your experience with Bard and these new extensions.

[00:47:41] Tzafrir Rehan: I don't have much experience with it, actually, for the same reasons you mentioned. But I want to give the perspective that what we're seeing here is Google jumping in early to stay in the game. Maybe they didn't expect ChatGPT to go viral that big, that fast. This was developed like a sci-fi technology, and suddenly it's a household item overnight.

[00:48:09] But if you're talking about Google (and I actually worked at Google for three years, about a decade ago), it's a company that can make very big moves, very slowly. Gmail data and Drive data are the holiest of holies in terms of privacy. If you're an engineer at Google and you want to touch that data, to read even a single byte, you need to go through quarters of legal meetings.

[00:48:41] So the fact that they are going in this direction indicates a course heading: they took the time to think it through and decided, yes, we are making this very risky move in terms of privacy and user expectations, because they believe in the value. So let's see where they get to once it's actually fully implemented.

[00:49:05] Because I think right now, what we are seeing is a rushed out version.

[00:49:09] Alex Volkov: I agree. That's definitely how it feels: the basic stuff, like a keyword search, works better than this search, and they're basically hitting the API they have behind it. It definitely feels rushed: very polished UI-wise, very safe, very protective, very Googly, but not super helpful.

[00:49:27] I think at this point... yeah, I think this is most of the news, unless I'm missing something. Let me look in the template I already drafted for myself and see if we have any more things to cover before we move on to the interviews. So yes, there's one last thing I wanted to find; I'll just find it.

[00:49:48] It's called Chain of Density. I think it was a paper first, and I'll share it. Sorry, not in the chat, in the Jumbotron. Somebody released a paper on this, and then I think Harrison from LangChain reposted it and put it up on their website with prompt sharing, where you can play with prompts. Chain of Density is a new method that is really, really good at getting summaries out of ChatGPT and other models like Claude as well.

[00:50:21] I think it's really cool, and I just posted it on top. It asks for four summaries with more and more density, right? It starts with, hey, summarize this text or article, and then asks for a JSON response with four summaries. For the second one: from the first summary you just gave me, extract the entities that were missing, give me another summary with those entities, and then do it again and again. And I think there's some cool prompt magic in there that says something to the tune of: make sure this can be understood on its own, so the person doesn't have to read the article to understand the summary.
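A rough sketch of the Chain of Density loop as a reusable prompt plus a parser for the JSON it asks for. The wording paraphrases the idea described here (add missing entities each round at the same length, keep every summary self-contained); it is not the paper's verbatim prompt. The number of rounds is a parameter: the episode mentions four, and our understanding is that the published prompt uses five. The JSON keys, function names, and the fake model output are our own assumptions.

```python
import json


def chain_of_density_prompt(article: str, rounds: int = 5) -> str:
    # Paraphrase of the Chain of Density idea, not the paper's verbatim prompt.
    return (
        f"Article:\n{article}\n\n"
        f"You will write {rounds} increasingly dense summaries of the article above.\n"
        "Repeat these two steps for each round:\n"
        "1. List 1-3 informative entities from the article that are missing from the previous summary.\n"
        "2. Rewrite the summary at the same length so it covers every entity from the previous "
        "summary plus the missing ones.\n"
        "Each summary must be self-contained: understandable without reading the article.\n"
        'Answer in JSON: a list of objects with keys "missing_entities" and "denser_summary".'
    )


def densest_summary(model_output: str) -> str:
    # The last round is the densest; earlier rounds are kept only for inspection.
    rounds = json.loads(model_output)
    return rounds[-1]["denser_summary"]


# Fake model output for illustration; a real one would come back from the LLM.
fake_output = json.dumps([
    {"missing_entities": "", "denser_summary": "An article about a new summarization prompt."},
    {"missing_entities": "Chain of Density; LangChain",
     "denser_summary": "Chain of Density, shared on LangChain, densifies summaries."},
])
print(densest_summary(fake_output))
```

Asking for every round in one JSON response keeps it to a single model call, and keeping the intermediate rounds lets you pick a less dense summary if the last one gets too terse.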

[00:51:00] I've personally gotten really good summaries with this technique, so much so that I've added it to my snippets, where I keep different prompts. If you are doing any type of summarization, definitely check it out. Nisten, I saw your hand up briefly, if you want to comment on this.

[00:51:16] Nisten Tahiraj: Yeah. The first person I knew who got a contract as a prompt engineer actually used this technique a lot last year. The way he explained it: when you compress an idea and then extrapolate, that's how creativity happens in general. You compress, you extrapolate out of it, you compress, and then you extrapolate again.

[00:51:36] So it's pretty interesting that someone did this in a much more systematic way. I'm, I'm going to check it out.

[00:51:43] Alex Volkov: Chain of Density. I wanted to ping back real quickly on the compression part, because I saw your tweet, and there was a paper about compression as well. And Ilya gave a talk about compression recently.

[00:51:55] I wanted to see if you want to talk about that compression part and the paper briefly; if not, that's also okay and we can move on. I just think this was also this week.

[00:52:07] Yam Peleg: Yeah, I had some controversial opinions in the last couple of weeks, and as it turns out, papers that support them are coming out after them.

[00:52:19] But yeah, I highly, highly suggest reading the compression paper. Basically, it conveys the idea that what we are actually doing is, I want to say, reversing the process that generates the data.

[00:52:39] And if you think about it, the process that generates the data is us. I don't want to say the words I shouldn't; I got some heat for them, but you can find them in my tweets. It's a really good paper. It's much more scientific, you could say, than other papers that talk about intelligence, about general intelligence, and poke at this idea. I highly recommend reading it if you're interested in this part of what we're doing.

[00:53:13] It doesn't prove anything because, general intelligence is a, is a big thing, but it. It is it is interesting the ideas there are, are, are solid and great to see.
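One concrete, well-established piece of the "intelligence is compression" idea discussed here: a model that assigns probability p to each next symbol can drive an arithmetic coder that needs about -log2 p bits for that symbol, so better prediction means shorter encodings. The toy sketch below only computes those ideal code lengths (it doesn't implement an actual coder) and uses per-character frequencies as a stand-in for a language model; an LLM would instead condition each prediction on all earlier tokens. Note that the fitted model is estimated from the same text, so the cost of describing the model itself isn't counted here.

```python
import math
from collections import Counter
from typing import Dict


def code_length_bits(text: str, model: Dict[str, float]) -> float:
    """Ideal number of bits to encode `text` under `model`.

    An arithmetic coder driven by `model` approaches -log2 p(symbol) bits per
    symbol, so the total is the sum of those costs.
    """
    return sum(-math.log2(model[ch]) for ch in text)


def unigram_model(text: str) -> Dict[str, float]:
    """Per-character frequencies: a very weak stand-in for an LLM's predictions."""
    counts = Counter(text)
    total = len(text)
    return {ch: n / total for ch, n in counts.items()}


text = "aaaaaaab"
uniform = {"a": 0.5, "b": 0.5}  # knows nothing: 1 bit per character
fitted = unigram_model(text)    # predicts 'a' much better

print(code_length_bits(text, uniform))  # 8.0
print(round(code_length_bits(text, fitted), 2))  # 4.35
```

The better predictor needs roughly half the bits for the same text, which is the intuition behind using LLMs as compressors.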

[00:53:24] Alex Volkov: Yeah, I've heard this comparison, or metaphor, multiple times: that intelligence is compression, compressing a lot of ideas into... First of all, it relates to natural language. Our ability to understand something and put it into words, that's compression.

[00:53:39] Obviously there's Feynman's quote, that you really understand something if you can explain it to a five-year-old, which is also about compressing something down and being able to explain it. So I've heard this multiple times, and it's great to see that there are now papers about this. And continuous compression, like Nisten said, actually...

[00:53:54] Actually brings out better results. And on the topic of literal compression, I know this is confusing, there was also another paper worth checking out from this week, where they actually used LLMs and other transformers for actual data compression, compared against formats like PNG or JPEG, and I think they saw very interesting compression results as well. I don't remember if I have a tweet for that, but be on the lookout for multiple types of compression as we move forward. Thank you. With that, I think we are ready to move on to our guests here on stage and talk about two exciting things.

[00:54:30] So, first of all, actually three exciting things. One of them is, Nisten, you had a visit to Geoffrey Hinton's lab, and I wanted to hear a brief story about it from you. After that, we're going to talk with Arthur and Xenova about WebGPU and do a brief interview about running models locally in the browser.

[00:54:47] And then at the end, we're going to talk about remember all with Ronak and his exciting approach to extending context windows. So with that, I'll give a brief summary of the space today and some logistics, and then we can get started with the second part of ThursdAI. So again, for everybody in the audience who is just joining in the middle, or who joins us from week to week:

[00:55:08] ThursdAI is about staying up to date together and giving updates every week, so that folks don't have to follow everything, because that's almost impossible. I'm very happy to be joined by folks from different disciplines, who can answer questions, fill in details, and find new things to get excited about in AI

[00:55:28] from different fields every week here on stage. We also have a podcast and a newsletter. If you're new and just joined us, you can sign up for the newsletter as well. We stay up to date so you don't have to; that's the model. The first part of the show is usually updates from the past week and breaking news.

[00:55:46] There's another breaking news item, something about YouTube, but I think we'll cover it next time, unless folks here want to read up on it and give us an update at the end. The second part is usually a deep dive into different conversations with guests. Today we have Arthur and Ronak to talk about different, very exciting things.

[00:56:05] And we'll start with Nisten's brief foray into the lab. Yeah, Nisten, give us a few minutes on your excursion.

[00:56:16] Nisten Tahiraj: Well, I've been going to the Vector Institute as a guest for over a year, a year and a half, and this time I went in, and I'd never met Far El in real life.

[00:56:28] I didn't even know what he looked like. It was just some dude from GitHub. So I invited him in, and we were going to work on making a bootable OS that boots straight into a GGML model and then hopefully gets Chromium running with WebGPU. Before that, I had made a tiny 4.7 GB ISO that includes an entire Llama 7B model and an entire Linux distro.

[00:56:54] I used Slackware, since it's the smallest, and I've used it for like 20 years. So we were in the lab, and eventually he's like, let's just try to get the whole thing working. Let's just try to get the mixture of experts running.

[00:57:14] Let's just do it all at once and see where we get stuck. Anyway, I had to call another friend who is an extremely good DevOps engineer to help. Long story short, I couldn't get it to run on the GPU because there were no instances available, and I only had an A10 with 24 GB, and the mixture-of-experts model needs more than that because it has 32 experts.

[00:57:39] So I had to run it on the CPU, and that's what we spent the entire day and evening on. It was really slow, but then we realized this is probably the first time someone has effectively run a mixture-of-experts model on a CPU. Again, you can check out the repo.

[00:57:58] I made a CPU branch, the V1 branch, if you really want to get it to work. But yeah, that was the story. I just met a random person from Twitter, who was in their Discord, for the first time, and it was fun. And the funniest part was that Colin Raffel, who has been teaching about mixture of experts and writing a lot of the papers, happened to be there, and we looked behind us and he was literally like five desks away.

[00:58:30] And I was just taken aback, like, oh, holy cow, he's here. And he had no idea who we were or anything. So yeah, that was fun.

[00:58:39] Alex Volkov: If you don't mind, let me complete this story from what you've told me multiple times, because I think it's way more colorful than you let on. First of all, the Vector Institute is the lab of Geoffrey Hinton, the godfather of AI, right?

[00:58:52] This is the lab. He's widely considered the person who basically kickstarted this whole field. Is that the lab? Was he there?

[00:59:02] Nisten Tahiraj: Yeah, yeah, yeah. Ilya Sutskever was a student of his. He wasn't there; he's rarely there. He only has one PhD student under his wing this year.

[00:59:12] So he comes in very rarely. But yeah, Ilya Sutskever was in the smaller lab, before they moved here. Also Aidan Gomez, one of the authors of the Transformer paper, still comes there every once in a while. He was there regularly up until Cohere got funded last year. So yeah, this is the lab, and it's pretty funny, because everyone's very, very academic, and we're just straight up hacking on whatever we can find.

[00:59:45] Alex Volkov: So the second thing I wanted to cover is exactly what you built in Geoffrey Hinton's lab, because he's now very public about AI doomerism, about the different potentially bad things that could happen with AI, about not open sourcing, and about regulation. He's very public.

[01:00:04] He's in every news outlet. And here you are, you and Far El, working on an ISO, a bootable AI disc that you can literally run offline, with Llama, an offline LLM, on it. Which basically says: even if they regulate, you can just take an old CPU-based machine and run this thing. So you're basically democratizing AI in the lab of the person who's now very, very vocal about stopping it.

So that's the second part that I personally really enjoy.

[01:00:31] Nisten Tahiraj: It's not just that. If you listen beyond what the news media shows, it's a lot more complex than that. He wants people to acknowledge that the risks are real and to show that they are mitigating them. But at the same time, he's been doing research on molecularly grown chips.

[01:00:51] And that architecture didn't work at first, so they're still going full speed ahead. The reason they went that way was to say to a lot of the community: just don't act like idiots, just regulate yourselves. That was why they were challenging that.

[01:01:09] It's a lot more complex than people realize. And the professor there, Colin, has been a big pusher for democratizing and open sourcing models in general. So yeah, it's a lot more nuanced than what you see in the media. And when you think about it, the safest form of AI you can have is one that you can literally unplug and have full control over; there is nothing safer than that.

[01:01:40] Otherwise, you're just trusting some incompetent politician with regulatory or other legal hacks to control it. So, I want to say, it's a lot more nuanced than what you see in media snippets and reactionary Twitter takes.

[01:01:58] Alex Volkov: Yeah, I hear you. We'll definitely check out the nuances of Geoffrey Hinton's position. On that topic, very briefly, before Arthur (apologies, we'll get to you in just a second), something else happened this week. Yann LeCun, the GOAT, aka Meta's chief AI scientist, went in front of the Senate a couple of days ago, I think. I just pinned a tweet to the top, posted by Nisten, which Yann actually retweeted. He gave an incredible opening statement about why open sourcing is very important and why they open sourced Llama, pointing out that they open sourced Llama 1 and the sky didn't fall. He also outlined a bunch of the safety protocols they took into account when they released Llama 2. First of all, I think it's very important to have somebody like Yann in front of the Senate, talking to legislators and regulators about regulation, because we see more and more of this.

[01:02:52] I think you brought up last week that there was another discussion, where Elon Musk and Sundar Pichai were there. Everybody was there talking about AI and how to regulate it. I think it's very important to have voices like Yann LeCun talking about these things with clarity and with safety in mind.

[01:03:07] So I definitely recommend everybody check out his opening statement, because, you know, with the doomers it's very easy to scare people, especially on engagement-bait networks like X. It's very easy to take something people don't understand and use it to scare folks. So I think it's very important to have very clear, very credentialed people who understand this world explain that there are benefits, and how open source can be beneficial as well.

[01:03:36] And I think you also mentioned how excited the open source community was about the Llama 2 release. I want to believe that we all had a small, tiny part to play in that. So yeah, we're definitely on Yann LeCun's map, and his statement is definitely worth checking out. With that, Nisten, thank you for sharing your story.

[01:03:52] Tell us more about your escapades at the Vector lab, and if you get to meet Geoffrey Hinton, tell him about ThursdAI, and Colin too.

[01:03:59] Alex Volkov: All right, folks, this actually concludes the two hours we've allotted for ThursdAI today. I know there are many folks here. I see Dave in the audience. What's up, Dave? I see other folks just stepping in, and it's with some sadness, because I want to keep talking with all of you.

[01:04:20] But there's also a need to transcribe this and put it into newsletter and podcast form. ThursdAI is here every week. We've been here literally every week since GPT-4 came out, I think. Did I miss one week, on vacation? Yeah, the newsletter came out, but we didn't talk that week, and I felt like, oh, I miss my guys.

[01:04:37] I miss my friends. We need to get up to date together. So we're here every Thursday; there's always so much to talk about. I just want to highlight how boring this would have been without friends like Nisten, Xenova, Arthur, now the new friend of the pod, it's a freer, and some other folks who stepped away, Yam and Far El, and for real, many, many other folks who join from week to week.

[01:04:58] And help us bring you, the audience, the best AI news roundup possible on X, slash Twitter. We're now almost six or seven months into this, and it has opened many, many opportunities for many folks on stage, including myself. I'm going to the AI Engineer Conference as a media person, and I'm going to do some spaces from there.

[01:05:19] If you're at the AI Engineer Conference in a couple of weeks, definitely reach out and we'll talk there. With that, I just want to say: without the audience here, this would also be very, very boring. So thank you for joining from week to week. Thank you for listening, for tuning in, and for subscribing.

[01:05:34] Thank you for sharing with your friends, and thank you for leaving comments as well. With that, I want to wish you a happy Thursday. I'm sure there are going to be many, many new things released just today, but we can only cover so much. Thank you, folks. Have a nice rest of your Thursday.

[01:05:49] We'll meet you here next week. Cheers. Have a good one.