Hello hello everyone, this is Alex, typing these words from beautiful Seattle (really, it only rained once while I was here!) where I'm attending Microsoft's biggest developer conference, BUILD.

This week we saw OpenAI in the news from multiple angles, none of them positive, and Microsoft clapped back at Google's announcements from last week with tons of new AI product announcements (Copilot vs Gemini) and a few new PCs with NPUs (Neural Processing Units) that run alongside the CPU/GPU combo we're familiar with. Those NPUs allow AI to run locally on these devices, making them AI native devices!

While I'm here I also had the pleasure of participating in the original AI Tinkerers, thanks to my friend Joe Heitzberg who operates and runs aitinkerers.org (of which we are a local branch in Denver). It was amazing to see tons of folks who listen to ThursdAI and read the newsletter, and to talk about Weave and evaluations with all of them! (Btw, on the left is Vik from Moondream, which we covered multiple times.)

Ok let's get to the news:

TL;DR of all topics covered:

Open Source LLMs

Big CO LLMs + APIs

Microsoft Build recap - New AI native PCs, Recall functionality, Copilot everywhere

Will post a dedicated episode to this on Sunday

OpenAI pauses GPT-4o Sky voice because Scarlett Johansson complained

Microsoft AI PCs - Copilot+ PCs (Blog)

Anthropic - Scaling Monosemanticity paper - about mapping the features of an LLM (X, Paper)

Vision & Video

OpenBMB - MiniCPM-Llama3-V 2.5 (X, HuggingFace)

Voice & Audio

OpenAI pauses Sky voice due to ScarJo hiring legal counsel

Tools & Hardware

Humane is looking to sell (blog)

Open Source LLMs

Microsoft open sources Phi-3 mini, Phi-3 small (7B) Medium (14B) and vision models w/ 128K context (Blog, Demo)

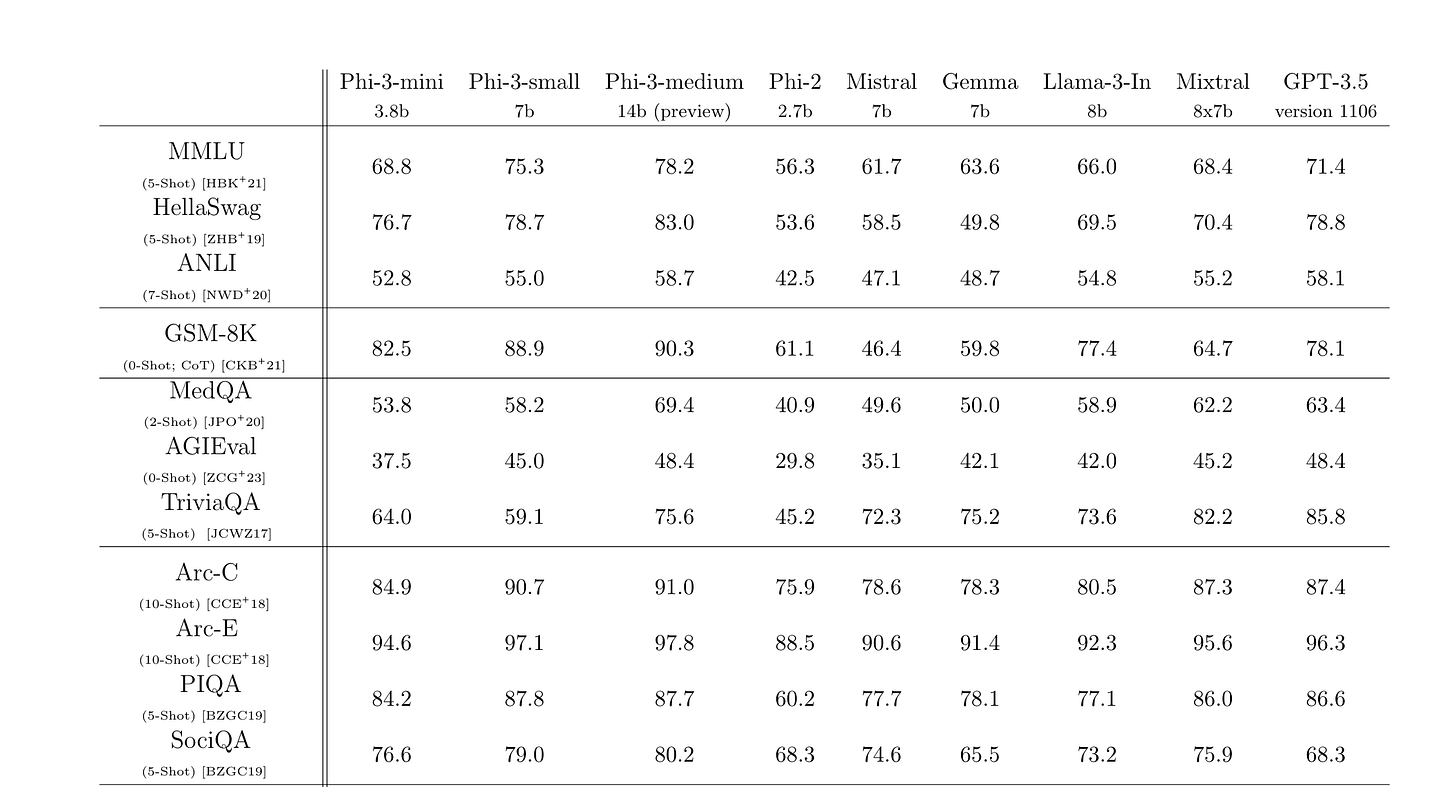

Just in time for Build, Microsoft has open sourced the rest of the Phi family of models, specifically the small (7B) and the medium (14B) models, on top of the mini one we previously knew simply as Phi-3.

All the models have a small context version (4K or 8K) and a large one that goes up to 128K (tho they recommend using the small one if you don't need that whole context), and all can run on device super quick.

These models are MIT licensed, so use them as you will, and they deliver incredible performance on benchmarks relative to their size.

Phi-3 mini received an interesting split in the vibes: it was really good at reasoning tasks but not very creative in its writing, so some folks dismissed it. It's hard to dismiss these new releases though, especially when the benchmarks are that great!

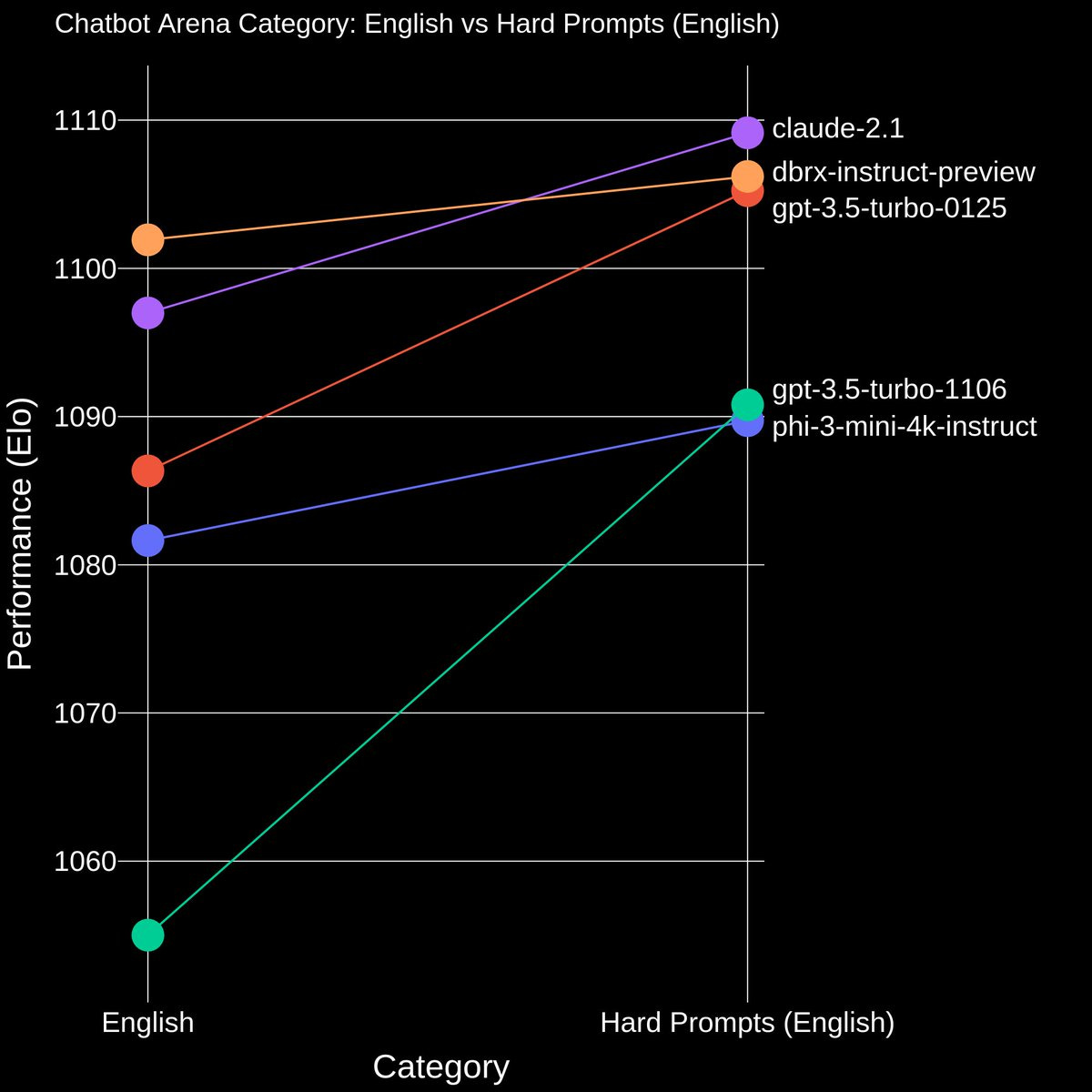

LMSYS just updated their arena to include a hard prompts category (X), which selects for complex, specific and knowledge-based prompts and scores the models on those. Phi-3 mini actually gets a big boost in Elo ranking when filtered on hard prompts and beats GPT-3.5 😮 Can't wait to see how the small and medium versions perform on the arena.

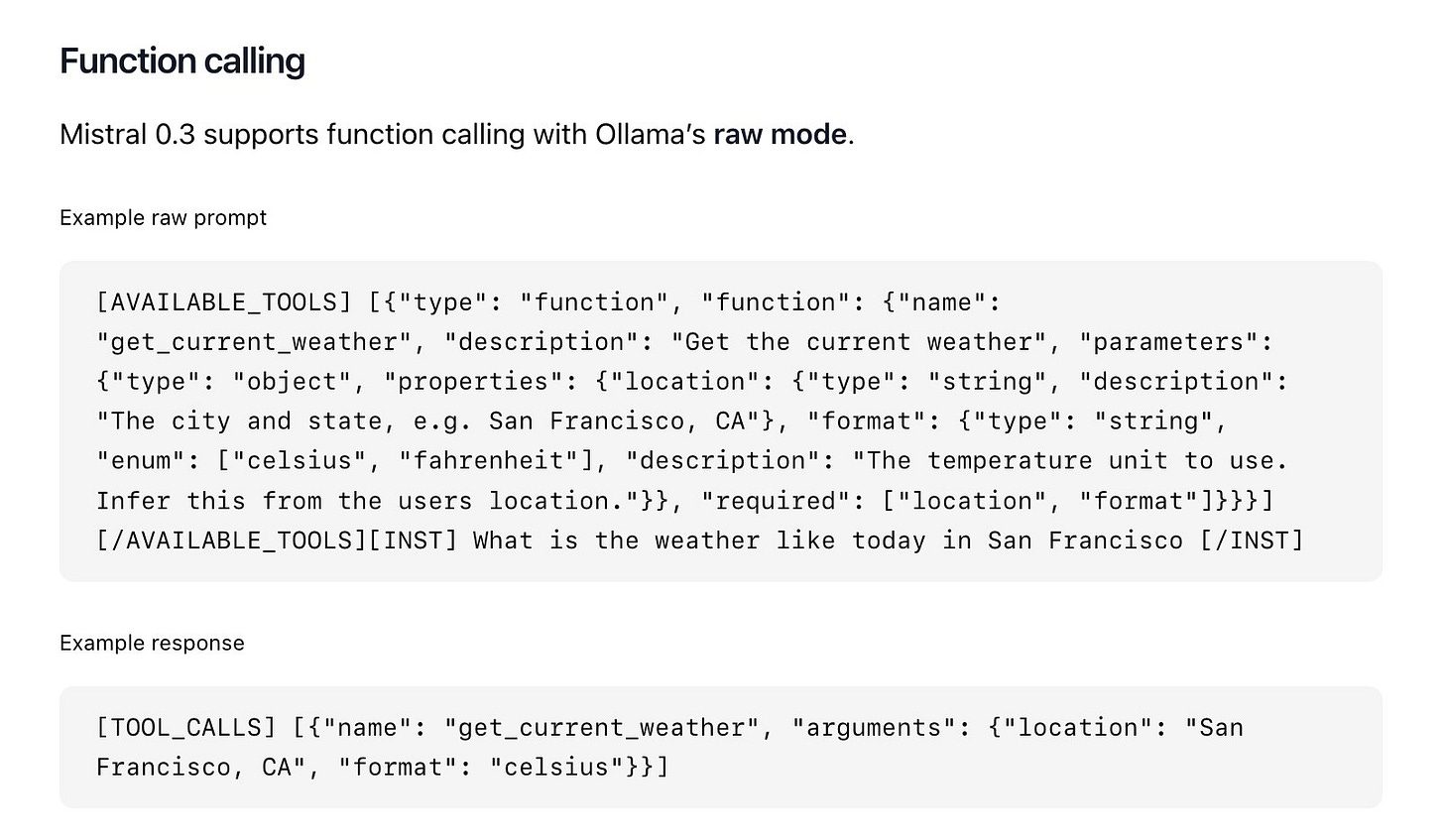
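For intuition on why filtering to one category can reshuffle a leaderboard, here is a minimal sketch of the classic Elo update applied to pairwise votes. The formula is the standard Elo one; everything else is an assumption for illustration: the battle data is made up, the model names `small` and `big` are hypothetical, and the arena's real scoring pipeline may well be computed differently.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

def update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return new (rating_a, rating_b) after one battle; score_a is 1.0 if A won, 0.0 if B won."""
    ea = expected_score(rating_a, rating_b)
    delta = k * (score_a - ea)
    return rating_a + delta, rating_b - delta

def rate(battles, category=None, start=1000.0):
    """Replay battles in order, optionally keeping only one prompt category."""
    ratings = {}
    for model_a, model_b, winner, cat in battles:
        if category is not None and cat != category:
            continue
        ra = ratings.get(model_a, start)
        rb = ratings.get(model_b, start)
        score_a = 1.0 if winner == model_a else 0.0
        ratings[model_a], ratings[model_b] = update(ra, rb, score_a)
    return ratings

# Made-up votes: (model_a, model_b, winner, category). Not real arena data.
battles = [
    ("small", "big", "big", "easy"),
    ("small", "big", "small", "hard"),
]

all_ratings = rate(battles)
hard_ratings = rate(battles, category="hard")
print(all_ratings)
print(hard_ratings)
```

The same votes produce different ratings depending on which subset you replay, which is the effect the hard prompts filter exposes.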

Mistral gives us function calling in Mistral 0.3 update (HF)

Just in time for the Mistral hackathon in Paris, Mistral has released an update to the 7B model (and likely will update the MoE 8x7B and 8x22B Mixtrals) with function calling and a new vocab.

This is awesome all around, because function calling is important for agentic capabilities and it's about time all companies have it. Apparently the way Mistral has built it in matches the Cohere Command R way, and it's already supported in Ollama using raw mode.
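If you're new to function calling, the general loop looks roughly like the sketch below: you describe your tools to the model, the model emits a structured call, and your code parses it and runs the matching function. This is a generic illustration and not Mistral's actual prompt format or API. The `get_weather` tool, the schema shape, and the hard-coded "model output" string are all hypothetical stand-ins.

```python
import json

# Hypothetical tool; a real app would hit a weather API here.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

# Registry mapping tool names the model may emit to Python callables.
TOOLS = {"get_weather": get_weather}

# JSON-schema style description you would hand to the model (shape is an assumption).
TOOL_SPECS = [{
    "name": "get_weather",
    "description": "Get the current weather for a city",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]

def dispatch(model_output: str) -> str:
    """Parse a tool call emitted by the model and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"Model asked for unknown tool: {name}")
    return TOOLS[name](**args)

# Stand-in for what a function-calling model might emit.
fake_model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
result = dispatch(fake_model_output)
print(result)
```

In a real agent you would send `result` back to the model as a tool message so it can write the final answer.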

Big CO LLMs + APIs

OpenAI is not having a good week - Sky voice paused, employees complain

OpenAI is in hot water this week, starting with pausing the Sky voice (arguably the best, most natural sounding voice out of the ones that launched) due to complaints from Scarlett Johansson about this voice being similar to hers. Scarlett's appearance in the movie Her, and Sam Altman tweeting "her" to celebrate the release of the incredible GPT-4o voice mode, were all talked about when ScarJo released a statement saying she was shocked when her friends and family told her that OpenAI's new voice mode sounds just like her.

Spoiler, it doesn't really. They hired an actress and have had this voice out since September last year, as they outlined in their blog following ScarJo's complaint.

Now, whether or not there's legal precedent here, given that Sam Altman reached out to Scarlett twice, including once a few days before the event, I won't speculate. But for me, personally, not only does Sky not sound like ScarJo, it was my favorite voice even before they demoed it. I'm really sad that it's paused, and I think it's unfair to the actress who was hired for her voice. See her own statement:

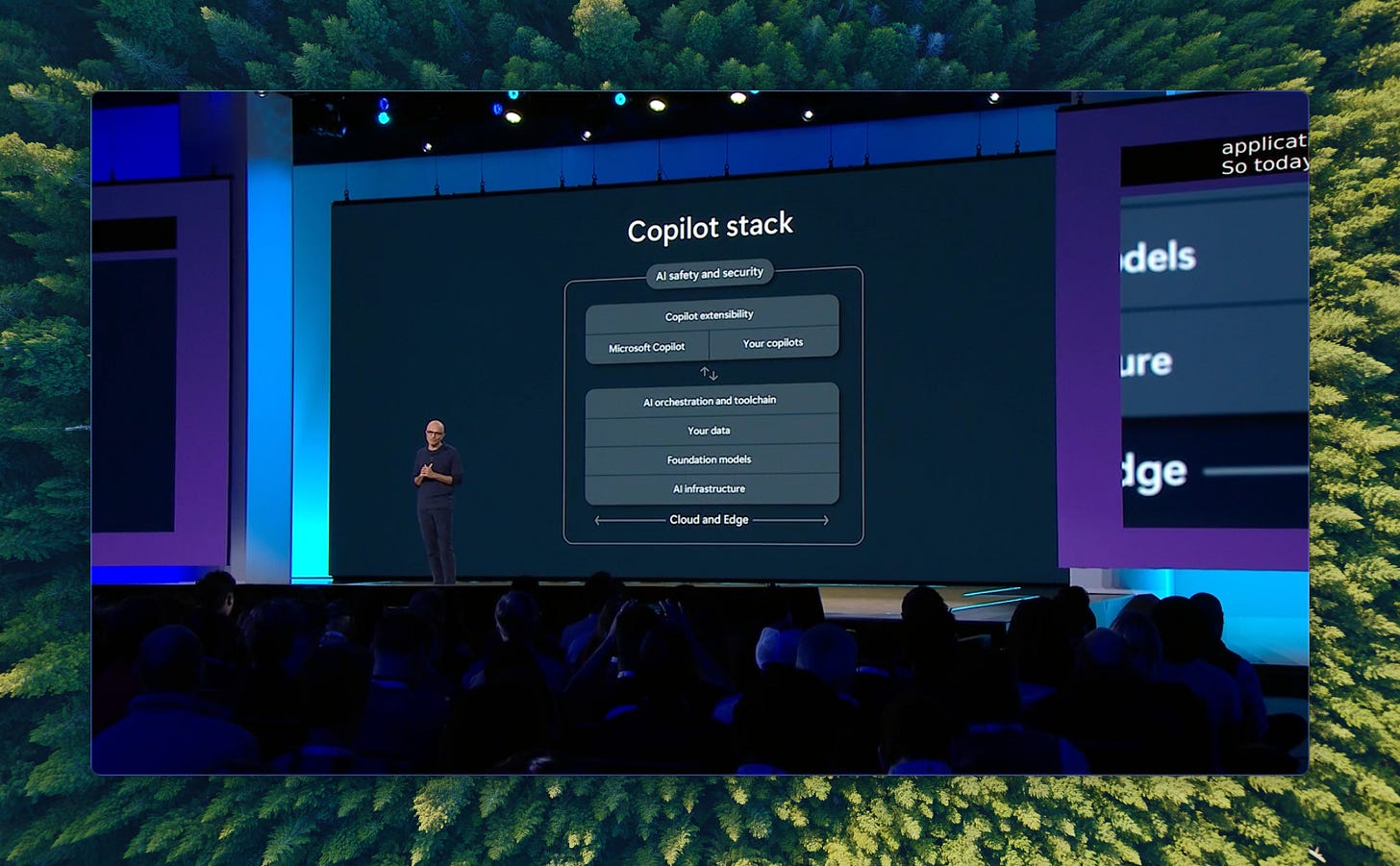

Microsoft Build - CoPilot all the things

I have recorded a Build recap with Ryan Carson from Intel AI and will be posting that as its own episode on Sunday, so look forward to that, but for now, here are the highlights from BUILD:

Copilot everywhere, Microsoft builds Copilot as a platform

AI native laptops with NPU chips for local AI

Recall, an on-device AI that lets you search through everything you saw or typed with natural language

GitHub Copilot Workspace + Extensions

Microsoft stepping into education by sponsoring Khan Academy, free for all teachers in the US

Copilot Team member and Agent - Copilot will do things proactively as your team member

GPT-4o voice mode is coming to Windows and to websites!

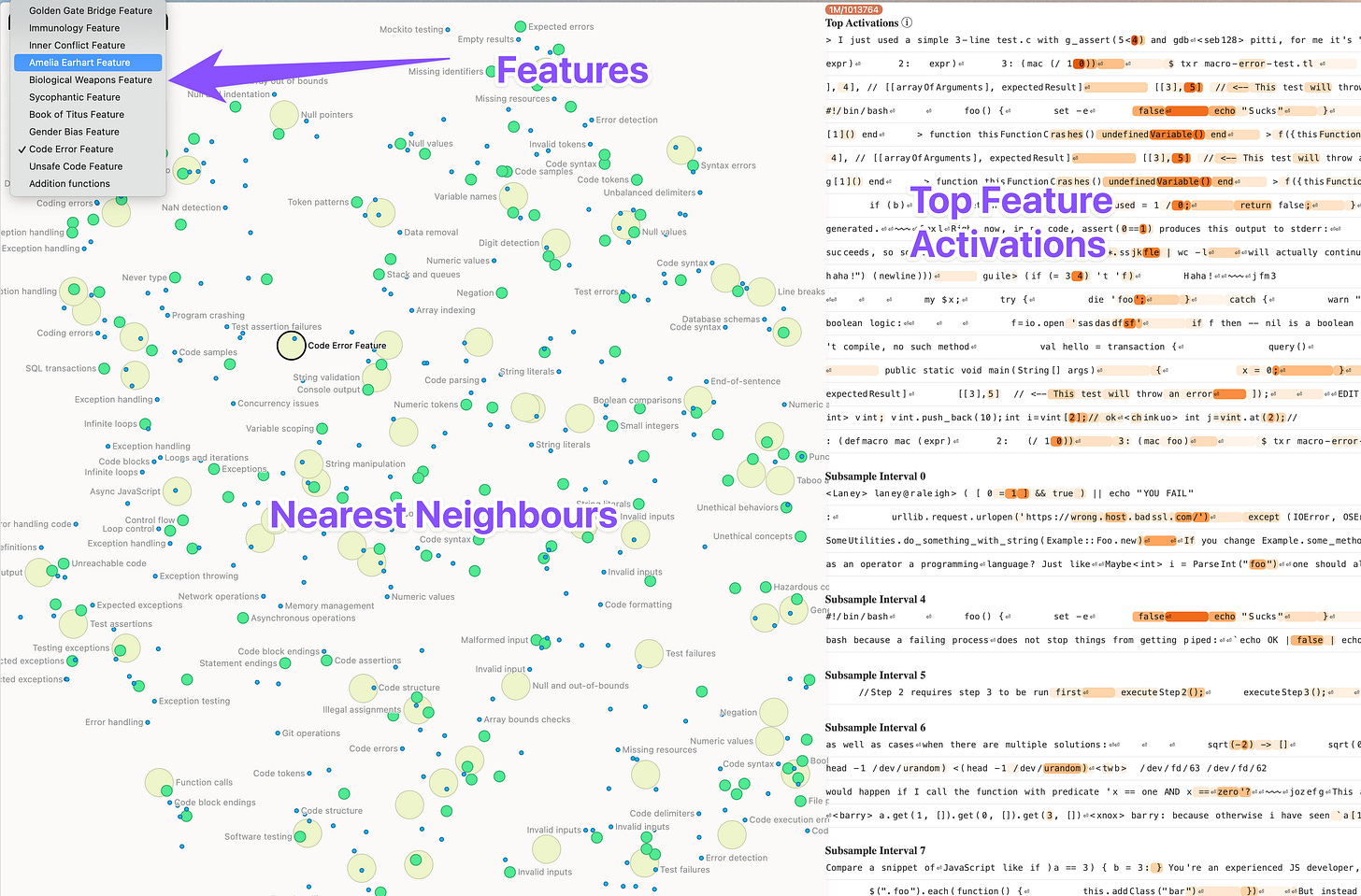

Anthropic releases the Scaling Monosemanticity paper

This is quite a big thing that happened this week for Mechanistic Interpretability and Alignment, with Anthropic releasing a new paper and examples of their understanding of what an LLM "thinks".

They have done incredible work in this area, and now they have scaled it up all the way to a production model, Claude 3 Sonnet, which shows that this work can actually identify which "features" cause which tokens to be output.

In the work they highlighted features such as "deception", "bad code" and even a funny one called "Golden Gate Bridge", and showed that clamping these features can affect the model's outputs.

Once these features have been identified, they can be turned on or off with various levels of power. For example, they turned the Golden Gate Bridge feature up to the maximum, and the model thought it was the Golden Gate Bridge.

While that's a funny example, they also found features for racism, bad / wrong code, inner conflict, gender bias, sycophancy and more. You can play around with some examples here, and definitely read the full blog if this interests you, but overall it shows incredible promise for alignment and steerability of models at large scale going forward.
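To make "clamping a feature" a bit more concrete, here's a toy sketch of the idea: break an activation vector into feature strengths, pin one feature to a value of your choosing, and rebuild the activation. This is not Anthropic's code or their actual setup. The three-dimensional activation, the feature directions, and the numbers are all invented for illustration, and I use orthonormal directions so the encode step is a plain dot product, which real learned features are not.

```python
# Toy "feature dictionary": each feature is a direction in a 3-d activation space.
# Orthonormal directions keep the math trivial; real learned features are not.
FEATURES = {
    "golden_gate_bridge": [1.0, 0.0, 0.0],
    "bad_code":           [0.0, 1.0, 0.0],
    "sycophancy":         [0.0, 0.0, 1.0],
}

def encode(activation):
    """Feature strength = dot product of the activation with each direction."""
    return {
        name: sum(a * d for a, d in zip(activation, direction))
        for name, direction in FEATURES.items()
    }

def decode(strengths):
    """Rebuild an activation vector as a weighted sum of feature directions."""
    dim = len(next(iter(FEATURES.values())))
    out = [0.0] * dim
    for name, strength in strengths.items():
        for i, d in enumerate(FEATURES[name]):
            out[i] += strength * d
    return out

def clamp(activation, feature, value):
    """Pin one feature to a fixed strength and leave the others untouched."""
    strengths = encode(activation)
    strengths[feature] = value
    return decode(strengths)

activation = [0.1, 0.5, 0.2]                             # made-up activation
steered = clamp(activation, "golden_gate_bridge", 10.0)  # crank the feature way up
muted = clamp(activation, "bad_code", 0.0)               # switch a feature off
print(steered)
print(muted)
```

In a real model the steered activation would be fed back into the forward pass, and that is what changes the text the model produces.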

This week's Buzz (What I learned with WandB this week)

I was demoing Weave all week long in Seattle, first at the AI Tinkerers event, and then at MSFT BUILD.

They had me pre-record a video of my talk and then do a 5-minute demo on stage (which was not stressful at all!), so here's the pre-recorded video, which turned out really good!

Also, we're sponsoring the Mistral Hackathon this weekend in Paris, so if you're in the EU and want to hack with us, please go! It's hosted by Cerebral Valley, HuggingFace and us.

Vision

Phi-3 mini Vision

In addition to Phi-3 small and Phi-3 Medium, Microsoft released Phi-3 mini with vision, which does an incredible job understanding text and images! (You can demo it right here)

Interestingly, Phi-3 mini with vision has a 128K context window, which is amazing, and it even beats Mistral 7B as a language model! Give it a try.

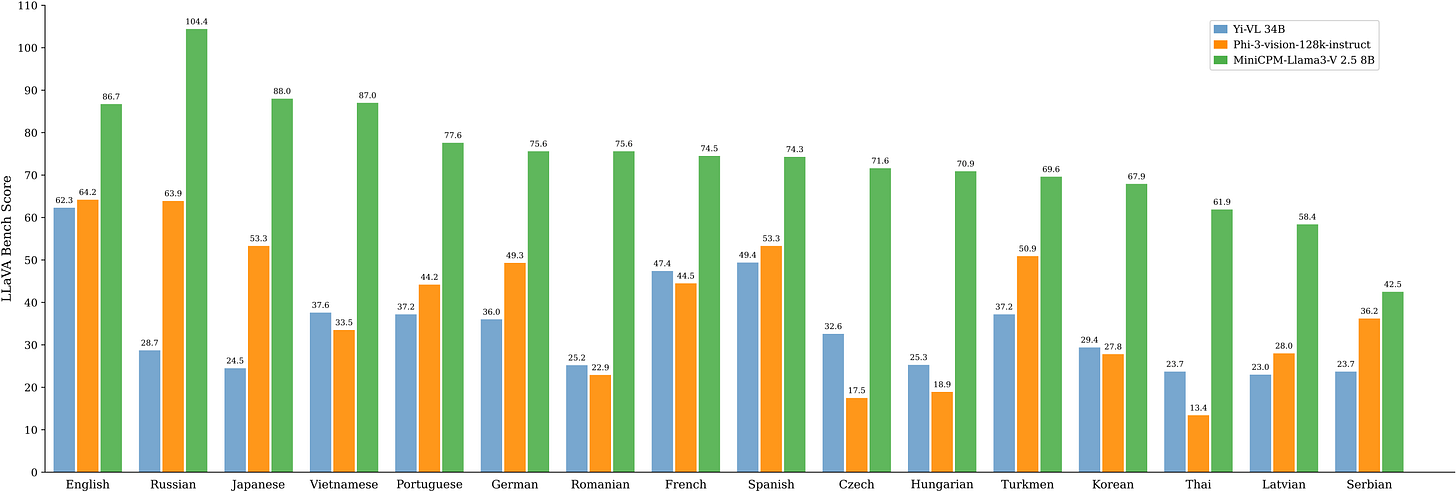

OpenBMB - MiniCPM-Llama3-V 2.5 (X, HuggingFace, Demo)

Two state of the art vision models in one week? Well, that's incredible.

A company I hadn't heard of, OpenBMB, has released MiniCPM 7B trained on top of Llama 3, and they claim that it outperforms Phi-3 vision.

They claim that it has GPT-4 vision level performance and achieves a 700+ score on OCRBench, surpassing proprietary models such as GPT-4o, GPT-4V-0409, Qwen-VL-Max and Gemini Pro.

In my tests, Phi-3 performed a bit better. I showed both the same picture, and Phi was more factual on the hard prompts:

Phi-3 Vision:

And that's it for this week's newsletter. Look out for the Sunday special full MSFT Build recap, and definitely give the whole talk a listen, it's full of my co-hosts and their great analysis of this week's events!