Hey everyone, happy ThursdAI!

As always, here's a list of things we covered this week, including show notes and links, to prepare you for the holidays.

TL;DR of all topics covered:

Open Source AI

OpenChat-3.5-1210 - a top performing open source 7B model from OpenChat team beating GPT3.5 and Grok (link, HF, Demo)

LAION 5B dataset taken down due to CSAM allegations from Stanford (link, full report pdf)

FLASK - New evaluation framework from KAIST - based on skillset (link)

Shows a larger difference between open/closed source

Open leaderboard reliability issues, vibes benchmarks and more

HF releases a bunch of MLX ready models (LLama, Phi, Mistral, Mixtral) (link)

New transformer alternative architectures - Hyena & Mamba are heating up (link)

Big CO LLMs + APIs

Apple - LLM in a flash paper is making rounds (AK, Takeaways thread)

Anthropic adheres to the messages API format (X)

Microsoft Copilot finally has plugins (X)

Voice & Audio

AI Music generation Suno is now part of Microsoft Copilot plugins and creates long beautiful songs (link)

AI Art & Diffusion

Midjourney v6 is out - better text, great at following instructions (link)

Open Source AI

We start today with a topic I didn't expect to be covering: the LAION 5B dataset was taken down after a report from the Stanford Internet Observatory found instances of CSAM (Child Sexual Abuse Material) in the vast dataset. The report identified hundreds to thousands of instances of images of this sort, and used a tool called PhotoDNA by Microsoft to identify the images by hashes, using a sample of NSFW-marked images.

LAION 5B was used to train Stable Diffusion: versions 1.4 and 1.5 were trained on a lot of images from that dataset, whereas SD2, for example, was only trained on images not marked as NSFW. The report is very thorough, going through the methodology used to find and check those types of images. It's worth noting that LAION 5B itself is not an image dataset, as it only contains links to images and their descriptions from alt tags.

Obviously this is a very touchy topic, given the way this dataset was scraped from the web and how many image models were trained on it. The report doesn't allege anything close to influence on the models trained on it, and outlines a few methods of preventing issues like this in the future. One unfortunate outcome of such a discovery is that this type of work can only be done on open datasets like LAION 5B, while closed source datasets don't get anywhere near this level of scrutiny. This can slow down the advancement of open source multi-modal models, while closed source ones will continue having these issues and still prevail.

The report says they found and validated between hundreds and a few thousand instances of verified CSAM imagery, which, considering the size of the dataset, is a minuscule fraction. However, it still shouldn't exist at all, and better techniques for cleaning scraped datasets should exist. The dataset has been taken down for now from HuggingFace and other places.

New version of a 7B model that beats chatGPT from OpenChat collective (link, HF, Demo)

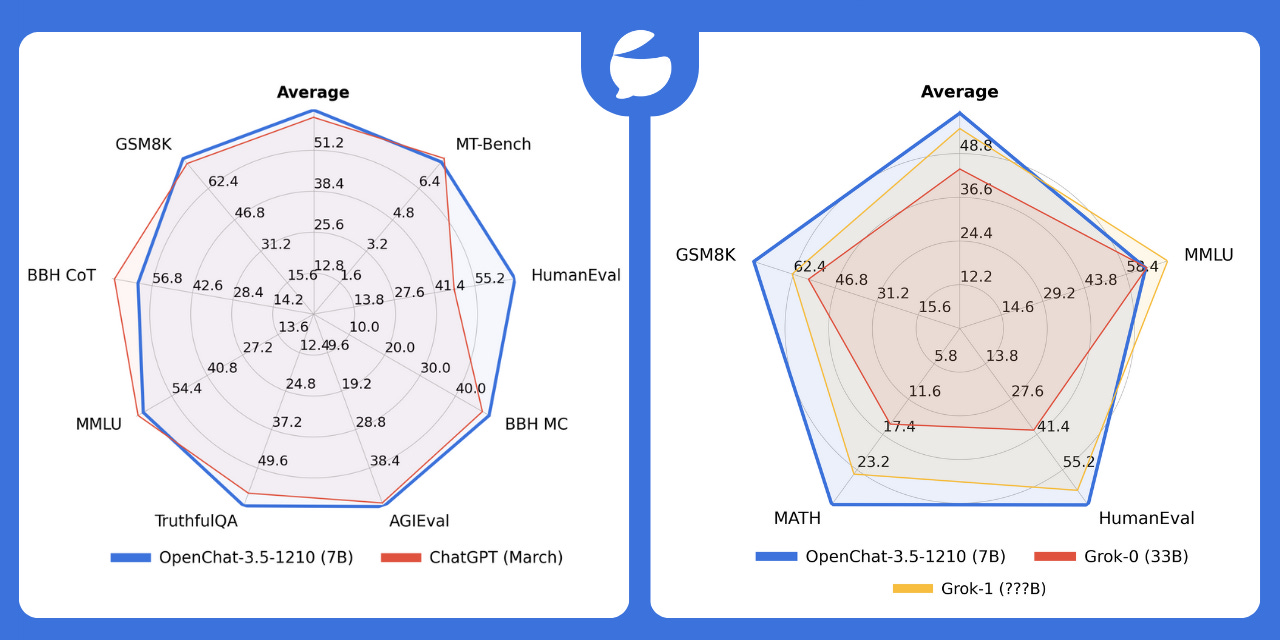

Friend of the pod Alpay Aryak and team released OpenChat 7B (1210), a new version of OpenChat, one of the top models in the 7B world. It posts strong scores against chatGPT 3.5 and Grok, with very high benchmark results (63.4% on HumanEval compared to 64% for GPT3.5).

Scrutiny of open source benchmarks and leaderboards being gamed

We've covered state-of-the-art models on ThursdAI, and every time we did, we covered the benchmarks and evaluation scores, whether that's the popular MMLU (Massive Multitask Language Understanding) or HumanEval (Python coding questions), and almost always we've referred to the HuggingFace Open LLM leaderboard for the latest and greatest models. This week, there's a long thread on the Hugging Face forums, which HF eventually had to shut down, alleging that a new contender for the top spot used something called UNA to beat the benchmarks without revealing its methods. Folks are suggesting that it must be gaming the system, as a model that's trained on the benchmarks can easily top the charts.

This adds to the recent observations from friend of the pod Bo Wang from Jina AI that the BGE folks have stopped focusing on the MTEB (Massive Text Embedding Benchmark) leaderboard as well, as those benchmarks also seem to be gamed (link)

This kicked off a storm of discussion about different benchmarks and evaluations, our ability to score and check whether or not we're advancing, and the openness of these benchmarks. Andrej Karpathy even chimed in that the only way to know is to read the r/LocalLlama comment section (i.e. vibes-based eval) and check the Elo score on the LMSys chatbot arena, which pits 2 random LLMs against each other behind the scenes and lets users choose the better answer.
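To make the arena idea a bit more concrete, here's a minimal sketch of how a single head-to-head vote moves two models' ratings under the textbook Elo formula. This is an assumption-heavy illustration, not LMSys's actual pipeline: the function names, the starting rating of 1000 and the `k=32` step size are all made up for the example.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return the updated (rating_a, rating_b) after one pairwise vote.

    score_a is 1.0 if the user preferred A's answer, 0.0 if they
    preferred B's, and 0.5 for a tie. k (assumed here) controls how far
    a single vote can move a rating.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Whatever A gains, B loses, so the total rating pool stays constant.
    return rating_a + delta, rating_b - delta


# Hypothetical models starting at the same rating; the user prefers model A.
new_a, new_b = update_elo(1000.0, 1000.0, score_a=1.0)
print(new_a, new_b)  # equal ratings, so the winner gains k/2: 1016.0 984.0
```

The useful property is that an upset (a low-rated model beating a high-rated one) moves ratings much more than an expected win, which is why many noisy human votes can still converge on a sensible ranking.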

LMSys also has a leaderboard, which only includes models they have explicitly added to their Arena, and it merges 3 different scores: the Elo score from human raters, the MT-Bench score and the MMLU score.

This is the latest benchmark, showing that Mixtral is the highest ranking open source model at this point, and that a few other Apache 2.0 models like OpenChat (the previous version, the one from today should score even higher) and OpenHermes are inching closer as well and have honorable mentions given their license and size!

However, given the latest releases from HuggingFace around model lineage, where you can trace a finetune back to the models it was fine-tuned from, the leaderboards are still a good place to check. Just... self evaluation and running models on your own tasks is always a good idea! Additional benchmarks are also a good idea, like the one proposed by KAIST this week called FLASK, which shows quite a significant distance between closed source models and open source ones across several skills.

This week's Buzz (What I learned this week in Weights & Biases)

This week we kicked off a build week internally, which unfortunately I wasn’t able to be a super active participant in, due to lying on my couch with a fever for most of the week. Regardless, I noticed how important it is to have these build weeks/hack weeks from time to time, to actually use some of the new techniques we often talk about, like chain-of-density prompting or agent fine-tunes. I also got paired with my colleague Anish on our project, and while we work on it (to be revealed later), he gave a kick-ass webinar on the famous deeplearning.ai platform on the topic of enhancing performance for LLM agents in automation, which more than 5K folks tuned into! Anish is a wealth of knowledge, so check it out if this topic interests you 👏

Big CO LLMs + APIs

Apple - LLM in a Flash + MLX stuff

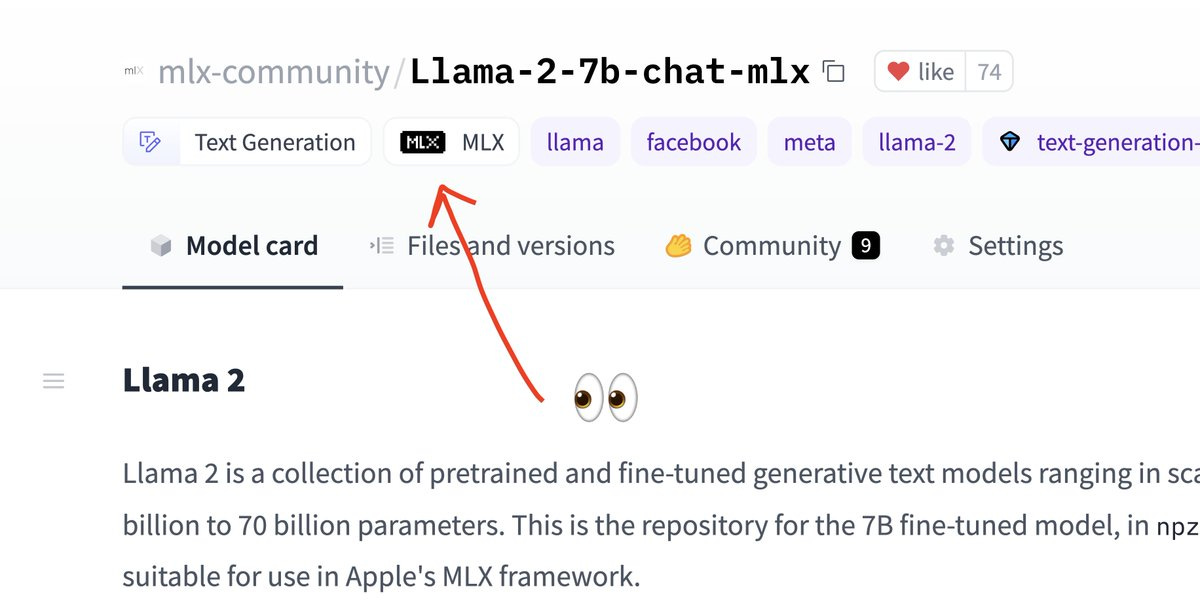

Apple has been more and more in the AI news lately, having recently released the MLX framework for running models directly on Apple silicon devices without a lot of dependencies, something that was always possible but was not optimized. This got many folks to start converting models to an MLX-compatible format, and there's now even a new tag on HF for those converted models.

But the main news this week doesn't stop there: folks from Apple also released the LLM in a flash paper, which shows advances in running LLMs in hardware-restricted environments like smartphones, where memory is limited. It shows interesting promise, and combined with the MLX work, it's also a glimpse that Apple is likely moving towards on-device or partially on-device inference at some point.

Anthropic moves towards messages API

Anthropic Claude finally gives us some DX and introduces a messages API similar to OpenAI's.
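For folks who haven't used this style of API, here's a rough sketch of what a "messages" request body looks like: a list of `role`/`content` turns instead of one big prompt string. Treat it as an illustration under assumptions. The helper `build_messages_request` and the model name `example-model` are hypothetical, no network call is made, and the exact required fields should be checked against each provider's docs.

```python
import json


def build_messages_request(model, user_prompt, history=None, max_tokens=256):
    """Build a chat-style request body as a plain dict (no API call is made).

    history is an optional list of earlier {"role": ..., "content": ...}
    turns; the new user prompt is appended as the latest turn.
    """
    messages = list(history or [])  # copy so the caller's list isn't mutated
    messages.append({"role": "user", "content": user_prompt})
    return {"model": model, "max_tokens": max_tokens, "messages": messages}


# "example-model" is a placeholder, not a real model identifier.
payload = build_messages_request(
    "example-model",
    "Write a short holiday jingle for ThursdAI",
    history=[
        {"role": "user", "content": "Hi!"},
        {"role": "assistant", "content": "Hello! How can I help?"},
    ],
)
print(json.dumps(payload, indent=2))
```

The nice part of this shape is that multi-turn conversations become just a longer list, so code written against one provider's chat format needs fewer changes to target another.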

Voice

Microsoft copilot now has plugins and can create songs!

Microsoft Copilot (FKA Bing Chat) now has plugins (probably not new from this week, but we haven't yet reported on this), and one of the coolest ones is Suno, an audio generation platform that has been around for a while. Now it's super easy to create whole songs directly from the Microsoft Copilot interface!

Here’s my 1-shot attempt at creating a holiday jingle for ThursdAI, it’s not good, but it’s fun 😂

And I’ve seen some quite decent examples like return to monkey

AI Art & Diffusion

Midjourney v6 looks stunning and follows prompts very well

Midjourney finally dropped their version 6, and it looks really, really good. Notably, it's likely the highest quality / fidelity diffusion model out there that we can use, has better support for text, and follows prompts closely. DALL-E is still very impressive for folks, given that iterating via the chatGPT interface is very easy and convenient, but still, just look at some of these MJv6 generations 😻

Nick gave it a very detailed prompt with 8 specific color assignments, and besides the image looking insane, MJ nailed the super complex prompt!

35mm film still, two-shot of a 50 year old black man with a grey beard wearing a brown jacket and red scarf standing next to a 20 year old white woman wearing a navy blue and cream houndstooth coat and black knit beanie. They are walking down the middle of the street at midnight, illuminated by the soft orange glow of the street lights --ar 7:5 --style raw --v 6.0

And just for fun, here’s a comparison of all previous versions of MJ for the same prompt, just to… feel the progress 🔥

Thanks for reading all the way through. I think I got more than I bargained for during NeurIPS: I came back with a fever and was debating whether to even record/send this week's newsletter, but now that I’m at the end of it I’m happy that I did! Though, if you listen to the full recording, you may hear me struggling to breathe a bit 😅

So I’ll go rest up before the holidays, wishing you a merry Christmas if you celebrate it 🎄 See you next week 🫡