Happy Apple AI week, everyone (well, those of us who celebrate; some don't)! This week we finally learned what Apple plans to do with this whole generative AI wave, as it presented Apple Intelligence (which is AI, get it? They are trying to rebrand AI!).

This week's pod and newsletter focus mainly on Apple Intelligence, of course, as did most people, judging by how the market reacted ($AAPL grew by over $360B in the few days after the announcement) and by how many people watched each live stream (at the time of writing, 10M have watched the WWDC keynote on YouTube, compared to 4.5M for OpenAI's GPT-4o event and 1.8M for Google I/O).

On the pod we also geeked out on new eval frameworks and benchmarks, including a chat with the authors of MixEval, which I wrote about last week, and a new benchmark called LiveBench from Abacus and Yann LeCun.

Plus a new video model from Luma and finally SD3, let's go! 👇

TL;DR of all topics covered:

Apple WWDC recap and Apple Intelligence (X)

This Week's Buzz

Open Source LLMs

Microsoft Samba - 3.8B Mamba + Sliding Window Attention beating Phi-3 (X, Paper)

Sakana AI releases LLM squared - LLMs coming up with preference optimization algorithms to train better LLMs (X, Blog)

Abacus + Yann LeCun release LiveBench.AI - a benchmark designed to be impossible to game (X, Bench)

Interview with the MixEval folks about achieving 96% correlation with Arena rankings at 5000x lower cost

Big CO LLMs + APIs

Mistral announced a 600M Series B round

Revenue at OpenAI DOUBLED in the last 6 months and is now at $3.4B annualized (source)

Elon drops lawsuit vs OpenAI

Vision & Video

AI Art & Diffusion & 3D

Tools

Apple Intelligence

Technical LLM details

Let's dive right into what wasn't shown in the keynote. In a 6-minute deep-dive video from the Platforms State of the Union for developers, and in a follow-up post on its Machine Learning Research blog, Apple shared some very exciting technical details about the on-device models and orchestration that will become Apple Intelligence.

Namely, for on-device use they trained a bespoke 3B-parameter LLM on licensed data, and it uses a bunch of cutting-edge techniques to achieve quite incredible on-device performance: grouped-query attention (GQA), speculative decoding, and a very unique type of quantization (which they claim is almost lossless).
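Apple doesn't spell out its exact speculative decoding setup, so here is a generic, minimal Python sketch of the greedy variant of the technique: a cheap draft model proposes a few tokens, the big target model verifies them, and the output is guaranteed to match what the target would have produced on its own. The "models" (`toy_target`, `toy_draft`) are hypothetical stand-ins that map a token list to the next token; real ones are neural networks, and the target verifies all proposals in a single batched forward pass rather than the loop used here.

```python
from typing import Callable, List

# A "model" here is any function mapping a token sequence to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Plain autoregressive greedy decoding: one model call per new token."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(model(seq))
    return seq


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], n_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding; returns exactly greedy_decode(target, ...)."""
    seq = list(prompt)
    goal = len(prompt) + n_new
    while len(seq) < goal:
        # 1. The cheap draft model proposes up to k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(seq))):
            proposal.append(draft(seq + proposal))
        # 2. The target checks each position (batched into one pass in practice).
        accepted: List[int] = []
        for tok in proposal:
            expected = target(seq + accepted)
            accepted.append(expected)  # always commit the target's own choice
            if tok != expected:
                break  # draft diverged: stop this round after the correction
        else:
            # Every draft token matched: the target's next token comes for free.
            if len(seq) + len(accepted) < goal:
                accepted.append(target(seq + accepted))
        seq.extend(accepted)
    return seq


# Hypothetical toy models: the draft agrees with the target most of the time.
def toy_target(seq: List[int]) -> int:
    return (sum(seq) * 7 + 3) % 50


def toy_draft(seq: List[int]) -> int:
    guess = toy_target(seq)
    return guess if len(seq) % 5 else (guess + 1) % 50


if __name__ == "__main__":
    prompt = [1, 2, 3]
    print(speculative_decode(toy_target, toy_draft, prompt, 20)
          == greedy_decode(toy_target, prompt, 20))  # True
```

The speedup comes from the target checking k draft tokens for roughly the price of one forward pass: whenever the draft agrees, several tokens are committed per round, and because every committed token is the target's own choice, quality is unchanged.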

To maintain model quality, we developed a new framework using LoRA adapters that incorporates a mixed 2-bit and 4-bit configuration strategy, averaging 3.5 bits-per-weight, to achieve the same accuracy as the uncompressed models [...] on iPhone 15 Pro we are able to reach time-to-first-token latency of about 0.6 millisecond per prompt token, and a generation rate of 30 tokens per second.
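To make those numbers concrete, here is some back-of-the-envelope arithmetic in Python. It assumes (our assumption, not Apple's statement) a flat 3e9 parameter count and that the 3.5 bits-per-weight average is taken uniformly over the weights, ignoring quantization scales and other metadata; the helper names are hypothetical.

```python
def two_bit_fraction(avg_bits: float, low: float = 2.0, high: float = 4.0) -> float:
    """Share p of weights stored at `low` bits so that p*low + (1-p)*high == avg_bits."""
    return (high - avg_bits) / (high - low)


def weight_gigabytes(n_params: float, bits_per_weight: float) -> float:
    """Raw weight storage in GB (1 GB = 1e9 bytes), ignoring metadata overhead."""
    return n_params * bits_per_weight / 8 / 1e9


def response_seconds(prompt_tokens: int, output_tokens: int,
                     ms_per_prompt_token: float = 0.6,
                     tokens_per_second: float = 30.0) -> float:
    """Time to first token plus generation time, using Apple's quoted iPhone 15 Pro rates."""
    time_to_first_token = prompt_tokens * ms_per_prompt_token / 1000
    return time_to_first_token + output_tokens / tokens_per_second


if __name__ == "__main__":
    print(two_bit_fraction(3.5))                  # 0.25 -> 25% at 2-bit, 75% at 4-bit
    print(weight_gigabytes(3e9, 3.5))             # ~1.31 GB
    print(weight_gigabytes(3e9, 16))              # ~6.0 GB at fp16, for comparison
    print(round(response_seconds(1000, 100), 2))  # 3.93
```

So under these assumptions, a quarter of the weights would sit at 2 bits, the weights would need roughly 1.3 GB instead of about 6 GB at 16 bits, and a 1,000-token prompt with a 100-token reply would take about 3.9 seconds end to end.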

These small models (there's also a bespoke image diffusion model) are going to be fine-tuned with a lot of LoRA adapters for specific tasks like summarization, query handling, mail replies, urgency and more. This gives the foundation model the ability to specialize itself on the fly for the task at hand, with the adapters cached in memory for optimal performance.
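Apple hasn't published code for its adapter system, so here is a toy Python sketch of the general LoRA idea described above: one frozen base weight matrix shared by every task, small per-task low-rank matrices A and B added on top, and a small in-memory cache of adapters. The task names, the loader, the tiny 2x2 matrices, and the least-recently-used eviction policy are all hypothetical choices for illustration; real adapters patch many layers of the network, not a single matrix.

```python
from collections import OrderedDict
from typing import Callable, Dict, List, Tuple

Matrix = List[List[float]]
Vector = List[float]
Adapter = Tuple[Matrix, Matrix, float]  # (A: r x d_in, B: d_out x r, alpha)


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w: Matrix, x: Vector, adapter: Adapter) -> Vector:
    """y = W x + (alpha / r) * B (A x); W stays frozen and shared by all tasks."""
    a, b, alpha = adapter
    rank = len(a)
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    return [y0 + (alpha / rank) * d for y0, d in zip(base, delta)]


class AdapterCache:
    """Keeps at most `capacity` adapters in memory, evicting the least recently used."""

    def __init__(self, loader: Callable[[str], Adapter], capacity: int = 2):
        self.loader = loader
        self.capacity = capacity
        self.cache: "OrderedDict[str, Adapter]" = OrderedDict()

    def get(self, task: str) -> Adapter:
        if task in self.cache:
            self.cache.move_to_end(task)  # mark as most recently used
        else:
            self.cache[task] = self.loader(task)  # e.g. read from storage
            if len(self.cache) > self.capacity:
                self.cache.popitem(last=False)  # drop the least recently used
        return self.cache[task]


# Hypothetical rank-1 adapters for a 2x2 base layer.
ADAPTERS: Dict[str, Adapter] = {
    "summarization": ([[1.0, 0.0]], [[0.0], [1.0]], 1.0),
    "mail_reply": ([[0.0, 1.0]], [[1.0], [0.0]], 2.0),
    "urgency": ([[1.0, 1.0]], [[1.0], [1.0]], 1.0),
}
W_BASE: Matrix = [[1.0, 0.0], [0.0, 1.0]]

if __name__ == "__main__":
    cache = AdapterCache(ADAPTERS.__getitem__, capacity=2)
    x = [1.0, 2.0]
    print(lora_forward(W_BASE, x, cache.get("summarization")))  # [1.0, 3.0]
    print(lora_forward(W_BASE, x, cache.get("mail_reply")))     # [5.0, 2.0]
    cache.get("urgency")                                        # evicts summarization
    print(list(cache.cache))                                    # ['mail_reply', 'urgency']
```

The point of the design is that switching tasks only swaps the tiny A and B matrices, while the large base weights stay loaded once.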

Personal and Private (including in the cloud)

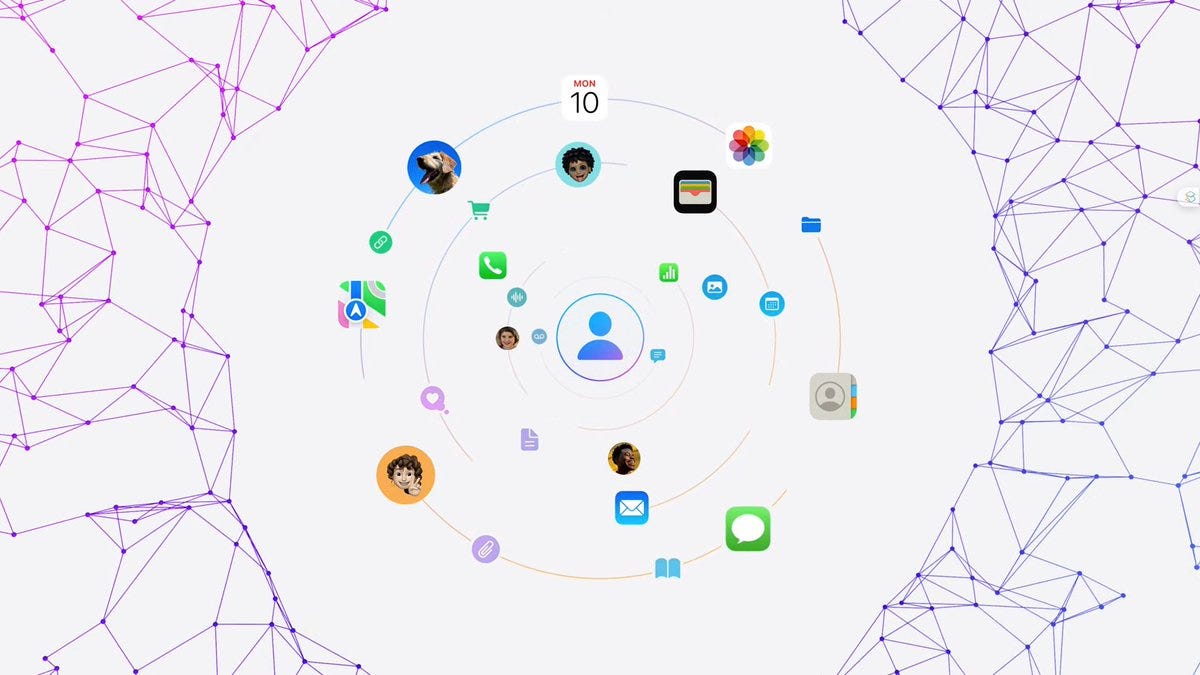

While these models are small, they will also benefit from two more things on device. The first is a vector store of your stuff (contacts, recent chats, calendar, photos) that Apple calls the semantic index. The second is a new thing Apple calls App Intents, which developers can expose (and the built-in apps already do), and which will allow the LLM to use tools like moving files, extracting data across apps, and performing actions. This already makes the AI much more personal and helpful, as it has in its context things about me and what the apps on my phone can do.
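Apple hasn't published the semantic index's internals or how App Intents are presented to the model, so this is a toy Python sketch of the two general ideas: cosine-similarity retrieval over personal items, and a registry of named actions that a model's structured output gets dispatched to. The hand-made embeddings, item texts, the `send_message` intent, and the call format are all hypothetical; real App Intents are declared in Swift through Apple's App Intents framework, and this is not that API.

```python
import math
from typing import Callable, Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticIndex:
    """Tiny in-memory vector store: stores (text, embedding) pairs, ranks by cosine."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float]]] = []

    def add(self, text: str, embedding: List[float]) -> None:
        self.items.append((text, embedding))

    def search(self, query: List[float], k: int = 1) -> List[str]:
        ranked = sorted(self.items, key=lambda item: cosine(query, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


# Registry of actions the model may call, keyed by name (a stand-in for App Intents).
INTENTS: Dict[str, Callable[..., str]] = {}


def intent(name: str):
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        INTENTS[name] = fn
        return fn
    return register


@intent("send_message")
def send_message(to: str, body: str) -> str:
    return f"sent to {to}: {body}"


def run_tool_call(call: dict) -> str:
    """Dispatch a structured call emitted by the model, e.g. {"intent": ..., "args": {...}}."""
    return INTENTS[call["intent"]](**call["args"])


# Hypothetical hand-made 3-d "embeddings"; a real index would use a learned embedding model.
index = SemanticIndex()
index.add("Contact: Mom, 555-0100", [1.0, 0.0, 0.1])
index.add("Calendar: dentist on Tuesday at 3pm", [0.0, 1.0, 0.0])
index.add("Photo: beach trip", [0.1, 0.0, 1.0])

if __name__ == "__main__":
    context = index.search([0.9, 0.1, 0.0])  # e.g. the query "text my mom"
    print(context)  # ['Contact: Mom, 555-0100']
    print(run_tool_call({"intent": "send_message",
                         "args": {"to": "Mom", "body": "running late"}}))
```

Retrieval supplies the personal context ("who is Mom?"), and the registry turns the model's decision into an actual action, which is the combination that makes a small model feel personal.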

Handoff to the Private Cloud (and then to OpenAI)

Whatever the local 3B LLM + context can't do, it'll hand off to the cloud in what Apple claims is a very secure way, called Private Cloud Compute. Inference runs on Apple Silicon servers with Secure Enclave and Secure Boot, ensuring that the LLM sessions that process your data are never stored, that even Apple can't access those sessions, and certainly that Apple won't train its LLMs on your data.
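Apple hasn't said how it decides what stays on device, what goes to Private Cloud Compute, or when ChatGPT gets offered, so the following is a purely illustrative Python sketch of the escalation order described here: private tiers first, and ChatGPT only at the very end and only with the user's consent. The tier names, the word-count `can_handle` checks, and the `ask_user_for_chatgpt` callback are hypothetical placeholders, not Apple's logic.

```python
from typing import Callable, List, Optional, Tuple

# (tier name, predicate deciding whether that tier can handle the request)
Tier = Tuple[str, Callable[[str], bool]]


def route(request: str, private_tiers: List[Tier],
          ask_user_for_chatgpt: Callable[[str], bool]) -> Optional[str]:
    """Return the first private tier that can handle the request; otherwise offer
    ChatGPT, and use it only if the user agrees. None means nobody handles it."""
    for name, can_handle in private_tiers:
        if can_handle(request):
            return name
    if ask_user_for_chatgpt(request):
        return "ChatGPT"
    return None


# Hypothetical capability checks based on request length, for illustration only.
TIERS: List[Tier] = [
    ("on-device 3B model", lambda r: len(r.split()) <= 8),
    ("Private Cloud Compute", lambda r: len(r.split()) <= 40),
]

if __name__ == "__main__":
    print(route("summarize this group chat", TIERS, lambda r: True))  # on-device 3B model
    print(route("word " * 20, TIERS, lambda r: True))                 # Private Cloud Compute
    print(route("word " * 100, TIERS, lambda r: True))                # ChatGPT
```

Keeping consent as a separate callback mirrors the ChatGPT dialog Apple showed: the third-party hop is an explicit user decision rather than a silent fallback.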

Here are some benchmarks Apple posted for its on-device 3B model and its server model (of undisclosed size), comparing them to GPT-4-Turbo (not 4o!) on unnamed benchmarks they came up with.

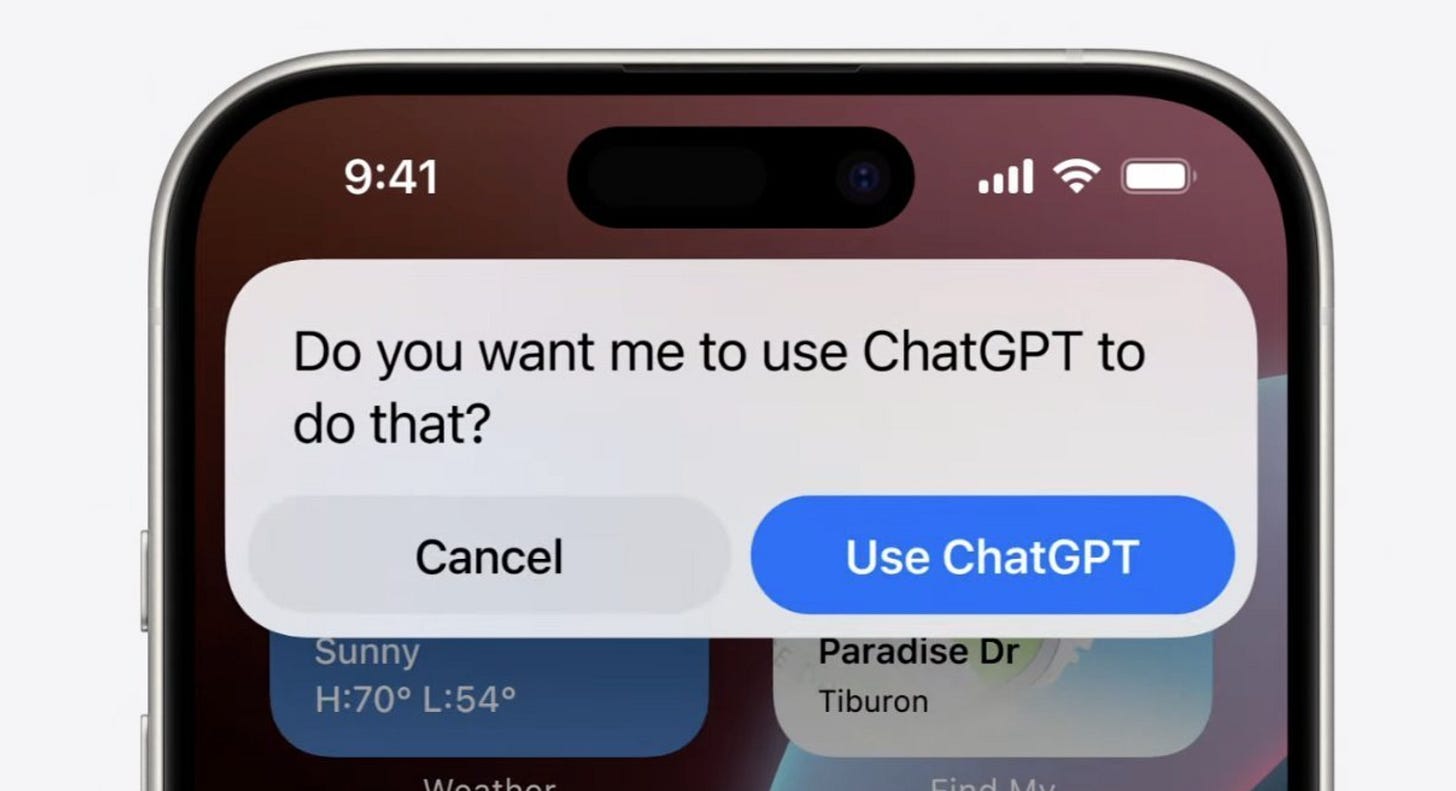

In cases where Apple Intelligence can't help you with a request (I'm still unclear on when exactly this would happen), iOS will show a dialog suggesting you use ChatGPT from OpenAI. This is the result of a deal with OpenAI in which, apparently, nobody pays anybody: Apple isn't getting paid by OpenAI for the placement, and Apple doesn't pay OpenAI for the additional compute, tokens, and inference.

Implementations across the OS

So what will people actually be able to do with this intelligence? I'm sure Apple will add much more in future versions of iOS, but at least for now, Siri is getting an LLM brain transplant and is going to be much smarter and more capable, from understanding natural speech better (and just having better ears: the on-device speech-to-text is improved and is really good now in the iOS 18 beta) to being able to use App Intents to take actions for you across several apps.

Other features across the OS will use Apple Intelligence to prioritize your notifications and summarize group chats that are going off, and there are built-in tools for rewriting, summarizing, and turning any text anywhere into anything else. Basically, many of the tasks you'd use ChatGPT for are now built into the OS itself, for free.

Apple is also adding AI art diffusion features like Genmoji (the ability to generate any emoji you can think of, like a chef's kiss, or a seal with a clown nose). While this sounds absurd, I've never been in a Slack or a Discord that didn't have its own custom emojis uploaded by its members.

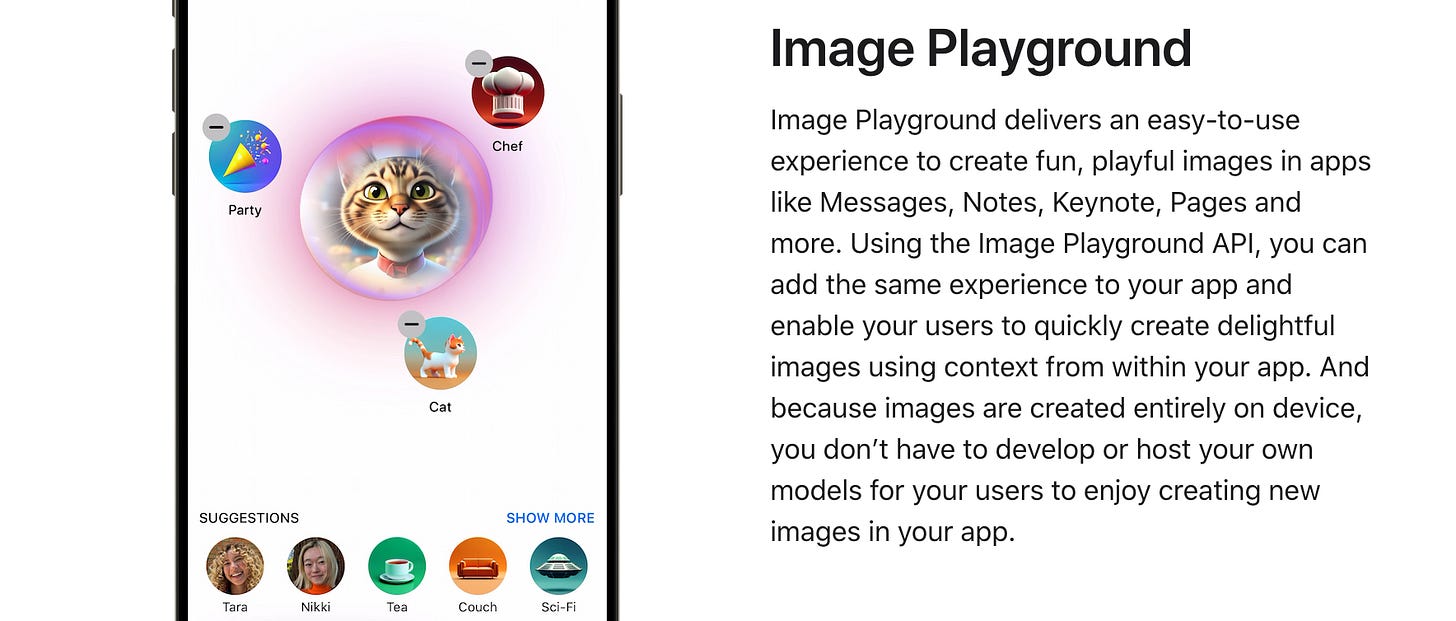

And one last feature I'll highlight is Image Playground, Apple's take on generating images. It doesn't rely on text alone; it has a contextual understanding of your conversation, lets you create with auto-suggested concepts instead of just text prompts, and is going to be available to all developers to bake into their apps.

Elon is SALTY - and it's not because of privacy

I wasn't sure whether to include this segment, but in what became my most viral tweet since the beginning of this year, I posted about Elon muddying the waters about what Apple actually announced, and called it a psyop that worked. Many mainstream media outlets, and definitely the narrative on X, turned to what Elon thinks about the announcements rather than the announcements themselves. Just look at this insane reach.

We've covered Elon vs OpenAI before (a lawsuit he actually withdrew this week, because emails came out showing he knew about, and was OK with, OpenAI not being open), so it's no surprise that when Apple decided to partner with OpenAI and not, say... xAI, Elon would promote absolutely incorrect and ignorant takes to take over the airwaves, like claiming he'll ban Apple devices from all his companies, or that OpenAI will get access to train on your iPhone data.

This week's BUZZ (Weights & Biases Update)

Hey, if you're reading this, it's very likely that you've already registered for, or at least heard of, ai.engineer, and if you haven't, I'm delighted to tell you that we're sponsoring this awesome event in San Francisco, June 25-27. Not only are we official sponsors, but both Lukas (our co-founder and CEO) and I will be there giving talks (mine will likely be crazier than his), and we'll have a booth there. So if you're coming, make sure to come by my talk (or Lukas's, if you're a VP and signed up for that exclusive track).

Everyone in our corner of the world is going to be there. Swyx told me that many of the foundation model labs are coming (OpenAI, Anthropic, Google), and there are going to be tons of tracks. (My talk is, of course, in the Evals track. Come, really, I might embarrass myself on stage for eternity, and you don't want to miss that.)

Swyx kindly provided ThursdAI listeners and readers with a special coupon code, feeltheagi, so that's even more reason to try to convince your boss and come see me on stage in a costume (I've said too much!)

Vision & Video

Luma drops Dream Machine - Sora-like short video generation with free access (X, TRY IT)

In an absolute surprise, Luma AI, a company that (used to) specialize in crafting 3D models, has released a free-access video model similar to Sora and Kling (which we covered last week) that generates 5-second videos (and doesn't require a Chinese phone number, haha).

It's free to try and supports text-to-video, image-to-video, cinematic prompt instructions, great and cohesive narrative following, character consistency and a lot more.

Here's a comparison of the famous Sora videos with LDM (Luma Dream Machine) videos, which AmebaGPT shared with me on X. It's worth noting, however, that these Sora videos are cherry-picked while LDM is likely a much smaller and quicker model, and that folks are already creating some incredible things with it!

AI Art & Diffusion & 3D

Stable Diffusion 3 Medium weights are here (X, HF, FAL)

It's finally here (well, I'm using "finally" carefully, and really hoping this isn't the last thing Stability AI releases): the weights for Stable Diffusion 3 Medium are available on Hugging Face! SD3 offers improved photorealism and awesome prompt adherence, like handling requests for multiple subjects doing multiple things.

It's also pretty good at typography and fairly resource-efficient compared to previous versions, though I'm still waiting for the super turbo distilled versions that will likely come soon!

And that's it for this week, folks. It's been a hell of a week, and I really do appreciate each and every one of you who makes it to the end, reading and engaging. I'd love to ask for your feedback: if anything didn't resonate (too long, too short, or, on the podcast itself, too much info or too little), please do share, and I will take it into account 🙏 🫡

Also, we're coming up on the 52nd week I've been sending these, which will mark ThursdAI's BirthdAI for real (the previous one was for the live shows), and I'm very humbled that so many of you are now reading, sharing and enjoying learning about AI together with me 🙏

See you next week,

Alex