👋 Hey there, been quite a week, started slow and whoah, the last two days were jam-packed with news, I was able to barely keep up! But thankfully, the motto of ThursdAI is, we stay up to date so you don’t have to!

We had a milestone, 1.1K listeners tuned into the live show recording, it’s quite the number, and I’m humbled to present the conversation and updates to that many people, if you’re reading this but never joined live, welcome! We’re going live every week on ThursdAI, 8:30AM pacific time.

TL;DR of all topics covered:

Open Source LLMs

Nous Hermes Mixtral finetune (X, HF DPO version, HF SFT version)

NeuralBeagle14-7B - From Maxime Labonne (X, HF,)

It's the best-performing 7B parameter model on the Open LLM Leaderboard (when released, now 4th)

We had a full conversation with Maxime about merging that will release as a standalone episode on Sunday!

LMsys - SGLang - a 5x performance on inference (X, Blog, Github)

NeuralMagic applying #sparceGPT to famous models to compress them with 50% sparsity (X, Paper)

Big CO LLMs + APIs

🔥 Google Deepmind solves geometry at Olympiad level with 100M synthetic data (Announcement, Blog)

Meta announces Llama3 is training, will have 350,000 H100 GPUs (X)

Open AI releases guidelines for upcoming elections and removes restrictions for war use (Blog)

Sam Altman (in Davos) doesn't think that AGI will change things as much as people think (X)

Samsung S24 has AI everywhere, including real time translation of calls (X)

Voice & Audio

AI Art & Diffusion & 3D

Stable diffusion runs 100% in the browser with WebGPU, Diffusers.js (X thread)

DeciAI - Deci Diffusion - A text-to-image 732M-parameter model that’s 2.6x faster and 61% cheaper than Stable Diffusion 1.5 with on-par image quality

Tools & Hardware

Rabbit R1 announces a deal with Perplexity, giving a full year of perplexity pro to Rabbit R1 users and will be the default search engine on Rabbit (link)

Open Source LLMs

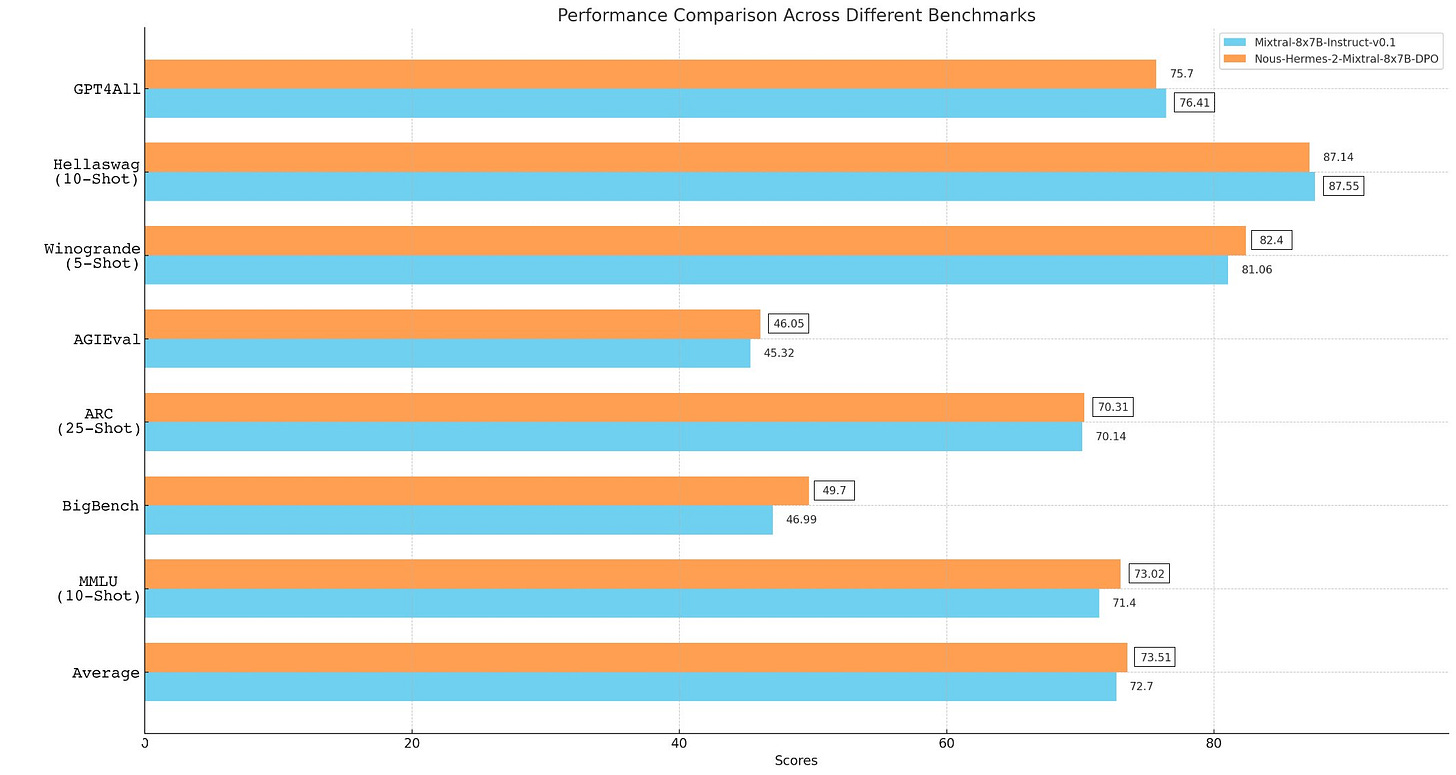

Nous Research releases their first Mixtral Finetune, in 2 versions DPO and SFT (X, DPO HF)

This is the first Mixtral finetune from Teknium1 and Nous team, trained on the Hermes dataset and comes in two variants, the SFT and SFT+DPO versions, and is a really really capable model, they call it their flagship!

This is the fist Mixtral finetune to beat Mixtral instruct, and is potentially the best open source model available right now! 👏

Already available at places like Together endpoints, GGUF versions by the Bloke and I’ve been running this model on my mac for the past few days. Quite remarkable considering where we are in only January and this is the best open chat model available for us.

Make sure you use ample system prompting for it, as it was trained with system prompts in mind.

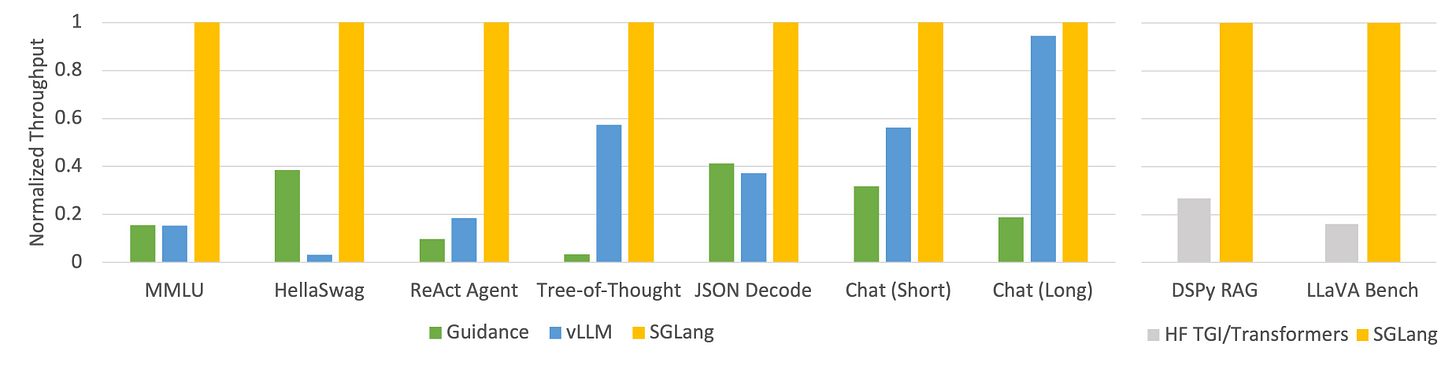

LMsys new inference 5x with SGLang & RadixAttention (Blog)

LMSys introduced SGLang, a new interface and runtime for improving the efficiency of large language model (LLM) inference. It claims to provide up to 5x faster inference speeds compared to existing systems like Guidance and vLLM.

SGLang was designed to better support complex LLM programs through features like control flow, prompting techniques, and external interaction. It co-designs the frontend language and backend runtime.

- On the backend, it proposes a new technique called RadixAttention to automatically handle various patterns of key-value cache reuse, improving performance.

- Early users like LLaVa reported SGLang providing significantly faster inference speeds in their applications compared to other options. The LMSys team released code on GitHub for others to try it out.

Big CO LLMs + APIs

Meta AI announcements (link)

These #BreakingNews came during our space, Mark Zuckerberg posted a video on Instagram saying that Llama3 is currently training, and will be open sourced!

He also said that Meta will have 350K (that’s not a typo, 350,000) H100 GPUs by end of the year, and a total of ~600,000 H100 equivalent compute power (including other GPUs) which is… 🤯 (and this is the reason why I had to give him double GPU rich hats)

Deepmind releases AlphaGeometry (blog)

Solving geometry at the Olympiad gold-medalist level with 100M synthetic examples

AlphaGeometry is an AI system developed by Google DeepMind that can solve complex geometry problems on par with human Olympiad gold medalists

It uses a "neuro-symbolic" approach, combining a neural language model with a symbolic deduction engine to leverage the strengths of both

The language model suggests useful geometric constructs to add to diagrams, guiding the deduction engine towards solutions

It was trained on over 100 million synthetic geometry examples generated from 1 billion random diagrams

On a benchmark of 30 official Olympiad problems, it solved 25 within time limits, similar to the average human medalist

OpenAI releases guidelines for upcoming elections. (Blog)

- OpenAI is taking steps to prevent their AI tools like DALL-E and ChatGPT from being abused or used to spread misinformation around elections

- They are refining usage policies for ChatGPT and enforcing limits on political campaigning, impersonating candidates, and discouraging voting

- OpenAI is working on technology to detect if images were generated by DALL-E and labeling AI-generated content for more transparency

- They are partnering with organizations in the US and other countries to provide users with authoritative voting information through ChatGPT

- OpenAI's goal is to balance the benefits of their AI while mitigating risks around election integrity and democratic processes

Microsoft announces copilot PRO

Microsoft announced new options for accessing Copilot, including Copilot Pro, a $20/month premium subscription that provides access to the latest AI models and enhanced image creation.

Copilot for Microsoft 365 is now generally available for small businesses with no user minimum, and available for additional business plans.

This weeks Buzz (What I learned with WandB this week)

Did you know that ThursdAI is not the FIRST podcast at Weights & Biases? (Shocking, I know!)

Lukas, our CEO, has been a long time host of the Gradient Dissent pod, and this week, we had two of the more prolific AI investors on as guests, Elad Gil and Sarah Guo.

It’s definitely worth a listen, it’s more of a standard 1:1 or sometimes 1:2 interview, so after you finish with ThursdAI, and seeking for more of a deep dive, definitely recommended to extend your knowledge.

AI Art & Diffusion

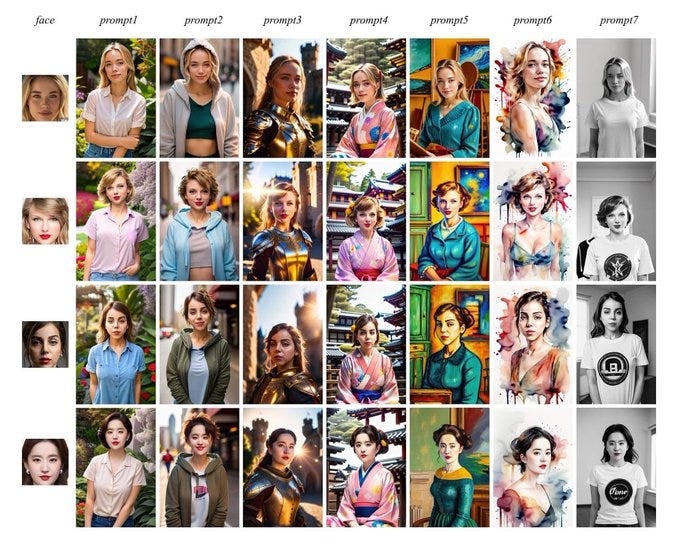

Zero shot face adapted image gen - 3 different tech approaches

What used to take ages, now takes seconds with 0 shot, there are quite a few approaches to generate images with real human faces, in 0 shot capacity, providing just a few faces. Gradio folks call it Zero-shot face-adapted image generation and there are 3 tools to generate those:

1⃣IPAdapter

2⃣PhotoMaker

3⃣InstantID

Here’s a great summary thread from Gradio folks for this fast advancing field! Remember we had to finetune on faces for a long time? Dreambooth and then LORAs, and now we have this exciting development.

Tools & Hardware

Rabbit R1 partners with Perplexity

The R1 device that was just announced, is about to sell through it’s first 50K in just a few days, which is remarkable. I definitely pre-ordered one, and can’t wait to get my hands on it. Jesse the founder has been all over X, getting incredible recognition, and after a few conversations with Aravind Srinivas, they agreed to make a deal right on X.

Today they hopped on a space and announced that all the first 100K early buyers of Rabbit are going to get a full year PRO subscription of Perplexity (one of the best AI search engines out there) for free! I sure as heck didn’t expect it, but the email was sent just a few minutes after the X space, and now guess who uses perplexity pro?

Here’s an example of a perplexity searching ThursdAI content (it doesn’t always get it right tho)!

I guess that’s it for today, as I’m writing this, there are incredible other stuff getting released, Codium open sourced AlphaCodium (here’s a link to the founder talking about it) but I didn’t have a second to dive into this, hopefully will bring Imatar to ThursdAI next time and chat about it!

Have a great weekend all 🫡 (please give us a good review on Apple Itunes, apparently it really helps discovery!)

Full Transcription for convenience:

[00:00:02] Alex Volkov: Hey everyone, happy Thursday. My name is Alex Volkov. I'm an AI evangelist with Weights Biases, and this is Thursday AI.

[00:00:13] Alex Volkov: We had such a great show today, over 1100 of you tuned in to the live recording, which is incredible.

[00:00:30] I also wanted to say that if you're not subscribed to thursdai.news newsletter, please go ahead and do because I send a full blog with the links to the show notes and to the speakers that we have on stage, and you should be able to follow up.

[00:00:46] Alex Volkov: There's a bunch of multimedia, like videos, that are not coming through in the audio only podcast format. So please subscribe to ThursdayEye. News as well. This live recording, we also hosted Maxime Lebon, who's a senior machine learning scientist with J.

[00:01:04] Alex Volkov: P. Morgan, and the author of several models, and Merged models, lately the Neural Beagle model that we've talked about. We had a great conversation with Maxime. And that full episode will be posted as a Sunday special evergreen content episode. So please stay tuned for that.

[00:01:29] Alex Volkov: It's been an incredibly illuminating conversation in the world of merging and merge kit and everything else that Maxim does and it was a super cool conversation. So that's coming soon.

[00:01:41] Alex Volkov: And, as I've been doing recently, the following is going to be a 7 minute segment, from the end of the live recording, summarizing everything we've talked about.

[00:01:54] Alex Volkov: I hope you've been enjoying these TLDR intros. Please let me know in the comments if this is something that's helpful to you.

[00:02:05] ThursdAI Jan18 TL;DR recap by Alex

[00:02:05] Alex Volkov: Alright we started with talking today, Thursday I, January 18th. We was talking about n News imis, the Mixt mixture fine tune that came out from Teo and the folks at News. It, it was of the first fine noon of mixture, the mixture of experts model from a mistral that came from the news research folks.

[00:02:35] Alex Volkov: And it released in two versions, the DPO only version SFT plus DPO version. Given different data sets they was trained on and actually different capabilities. It looks based on the community, the DPO version is like very well performing. I've been running this on my Macbook with LM studio and it really performs well.

[00:02:53] Alex Volkov: So shout out and folks should try this. This is By far the best, looks like the best new Hermes model based on just benchmarks. They're trained on the best open source model that's currently Mixtro. Mixtro is number 7th in the world based on LMCS Arena, and that's an open source model that we all get to use.

[00:03:10] Alex Volkov: Then we've covered the Neural Beagle 14. 7b from Maxim Le Bon. Maxim also joined us for a full interview that you can hear as part of the a podcast episode and Maxim released a Neural Beagle, which is a merge plus a DPO fine tune. And it's one of the top performing 7 billion parameters on the OpenLM leaderboard.

[00:03:30] Alex Volkov: When released in a few days ago, now it's fourth. So the speed with which things change is quite incredible. We then covered the LMSYS. SGLang attempt is a 5x performance inference bunch of techniques together on the front end and the back end called Radix attention on the back end and the SGLang way to run through inference code on the front end that combines into almost a 5x performance on inference.

[00:03:56] Alex Volkov: 5x is incredible Nistan mentioned that it does less than 5x on like longer sequences and then we had a conversation about Where it could improve significantly, which is agents and agents are sending short sequences. Alignment Labs told us that this could be significant improvement in that area.

[00:04:13] Alex Volkov: So our agents are about to run way faster. A 5x improvement is just incredible. And we also mentioned that at the same day when this was released, another Optimization was shouted out by Tim Ditmers from the Qlora fame called Marlin that also improves by 4x some significant inference techniques.

[00:04:34] Alex Volkov: And I wonder if those can be compiled together in some way. Quite impressive. We also covered neural magic doing spars, pacification and sparse. And we did in a deep dive into a short, deep dive. Thank you. Alignment and thank you Austin for what's spars, pacification means. And they do in this for like major models and they compress them with specification to around 50% sparsity.

[00:04:55] Alex Volkov: It's zeroing. Out the weights that you don't actually use. And it makes the models like significantly smaller. We covered Desilang a little bit. We didn't actually get to the diffusion. I'll just read out those updates as well. Then we covered the OpenAI had new guidelines for upcoming elections, and they're trying to add techniques for folks to identify daily generated images.

[00:05:18] Alex Volkov: And they're adding, restrictions to how their LLMs are used in the context of voter suppression, etc. We then talked about DeepMind and AlphaGeometry, where DeepMind released And open sourced looks like a model called Alpha Geometry that uses neuro symbolic approach with two models that solves geometry at almost a gold medal at the Olympiad level.

[00:05:42] Alex Volkov: So Geometry Olympiads and quite impressive this release from from DeepMind and shout out. It was trained on a hundred million synthetic data set sources. A source from like more than one billion. Or so random examples and it's quite impressive. So shout out DeepMind as well. We also briefly mentioned Samsung that has a Samsung S24, the flagship phone that now Apple is needed to compete with, that has AI everywhere, uses the new Qualcomm chip and has AI in.

[00:06:10] Alex Volkov: Pretty much summarization everywhere. There's like a button with the sparkles with AI. And one cool thing that we haven't mentioned, but I saw MKBHD on Twitter review is that they added real time translation of calls. So you can literally call some people with a different language and on device translation, after you download the model on device, we'll actually be able to translate this in real time.

[00:06:30] Alex Volkov: So you can read what the other person said in different language, but also hear it. And that's like quite cool. Then we had a deep interview with Maxim Lebon, the author of many things. Recently, we've talked about Fixtral or Fixtral, the mixture of experts of the five models. We've talked about merges.

[00:06:46] Alex Volkov: Maxim had a great explanation on, on, on his blog. And then on the Hug Face blog about what merges, what MergeKit does and how that. Plays into the whole ecosystem, the top LLM leaderboard now has been taken over by merges, specifically, likely because merging models does not require additional computer, additional training, and that's fairly easy to do with just the code merges takes and combines.

[00:07:11] Alex Volkov: With different, using different algorithms like SLURP and other algorithms it combines different models and different weights from different models, including potentially building models of novel sizes. So we've seen 10 billion parameter models, like 120 billion parameters so you can use those techniques to Combine models or merge models into different ways.

[00:07:31] Alex Volkov: There's also Frankenmerge that uses different models to combine into one. So we dove into that and what the inspiration for merging and what it actually does. Maxim also released like Lazy Merge Kit, which is a thin wrapper on top of the merge kit from Charles Goddard. So shout out to Charles.

[00:07:47] Alex Volkov: So we had a very interesting interview about merging and thank you, Maxim, for joining us. Definitely worth a listen as well. And then we had breaking news from BigZuck and the meta team that talked about he gave an update about the number of GPUs that they have. And by the end of this year, they're talking about 350, and overall 600, 000 H100s or equivalents of compute which they're going to use for AI and Metaverse.

[00:08:14] Alex Volkov: And Definitely a great update. They're training Lama 3 right now. The stuff that we didn't get to, but I wanted [00:08:20] to update, there's a, and I will add in show notes. There's a stable diffusion code that runs 100 percent in the browser with WebGPU and Diffusers. js, a thread from ClipDrop, the CEO Cyril Diagne.

[00:08:32] Alex Volkov: And there's also, we've talked about DeciEye, the company that releases a bunch of models. They release DeciDiffusion, a text to image model with only 370, the 300. Sorry, 732 million parameters. It's twice as fast and 61 percent cheaper than Stable Diffusion with the same image quality, so that's getting improved.

[00:08:51] Alex Volkov: But I think they're talking about Stable Diffusion 1. 4, so not SDXL or the new one. And Desi, I also released Desi Coder, and we also covered the Stable Diffusion Coder that is a coding model that runs closer on device, a 3 billion parameter model that beats Code Llama 7b. I think that's most of the stuff we talked about.

[00:09:09] Alex Volkov: And then one of the major things that Umesh brought we've talked about corporate drama, maybe a new segment in Thursday Eye where Microsoft, Did some things that actually disrupted workflows and companies actual products built on top of Microsoft, which is considerably not great and led to a fight.

[00:09:30] Alex Volkov: Hopefully not, but potentially a legal battle as well, and that's not something that should be done by a cloud provider such as Microsoft. Very ugly. In addition to this, we also talked about Microsoft announcing the CoPilot Pro that's now open for small businesses for 20 bucks a month with no minimum seats as well.

[00:09:46] Alex Volkov: And I think that's most of the things that we've mentioned

[00:09:49] Alex Volkov: Let's go.

[00:09:51] Sounds: to all of you.

[00:09:57] Alex Volkov: from, I guess

[00:09:59] Sounds: all of you. Namaskaram to

[00:10:07] Alex Volkov: 2024, we all need to get used to say 2024 at this point we have a bunch of AI news. My name is Alex Volkov, I'm an AI evangelist with Weights Biases, and I'm joined on stage here with dear friends, co hosts of Thursday AI. Podcast, newsletter, live X recording, community, I don't know, a bunch of other stuff as well.

[00:10:29] Alex Volkov: Nishten does paper readings, is a semi part of this as well. Welcome everyone. Welcome.

[00:10:33] Introduction to the Session's Structure

[00:10:33] Alex Volkov: I will just say a few things before we get started. So first of all, for those of you who are new, who are listening to this for the first time first of all, welcome.

[00:10:41] Alex Volkov: It's great that you have found us. Please DM me with like how you found us. I would love to know as I'm looking into the channels, et cetera. However, I will say that we've been here every week, pretty much at the same time. I don't think we've changed time since the summer. So 8.

[00:10:55] Alex Volkov: 30 AM Pacific and we try to do this every Thursday. I think we missed one or two. I was sick once, apologies. But other than that, we're here to talk about the AI every week. And what happens often is as we as we talk about things, different breaking news happened and folks announced different stuff on Thursday., and we cover pretty much everything. A very broad spectrum in AI changes. So I know there's like spaces to talk about diffusion, specifically art spaces as well. So we cover diffusion to an extent, but we try to focus on I guess our main focus is open source LLMs. We love those. We have a bunch of folks here on stage. They're training and fine tuning the greatest kind of open source models and definitely follow up on the different how should I say, different techniques, like the merging stuff that we're going to talk to at length later, and we, we hopefully get to hear about them first before they take over hug and face which was the case, I think with some of the models and some of the techniques.

[00:11:54] Alex Volkov: And I see two more folks joining us as well from different areas of the open source community. So I will say welcome LDJ and welcome alignment, LDJ. You've been missing in action. I was just saying, how are you, man? Welcome back.

[00:12:08] Luigi Daniele: Yeah, I'm doing good. Glad to be

[00:12:10] Alex Volkov: Yeah. And also we have Austin AKA Alignment Lab. What's up Austin?

[00:12:16] Alignment Lab: Oh, dude, I'm doing great. I was actually just in a call with LDJ and he was like, oh, Thursday Eye is starting and I was like, let's go.

[00:12:22] Alex Volkov: Yeah that's exactly what I like to hear that the calendar events is popping off and Thursday is starting.

[00:12:27] Open Source AI: Nous Hermes Mixtral Finetune + DPO deep dive

[00:12:27] Alex Volkov: So with that, I think it's time for the open source stuff.

[00:12:44] Sounds: Open Source AI, let's get it started.

[00:12:48] Alex Volkov: All right, so welcome to probably the biggest, the most fun, the most Contentful section of Thursday ai, where we talk about open source, LLMs and lms. I guess we should also start mentioning because a bunch of these models that we see are also multimodal, and I guess we'll start with.

[00:13:08] Alex Volkov: , News Hermes Fine Tune on Mixtral we've been waiting for this, Mixtral was released I want to say a month or so ago, a month and a half ago, and now we're getting one of the top kind of data sets and fine tunes trained on Mixtral, and we're getting this in multiple formats.

[00:13:25] Alex Volkov: Again, shout out Technium. If you guys don't follow Technium yet what are you even doing showing up on Thursday? I definitely give Technium a follow. But Mixtral fine tune is available and it comes in two variants and SFT and then DPO and SFT only. So SFT is a supervised fine tuning and DPO, direct preference optimization.

[00:13:45] Alex Volkov: This is like a, not a new technique, but definitely has been around for a while. Many people are using DPOs at this point. We've talked about DPO multiple times. I think we also saw, Nistan, correct me if I'm wrong, the actual mixtural instruct is also DPO, right? We saw this in the paper.

[00:14:00] Alex Volkov: So DPO is everywhere. And this is not the first time that the SFT and DPO pair is getting released separately. I think we've chatted with John Durbin who's, shoutout John, is in the audience. And that conversation is on the feed. So definitely check out the conversation with John.

[00:14:16] Alex Volkov: And the Bagel models were also released separately with SFT and the DPO version as well. And I think John back then mentioned that each one has Different different things it's good at. And I also would love to figure out which one of the new, Neus Ermis Mixtural Fine Tunes is best at what.

[00:14:33] Alex Volkov: Technium has a bunch of stuff in in, in the thread, so I'll link this below for examples. And I will say that the comparisons to Mixed Real Instruct. Technium posted a bunch of comparisons to Mixed Real Instruct. And it's interesting that not all of the benchmarks look like on improvements.

[00:14:51] Alex Volkov: There's a few, I think on GPT4ALL and HelloSwag. The base model, at least the non DPO base model, still wins just by a little bit. But everything else, like ARX, AGI, EVAL, and MMLU are significant improvements. And we're gonna probably continue to see those improvements. Shoutout. If you have tried it, please let me know.

[00:15:08] Alex Volkov: I will say this last thing, that finally, after setting up LM Studio again, shoutout to LM Studio we'll get to chat with LM Studio at one point. Hopefully soon, I am now, the first thing I do is download these models because it's super, super easy. Both of them, Studio and Allama, and there was a tiny, I think, quantization thing in the beginning, and now there isn't, and now it works great.

[00:15:33] Alex Volkov: And these models, I've loaded them up on my Mac before, before a flight. And I was just able to chat with this AI with no internet connection or like poorly internet connection. It was really something. I know we've talked about this multiple times. Hey, put this on a a thumb drive and then have all of human knowledge, quote unquote.

[00:15:51] Alex Volkov: I'm not really saying it's all human knowledge, but I've been actually able to do this before my flight and it was really cool.

[00:15:57] Alex Volkov: And I think the last thing to mention here is that Technium suggests to make liberal use of system prompts. So all of Hermes models, which is, there's now a bunch of Hermes models flying around, definitely the most. At least the famous one is Hermes, I think, 7B, but also the YI version, and this seems to beat the YI version as far as our friend Wolfram Raven, Wolfram Loco Lama tested.

[00:16:22] Alex Volkov: This is probably the best news model out of them all. So far, obviously it's based on the best. Open source model called Mixtro and definitely liberal use of system prompts. Yeah, roleplay is suggested setting expectations, specifications and everything else you can think of. Very easy to do with Elm Studio.

[00:16:39] Alex Volkov: I haven't [00:16:40] dove into like actually how to steer these models for exactly the task that I do. Luigi, you said LDJ, you said that you want to Tell me how to use LM studio in regards on this. So I would love to hear from you. First of all, have you had a chance to try these models specifically? And second of all let's talk about system prompts in LM studio a little bit, because I think it's a part that people are definitely missing.

[00:17:02] Luigi Daniele: Yeah. A lot of the latest models like Hermes and I think maybe Dolphin too, trained with system prompts. So if you really want to get the best use out of it definitely use that and it's just same thing with chat GPT really, where it's give instructions of how you maybe want to have it respond to you, or maybe add in a few threats of, of what you would do to the AI if it does not respond correctly, and so surprisingly that seems to actually sometimes.

[00:17:28] Luigi Daniele: Give good results, I personally try to always say please and thank you, but yeah yeah. And there's also prefix and suffixes, which I think I talked to you about, Alex,

[00:17:36] Alex Volkov: You briefly mentioned this, but maybe worth like a given a little bit of a heads up for folks.

[00:17:41] Luigi Daniele: yeah I think it really is worth maybe just a sit down and just a video with me and you actually going through it, because,

[00:17:47] Alex Volkov: Sure.

[00:17:47] Luigi Daniele: it's a decent amount to go through, but, yeah on the model card of most models, if you just look at something called prefix or suffix that is usually described in the model card, then You apply that to the LM Studio settings on the right panel in the chat settings.

[00:18:03] Luigi Daniele: And yeah, you just make sure you have those things right. If you don't, there's a good chance you're not actually using the model correctly. And it's not going to give you the best results.

[00:18:10] Alex Volkov: And they differ from the base model as well. Like we've seen like different base models have different things that you want to you want to add there. And you may getting like the same performance, but getting under performed a little bit. I'll also say for folks who are using Mac the Silicon, Apple Silicon, there's a little hidden checkbox there that I don't know if it's like, it's by default already.

[00:18:30] Alex Volkov: It's called use Apple Metal. And definitely make sure that's on for you. Significant improvement in performance and inference. All so I think NeuralRMS, anything else on folks here on stage that want to talk about this model and how it was trained and the difference in DPO? Folks, feel free to chime in.

[00:18:45] Alignment Lab: There's the cool thing about DPO is It's so it's a reinforcement learning technique. I don't know if anyone else has had a chance to read the paper about it, but essentially what occurred was that some researchers found that the, that transformers already have a baked in optimal reward function.

[00:19:03] Alignment Lab: And so what DPO is really doing is just training the model on that reward function, just biasing it towards the selected. Like good example when you give it a good and bad example pairs not directly unique to to the, to this model, but it is super interesting because it really opens up a whole bunch of possibilities for what you can do with the model now that you can give it negative examples and get more performance for it.

[00:19:27] Alex Volkov: DPO is ranking different outputs in terms of like preference, . So can you talk about the pairs stuff? Everybody says DPO pairs, like what do they mean by pairs? Could you say this about this?

[00:19:38] Alignment Lab: instead of training on like typically what you would do is you would build your data set. And that would be like your good data set. You'd have a weaker model that you, than the one that you use to synthesize the dataset or just bad examples of responses for every single example in the dataset.

[00:19:54] Alignment Lab: So if you have one that's like, how do I make a cup of tea? And then instructions about how to make a cup of tea, then you'd also have that paired with a negative example of, a response to how do I make a cup of tea? And then, the response is something else, like how to build a Lego house or whatever.

[00:20:08] Alignment Lab: And when you go to actually train it, you show it both at once, and you tell it which one is the positive and which one's the negative, and you just bias it towards the positive. It's quite similar, conceptually, to the way that OpenChat does the CRLFT training, although OpenChat actually has a specific token for the good and bad examples that it has weighted.

[00:20:34] Alignment Lab: But functionally, it's, the idea is the same. You're just doing reinforcement learning which lets you take data where you may have bad examples in there, and rather than having to remove them and waste data, you can now make a good example and get more out of it than you would have been by just replacing it.

[00:20:50] Alignment Lab: So it lets you recoup extra performance out of bad data.

[00:20:54] Alex Volkov: Thanks for the explanation. And definitely we've seen at least in my game plays with the bigger model and the DPO version of noose. RMS mixture this feels like the DPO at least behaves a little bit. Actually don't know how to attribute this to the technique or to the datasets, but it's really good.

[00:21:13] Alignment Lab: Yeah, we've noticed if we do a regular supervised fine tune first, like a just normal fine tuning, and then we DPO over that we, the models push just much further than either thing alone, too. I don't know if that's unilaterally true, because we do a fairly, specific kind of model when we make these big releases, but it seems, at least for the case of just general reasoning skill it helps a lot.

[00:21:37] Alex Volkov: Yeah, it's super cool. And I guess the downside of this, not the downside, but the outcome of some of this is that folks now have, folks who want to just use a model and are trying to maybe tune in to Thursday Eye to know which model is good to use, or maybe they're reading the local Lama stuff.

[00:21:53] Alex Volkov: There's now so many choices, including so many configurations. So maybe we should do Like a recap and also a simplification LDJ for like system messages and the prefixes alignment with DPO versus SFT. Just simplify and say, Hey folks, use this. Because right now there's so many, you can choose between quantization methods.

[00:22:11] Alex Volkov: There's at least four or five different ones for you to choose from. And LM studio says in a few of them, use this is recommended, but it says recommended for five, five different ones. There's different quantization providers as well, right? So the bloke is obviously the most familiar one,

[00:22:26] Alex Volkov: there's now a choice between DPO or SFT or DPO plus SFT, and We haven't even begun to talk about merges, which is coming as well. So there's a lot of choice and we need to simplify this for folks. So definitely just to simplify the Hermes models are usually very well behaved and great for role play as well.

[00:22:43] Alex Volkov: Try them out. If you have the room to run Mixtrl for your stuff, Mixtrl is definitely by far the best open source models that we have. Go ahead, Levent.

[00:22:52] Alignment Lab: Yeah, so Mixtrel is, that model is the architecture is very similar to a really old, comparatively old architecture that's been tried and true before. And so because of that, there's a lot of efficiencies that we just haven't integrated into the modern stack, but that will come.

[00:23:09] Alignment Lab: And there's a bunch of new ones that people have been making. And between the new quantization methods that you can do with Mixtro, because since it's sparse MOE, it doesn't actually, need all of its weights as much as it, as as each other. So some of them are, like, less important. It lets you quantize those quite a lot without actually hurting the model's performance very much.

[00:23:27] Alignment Lab: And you can also offload these layers when they're not being used. And then you can do like expert pre caching, where you predict some experts ahead of time, which lets you get faster inference speed. And at the end of the day, if the sort of quick sharp, which is like 2 bit quantization method continues to prove out that it's as performant as it claims, We could end up running Mixtro on 4 gigs of VRAM, like on a laptop.

[00:23:58] Alex Volkov: And

[00:23:59] Nisten Tahiraj: We will.

[00:24:00] Alex Volkov: we will.

[00:24:00] Nisten Tahiraj: it to perform a bit better.

[00:24:02] Alex Volkov: So I guess this takes us to the next, I'll go ahead and stand, and it's going to take us to the next optimization stuff.

[00:24:09] Nisten Tahiraj: We could definitely have it run on on 4 gigs. I've had it a little above 4. However, but the point is to have it run well. The quantization, it still makes it a little bit unfit for anything other than very short conversations. And we'll get it there.

[00:24:30] Alex Volkov: All right. So in this, in, in this

[00:24:32] Nisten Tahiraj: we'll have Mixtro under 4 gigs very soon and it'll be good.

[00:24:37] Nisten Tahiraj: Yes.

[00:24:37] Alex Volkov: And that's a promise. That's a promise.

[00:24:39] LMsys SGlang - increased inference by 5X

[00:24:39] Alex Volkov: So what happens is once you go and put those bigger models on slower hardware, which is possible you then wait painfully a long time for inference to actually happen. But this takes us to the next thing from the folks from LMSys. They released a fast and expressive LLM inference with Radix attention and SG Lang.

[00:24:59] Alex Volkov: So folks from [00:25:00] LMSys, if you guys remember from Models like Vicuna that took Lama and trained it on additional datasets. and NMSIS Arena and all these places like we definitely trust them at least with some of the evaluation stuff. I think, is MMLU also in NMSIS's area? Or at least they test on MMLU. They released a inference optimization kind of collection of techniques.

[00:25:24] Alex Volkov: I don't think it's one specific technique because there's like Radix attention. Yeah, go ahead.

[00:25:28] Alignment Lab: It's where all this was going in the first place between all these sort of different prompting programming frameworks and inference engines. What they've done is they built out the back end with the end goal of having an extremely controllable, steerable compiling system for programming outputs from a, from like an AI in the way, like a Pydantic or in the way that you would typically use sort of structured grammars and sampling techniques.

[00:25:58] Alignment Lab: And way more. It's hard to explain in, in summary in a way that's very easily grokkable without getting too technical but it's a combination of many things that we've been doing individually, which were always gonna be one big thing, they just saw it first and did it first, and now, when you're looking at it, it seems very obvious that this is probably how things should look going forward

[00:26:17] Alex Volkov: so let's actually talk about

[00:26:18] Bluetooth: overall, just a

[00:26:19] Alex Volkov: they have. Yeah, they propose like different co designing the backend runtime and the frontend language, which is like Alain said, a structured domain specific language embedded in Python to control the inference generation process. It's called domain specific language, DSLs.

[00:26:35] Alex Volkov: I, I think many folks have been using some of this. I think DS p Ys as well from is being like mentioned in the same breath. And then this language like executed in the interpreter code or in compiler code. And on the backend they have this radix attention technique for automatic and efficient KV cache reuse.

[00:26:53] Alex Volkov: I don't know if that's like instance like MOE specific or not yet, but definitely. The combination of those two plus the code that they've released shows just incredible results. Like folks, we live in an age, and we've talked about multiple of those techniques. We live in the age where somebody like this can come up and say, Hey here's an example of a set of techniques that if you use them, you get.

[00:27:12] Alex Volkov: 5x improvement on inference. In the same breath that we're saying, Hey, we're going to take Mixtrel and put it in 4GB, and we've seen this obviously with Stable Diffusion, which we're going to mention that runs fully in the browser, we're now seeing releases like this from a very reputable place. A collection of techniques that have been used to some extent by some folks, and now all under one roof, under one like GitHub.

[00:27:35] Alex Volkov: Thing that actually improves the inference by 5x on all of the major evaluations, at least that they've tested, that we always talk about. So 5x on MMLU and HelloSwag is significantly more performant, all these things. Quite impressive. One thing that I would definitely want to shout out is that the maintainer of Lava the LMM, the kind of the visual Lama, is definitely also replied and said that the execution of Lama is actually, of Lava, is actually written in the report itself.

[00:28:07] Alex Volkov: And it improves lava execution by 5x as well. And by execution, I mean like inference speed, basically. So without going like too much into Radix attention, because honestly, it's way too heavy for the space. It's quite incredible that we get, do we get stuff like this from like places like LMCS, specifically in the area of running smaller models, sorry, running bigger models with smaller hardware.

[00:28:33] Alex Volkov: Go ahead, Nissan.

[00:28:36] Nisten Tahiraj: I'll say something. So it does automate a lot of the tricks that people have been pulling, and it works great for large amounts of smaller prompts. Once you go to longer prompts, the benefit is not that much compared to VLLM. I think it felt like five or ten percent faster when it came to VLLM. So again, I haven't taken a very deep dive into it.

[00:29:01] Nisten Tahiraj: Just want to just make people aware that it's fantastic for smaller prompts and stuff. But for longer ones, you don't necessarily need to switch your whole stack to it. VLLM still works fine. Yeah, I think for if you're doing like what you would normally be doing with VLLM, which is like processing like large amounts of data or serving for just general purposes.

[00:29:24] Nisten Tahiraj: Probably, there's no need to switch your stack. I think for, specifically what it feels optimized for is Asian frameworks, in which you have many models communicating short strings back to each other. One model wearing many hats. And the optimizations just while we're on the topic, is crazy right now.

[00:29:43] Nisten Tahiraj: There's still three papers with major inference optimizations for MixedRole alone, as well as for VLLM, and that seem to compose everything pretty well. Having an alternative to VLM that's similarly. Performance is huge because VLM is a big bottleneck on a lot of stacks because of the way that it handles attention off on the CPU.

[00:30:00] Nisten Tahiraj: It feels a lot like when llama CPP got like offloading the same week that speculative decoding came out with hugging face transformers and. Everything just got a hundred times faster, like a half a year ago or so.

[00:30:12] Alex Volkov: Yeah, I would also it definitely felt like that day when LMS released the SG Lang optimization that we just now talking about I don't have a link for this, but also LES released from IST Austria. Released Marlin, which is a 4 bit, I think the way I know it's cool is that, Tim Dittmers from QLOR retweeted this and said this is a huge step forward.

[00:30:33] Alex Volkov: And Tim Dittmers is the guy who in KUDO mode, the codes, KUDO kernels, within like a night or something, planning for 3 months and then finishing. So I know that Tim Dittmers, when he says something is a huge deal, he probably Probably knows what's up. So Marlin released the same day that like the SGLang released and it's a linear kernel for LLM entrants with near ideal.

[00:30:53] Alex Volkov: 4x speedup up to batch sizes of 16 to 32 tokens. And they came out pretty much the same day yesterday on January 17th. So I'm going to add this in the show notes. So Marlin is also like an exciting optimization. And Nostia, I fully agree with you where we see these breakthroughs or collections of method that suddenly are finally collected in the same way.

[00:31:11] Alex Volkov: A bunch of papers that haven't, released code as well or haven't played with different things. And it's very exciting to see them Keep coming out, we're only at the beginning of this year. And I think to the second point that you just mentioned, with agent frameworks Specifically, RAG, Retrieval Augmented Generation this benefit is significant like you said, because the short strings back and forth, these agents communicate with each other.

[00:31:34] Alex Volkov: Last week we've talked with one such author from Cru AI, Cru specifically is an orchestration of different agents that do different tasks and coordinate and talk to each other and improving inference there. Many of them run on GPT 4 and I haven't fully gotten into how to do this yet, but SGLang also say that they're like LLM programming can actually work with various backends.

[00:31:55] Alex Volkov: So OpenAI as well and Tropic and Gemini and local models. That's very interesting if they actually improve OpenAI inference in Python. But DSPY RAG, so RAG on DSPYs from Omar Khattab is definitely mentioned in the SGLANG report. I know I'm throwing like a lot of a lot of acronyms at you guys.

[00:32:14] Alex Volkov: So SGLANG is the stuff we talk about as the That's the new language from LMCS org that speeds up some stuff. DSPY I haven't talked about yet, so we'll cover but one of the tasks on, on, on DSPY's RAG, so retrieval is mentioned that it gets like a significant boost. Like Nissen and Austin said, not necessarily for longer context prompts.

[00:32:35] Alex Volkov: 30, 000 tokens for summarization, maybe this technique that caches a bunch of. Stuff between calls is not going to be super helpful, but for fast execution of multiple things is definitely significant 5x. And like I think Lyman said, it's only the beginning of optimization cycles that we see, and it's quite exciting to to see them come out.

[00:32:56] Alex Volkov: I think we've covered two optimization techniques, SGLang, and then Marlin as well. I'll put a link to the show notes as well.

[00:33:03] NeuralMagic, compressing models with sparcification

[00:33:03] Alex Volkov: And I think now it's time to move to Yeah, one, one, one thing that we're going to chat about is neuromagic and I definitely focus on stage. Feel free to talk about neuromagic because I saw [00:33:20] somebody told me it's cool, but I have no idea how to even simplify this.

[00:33:23] Alex Volkov: So if you want us and you want to take a lead on this one, definitely feel free.

[00:33:28] Alignment Lab: Okay Neural Magic. This is actually the first conversation I think that me and LDJ both geeked out really hard on we were talking, because we were both the only people the other person knew who even knew about this company. Neuromagic has been making miracles in the corner for years.

[00:33:44] Alignment Lab: I first got interested in them because they had made a BERT model that was initially, it was nearly like I think a gig on your computer to run and, it spoke English perfectly well and all this other stuff. And they had compressed it to the point that the full model completely On your computer was like 15 megabytes and it, and what blew my mind was like, how does that even know English?

[00:34:06] Alignment Lab: And it's it was at like 96 percent the original accuracy, despite all of that. They specialize in these like optimization and compression techniques. And so what they do typically is they have a stack, which they wrote a paper about a while ago, which I'll post in the comments here.

[00:34:22] Alignment Lab: It's called Overt Surgeon, which is basically a process in which they have a teacher model. In a student model, in the student model they use distillation in the the more traditional sense than I think it's more commonly used now, where you're just training on a model's output, and they use the actual logits during they basically load both models in during the training run, and train the smaller model to behave like the larger model, and while they're doing that, they're also pruning it, which is, Essentially, you reduce the weights that are not getting used during training to zero, which lets your computer not have to calculate them, so it moves much faster.

[00:34:58] Alignment Lab: And then they also quantize, which is where you reduce the accuracy. Basically, without getting too technical, you're literally summarizing the parameters of the model, such that it's literally a smaller file. And they do this all at once, which takes the larger model, And compresses it into the student model that's starting out smaller, and then they're quantizing the student model and pruning it, so it's both running faster and literally getting smaller, and they can, as far as I'm aware, there's nobody who's even coming close as far as being able to compress a model so much and recently I think about two months ago we first saw that they're integrating transformers with Sparsify Alpha, which is now just out and it's called Sparsify on the GitHub.

[00:35:43] Alignment Lab: Totally check it out. You can make a tiny llama and do all that stuff to it and make it microscopic. It's amazing. And

[00:35:49] Alex Volkov: here, Austin, just real quick. So we've been talking about quantization for folks who are like not following the space look super closely. Let's say there's different quantization techniques in, and some of them create like small files, but the performance or like the accuracy, is getting lowered.

[00:36:03] Alex Volkov: How is Sparsification different from quantization, at least on the basic level. Are they compatible? Will they be used could you use both of them on the same file? What is this thing, sparsification?

[00:36:15] Alignment Lab: so in reality, probably if it were like more accessible of a tool, we would all likely just be doing both every single training run. But since there's always new quantization techniques, it doesn't make sense to. But with sparsification, the specific difference is rather than taking the same model and reducing the accuracy of its, the calculations, but making it smaller, the model's staying the same size physically on your drive, but you're reducing the weights that aren't getting used to to a zero value.

[00:36:50] Alignment Lab: And what that does is just means your, your GPU just has to do less calculations for the model to do inference and it makes it just much faster.

[00:36:59] Alex Volkov: All

[00:36:59] Nisten Tahiraj: Also, we for the next Baklava version, Neural Magic did make a A clip model for us. So shout out to them. They were able to cut down the size by from about four times smaller.

[00:37:14] Nisten Tahiraj: So we'll we'll have that out soon. And yeah, also for anybody else that. wants to learn about sparsity, just look up Nir Shavit on on YouTube. N I R S H A V I T. He's the OG MIT professor that pioneered sparsity and has a lot of videos out, and Neuromagic is his company. And yeah, it's looking really promising in the future because they can optimize at a deep level for CPU inference.

[00:37:45] Nisten Tahiraj: And it's not necessarily just quantization, it's also They are reducing the amount of unused weights. So yeah, expect to see a lot more stuff about sparsity from the GPU poor side of the spectrum, , because that's where the benefits are yet to be read.

[00:38:02] Nisten Tahiraj: Anyway, shout out to Neural magic as well.

[00:38:04] Alex Volkov: shout out to Neer Shovit and Neural Magic, it looks cool, and they just got into sparsifying fine tuned models as well, I think they sparsified some new models, and I don't know if they got to open chat yet, but I think some folks are waiting for PHY sparsification, definitely. The area of smaller models running on smaller hardware is advancing super, super fast.

[00:38:26] Star Coder from Stability AI - 3B coding model bearing CodeLLama

[00:38:26] Alex Volkov: Let's move on, folks, because we've been in the open source area for quite a while, and then we also need to get to our to the end of our conversations here and start doing deep dives. So StarCoder was released from Stability. A brief review here is a 3 billion parameter language model.

[00:38:41] Alex Volkov: From Stability AI it does code completion and obviously it runs offline cause it's a small model and you can run it. They claim it can run on MacBook Airs as well. And they say something like without GPU. Interesting. Accurate completion across 18 languages at level comparable to models twice their size.

[00:38:57] Alex Volkov: This is a Code Llama. Interesting comparison to Code Llama at this point, because we've seen a bunch of other models already beat, I think, Code Llama on different metrics. But people still compare themselves to the big dog. And it's very interesting. They use the multi stage process, pre training in natural language.

[00:39:15] Alex Volkov: fine tuning on code datasets to improve programming language performance. And it supports fill in the middle and expanded contact sizes compared to previous versions of stable coder. And I think, oh yeah the stable diffusion now has like a commercial membership plan because everybody's thinking about, okay how was.

[00:39:33] Alex Volkov: Table going to make money. So they have this membership where you can use their models. So it's not like fully open source. I think you can use this models commercially if you participate in this membership, otherwise you can use them for research. So stable quarter, check it out. I think it's new on, on hug and face.

[00:39:48] Alex Volkov: I think from today I believe,

[00:39:50] Discussion on Neural Beagle 7B & Model merging

[00:39:50] Alex Volkov: And I think the last thing that I want to chat about in open source just briefly is Neural Beagle 7B from Maxim who's in the audience and is going to come up hopefully in the interview in a few.

[00:39:59] Alex Volkov: Minutes, I want to say maybe 20 minutes, Maxim. Neural Beagle back when I added this to my notes, was the top performing 7 billion parameter fine tune in, in, in open source LLM leaderboard. It's no longer the top performing, it was definitely number 4, at least.

[00:40:14] Alex Volkov: And it's a merge plus a DPO, that's what I saw from Maxim, a merge of Actually interesting what it's a merge of, so let's go into the model card and check this out.

[00:40:24] Alex Volkov: But Maxim looks like have a bunch of models and Neural Beagle, the, this Neural Beagle 14, 7 billion parameters has an average of 60 on the, all the scores, 46 on AGI eval. And yeah, it's one of the top performing models and it's a merge of different things. And it already has a demo space that I'll link in the show notes as well.

[00:40:43] Insights on Lazy Merge Kit

[00:40:43] Alex Volkov: Yeah, it uses Lazy Merge Kit, which is a collab that Maxim also we're going to chat about and figure out what this means, what this merging thing means but definitely, I think that this model triggered one of the Nathan's in AI that says, Hey, I wanted to ignore this merge business for a while, but I guess I can't anymore because, merges is not to be ignored at this point.

[00:41:04] Alex Volkov: And this is a merge of the Wunna And distilled Markoro. Slurp. So which is also a merge. So if you guys hear me and you're like confused, like what are all these things mean? Hopefully we'll be able to clarify this one. Maxim. Maxim also had a tweet where there's now a collab where you can take a model like this and basically map out the genealogy of these models.

[00:41:25] Alex Volkov: What is based on what? And it's quite cool to see. And what else should I say about this model? I think that's pretty much it. It's very performative. I actually haven't had the chance to use this, but it's right up there and it's a merge model. There is, there's the [00:41:40] checkbox, like we said, in the open LLM leaderboards.

[00:41:42] Alex Volkov: If you don't want for some reason to see the merge models and we'll see like more trained models, you will uncheck that. But definitely the merge models are competing for the top of the LLM leaderboards right now. Haven't seen a lot of them on the LMCs arena, so it's going to be interesting to see how they treat the merge models.

[00:42:02] Alex Volkov: And I think that's most on open source, and we've given this corner almost 40 minutes, so I think it's time to move on a little bit here, folks. So I'll, yeah, I don't have breaking news here, so I'll just do this, a small transition so I can take a breath, haha.

[00:42:17] Sounds: Namaskaram to all of

[00:42:22] Deep mind to Alpha Geometry

[00:42:22] Alex Volkov: LMs and APIs, and I think the biggest player in this whole, Aparigraha, Niyama, Shaucha, Satya, Ashtanga, Yama, Ashtanga, Niyama Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, Ashtanga, is deep mind, deep mind released, A Nature article, which they always do, they always publish in Nature, this time the link to Nature article didn't really work but hopefully they fix it by now, and they released Alpha Geometry, so they released like a bunch of stuff, Alpha Fold, if you remember, Alpha Go Alpha Zero, they had a model that, that self trains to play anything, not only chess, or, not only Go, and now they've released Alpha Geometry, that solves geometry, almost a gold medal Level at the at the Olympiad level, so they have this this how should I say, this nice chart that says the previous state of the art on this Olympia Gold Medallist Standard gotten to ten problem solved there's like time limits. I'm not sure what the time limits are actually are. I don't have it in my notes. But you have to solve these like very like difficult geometry levels. Folks compete for the gold medals in this Olympiad. And alpha geometry now comes very close to the gold medalist standard.

[00:43:29] Alex Volkov: So the gold medalist is answers 25.9 problems solved, and alpha geometry now answers 25, and they claim that the previous state of the art answered 10, just 10. So they more than doubled and they're getting close to the Olympiad. I think I saw like a tweet from Nat Friedman or somebody. That says they would offer a 1, 000, 000 prize for somebody who solves the Geometry Olympiad at the Golden Medal, and now we're getting there.

[00:43:53] Alex Volkov: They use the newer symbolic approach and they combine all of them with a symbolic deduction engine to leverage the strength of both. Which some folks compare to thinking fast and slow, where you have system 1, system 2 thinking, or at least the outline system 1, system 2 thinking.

[00:44:09] Alex Volkov: In this case, this does actually help. They have the neuro symbolic approach. I think they use this, the neuro symbolic approach. I don't think I've seen this before. And I think the most interesting parts It was trained on over a hundred million synthetic geometry examples generated from one billion random diagrams.

[00:44:27] Alex Volkov: Completely, solely synthetic geometry examples. This whole data set for training of this model that beats Humans at Geometry, which was previously very difficult, is fully synthetic. And I think that's super cool. We only began this year, but definitely this is going to be the year where full synthetic datasets are going to rule.

[00:44:49] Alex Volkov: And Yeah. Opinions, folks here on stage. Have you read about this? What's interesting to you? I would love to hear folks kind of chime in on this as well, because I think it's like super cool and kudos for them to releasing this. Also, I saw somebody said, I think Bindu said that they released this open source, but I haven't seen anything.

[00:45:06] Alex Volkov: Definitely Luigi Go and then Nistan.

[00:45:09] Luigi Daniele: Yeah it's funny that you brought up Nat Friedman having that bet up. Because I remember that too, and now I'm thinking, I wonder if he'd be willing to give up like the million dollars or whatever the money is to DeepMind. Ha

[00:45:20] Luigi Daniele: was done by Google DeepMind, so that'd be funny.

[00:45:25] Nisten Tahiraj: How has Google not discovered AGI yet and fallen so behind?

[00:45:30] Nisten Tahiraj: This almost feels like an internal illness or something. Something's going on. Because yeah.

[00:45:40] Alignment Lab: I don't think that Google needs to compete is the thing. I just don't think they're incentivized to release anything into the space because they don't have to. There's really not anything here except money to lose for them.

[00:45:51] Alignment Lab: They already have all the data and stuff. Yeah, and back to the geometry problems, I can't wait to test this, if they release it, as to how it does when given really random, very long numbers. If it still solves the problem, then that, that will be extremely impressive. And yeah, I've done those Math Olympias with geometry questions and they're not easy at all.

[00:46:18] Alignment Lab: You have to picture stuff in 3D. 4D and whatever in your head. They're very tricky problems. So yeah this is pretty huge. That's all. Yeah.

[00:46:26] Alex Volkov: Quite, quite huge and kudos on them. Umesh, I think you actually found the source, right? I just

[00:46:32] Umesh Rajiani: Yeah so there is GitHub repo on Google DeepMind. So if you go to Google DeepMind on GitHub and then alpha geometry, you can find the code repo for that. So Nistan, if you want to test it out, it's there for you. So I'm taking your

[00:46:47] Alex Volkov: hark on this just like for a little bit. Did Google release code for us finally? Did Google like open source something? Welcome back, Google.

[00:46:54] Umesh Rajiani: yeah, so this is like first release kind of thing, coming out of Google. So it's going to be, yeah, it is quite quite interesting.

[00:47:01] Alex Volkov: Definitely moves us towards like more generalist

[00:47:04] Bluetooth: I'll have it up in a sec.

[00:47:05] Alex Volkov: Yeah, listen, please put this and we'll add this to the show notes as well. Definitely the question, how have they not solved AGI yet? Solving math at the Olympiad level seems like moving us forward, definitely. This neuro symbolic approach where they combine language models with a symbolic deduction engine, which I have no idea what symbolic deduction means in this case.

[00:47:24] Alex Volkov: But leveraging strength of both, this seems like going towards the right path. We've seen, I think Similar things with vision as well, where you combine kind of vision heads into one model they can understand. I don't think this model was multi modal at all. Doesn't look like, but maybe I'm wrong here.

[00:47:42] Alex Volkov: And I think Yeah, the solutions for this thing is verifiable by machines. I saw this one tweet that will go down in history. Somebody said, computers has always been good for calculations. So I don't understand the big deal there, here. And I think I think it's really funny to like, keep this tweet behind the scenes.

[00:48:04] Alex Volkov: Alright, so shout out to DeepMind for this fairly incredible release. Hopefully some of the techniques they used will be then used by folks in other areas as well to get us AIs that are significantly better at the geometry and different things. Oh yeah, Umesh, just before, before we continue, you want to talk about this NeuroSymbolic thing? Cause we've talked about this. I think Daniel Jeffries talked about this last time we've talked about Rabbit.

[00:48:27] Alex Volkov: If you guys remember, this was at the end of the last space and we've talked about Rabbit LAM, Large Action Model. And Umesh, you just mentioned something that they also use NeuroSymbolic to an extent, right?

[00:48:39] Umesh Rajiani: Yeah, so the LAM Large Action Model, basically based on Neuro Symbolic Programming for when, specifically when they are talking about training the model from the actions that you're going to perform is basically they are encoding Neuro Symbolic Programming to train the model or capture the actions, basically.

[00:48:55] Umesh Rajiani: So that's what we're trying to do. Namaste. In theory, they are saying we have to see what comes out in practice.

[00:48:59] Alex Volkov: Yeah, and based at least on their examples, it looks like very compelling and potentially like being able to solve a bunch of stuff or like to remember based on your actions. So neuro symbolic not a new approach. I apologize. I will edit this. Definitely Rabbit said this, you're right and hopefully we're going to get to see this lamb thing.

[00:49:19] Alex Volkov: So back to OpenAI as elections are happening right now and everybody was fearing like, Hey, what's going to happen with deepfakes, et cetera. OpenAI released their guidelines toward election, as they prepare for elections, obviously, they're aware that they're happening. And I think the few interesting things there that they're taking steps to prevent their tools like Dalai and Shajipati from being abused.

[00:49:38] Alex Volkov: I don't know. We have open source, so I don't know if folks will go to the GPT 4 to generate let's say, propaganda. But DALI, for example, starts to integrate some cryptography to their images, which is very interesting. Cryptography solutions, which, again, In case you download the actual file and then send it, could be a thing.

[00:49:58] Alex Volkov: But I don't know if [00:50:00] somebody takes a screenshot of a Dalit generation, if that will apply at all. There are definitely like usage policies for like stuff like Chajapati enforcing limits on political campaigning and impersonating candidates and discouraging voting. And then they want to run ahead of what happened with Facebook and Cambridge Analytica, and like all these things they want to get ahead of us which, it makes sense.

[00:50:18] Alex Volkov: So the technology they use to detect images were generated by DALI I haven't seen any release on them that says, Hey, we'll build a tool for you to actually identify if those are generated images or not. It's going to be interesting because like with LLM writing all of these tools that you use to like dump AI text in there, they're all can be obscured with another LLM.

[00:50:38] Alex Volkov: I don't know if it's a futile attempt, but definitely a worthwhile one. And at least in the basic UI, I think blocking some attempts of destabilizing democracy, I think it's a good idea. And I think that's mostly it. I think there's one different mention that somehow silently they removed where the terms and conditions thing where their outputs is not to be used for war or weapon developing.

[00:51:04] Alex Volkov: And I think they removed that and I think they're also like signed something with Department of Defense, but I think that's all for OpenAI.

[00:51:11] Microsoft announces CoPilot pro

[00:51:11] Alex Volkov: And then I wanted to mention about Microsoft and Umesh, definitely feel free to chime in here as well, because the underlines the benefit for open source, but quickly Microsoft announced Copilot, we've talked about Copilot, the kind of previously BingChat, Copilot everywhere.

[00:51:25] Alex Volkov: So they've announced like different paid plans for Copilot Pro, 20 bucks a month premium, and then it does. Enhanced image creation, where we don't even get We don't even get in, in, in Dali like by default, and it's now generally available for small businesses with no user minimum. So if you guys remember, we've talked about Copilot before when Microsoft announced it for large enterprises it integrates into Microsoft 365 everywhere.

[00:51:49] Alex Volkov: And now the Copilots are also open for smaller businesses. And soon there's going to be like this Copilot Studio to build custom GPTs. Very cool for small businesses. We'll see how much actually folks will use this. And there's also some Microsoft Saga that they've changed some stuff in their pipeline.

[00:52:04] Corporate Drama - Microsoft Azure changing moderation flows and breaking products

[00:52:04] Alex Volkov: So Umesh, you mentioned this in the beginning. We'd love to hear from you what's been going on as you guys are big Azure users through Microsoft.

[00:52:11] Umesh Rajiani: Ooh happened

[00:52:15] Umesh Rajiani: day before yesterday. Actually, we got a call from one of our clients, which is one of the, one of a very big financial institution. And we have a deterministic pipeline, which was constructed using Azure studio, in fact. And we work together with very core Microsoft team actually to make sure that it is right.

[00:52:36] Umesh Rajiani: properly deterministic because there are some legal implications and everything. And and then the tool started failing and because we had some function calling, which would actually go into the knowledge base of the company. And that function calling was was getting extracted, getting triggered using what you call the deterministic intent from user's prompts, basically.

[00:52:56] Umesh Rajiani: And and that entire function calling was failing. Now, we carried out all types of work and everything it was very frantic because it was a front end tool and it started having some impact. And it was, remember, it was working for six months. So it's it worked without any problems for six months and suddenly it just stops working.

[00:53:14] Umesh Rajiani: And the reason was that there were two words that were in the definition of The tool, so that definition of tool was actually informing the pipeline what the tool is all about and that's how the tool was getting invoked and those two words basically were getting flagged into The OpenAI API.

[00:53:32] Umesh Rajiani: So we're basically Azure OpenAI API, not OpenAI's direct API. We are routing it through Azure and it's a separate separate instance of of GPT 4 and there are separate guidelines. They mimic some of the guidelines that are there in OpenAI, but Microsoft has its own guidelines and they change the guidelines without actually informing the clients. That basically triggered. Yeah. So we literally we literally had legal people and literally had fight. It was an open fight, literally, with Microsoft. If you were in that room, you would have you would have seen. It was really bad. And and then eventually there were talks about cases and stuff like that.

[00:54:08] Umesh Rajiani: And eventually, basically actually this company is actually modifying the contract with Microsoft. Where Microsoft will be liable to inform the company before they change any kind of guidelines. And you know what happened after that is, is the beauty because in the beginning of my startup, like beginning of the year, we implemented some solutions where we have a direct contract with Microsoft And we have implemented solution on the backing of those contracts.

[00:54:34] Umesh Rajiani: So in last two days, actually, I've gone back to those clients with whom we have implemented solutions so that they have a direct contract with Microsoft, because we don't want to be a party involved as far as the SLAs are concerned, because this is very dangerous if you're developing solutions for.

[00:54:49] Umesh Rajiani: For people and and if the core solution through which you are driving the entire application pipeline is getting changed without any kind of data contract backing, so to say. Yeah, this is a great learning for us and I've been always a proponent of. Open source solutions, and I think this has given one more kind of a booster to us because now we can go back to the new clients and say, Hey, guys if possible, if we give you the kind of solution that you're looking for, then let's go to open source solution rather than going for a closed source solution.

[00:55:20] Umesh Rajiani: So

[00:55:20] Alex Volkov: And this is like a huge, yeah, a huge like reason why, right? Getting, it's very interesting, like in this area we mentioned, definitely feel free to chime in on this a little bit more. The outputs of LLMs are usually non deterministic. And so this has to be built into understanding when you build tools on top of this.

[00:55:36] Alex Volkov: But this is not that. This is them adding not like a different model or something like a different that you can switch. They're adding something in between or some like policy thing without announcing this to the customers. And supposedly if you go to Azure instead of OpenAI, for example, you would go for the most stability as underlined by the fact that when OpenAI had downtime after Dev Day, Microsoft Azure, GPT for like endpoints, they were all fine.

[00:56:02] Alex Volkov: They were all green, right? So supposedly you would go for the stability and kind of the kind of the corporate backing. There's also like different ISO things and HIPAA compliances, like all these things that Microsoft Azure like proposes on top of OpenAI. But here we have a case where like underlines how.

[00:56:17] Alex Volkov: How important open models that you host yourself are, even if you host them, like maybe on Azure as well, because then nobody can change the moderation endpoints for you and suddenly decide that a few words in your prompt are not, to be used anymore.

[00:56:32] Umesh Rajiani: Yeah, but Alex this had nothing to do with the prompt, actually. It was actually the definition of the function that was there. And the key is like I would draw an analogy to what you call the data contracts. I don't know how many people are aware of data contracts, but when you have.

[00:56:47] Umesh Rajiani: Ownership of data within a very large organization, let's say 20, 000, 30, 000 people up you have data contracts where the data originates from a particular source and some other division is using that data. So you have a contract between those two and that data contract details the data definitions which are there and the contract sign, the signatory of the contract is responsible to ensure that if they change any kind of data structure or data definition.

[00:57:14] Umesh Rajiani: Then the receiver of the data or the client of the data contract is supposed to be informed. That is a part of your data contract. And that's how these large organizations function. And what we need is that kind of a framework where you have a data contract with the service provider.

[00:57:30] Umesh Rajiani: So even if you're going with an open source solution, and if your open source solution is hosted by someone, Then you need to have that kind of a contract in place. So it's not just that open source solution is a solution for everything. It's about the person who is providing the inference. So if you are controlling the inference, then you are secure because you are not going to make the changes without, understanding the repercussions of those changes.

[00:57:52] Umesh Rajiani: But if you are let's say hosting open source model on Amazon Bedrock, for example. And if they have a system prompt that lies in front of your prompt that goes to the the model, then you have to make sure that Amazon adheres to their responsibility in terms of giving you the required inference.

[00:58:12] Alex Volkov: Absolutely. Thanks for giving us the, first of all, like it's, it sucks that it happens and hopefully now Microsoft, like you said, they [00:58:20] changed their their approach here. Aniston, go ahead if you want to follow up.

[00:58:26] Nisten Tahiraj: Yeah. So for us, this has been amazing. I already have clients lining up to pay for the Baclav API. So I'll just say that first before it's even out. However It is extremely unfortunate for those that built, let's say, apps in a hospital or for a therapist because now those kinds of applications just had a moderation engine added, and they added apparently for their safety, and now whoever was relying on these applications, now they just stop working.

[00:59:02] Nisten Tahiraj: Out of nowhere, and this is an extremely immature thing to do this is something you expect from like a random startup with kids, not from freaking Microsoft, and it is pretty worrisome that this safety hysteria has gotten to the point where You're literally just breaking medical applications in production without modifying, without notifying people.

[00:59:27] Nisten Tahiraj: That's just, you lost people's trust now. You're not going to gain that back for a couple of years. And I hope they realize and don't do this again. Don't break production and make changes. To people in Prad that are relying on this for like SOC 2 or as in the case of UMass that have signed service level agreements.

[00:59:49] Nisten Tahiraj: Because now those people lose all their money if they don't, if they don't provide the service. And it's really bad. That's all I have to say. It's pretty bad.

[00:59:58] Alex Volkov: Yep. Very bad look from Microsoft. Even I think I remember like not entirely OpenAI, when they talked about Sunsetting some models and there was like a developer outcry that said, Hey, like we use those, we haven't had time to change how we work with different prompts, et cetera, for the newer models.

[01:00:15] Alex Volkov: And so OpenAI actually went back and said, Hey, we heard you and we're going to release we're going to deprecate deprecation is going to be pre announced in advance. It's going to be way longer Omesh let's yeah, let's go ahead.

[01:00:27] Umesh Rajiani: Yeah, very quickly I think you have raised a very valid point, Alex, that I think all the models that they actually put out of service, they actually should make them open source. I think that's the best solution.

[01:00:39] Alex Volkov: Nah, I wish this was the case. We're still waiting for potentially like open source GPT 2. 5. We haven't seen any open sources from OpenAI for a while. Besides like some GitHub code, I agree with you. There should be a way for folks to keep doing this, the same exact thing they're doing.

[01:00:52] Alex Volkov: I don't know, in my example, I use Whisper, no matter like what their API really says, what it's like, what they deem inappropriate to translate, the Whisper that I use is hosted and it will be the same version until I decide basically and test everything. All right, folks, we're moving forward, I think, just quickly.

[01:01:10] Alex Volkov: There's not a lot of stuff in the vision area. I will mention briefly we've been here for more than an hour. So I'll definitely like recap the space a little bit. If you're joining, let me just play the music and then I'll recap and then we'll get into the interview. So with with Hour 15, you're listening to Thursday Eye. Those of you who just joined us, welcome. If you haven't been here before, this is a weekly space all about AI, open source, as our friend of the pod, Jan, just tweeted out, everybody and everybody in LLM space and open source is in here, and very great to see.

[01:01:45] Alex Volkov: We've covered open source stuff, we've covered corporate drama right now, and then we're moving on to an interview. Thank you.

[01:01:53] This weeks Buzz from Weights & Biases

[01:01:53] Alex Volkov: And then we're going to talk about AI, art, and diffusion, if we're going to have time at the end of this. There's a brief mention that I want to say, but basically, let me just reintroduce myself.

[01:02:01] Alex Volkov: My name is Alex Volkov. I'm the AI Evangelist with Weights Biases. And we have a small segment here for Weights Biases that I want to choose to just bring. I just came back a few days ago from San Francisco Hackathon, the WeHub sponsor with TogetherAI and LengChain. It was a pretty cool hackathon.