Holy SH*T,

These two words were said on this episode multiple times, way more than ever before I'd say, because we got 2 incredibly exciting breaking news announcements in a very short amount of time (a span of 3 hours). The OpenAI announcement came as we were recording the space, so you'll get to hear our live reaction to this insanity.

We also had 3 deep-dives, which I am posting in this week's episode. We chatted with Yi Tay and Max Bain from Reka, which trained and released a few new foundational multimodal models this week, and with Dome and Pablo from Stability, who released a new diffusion model called Stable Cascade. Finally, I had a great time hanging with Swyx (from Latent Space) and got a chance to turn the microphone back at him for a conversation about Swyx's background, Latent Space, and AI Engineer.

I was also very happy to be in SF today of all days, as my day is not over yet: there's still an event which we co-host together with A16Z, folks from Nous Research, Ollama and a bunch of other great folks. Just look at all these logos! Open Source FTW 👏

TL;DR of all topics covered:

Breaking AI News

🔥 OpenAI releases SORA - text to video generation (Sora Blogpost with examples)

🔥 Google teases Gemini 1.5 with a whopping 1 MILLION tokens context window (X, Blog)

Open Source LLMs

Big CO LLMs + APIs

Andrej Karpathy leaves OpenAI (Announcement)

OpenAI adds memory to ChatGPT (X)

This week's Buzz (What I learned at WandB this week)

We launched a new course with Hamel Husain on enterprise model management (Course)

Vision & Video

Reka releases Reka-Flash, 21B & Reka Edge MM models (Blog, Demo)

Voice & Audio

WhisperKit runs on WatchOS now! (X)

AI Art & Diffusion & 3D

Tools & Others

Goody2ai - A very good and aligned AI that does NOT want to break the rules (try it)

🔥 Let's start with Breaking News (in the order of how they happened)

Google teases Gemini 1.5 with a whopping 1M context window

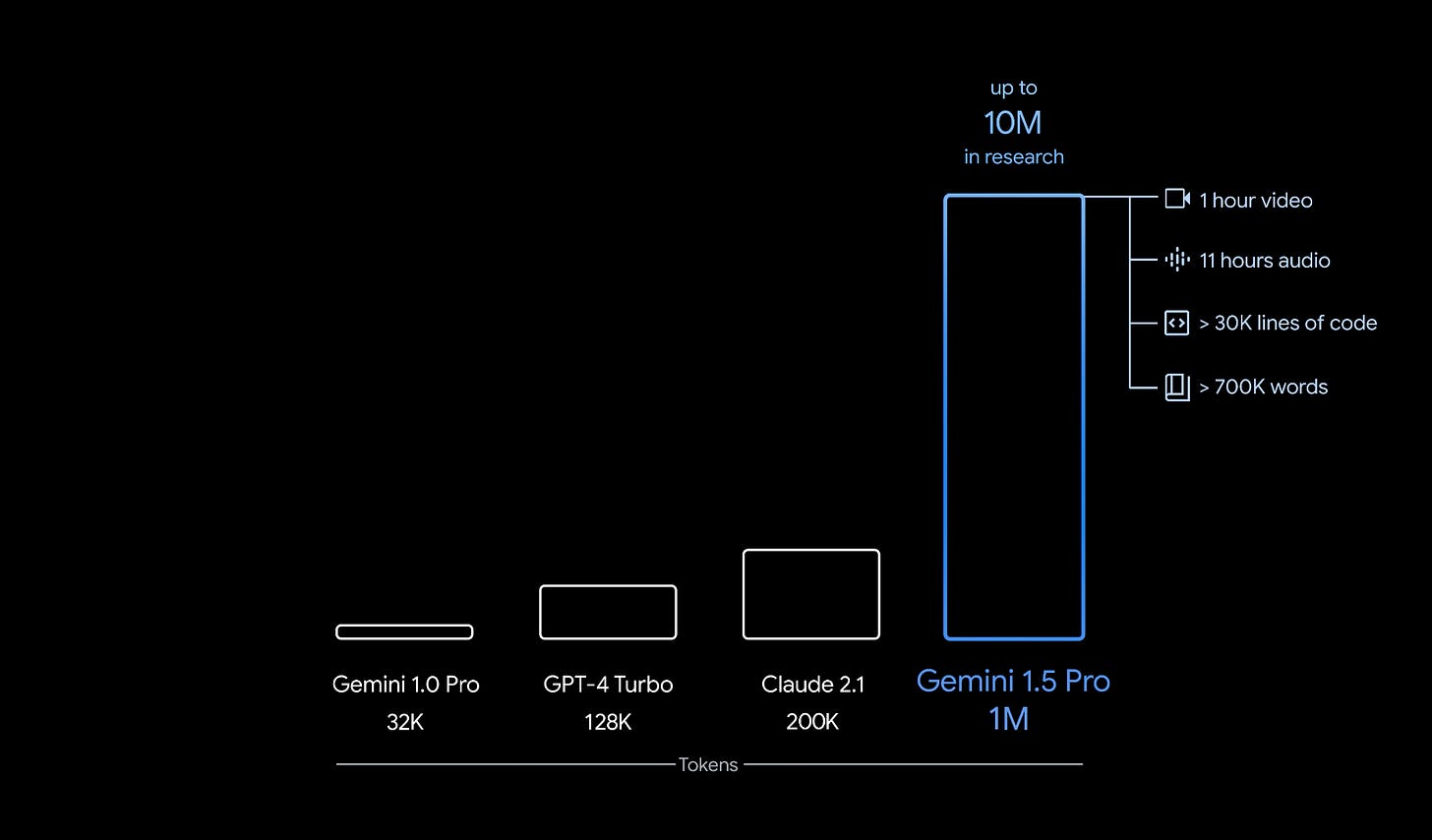

This morning, Jeff Dean released a thread full of crazy multimodal examples of their new Gemini 1.5 model, which can handle up to 1M tokens in the context window. The closest model so far was Claude 2.1 with 200K tokens, and that was not multimodal. They also claim they are researching up to 10M tokens in the context window.

The thread was chock full of great examples, some of which highlighted the multimodality of this incredible model, like being able to pinpoint and give a timestamp of an exact moment in an hour-long movie, just by getting a sketch as input. This honestly blew me away. They were able to use the incredibly large context window, break down the WHOLE 1 hour movie into frames and provide additional text tokens on top of it, and the model had near perfect recall.

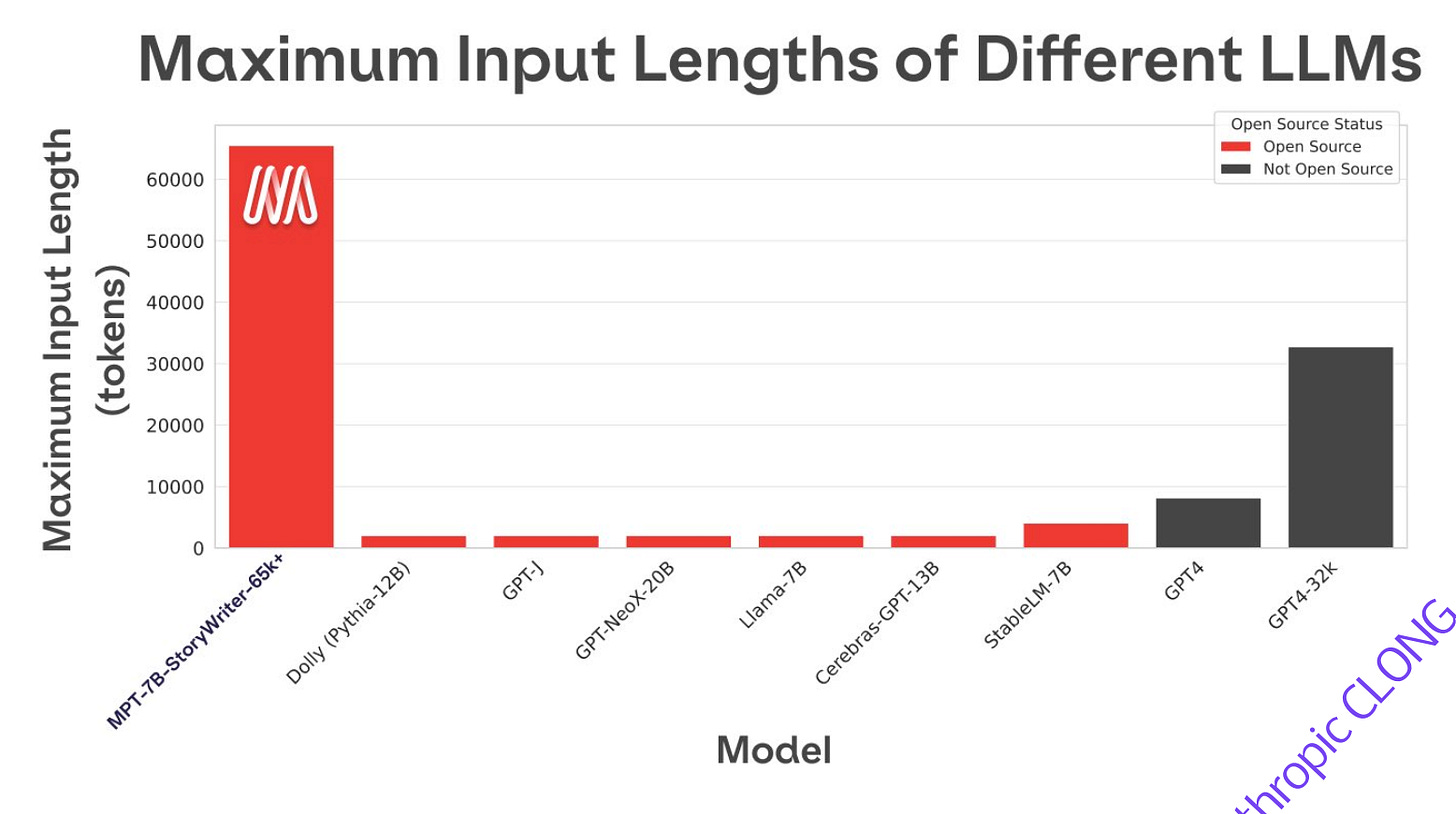

They used Greg Kamradt's needle in a haystack analysis on text, video and audio and showed incredible, near-perfect recall, which highlights how much advancement we got in the area of context windows. Just for reference, less than a year ago, we had this chart from Mosaic when they released MPT. That chart's Y axis is at 60K, the graph above is at 1 MILLION, and we're less than a year apart. Not only that, Gemini Pro 1.5 is also multimodal.
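For anyone who hasn't seen a needle in a haystack test up close, here is a minimal sketch of the idea: hide one fact at different depths of filler text of different lengths, ask for it back, and record hits and misses. Everything named here is an assumption for illustration (the filler, the passphrase, the `run_grid` helper), and `toy_model` is a hypothetical stand-in for a real LLM call that only "sees" the last 2,000 characters. It is not Greg's harness or Google's evaluation.

```python
from typing import Callable, Dict, List, Tuple

FILLER = "The quick brown fox jumps over the lazy dog. "
NEEDLE = "The secret passphrase is 'blue-harbor-42'. "
QUESTION = "What is the secret passphrase?"
EXPECTED = "blue-harbor-42"


def build_haystack(total_sentences: int, depth: float) -> str:
    """Insert the needle at a relative depth (0.0 = start, 1.0 = end) of the filler."""
    position = round(depth * total_sentences)
    sentences = [FILLER] * total_sentences
    sentences.insert(position, NEEDLE)
    return "".join(sentences)


def run_grid(
    ask_model: Callable[[str], str],
    lengths: List[int],
    depths: List[float],
) -> Dict[Tuple[int, float], bool]:
    """Return {(length, depth): recalled?} for every combination in the grid."""
    results: Dict[Tuple[int, float], bool] = {}
    for n in lengths:
        for d in depths:
            prompt = build_haystack(n, d) + "\n\n" + QUESTION
            results[(n, d)] = EXPECTED in ask_model(prompt)
    return results


def toy_model(prompt: str) -> str:
    """Hypothetical model with a tiny context: it only reads the last 2,000 characters."""
    window = prompt[-2000:]
    marker = "passphrase is '"
    start = window.find(marker)
    if start == -1:
        return "I don't know."
    start += len(marker)
    return window[start:window.find("'", start)]


if __name__ == "__main__":
    grid = run_grid(toy_model, lengths=[10, 200], depths=[0.0, 0.5, 1.0])
    for (length, depth), hit in sorted(grid.items()):
        print(f"sentences={length:4d} depth={depth:.1f} recalled={hit}")
```

Swap `toy_model` for a function that calls a real model and plot the grid as a heatmap, and you get the familiar green and red recall charts.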

I got to give props to the Gemini team, this is quite a huge leap for them, and for the rest of the industry, this is a significant jump in what users will expect going forward! No longer will we be told "hey, your context is too long" 🤞

A friend of the pod, Enrico Shipolle, joined the stage (you may remember him from our deep dive into extending the Llama context window to 128K) and showed that a bunch of new research makes all this possible for open source as well, so we're waiting for OSS to catch up to the big G.

I will sum up with this, Google is the big dog here, they invented transformers, they worked on this for a long time, and it's amazing to see them show up like this, like they used to do, and blow us away! Kudos 👏

OpenAI teases SORA - a new giant leap in text to video generation

You know what? I will not write any analysis, I will just post a link to the blogpost and upload some videos that the fine folks at OpenAI just started releasing out of the blue.

You can see a ton more videos on Sam's Twitter and on the official SORA website.

Honestly I was so impressed with all of them, that I downloaded a bunch and edited them all into the trailer for the show!

Open Source LLMs

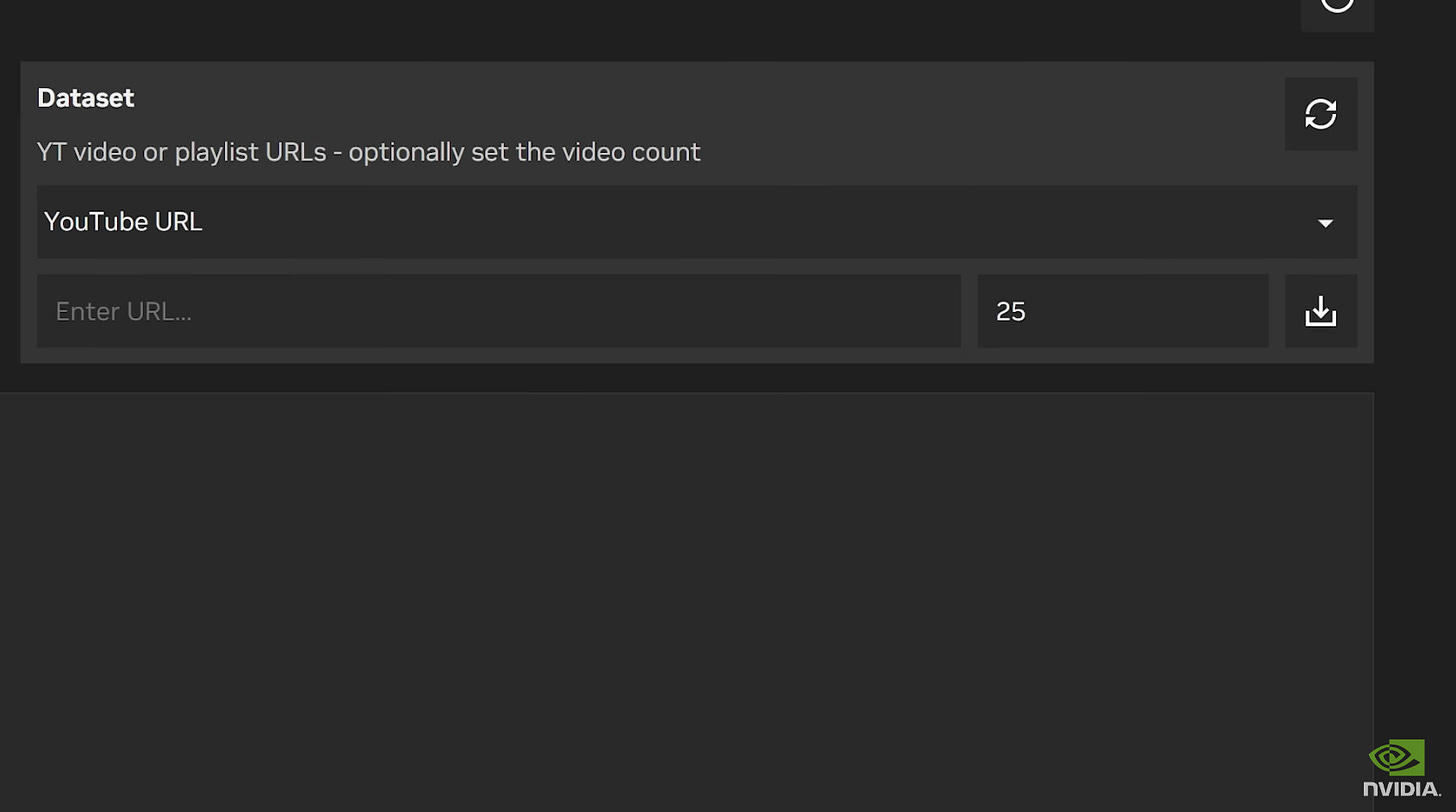

Nvidia releases Chat With RTX

Chat With Notes, Documents, and Video

Using a Gradio interface and packing 2 local models, Nvidia releases a bundle of packaged open source AI, including RAG and even chat with YouTube transcriptions!

Chat with RTX supports various file formats, including text, pdf, doc/docx, and xml. Simply point the application at the folder containing your files and it'll load them into the library in a matter of seconds. Additionally, you can provide the url of a YouTube playlist and the app will load the transcriptions of the videos in the playlist, enabling you to query the content they cover.

Chat for Developers

The Chat with RTX tech demo is built from the TensorRT-LLM RAG developer reference project available from GitHub. Developers can use that reference to develop and deploy their own RAG-based applications for RTX, accelerated by TensorRT-LLM.

This week's Buzz (What I learned with WandB this week)

We just released a new course! Hamel Husain released a course on enterprise model management!

Course name: Enterprise Model Management

Course Link: wandb.me/emm-course

Who is this for: The course is targeted at enterprise ML practitioners working with models: MLOps engineers, ML team leaders, ML engineers. It shows, at both a conceptual and a technical level, how to get the most value out of W&B Model Registry and automations. Attached is also a screenshot of a slide from the course on what different personas (MLOps, ML exec etc) get from Model Registry.

What can they expect: Learn how to store, version, and evaluate models like top enterprise companies today, using an LLM training & evaluation example. Big value props: improved compliance, collaboration, and disciplined model development.

Vision & Video

Reka releases Reka Flash and Reka Edge multimodal models

Reka, co-founded by Yi Tay (previously from DeepMind), trained and released 2 foundational multimodal models. I tried them and was blown away by the ability of the models to not only understand text and perform VERY well on metrics (73.5 MMLU / 65.2 on HumanEval) but also boast incredible (honestly, never before seen by me) multimodal capabilities, including understanding video!

Here's a thread of me getting my head continuously blown away by the quality of the tonality of this multimodality (sorry...😅)

I uploaded a bunch of video examples and was blown away: it understands tonality (with the dive dive Diiiiive example), understands scene boundaries, and does incredible OCR between scenes (the Jason/Alex example from speakers).

AI Art & Diffusion

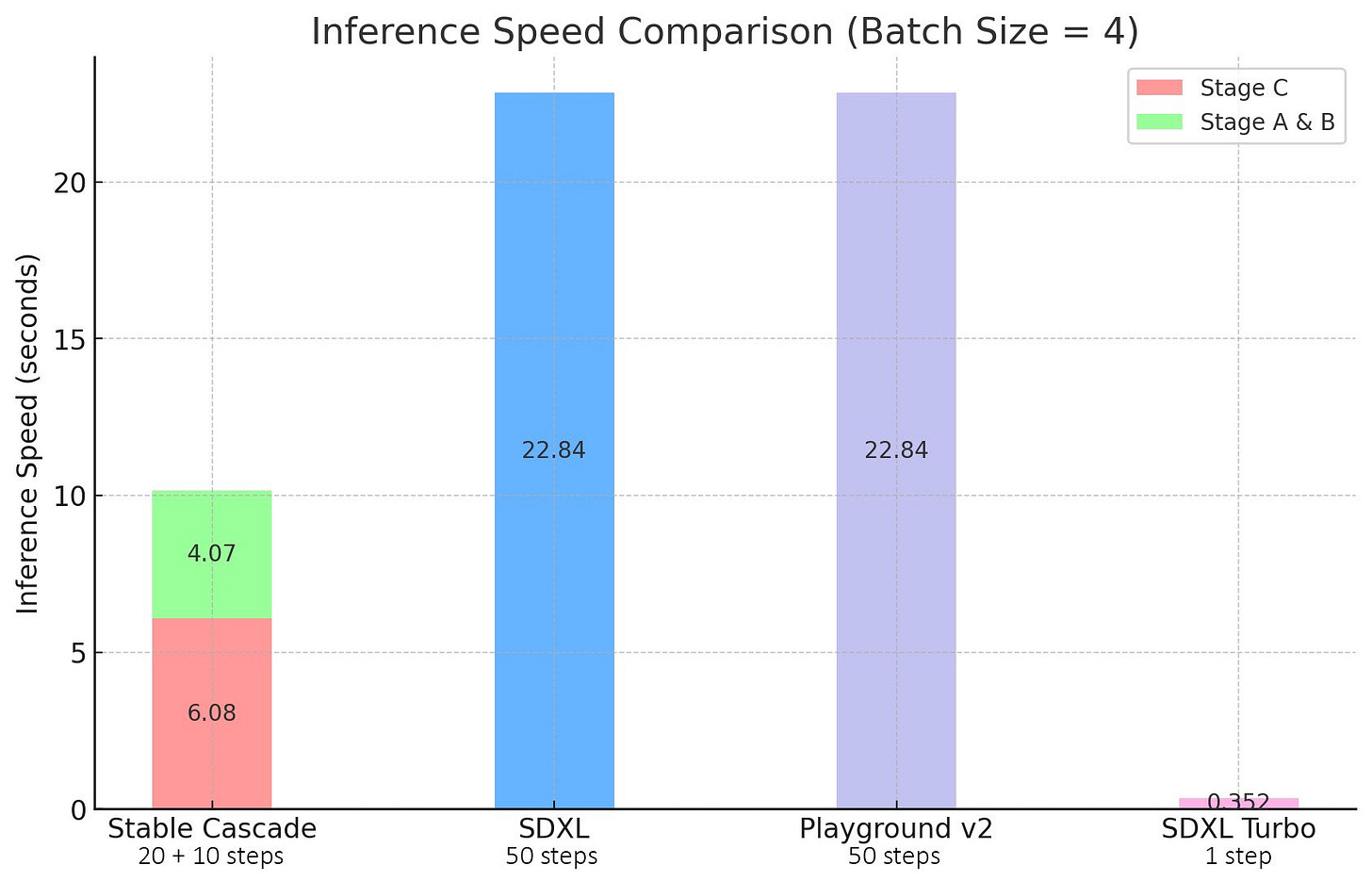

Stable Cascade (link)

Stability AI introduced a new text-to-image generation model called Stable Cascade that uses a three-stage approach to produce high-quality images with a compressed latent space, making it more efficient to train and use than previous models. It achieved better results than other models in evaluations while having faster inference speeds. The company released code to train, fine-tune, and use control models like inpainting with Stable Cascade to enable further customization and experimentation. Stability AI aims to lower barriers to AI development through models like this one.

Nate did a comparison between a much slower SDXL and Stable Cascade here:

Here’s the transcript for the whole episode, you definitely should check it out! It was really one of the coolest shows we had, and we had over 2K folks listening in!

[00:00:00] Alex Volkov: Hey, this is Alex Volkov, you're on ThursdAI, and I just gotta record this intro real quick, because today marks one of the more singular days in AI that I remember since I started recording ThursdAIs, which was itself a singular day, March 14th, 11 months ago, when GPT 4 was released and announced. We since then had a few days like this GPT Dev Day was one such day, and today marks another one.

[00:00:38] Alex Volkov: Google has released an update to their model, talking about 1 million tokens in the context window, basically unlimited. And then, just a few, just an hour or two later, OpenAI said, you know what, we also have something in store, and released the most incredible jump in capability of video generation, text to video generation.

[00:01:02] Alex Volkov: It's called SORA, and what you hear is us recording live, knowing only about Google, which came out an hour and a half before we started recording, and then somewhere in the middle, I think minute 35 or something, you'll hear our live reaction to the incredibly mind blowing advancement in text to video that OpenAI just released.

[00:01:31] Alex Volkov: And I just wanted to record this as I'm finishing up the editing and about to start writing the newsletter, to say, days like this really are the reason why I'm all in on AI and I'm very excited about the changes and advancements.

[00:01:49] Alex Volkov: And I'm sure there will be more days like this going forward. We've yet to see what Apple came up with, we've yet to really see what Meta comes up with Llama 3, etc. And, yeah, I just wish you enjoyed this and I don't have a lot of words here besides just letting you listen to the rest of the episode and say that I was very happy to be in San Francisco for this, the place where most of this happens, and I was very happy to be in company of good friends, both in the virtual world those on stage in our Twitter live recording, and I was sitting across from Swyx, a friend of mine with whom I recorded an interview at the end of this, you can hear.

[00:02:30] Alex Volkov: I just couldn't let go of this chance. We also had a conversation, besides the updates and the breaking news, we also had conversations with the folks who worked on some of the stuff we talked about. I interviewed Yi Tay and Max Bain from Reka, which you'll hear later, and the deep dive into Reka multimodal models, which blew me away just yesterday.

[00:02:52] Alex Volkov: And so my head kept getting blown away this week. And I also interviewed the folks who built Stable Cascade, a new Stability model that outperforms the existing Stability models, Dome and Pablo. And all of those were great conversations, in addition to just generally the folks who joined me from week to week, Nisten and Far El and Alignment Lab, and we had Robert Scoble join us, with whom I've been buddying up since Vision Pro was released, as he was expecting, and that blew me away just a week ago.

[00:03:23] Alex Volkov: And I'm very excited to share with you this whole thing, and I hope that, yeah, I hope you enjoyed this as much as I do, and I hope that you enjoyed listening to these as much as I enjoy making them. And if you are, just share them with a friend, it would really help. And give us a 5 star review on Apple.

[00:03:38] Alex Volkov: This would greatly, greatly help. With that, I'll give you the ThursdAI thing.

[00:03:43] Alex Volkov: All right, let's go. How's it going, everyone? Welcome to ThursdAI. Today is February 15th, and it's quite a day in the AI updates that we've had so far. Quite a day. Even today, this morning, we had like a bunch of updates. But besides those, we had quite a crazy week as well. Very interesting show today, very interesting show today.

[00:04:13] Alex Volkov: My name is Alex Volkov, I'm an AI evangelist with Weights & Biases, and right now I'm getting my picture selfie taken by my today's co host, Swyx. Welcome,

[00:04:23] Swyx: Hey, hey, hey. Good morning, everyone.

[00:04:25] Alex Volkov: And we're in the Latent Space Studio in San Francisco. I flew in just last night. And as I was flying in, there was more news happening. So we're going to cover all of this.

[00:04:34] Alex Volkov: We have a very exciting show today. We have a bunch of guests, special guests that are coming on the second hour of this. So hopefully we'll see folks from the Reka models, and hopefully we'll see some folks from Stability. We're going to get to talk about Google and everything in between. So meanwhile, settle in.

[00:04:50] Alex Volkov: This is going to be a great show today in San Francisco. And maybe I'll also probably share with you why I flew in here today. That's gonna come up next. So welcome to ThursdAI and we're gonna get started. All right there. Let's get started. Let me smoothly fade out the music, say hi to everyone here on stage. Hey, Nisten, welcome. We have Robert Scoble over here, folks. We've been, we've been more, more friendly lately than usual because Robert and I are both members of the Vision Pro cult. I think that's what you call it, Robert.

[00:05:37] Alex Volkov: But today is, today's the space for, for AI. But Robert you've been covering AI on your feed as well for, for a long time. We have, obviously Swyx is on stage, but also in front of me, which is super cool. And it's been a while, brother. It's great, you just flew back from

[00:05:51] Swyx: Singapore.

[00:05:52] Swyx: Yeah, Chinese New Year.

[00:05:53] Alex Volkov: Are you jet lagged at all or are you good?

[00:05:55] Swyx: I'm good actually. I have had very little sleep, but for some reason that always helps with the jet lag.

[00:06:00] Alex Volkov: Yes, awesome. And I also want to say hi to Alignment Labs, Austin and Far El as well, folks who are working on open source models, and we usually cover a bunch of stuff that they're doing, and usual co hosts and experts here on ThursdAI.

[00:06:11] Alex Volkov: So if you never joined ThursdAI before, just a brief kind of recap of what we're doing. As I said before, my name is Alex Volkov. I'm an AI evangelist with Weights & Biases. It's always so fun to say. And Weights & Biases is a company that is basically helping all these companies build their AI models, and it's super cool.

[00:06:26] Alex Volkov: And I flew in, I went to the office last night, and I have some cool videos to share with you from the office as well.

[00:06:32] Alex Volkov: And this is ThursdAI. ThursdAI is a Twitter space and newsletter and podcast that I started a year ago. And then slowly this built a community of fine folks who show up to talk about everything that happened in the world of AI for the past week.

[00:06:46] Alex Volkov: And there hasn't been many weeks like this last week that highlight how important and how cool ThursdAI actually is. Because we just had so much, so much to cover today, and usually I start the space with a roundup of the stuff that we're going to run through, just for folks who are not patient, don't have a lot of time, and we're going to just run through everything we're going to talk about and then we're going to dive deep because we have some breaking news and I even have, hopefully, I have my breaking news button.

[00:07:16] Alex Volkov: Oh, I don't. Oh my God. Okay.

[00:07:17] Swyx: Oh no.

[00:07:17] Alex Volkov: I'm not set up for a breaking news button, but it's fine.

[00:07:20] Alex Volkov: We'll imagine this. I'm going to put this in the, in the, in the post edit. With that said, are you guys ready for a brief recap? Let's go to a brief recap.

[00:07:27] Recap and TL;DR

[00:07:27] Alex Volkov: Alright, folks, back for the recap. Today is Thursday. ThursdAI, February 15th. This is a recap of everything we talked about. And, ooh, boy, this was one of the worst days to be caught outside of my own personal production studio because my, my breaking news button didn't make it all the way here. And there was so much breaking news.

[00:07:57] Alex Volkov: So obviously as I woke up, the biggest breaking news of today was... I actually cannot decide what was the biggest breaking news. So the first piece of breaking news from today was Google releasing a teaser of Gemini 1.5. And 1.5 was not only a continuation of Gemini Pro that we got last week, 1.5 actually was teased with up to 1 million, a whopping 1 million tokens in the context window, which is incredible.

[00:08:23] Alex Volkov: Just for comparison, ChatGPT is currently at 128K, and the highest offering up until Gemini was 200K with Anthropic's Claude, and Google teased this out of the gate with 1 million tokens, and they claim they have up to 10 million tokens of context window in the demos, which is incredible.

[00:08:44] Alex Volkov: And they've shown a bunch of demos. They did the needle in the haystack analysis that we've talked about from Greg Kamradt, and just quite an incredible release from them. They talked about how you can put a whole like hour of a movie of Buster Keaton, I think it's called. And then you can actually ask questions about the movie and it will give you the exact timestamp of when something happens.

[00:09:03] Alex Volkov: They talked about it being multimodal, where you can provide a sketch and say, hey, when did this scene happen, and it will pull it out, just like incredibly, like magic, mind blowing, mind blowing stuff. And all of this needs a lot of context because you take this, you take this video, you turn it into images, you send this into context.

[00:09:22] Alex Volkov: They also talked about how you can send 10 hours of audio within one prompt and then some. And the quality of retrieval is very, very high. You're talking about like 90 plus percent, 95 plus percent in the haystack, which is incredible. Again, we had Enrico Shipolle, a friend of the pod who worked on the YaRN paper and the RoPE methods before, extending the Llama context.

[00:09:46] Alex Volkov: And he brought like four papers or something that show that open source is actually unlocking this ability as well. And not only was today an incredible day just generally, but not only Google talked about a large context window, we also saw that Nat Friedman and Daniel Gross just invested 100 million in a company called Magic, and they also talk about multimodality and a large context window, up to 1 million as well.

[00:10:08] Alex Volkov: So it was very interesting to see both of them release on the same day as well. We then geeked out about Gemini. We talked about Andrej Karpathy leaving OpenAI and invited him to come to ThursdAI and Latent Space as well. And then we also mentioned that OpenAI adds memory and personalization to ChatGPT, which is super cool.

[00:10:25] Alex Volkov: They didn't release it to many people yet, but personalization is my personal thread of 2024, because these models, especially with the larger, larger context window with perfect recall, these models will become our buddies that will remember everything about us, specifically, especially tied into different devices.

[00:10:43] Alex Volkov: Like the tab that's somewhere here behind me is getting built in San Francisco. We briefly mentioned that NVIDIA released Chat with RTX, local models that you can download and run on your NVIDIA GPUs. It has RAG built in. It has a chat with YouTube videos and it's super cool. We talked about Cohere releasing Aya 101, multilingual.

[00:11:01] Alex Volkov: And our friend of the pod Far El was talking about how he wasn't finding it super impressive. Unfortunately, he dropped in the middle of this. Apologies, Far El, but Cohere released a big multilingual model, which is also pretty cool. We mentioned that Nomic, our friends at Nomic, which we mentioned last week, released open source embeddings.

[00:11:17] Alex Volkov: If you guys remember, they released an update to those embeddings, Nomic Embed 1.5 with Matryoshka embeddings. Matryoshka is obviously the name for the Russian dolls that sit one inside the other. And we're going to actually talk with the authors of the Matryoshka paper in not the next Thursday, the next after that.

[00:11:34] Alex Volkov: So we're going to cover Matryoshka but it's what OpenAI apparently used, not apparently, confirmed they used to reduce dimensions in the API for embeddings. Super cool. We're going to dive deep into this. As we're going to learn, I'm going to learn, you're going to learn. It's going to be super cool.
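A minimal sketch of the dimension-reduction idea mentioned above, assuming a model trained Matryoshka-style so that the leading coordinates of each embedding carry most of the meaning: keep the first `dims` values and re-normalize to unit length. The helper names and the example vectors are made up for illustration, and nothing here is Nomic's or OpenAI's actual code. On a model that was not trained this way, truncating like this will usually hurt quality much more.

```python
import math
from typing import List


def truncate_embedding(vec: List[float], dims: int) -> List[float]:
    """Keep the first `dims` coordinates and rescale the result to unit length."""
    if not 0 < dims <= len(vec):
        raise ValueError("dims must be between 1 and len(vec)")
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero vector")
    return [x / norm for x in head]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # Toy 8-dimensional "embeddings"; real ones have hundreds of dimensions.
    doc = [0.9, 0.3, 0.2, 0.1, 0.05, 0.02, 0.01, 0.01]
    query = [0.8, 0.4, 0.1, 0.2, 0.01, 0.03, 0.02, 0.01]
    for dims in (8, 4, 2):
        score = cosine(truncate_embedding(doc, dims), truncate_embedding(query, dims))
        print(f"dims={dims} cosine={score:.4f}")
```

The payoff is storage and speed: a shorter vector is cheaper to store and search, and with a Matryoshka-trained model the similarity ranking is meant to stay close to the full-size one.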

[00:11:48] Alex Volkov: And as we're talking about OpenAI I got a ping on my phone because I'm subscribed to all updates from their main account and we had a collective holy shit moment. Everybody's jaw was on the floor because OpenAI just released Sora, which is a foundational video model, text to video model, that just blew us the F away, pardon my French, because of the consistency.

[00:12:08] Alex Volkov: So if you've seen, how should I say, the area of video generation has been evolving fairly quickly, but not as quick as what we just saw. First we saw attempts at taking Stable Diffusion, rendering frame by frame, and the consistency wasn't there. It was moving from one to another, like the face would change and everything.

[00:12:30] Alex Volkov: You guys saw this, right? So we moved from the hallucinatory kind of videos towards consistency videos, where Stability recently released and gave us SVD, which was like one to two to three second videos. Runway ML gives you the option to choose where the video is going to go. If it's going to be zoom in, like brushes, all these things.

[00:12:49] Alex Volkov: And now all of them seem just so futile because OpenAI's Sora can generate up to 60 seconds of a video. And honestly, we were sitting here just watching, all of us just opened the Sora website, and we were just mind blown away by the consistency and the complexity of the scenes that you can generate, the reflections.

[00:13:06] Alex Volkov: There was one scene where a woman was walking through a very busy street in Japan, and her coat stays the same, her face stays the same. There's another where a Dalmatian dog climbs out of one window and jumps into another. All the spots on the Dalmatian are perfect, perfectly in balance, the legs are... it's really unbelievable how high quality of a thing OpenAI released. And what's unbelievable to me also is that the jump from what we saw in video, the open source stuff, or even the Runway stuff and Pika stuff, the jump in fidelity, in quality, in consistency, is so much higher than the jump from like 200,000 tokens to 1 million tokens that Google did.

[00:13:44] Alex Volkov: So it does feel like some folks in OpenAI sat there and said, Hey, Google just released something. It's super cool. It's picking up attention on Twitter. Let's release something else that we have behind the scenes. It looked super polished. So shout out to the folks who worked on Sora. Really, if you haven't seen the videos, you'll see them in show notes and definitely you'll see them everywhere because Hollywood is about to get seriously, seriously disrupted, just the level of quality is amazing.

[00:14:08] Alex Volkov: Compare this with all the vision and, and, and sound stuff. I, moving back to the recap, I'm getting excited again. We also, then we talked about Reka and Reka Flash and Reka Edge from a company called Reka AI. And then, as I love bringing the people who actually built the thing to talk about the thing.

[00:14:23] Alex Volkov: So we had Yi Tay and we had Max as well from Reka, Max Bain from Reka, to talk to us about their multimodal models. I was very, very impressed with Reka's multimodal understanding. And I think this model, compared to Gemini Pro, which is probably huge and runs on all the GPUs and TPUs, this model is 21 billion and Reka Edge is even smaller.

[00:14:41] Alex Volkov: And yet it was able to understand my videos to an extent that even surprised the guys who were the co founders of the company. It understood tonality, understood text and audio in a very specific and interesting way. So we had a conversation with the Reka folks and, continuing on this thread, we also had a new model from Stability called Stable Cascade that is significantly faster than SDXL and generates hands and text out of the blue.

[00:15:07] Alex Volkov: It's based on something called Würstchen, which we learned is a hot dog today. And we had the folks that work behind this, Dome and I'm blanking on the name of the other author that joined. I apologize. It was a very exciting day. So we had a conversation with the guys behind Würstchen and Stable Cascade as well.

[00:15:24] Alex Volkov: So definitely check this out. We mentioned that WhisperKit runs now on watchOS, which is quite incredible because Siri's voice to text is still not that great. And I think that's most of what we discussed. And then I flipped the mic on my, on my friend here that sits in front of me and I just had a deep dive interview with Swyx.

[00:15:41] Alex Volkov: In the Latent Space, he just posted a few images as well, and it was a great conversation as well, so definitely worth a follow and a listen if you haven't listened to this. With that, I think we recapped ThursdAI on one of the more seminal days that I remember in AI, one after another, and we all hope that Meta will just release Llama 3.

[00:16:01] Investments updates from Swyx

[00:16:01] Alex Volkov: Unless I missed some stuff that's very important. I'll just double check. Nisten, out of the stuff that we've sent, did I miss anything else? Swyx, did I miss anything else?

[00:16:10] Swyx: Today there was also a LangChain Series A. True. With LangSmith.

[00:16:13] Swyx: Yes. There was Magic.dev, Series A with Nat Friedman.

[00:16:16] Alex Volkov: So I was thinking to cover this around the Google stuff because they also announced a longer context craziness.

[00:16:21] Alex Volkov: But definitely, definitely both of those.

[00:16:23] Swyx: Lambda Labs, Alonzo 300 million, Series C.

[00:16:26] Alex Volkov: Oh, wow, yeah, I even commented. I said, hey, Mitesh good. So we love Lambda, definitely. Most of the stuff that we play around with is happening in Lambda. And

[00:16:34] Swyx: Lindy also had their GA launch today.

[00:16:37] Alex Volkov: nice. Okay. Today

[00:16:38] Swyx: Today was a very bad day to launch things, because everyone else launched things.

[00:16:41] Swyx: Yes. If you're not Gemini, it's going to be a struggle

[00:16:44] Alex Volkov: I was just thinking, Magic.dev, and I guess let's move to just discussing kind of the breaking news of the hour, as we already is. Let's talk about Google, and Gemini 1.5.

[00:16:55] Google teases Gemini Pro 1.5 with 1M context windows

[00:16:55] Alex Volkov: Do we do a musical transition? Sure, let's do a musical News. This is not the Breaking News music. By not even a stretch, this is not a Breaking News music. But, imagine that we have Breaking News right now, because we do. Just an hour or so ago, we had an update from Jeff Dean and then Sundar Pichai and then a blog post and then a whole thread and a bunch of videos from Google.

[00:17:27] Alex Volkov: And if you guys remember some Google videos from before, these seem more authentic than the kind of the quote unquote fake video that we got previously with Gemini Ultra. So just a week after Google released Gemini Ultra, which is now available as, aka, Gemini Advanced, and just a week after they killed Bard almost entirely as a concept, they're now teasing.

[00:17:48] Alex Volkov: Teasing, did not release, teasing Gemini 1.5. 1.5, they're teasing it and they're coming out with a bang. Something that honestly, folks, at least for me, that's how I expect Google to show up. Unlike before, where they're like lagging after GPT-4 by eight months or nine months, what they're doing now is that they're leading a category, or at least they're claiming they are.

[00:18:07] Alex Volkov: And so they released Gemini 1.5, and they're teasing this with a whopping 1 million tokens in context window in production and up to 10 million tokens in context window in research. And just to give context, they put out this nice animated video where they show Gemini Pro, which they have currently, not 1.5, the Pro version, is around 32K, I think.

[00:18:26] Alex Volkov: And then they have GPT-4 with 128K, and then they show Claude 2 is at 200K, and then Gemini 1.5 is a whopping 1 million tokens, which is ridiculous. Not only that, they also went a little bit further and released it with the needle in a haystack analysis from our friend Greg Kamradt, who usually does this.

[00:18:50] Alex Volkov: We'll not be able to pronounce his name. I asked Greg to join us. Maybe he will. A needle in a haystack analysis that analyzes the ability of the model to recall whether or not it's able to actually process all these tokens and actually get them and understand what happens there. And quite surprisingly, they show like 99 percent recall, which is incredible.

[00:19:10] Alex Volkov: And we all know, previously in long context windows, we had this dip in the middle. We've talked about the butter on toast analogy, where the context or attention is like the butter and the context window is the toast, and you spread and you don't have enough for the whole toast to spread evenly.

[00:19:27] Alex Volkov: We've talked about this. It doesn't seem, at least on the face of it, that they are suffering from this problem. And that's quite exciting. It is exciting because also this model is multimodal, which is very important to talk about. They definitely show audio and they are able to scrub through, I said, they said, I think they said 10 hours of audio or so.

[00:19:47] Alex Volkov: Which is quite incredible. Imagine going through 10 hours of audio and saying, hey, when did Alex talk about Gemini in ThursdAI? That would be super dope and quite incredible. They also did video. They showed an hour of video of Buster Keaton's something, and because the model is multimodal, the cool thing they did is that they provided this model with a reference, with a sketch.

[00:20:11] Alex Volkov: So they drew a sketch of something that happened during this video, not even talking about this, just like a sketch. And they provided this multimodal model with an image of this and said, when did this happen in the video? And it found the right timestamp. And so I'm very, very excited about this. If you can't hear it from my voice, Swyx can probably tell you that it looks like I'm excited as well, because it's quite, as far as I'm concerned, a breakthrough for multiple reasons. And now we're gonna have a short discussion.

[00:20:35] Enrico talking about open source alternatives to long context

[00:20:35] Alex Volkov: I want to say hi to Enrico here. Enrico, welcome up on stage. Enrico Shipolle, one of the authors of the YaRN paper. And like, we've had Enrico before talk to us about long context. Enrico, as we sent this news in DMs, you replied that there have been some breakthroughs lately that kind of point to this.

[00:20:51] Alex Volkov: And you want to come up and say hi and introduce us briefly. And let's chat about the long context.

[00:20:57] Enrico Shipolle: Hi, Alex. Yeah, so there actually have been a lot of research improvements within the last couple months, even from before we submitted YaRN. You could still scale even transformers to essentially millions of tokens of context length back then. We previously in YaRN worked on scaling the rotary embeddings, which was a traditional issue in long context.
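For intuition on what "scaling the rotary embeddings" means, here is a small sketch of the simplest variant, linear position interpolation: positions beyond the trained length are squeezed back into the range the model saw during training before the rotation angles are computed. This is a simpler relative of YaRN, not the YaRN method itself, and the function names, head size and lengths are assumptions chosen for illustration.

```python
from typing import List


def rope_angles(position: float, head_dim: int, base: float = 10000.0) -> List[float]:
    """Standard RoPE rotation angles: position * base^(-2i/d) for each pair of dimensions."""
    return [position / (base ** (2 * i / head_dim)) for i in range(head_dim // 2)]


def interpolated_angles(
    position: int,
    head_dim: int,
    trained_len: int,
    target_len: int,
    base: float = 10000.0,
) -> List[float]:
    """Linear position interpolation: rescale the position by trained_len / target_len."""
    scale = trained_len / target_len
    return rope_angles(position * scale, head_dim, base)


if __name__ == "__main__":
    trained_len, target_len, head_dim = 4096, 8192, 8
    far = target_len  # a position twice as far as anything seen in training
    print("unscaled:    ", rope_angles(far, head_dim))
    print("interpolated:", interpolated_angles(far, head_dim, trained_len, target_len))
```

With the scaling applied, position 8192 produces the same angles the model saw at position 4096, so attention never meets rotations outside its training range. The cost is that neighbouring tokens end up closer together in angle, which is the kind of trade-off that more refined methods try to handle better.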

[00:21:19] Enrico Shipolle: So I, if you don't mind, I'll probably go through some of the research really quickly because unfortunately,

[00:21:25] Enrico Shipolle: So on January 2nd, there was one called "LLM Maybe LongLM." That's a mouthful, essentially, but they were showing that you can process these long input sequences during inference using something called Self-Extend, which allows you to basically manage the context window without even fine-tuning these models.

[00:21:48] Enrico Shipolle: And then on January 7th, 2024, there was another paper released, called "Soaring from 4K to 400K," which allows you to extend the LLM's context with something called an activation beacon. With these activation beacons, they essentially condense the raw activations in these models into a very compact form, so the large language model can perceive this longer context

[00:22:14] Enrico Shipolle: even in a smaller context window. The great thing about these activation beacons or LLM Maybe LongLM is that they essentially only take a few lines of code to modify the transformer architecture and get all these massive performance benefits for long context inference.
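As a rough illustration of why Self-Extend can be described as a few lines of code, here is a sketch of the position remapping as I understand it from the paper: nearby tokens keep their exact relative positions, and distant tokens share coarser, floor-divided positions so that nothing exceeds the range seen in training. The function name and the numbers (group size 8, neighbor window 512, a 4,096-token trained window) are assumptions for illustration, and this covers only the position arithmetic, not the attention merge.

```python
def self_extend_rel_pos(q_pos, k_pos, group_size, neighbor_window):
    """Relative position used between a query and an earlier key (sketch)."""
    distance = q_pos - k_pos
    if distance < neighbor_window:
        # Close neighbors: ordinary, exact relative position.
        return distance
    # Far tokens: positions are floor-divided into groups, then shifted so the
    # grouped range continues where the neighbor window ends.
    grouped = q_pos // group_size - k_pos // group_size
    shift = neighbor_window - neighbor_window // group_size
    return grouped + shift

# Hypothetical setup: model trained on 4,096 positions, input of 16,384 tokens.
trained_window = 4096
seq_len = 16384
largest = self_extend_rel_pos(seq_len - 1, 0, group_size=8, neighbor_window=512)
```

In this setup the largest relative position the model ever sees stays well inside the trained window, even though the input is four times longer.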

[00:22:33] Alex Volkov: Are you serious? Are we getting one of those breakthroughs that take two lines of code, kind

[00:22:37] Enrico Shipolle: No, so basically all of these require minimal code changes to even be able to scale to long token counts, whether it's audio, video, image, or text. Text is generally the shortest token count. If you look at something like RefinedWeb or SlimPajama, the average token count of a piece of text in that is only anywhere from 300 to 500 tokens.

[00:23:02] Enrico Shipolle: So this is actually generally a data-centric issue too, when you're talking about long context, even with training a standard natural language processing model. The thing about audio and video is that these have a ton of tokens in them. And the final note I'm going to put in, unfortunately, before I have to head out, I know this was a lot of information.

[00:23:22] Enrico Shipolle: I can link these

[00:23:24] Alex Volkov: Yeah, we're gonna add some links, the links that I'm able to find. Enrico, if you can send

[00:23:29] Enrico Shipolle: Yeah, I'll send you all the research papers.

[00:23:32] Alex Volkov: Yeah, you want to land one last thing before we move on? Yeah, go ahead.

[00:23:36] Enrico Shipolle: Yeah, so, just the last thing: on January 13th, there was this paper called "Extending LLMs' Context Window with 100 Samples," and they were essentially able to show that even with a very limited number of long context samples, you're able to massively improve the context lengths of these models. I should mention these are the papers that I found did pretty rigorous evaluation overall, because there's a huge problem in long context evaluation. But I feel these authors generally applied their knowledge pretty well, and these results are really impactful, even for the open source community, because you don't need a lot of computational power to be able to scale these context windows massively now.

[00:24:24] Enrico Shipolle: And that's basically everything I wanted to say.

[00:24:27] Alex Volkov: Thank you, Enrico. Thank you, folks. Folks, definitely give Enrico a follow. We've had quite a few conversations with Enrico. If somebody in the open source community knows about long context, Enrico is that guy. And we're definitely going to follow up with the links in the show notes for a bunch of this research.

[00:24:41] Alex Volkov: And I think, just to sum up, Enrico, there have been breakthroughs, and it doesn't look like Google is the only one that came up today. Nat Friedman and Daniel Gross, the guys who have AI Grant, who had the Vesuvius Challenge recently, and who invest in everything AI possible, just announced an investment in Magic, a hundred million dollar investment, quote unquote:

[00:25:00] Alex Volkov: "We were so impressed with these guys that we decided to give them a hundred million dollars," from Nat Friedman. And they also talk about a model that does something like a 10 million token context window. Swyx, you wanna talk about the Magic thing?

[00:25:12] Swyx: They first talked about this last year, like six months ago, and then went completely silent. So we didn't really know what was going on with them. So it's good to see that this is at least real because six months ago they were talking about 5 million token context model.

[00:25:28] Swyx: But no, nothing was demoed. Not even like a little teaser graphic or anything like that. But for Nat to have invested in this amount, I think it's a huge vote of confidence. And it basically promises that you can do proper codebase embedding and reasoning over an entire codebase. Which, it's funny to have a code model that specially does this, because Gemini could also potentially do this.

[00:25:58] Alex Volkov: They showed Three.js in their examples. Did you see this?

[00:26:01] Swyx: No, I didn't see the Three.js, but okay, yeah. And we have a pretty consistent result from what we've seen so far that GPT-4 is simultaneously the best LLM, but also the best code model. There's a lot of open source code models, CodeLlama, DeepSeek Coder, all these things.

[00:26:18] Swyx: They're not as good as GPT-4. So I think there's a general intelligence lesson to be learned here. It remains to be seen, because Magic did not release any other details today, whether or not it can actually do better than just a general purpose Gemini.

[00:26:34] Alex Volkov: Yeah, and so the example that they showed is actually they took Three.js, if you folks know the Three.js library from Mr.

[00:26:40] Alex Volkov: Doob, and they embedded all of this in the context window and then asked questions, and it was able to understand all of it, including finding things in this incredibly huge codebase. And I think I want to just move this conversation.

[00:26:52] Alex Volkov: Yeah, Nisten, go ahead. I see you unmuting. And folks on the stage, feel free to raise your hands if you want to chime in. We'll hopefully get to some of you, but we have a bunch of stuff to chat about as well.

[00:27:01] Nisten Tahiraj: I'll just quickly say that there are still some drawbacks to these systems. And by systems I mean the long context models where you dump in a whole codebase or entire components. And the drawbacks, even from the demos, still seem to be that, yes, now they do look like they're much better at reading and taking in the information, but they're not yet much better at producing output of similar length. So they're still gonna only output, I think, up to 8,000 tokens or so, and I don't know if that's a byproduct of the training, or whether they could be trained to output much longer context.

[00:27:43] Nisten Tahiraj: However, the benefit now is that, unlike a retrieval augmentation system, unlike RAG, where the drawback was that yes, it could search over the document, but it would only find maybe two or three or a couple of points and bring them up, this one has a more holistic understanding of the entire input that you've dumped in.

[00:28:03] Nisten Tahiraj: But again, we're not quite there yet where they can just output a whole textbook. That's what I mean. So that's the thing. That's the next challenge to solve.
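The RAG limitation Nisten describes, finding only two or three points and bringing them up, comes from the top-k retrieval step. Here is a toy sketch of that step, assuming word counts as a stand-in for a learned embedding model; the chunk texts, `embed`, `cosine`, and `retrieve` are all made up for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': lowercase word counts. Real systems use a learned embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, chunks, k=2):
    """Return only the k chunks most similar to the query; everything else is dropped."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]

chunks = [
    "the parser module tokenizes source files",
    "the renderer draws meshes with shaders",
    "the parser reports syntax errors with line numbers",
    "the build script bundles everything",
]
top = retrieve("how does the parser report errors", chunks, k=2)
```

Only the two parser chunks reach the model here; the renderer and build chunks are never seen, which is the trade-off against putting the whole codebase in a long context window.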

[00:28:12] Alex Volkov: So I think the immediate reaction that I had is very similar to what you had, Nisten. RAG is something everybody uses right now. And we've talked about long context versus something like RAG before, and the usual conversation we have is about cost. How much does it cost you to pay for these tokens, right?

[00:28:30] Alex Volkov: If you send 10 million tokens and each token is like a cent, you're basically paying 10 million cents for every back and forth. Also speed and user experience. If your users are sitting there and waiting for 45, 60 seconds because they sent a bunch of context, and you can solve this with RAG, then RAG is probably a better approach for you.
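The cost argument is simple arithmetic, sketched below. The cent-per-token figure is Alex's hypothetical from the conversation; the 10 dollars per million tokens price and the three 1,000-token retrieved chunks are further assumptions chosen only to show the gap, not real pricing for any model.

```python
def prompt_cost_usd(prompt_tokens, usd_per_million_tokens):
    """Input-side cost of one request, ignoring output tokens."""
    return prompt_tokens / 1_000_000 * usd_per_million_tokens

# Alex's hypothetical: one cent per token is 10,000 USD per million tokens.
cent_per_token = prompt_cost_usd(10_000_000, 10_000.0)

# Assumed price of 10 USD per million input tokens (illustrative only).
PRICE = 10.0
long_context = prompt_cost_usd(10_000_000, PRICE)  # whole corpus in every request
rag = prompt_cost_usd(3 * 1_000, PRICE)            # three retrieved 1,000-token chunks

print(f"cent per token: ${cent_per_token:,.0f} per request")
print(f"long context:   ${long_context:,.2f} per request")
print(f"RAG:            ${rag:,.2f} per request")
```

Whatever the real price turns out to be, the ratio between the two approaches is set by how many tokens each one sends per request.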

[00:28:48] Alex Volkov: However, this specifically looks different, at least from the examples that Google did. They showed the video and, transparently, they said they sped up the inference, but I saw something where, at least with the video question, it took them around 40 seconds to extract a frame from an hour of video. They sent an hour's worth of video as context, and it took 40 seconds for this inference.

[00:29:13] Alex Volkov: Folks, like I said before, and I'm going to say this again, regular ChatGPT queries, not even crazy context, sometimes took me 40 seconds. Now, you may say, okay, Alex, they showed the demo in their environment, and ChatGPT is a production environment. Yes, but the possibility is, if I can send, I don't know, 500,000 tokens in the context window, and then within 40 seconds get a response which is equivalent to what I get from GPT-4,

[00:29:38] Alex Volkov: then I think that a bunch of the conversations about RAG being better just from a speed of inference perspective are slowing down. An additional thing I want to say before I get to you, Yam, just a second: the immediate response in my head was, okay, RAG is done for, or at least not done for, but definitely the crown on RAG's head,

[00:29:56] Alex Volkov: everybody's talking about RAG. There's vector databases everywhere. We just had folks talk about ColBERT and different things. Okay, RAG is now shaky. But the other thing I started to think is, is fine-tuning also at risk? And Swyx, I think this goes back to what you just said about the general models versus the fine-tuned or very specific models. Because if a general model can take a whole book, and they had an example about this where there was a very low resource language, Kalamang, something like this, and there's only one book that's a dictionary for this language, they literally threw the book in the context window, and the model was able, through in-context learning, to generalize and understand this and perform better than fine-tuned models.

[00:30:37] Alex Volkov: And I'm thinking here, okay, RAG is the first thing to go. Is fine-tuning second? Are we going to stop fine-tuning and just send context? So Swyx, I want to hear your reaction about the language thing, and then we're going to get to Yam, and then we're going to ask some more folks.

[00:30:48] Discussion about effects of longer context windows

[00:30:48] Swyx: Yeah, I think there's generalizable insights about learning about language. And it's not surprising that throwing that into the context window works, especially if it's a cognate language of something that it already knows. So then you're just learning substitutions, and don't forget that transformers are initially trained to do language translation, like this is like bread and butter stuff for transformers.

[00:31:12] Swyx: The second thing I would respond to is, I have to keep saying and banging this drum, long context does not kill RAG because of cost. Imagine if every time you throw 10 million tokens of context in there, you have to pay like a thousand dollars. Because unless something is fundamentally very, very different about this paradigm, you still pay a cost to ingest those tokens.

[00:31:39] Swyx: So ultimately, people still want RAG for cost and then for attribution reasons, like debuggability and attribution, which is something that's still valuable. So I think long context is something that I have historically quite underweighted for these reasons. I'm looking to change those assumptions, of course, because obviously these are magical capabilities if you can use

[00:32:10] Far El: Yeah, I just want to say, on the topic of latency and ingesting a lot of context, I think that there is a solution that we didn't talk about here and that is going to be incorporated in all the flagship models, which is embedding knowledge into the KV cache, which is something that many of the inference engines today can do.

[00:32:34] Far El: And you simply just prefix the context beforehand, and then you don't need to process it through your model every time. So you're not sending the whole database each time you are calling your model. It's just saved. Imagine that OpenAI has some sort of API where you embed the KV cache beforehand, at a reduced price, of course, and then it uses that as your context.

[00:32:59] Far El: Basically, somewhere in the middle between the two. And the reason that it's not supported now in flagship models is that the first flagship model that supports a million tokens came out today. But I think that if we go there, this is something that we're going to see in all of the APIs.

[00:33:18] Far El: Moreover, I also don't think that RAG is done for, because RAG is explaining to you very, very clearly and very simply where the information is coming from, what the model is basing itself on. You can claim that with the model's attention you can do it as well, but it's not like RAG. With RAG, you're just showing the clients, the people, exactly where it comes from.

[00:33:40] Far El: And there are use cases where this is absolutely a must. So I think that there will always be room for RAG for these specific use cases, and long context with KV caching is going to be, I think, the method for embedding, for example, a full database, or a book, or something big, and using it multiple times, with many different prompts.
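The prefix idea Far El describes can be sketched with a toy cache that counts work instead of running attention. `ToyPrefixCache` is a hypothetical stand-in, not any inference engine's real API: an actual engine would store the attention keys and values computed for the prefix, whereas this sketch only tracks how many tokens need fresh processing.

```python
import hashlib

class ToyPrefixCache:
    """Stands in for an inference engine's KV cache; counts work instead of doing attention."""

    def __init__(self):
        self._store = {}           # prefix hash -> number of cached token states
        self.tokens_processed = 0  # total tokens that needed fresh computation

    def _key(self, tokens):
        return hashlib.sha256(" ".join(tokens).encode()).hexdigest()

    def run(self, prefix_tokens, prompt_tokens):
        key = self._key(prefix_tokens)
        if key not in self._store:
            # First call: pay to process the whole prefix once, then remember it.
            self.tokens_processed += len(prefix_tokens)
            self._store[key] = len(prefix_tokens)
        # Every call: only the new prompt tokens need fresh computation.
        self.tokens_processed += len(prompt_tokens)
        return self._store[key] + len(prompt_tokens)  # context length the model sees

cache = ToyPrefixCache()
book = ["tok"] * 100_000  # hypothetical 100,000-token document used as the shared prefix
first = cache.run(book, ["what", "is", "chapter", "one", "about"])
second = cache.run(book, ["who", "is", "the", "narrator"])
```

The second question still sees the full book as context but only adds four tokens of new work, which is the "somewhere in the middle" position between RAG and resending everything.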

[00:34:06] Alex Volkov: Or also multimodality, right? So thank you for this. Definitely makes sense. And I think somebody in the comments left a similar comment as well. So we want to dive into the KV cache stuff maybe in the next one. But I want to talk about the multimodality part of this, because we've mentioned this multiple times.

[00:34:25] Alex Volkov: I think we did this every Thursday since GPT-4 launched, because we were waiting for the vision part of GPT-4. And we've talked about 2024 being the year of multimodal. And we're going to have to talk about a bunch of multimodal stuff today, specifically with the Reka folks and Reka Flash, which understands videos.

[00:34:40] Alex Volkov: So I'm going to have to see whether Reka understands videos better than Gemini, but the Gemini folks talked about how there's specifically a bunch of multimodal effect on the context window, where if you send videos, at least the way they did this was just frames. They broke down this movie into a bunch of 500,000 frames or so and just sent it in the context window.
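To get a feel for how video fills a context window when it is sent as frames, here is a back-of-the-envelope sketch. The sampling rate of one frame per second and the 256 tokens per frame are assumptions picked for illustration, not figures from the announcement; the real numbers depend on the model's image tokenizer.

```python
def video_prompt_tokens(duration_s, frames_per_second, tokens_per_frame):
    """Frames sampled from a video and the tokens they occupy in the prompt."""
    frames = int(duration_s * frames_per_second)
    return frames, frames * tokens_per_frame

# Hypothetical: one hour of video, one sampled frame per second, 256 tokens per frame.
frames, tokens = video_prompt_tokens(duration_s=3600, frames_per_second=1.0, tokens_per_frame=256)
fits_in_one_million = tokens <= 1_000_000

print(f"{frames:,} frames -> {tokens:,} tokens, fits in 1M window: {fits_in_one_million}")
```

Under these assumptions an hour of video takes up most of a million-token window on its own, which is why the text part of the prompt ends up being "a little bit" by comparison.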

[00:35:04] Alex Volkov: And they basically said we have all this video in the context window and then we have a little bit of text. And I think context window expansions like this will just allow for incredibly multimodal use cases, not only video and audio. We've talked previously with the folks from

[00:35:20] Alex Volkov: Prophetic about different fMRI and EEG signals that they're getting, multimodal applications like that as well, and context window enlargement for these things is something Google specifically highlighted.

[00:35:32] Alex Volkov: And I want to highlight this as well because it's definitely coming. I'm waiting for being able to live stream video, for example. And I know some folks from Twelve Labs are talking about almost live stream embedding. So definitely multimodal from Google. I think, folks, we've been at this for 30 minutes.

[00:35:48] Andrej Karpathy leaves OpenAI

[00:35:48] Alex Volkov: Alright, so folks, I think we're going to move on and talk about the next couple of things that we've already covered to an extent, but there's some news from OpenAI, specifically around Andrej Karpathy leaving. This was announced, I think it broke in The Information, and Karpathy, some folks here call him senpai, Karpathy is a very legit, I don't know, top 10, top 5, whatever, researcher, and could potentially have been listening to the space that we had with LDJ after he left. It was clear that he left. The Information announcement didn't have a bunch of details, but then Andrej, as a transparent dude himself, came and said, hey, this wasn't a reaction to anything specific that happened, because speculations were flying.

[00:36:33] Alex Volkov: And I think, at least to some extent, we were in charge of some of these speculations, because we did a whole space about this that he could have just listened to. But speculation was flying, maybe this was Ilya related, maybe this was open source related, all of these things.

[00:36:46] Alex Volkov: Andrej basically helped start OpenAI, then left and helped kickstart the Tesla Autopilot program, scaled that to 1500, then left. On the chat with Lex Fridman, Andrej said that basically he wanted to go back to hands-on coding, and at OpenAI, his bio at least said that he's working on a kind of Jarvis within OpenAI, and Andrej has definitely also been talking about AI as an OS. Swyx, you wanna cover his OS approach?

[00:37:14] Alex Volkov: I think you talked about this. He had a whole outline, I think you

[00:37:17] Swyx: also

[00:37:17] Swyx: talked about this. LLM OS.

[00:37:18] Swyx: Yeah. He wasn't working on it so much as thinking about it.

[00:37:21] Swyx: Thinking about it,

[00:37:21] Swyx: yeah. And maybe now that he's independent, he might think about it. The main thing I will offer as actual alpha rather than speculation is I did speak to friends at OpenAI who reassured us that it really was nothing negative at OpenAI when he left.

[00:37:40] Swyx: Apparently because they spoke to him before he left.

[00:37:43] Swyx: So yeah, he's for the way I described it is he's following his own internal North Star and every time he does that the rest of us

[00:37:51] Alex Volkov: And definitely the rest of us win.

[00:37:53] Alex Volkov: The open source community is hoping, and I've seen multiple things that say, hey, Andrej will unite the different bands of open source.

[00:38:02] Alex Volkov: Andrej posted this thing on his X, where his calendar was just free, which shows maybe part of the rationale for why he left, because of meetings and meetings and meetings and everything, and now he can actually work. So shout out to Andrej Karpathy for all he did at OpenAI and for all he's going to continue to do.

[00:38:16] Alex Volkov: We're going to definitely keep up to date with the stuff that he releases. Andrej, if you're listening to this, you're more than welcome to join. We're here on every Thursday. You don't have to have a calendar meeting for this. You can hop on the space and just join. Also on the topic of OpenAI, they've added memory to ChatGPT, which is super cool.

[00:38:31] Alex Volkov: They released a teaser, this, I didn't get into the beta, so they released it to a limited amount of people. They added memory to ChatGPT, and memory is very, very cool, the way they added this as well. So I've said for a long time that 2024 is not only about multimodality, that's obviously going to come, but also it's about time we have personalization.

[00:38:51] Alex Volkov: I'm getting tired of opening a ChatGPT chat and having to remember to say the same things, because it doesn't remember the stuff that I previously said. The folks at OpenAI are working on the differentiator, the moat, and different other things, especially now that Google is coming after them with the 10 million token context window.

[00:39:08] Alex Volkov: And, they're now adding memory, where ChatGPT itself, like the model, will manage memory for you, and will try to figure out, oh, OpenAI, oh my god, breaking news. OpenAI just shared something. As I'm talking about them, you guys want to see this? Literally, I got a

[00:39:28] Alex Volkov: notification from OpenAI as I'm talking about this.

[00:39:30] Swyx: What?

[00:39:32] Alex Volkov: Let's look at this. Dude, I needed my breaking news button today. OpenAI said, introducing Sora, our text-to-video model. Sora can create videos of up to 60 seconds.

[00:39:44] Alex Volkov: Holy shit, this looks incredible. Oh my god, somebody please pin this to the, to the, Nisten, you have to see, there's a video, 60 second video, folks.

[00:39:54] Alex Volkov: Like, all of the, oh my god, breaking, I have to put the breaking news button here, holy shit. So folks, just to describe what I'm seeing, and somebody please pin this to the top of the space: every video model that we had so far does 3 to 4 seconds, Pika, the other labs, I forgot their name now, Runway, all of these models,

[00:40:16] Swyx: they

[00:40:16] Swyx: do

[00:40:16] Swyx: Oh my god, Runway.

[00:40:18] Alex Volkov: They do three to five seconds and it looks wonky. This thing that they just showed generates 60 seconds featuring highly detailed scenes, and the video that they've shared, which I'm going to repost and somebody already put up on the space, has folks walking hand in hand throughout a... There's a zoomed in, like behind the scenes camera zooming in.

[00:40:39] Alex Volkov: There's a couple Consistent I cannot believe this is January. Holy shit The consistency is crazy. Nothing changes. You know how like previously video would jump frames and faces and things would shift

[00:40:52] Alex Volkov: Wow, okay, so I guess we should probably talk about this. Reactions from folks. I saw LDJ wanted to come up to see the reaction I'm

[00:41:00] Far El: just wild. Honestly, it looks crazy. It looks really good quality. Better than most text to video models that I've seen.

[00:41:08] Alex Volkov: Holy shit okay, so I'm scrolling through the page, folks,

[00:41:13] Alex Volkov: those who are listening, openai.com/sora. Sora is their text-to-video model. I'm seeing a video of a model walking through a street in Japan, and the prompt is: a stylish woman walks down a Tokyo street filled with warm glowing neon and animated city signage. She wears a black leather jacket, a long red dress, and black boots. And the consistency here is insane.

[00:41:35] Alex Volkov: I do

[00:41:35] Far El: out the mammoths. Or actually go on their website. On Sora, on OpenAI's website. They've got a

[00:41:42] Far El: few examples. It's crazy. It's crazy. I've

[00:41:45] Far El: never seen a

[00:41:48] Alex Volkov: If you showed me this yesterday, Far El, and said this is generated, I would not believe you. So what happens is, now in the same video of this woman walking, they have a video camera zooming in on her eyeglasses, her face stays the same, the same consistency, and you can see the reflection in the sunglasses.

[00:42:08] Far El: Alex, you have to go on the website. There's this video where literally the prompt is reflections in the window of a train traveling through the Tokyo suburbs. And

[00:42:19] Far El: honestly, it looks, it looks like someone captured this no way this is AI

[00:42:23] Far El: generated. It's, it's crazy

[00:42:27] Alex Volkov: Wow,

[00:42:27] Alex Volkov: folks. What's the availability of this? Let's see, what do we know? So we know safety. "We'll be taking several important safety steps ahead of making Sora available in OpenAI's products," so it's not available yet. They're working with red teamers; they don't want this to be used in deepfakes for porn, obviously.

[00:42:43] Alex Volkov: That's like the first thing that the waifus are going to use it for. The C2PA metadata that, if you guys remember, we've talked about, which they started including in DALL-E, they're probably going to include here as well. And new techniques in preparation for deployment, leveraging the existing safety methods.

[00:42:56] Alex Volkov: Okay research techniques.

[00:42:58] Far El: Crazy.

[00:43:00] Alex Volkov: Consistency is crazy, right folks?

[00:43:02] Swyx: Yeah, it's not available it looks like.

[00:43:03] Swyx: Not available

[00:43:04] Swyx: yet.

[00:43:04] Swyx: To answer your question, they released some details about it being a diffusion model. They also talked about it having links to DALL-E 3, in the sense that, honestly, I don't know if people know that there was a DALL-E 3 paper, which is very, very rare in this age of not-open AI.

[00:43:23] Alex Volkov: Yeah, not

[00:43:24] Swyx: open AI.

[00:43:24] Swyx: And so they were doing this synthetic data captioning thing for the DALL-E 3 model, and they're referencing the same method for Sora. I would just go read the DALL-E 3 paper.

[00:43:37] Alex Volkov: Wow. Consistency has been the biggest problem with these. LDJ,

[00:43:41] Alex Volkov: go ahead, please. As I'm reading this and reacting, my mind is literally blown by the demo of the doggy. Hold on, LDJ, one second. There's a video of the dog walking from one window and jumping to another window, and the paws, they look like it's a real video. Folks, it literally does not look generated, like anything we've seen before.

[00:44:02] Far El: This is going to disrupt Hollywood immediately. We're talking about text-to-video disrupting media content creation and so on. This is it, this is the Midjourney moment of text-to-video, that same feeling that we had when we were able to prompt Midjourney and get some really high quality images. This is the same but for video, essentially.

[00:44:23] Alex Volkov: This breaks reality for me right now. Literally, I'm watching this video multiple times. I cannot believe that the dog's paws are not shifting into different shapes. The spots on this Dalmatian dog stay in the same place throughout the video. It don't make sense. Alright, LDJ, go. I think,

[00:44:37] Far El: Yeah so

[00:44:38] Far El: Sam here, I'll post it on the, on the ding board. Sam said that that certain select creators have access now. And, oh, I just lost the tweet. I'll, I'll get it. But yeah, he says that some creators already have access and I guess they're going to slowly expand it out to like beta users or whatever.

[00:44:59] Alex Volkov: Wow, so Sam said, we can show you what Sora can do. Please reply with captions for videos you'd like to see and we'll start making some.

[00:45:06] Alex Volkov: So

[00:45:06] Swyx: Oh yeah, basically give him some really complicated prompt, and let's, let's go, let's go.

[00:45:12] Alex Volkov: A bunch of podcasters sitting, watching Sora and reacting in real time and their heads are blown.

[00:45:17] Alex Volkov: Not literally, because this is insane. How's that for a prompt? I'm gonna post it. Hopefully some will get it.

[00:45:25] NA: Just opening a portal through Twitter, through OpenAI to the Munich and then string

[00:45:31] Alex Volkov: Oh, there's also, I don't wanna spend the rest of ThursdAI on this, 'cause we still have a bunch to talk about, folks.

[00:45:38] Alex Volkov: Is anybody not scrolling through examples right now? And you definitely should. There's an example of a

[00:45:43] Swyx: there's only nine examples.

[00:45:45] Alex Volkov: What, what

[00:45:45] Far El: This is insane.

[00:45:46] Alex Volkov: The whole, no website has a bunch of, scroll down.

[00:45:48] Alex Volkov: There's like every, every kind of example has

[00:45:51] Alex Volkov: more scrollies. So I'm looking at an example of a chameleon, which has a bunch of spots, and guys, the spots are in the same place. What the fuck? It doesn't move. It does not look like... honestly, let's do this. Everybody send this to your mom and say, hey mom, is this AI generated?

[00:46:07] Alex Volkov: Or not? Like older folks will not believe this shit, like

[00:46:10] Swyx: I, I will

[00:46:13] Far El: What's the most impressive

[00:46:14] Swyx: compare this to Google

[00:46:15] Far El: right? Like humans,

[00:46:17] Swyx: don't know, I think you guys

[00:46:18] Alex Volkov: hold on. Far El, I think we're talking over each other. Give us one sec. Swyx and then Far El.

[00:46:22] Swyx: Oh, sorry, yeah, there's a bit of a lag. Oh, no, nothing. Just compare this to Google Lumiere where they release a bunch of sample videos as well.

[00:46:29] Swyx: But you could, the, the, I was impressed by the consistency of the Lumiere demo videos. They would, they demoed sort of pouring syrup onto a pancake and then infilling the syrup and showing that, it would be pretty realistic in pouring all that syrup stuff. Didn't really see that kind of very technical test here.

[00:46:49] Swyx: But the resolution of these videos and the consistency of some of these movements between frames, and the ability to cut from scene to scene is way better. Instantly way better. I was thinking that Lumiere was, like, state of the art a few weeks ago, and now it is completely replaced by Sora.

[00:47:08] Swyx: This is a way better demo. I think OpenAI is showing Google how to ship.

[00:47:12] Alex Volkov: OpenAI decided to say, you know what, Google, you think you can one-up us with the context window?

[00:47:18] Alex Volkov: We got another thing coming, because I've

[00:47:20] Swyx: just pull up the Lumiere page, and then pull up the Sora page, and just look at them side by side, and you can see how much better they

[00:47:26] Alex Volkov: Lumiere

[00:47:26] Alex Volkov: was mind blowing as well. Go ahead, Far El. Go ahead, because we're still reacting in real time to this whole ridiculously impressive.

[00:47:32] Far El: Yeah, I was just saying that the most impressive thing is how alive these video shots feel, right? Humans talking, action scenes. All the text-to-video models that I've seen so far and used were very, very simplistic, right? It felt more like you're animating an image to do very minor movements.

[00:47:55] Far El: It wasn't actually alive in any way, but Sora's text-to-video is nuts: the quality, the consistency, the action, like the actual action of the characters. I wonder how much granular control you have on a scene-to-scene basis. I know that Google released a paper, I think a few months back, where they had basically a script that allowed for much more long form

[00:48:27] Far El: video content, but I'm not sure if that's the case here. It's just, it's just really impressive. It's, it's really impressive.

[00:48:35] Alex Volkov: I want to say, one of our friends, LaChanze, just sent this: at the bottom of the page, it says, Sora serves as a foundation model that can understand and simulate the real world. It's really hard for me to even internalize what I'm reading right now, because the simulation of the real world triggers something in me, tingles the simulation hypothesis type of thing. This can regenerate the map of the world and then zoom in and then generate all the videos.

[00:48:58] Alex Volkov: And I'm wearing this mixed, slash, augmented, slash, spatial reality headset that just generates, and this happens on the fly, and what am I actually watching here? So this says Sora serves as a foundation for models that can understand and simulate the real world, a capability we believe will be an important milestone for achieving AGI.

[00:49:15] Alex Volkov: Yeah. Alright, folks. I will say, let's do two more minutes, 'cause I can't believe we got both of them the same day. Holy shit, we got the 10 million context window announcement from Google, which is incredible, multimodal as well. My whole thing itches right now to take the videos that OpenAI generated and shove them into Gemini to see if it understands what it sees. It probably will.

[00:49:40] Alex Volkov: Wow.

[00:49:40] Far El: Thing that would make this Thursday a tiny bit even more awesome is if Meta comes out with telemetry. Too much, too much, too much.

[00:49:51] Alex Volkov: It's

[00:49:51] Alex Volkov: gonna be too much. We need, we need a second to like breathe. Yeah, definitely folks. This is literally like a singular day. Again, we've [00:50:00] had a few of those. We had one on March 14th when ThursdAI started, OpenAI released GPT-4, Anthropic released Claude, I think on the same day. We had another one when OpenAI Dev Day came about, and I think there's a bunch of other stuff.

[00:50:12] Alex Volkov: I consider this to be another monumental day. We got Gemini 1.5 with a potential 10 million context window, including incredible results in understanding multimodality in video, up to an hour of video. And then we also have some folks from Reka that are gonna come up soon and talk about their stuff. With all due respect to the Reka folks, this news seems bigger, but they still launched something super, super cool we're gonna chat about. And now we're getting, it's just, the distance. We're used to jumps, we're used to state of the art every week, we're used to this model beats this model by fine-tune, whatever, we're used to the OpenAI leaderboard, this is

[00:50:53] Alex Volkov: such a

[00:50:53] Alex Volkov: big jump on top of everything we saw.

[00:50:55] Alex Volkov: From Stable Video Diffusion. From, what are they called again? I just said their name, Runway. I always forget their name.

[00:51:02] Swyx: Poor guys.

[00:51:04] Alex Volkov: Poor Runway. From Pika Labs. From folks who are generating videos. This is just such a huge jump in capability. They're talking about 60 seconds.

[00:51:14] Alex Volkov: Oh, Meta just announced JEPA. Yeah, I don't know if JEPA is enough. People are commenting about JEPA, and I'm like, okay wait, hold

[00:51:21] Swyx: You, you spiked my heart rate when you said Meta just announced. I was like, what the fuck?

[00:51:25] Alex Volkov: the fuck? Meta literally just came out with an announcement, V-JEPA, supervised learning for videos.

[00:51:29] Alex Volkov: But, folks, unless they come out with Llama 3 and it's multimodal and it's available right now, Meta is not participating in the

[00:51:35] Swyx: thing

[00:51:36] Alex Volkov: day

[00:51:36] Far El: Oh wait, this is actually cool. So this is this is something,

[00:51:39] Far El: actually a paper they came out with like about a month ago, but this is for video understanding. So this is pretty much like for input of video, while OpenAI's model is for output of video.

[00:51:51] Alex Volkov: It just, I will say it's a research thing, right? So they're not showing anything there, unless I'm mistaken. Um So, I kinda, so I still have a bunch of stuff to give you updates for, and I still have a bunch of interviews as well, there's a new stability model, but I'm still like, blown away, and I just wanna sit here and watch the videos,

[00:52:07] Alex Volkov: Is this what Ilya saw? Yeah, somebody reacted like, what did Ilya see? Did Ilya see a generated video and the model understanding this and that's why, that's why?

[00:52:16] Far El: No, I think, I think, I think AGI has been achieved internally at

[00:52:21] Far El: this rate.

[00:52:22] Alex Volkov: Wow. I, I'm, I'm still blown away. Like I, if a model can generate this level of detail in very soon, I just wanna play with this. I wish, I wish we had some time to, to, to, I, I was one of the artists and I hope that somebody in the audience here is, and that they will come to talk about this on Thursday.

[00:52:43] Alex Volkov: And because I'm, yeah, I'm still mind blown. So I see quite a few folks that I invited that I wanna, I wanna welcome to the stage. V-JEPA understands the world while Sora generates one. That's the comment that some folks left. And okay, okay. V-JEPA is going to be something we definitely cover because Meta released this and Meta are the GOATs, even though, yeah, no, Meta's definitely GOATs. I'm just a little bit lost for words right now.

[00:53:06] Nisten Tahiraj: Yeah, so if people have watched a lot of speeches from Yann LeCun, the main idea is that these AI models are not very good at understanding the world around them or thinking in 3D. So in some ways, you could reason out that a cat is a lot more intelligent: even if it was blind and it couldn't smell, it could still figure out where to go and find its litter box, stuff like that.

[00:53:30] Nisten Tahiraj: This is one part that's missing from the world model that they get purely just from word relationships or word vectors. And so this is a step in that direction, it seems. Again, I haven't read the paper, so I'm half making stuff up here, but it feels like this is a step in that direction, towards AI models that understand what's going on like us and animals do.

[00:53:56] Nisten Tahiraj: So that, that's the main, the gist of it for, the audience.

[00:54:04] Alex Volkov: Oh, what a Thursday. What a Thursday. I gotta wonder how I'm gonna summarize all of this. And I just wanna invite, we have Yi here in the audience and I sent you a request to join. If you didn't get it, make sure that you're looking at requests and then accept. And then we should have, we should have Max as well at some point.

[00:54:20] Alex Volkov: Lemme text Max, 'cause we have guest speakers here from Reka that we wanna chat with. Meanwhile I'm gonna continue and move forward in some of the conversations. Let's roll back. Okay, while we're still super excited and I can't wait for this to come out, this is an announcement that they did.

[00:54:35] Alex Volkov: It's very polished. We didn't see any access or anything about when it's going to come out. I do feel that this is a breakthrough moment from Google and from OpenAI. And it does look like it's reactionary to an extent. The folks in OpenAI were sitting on this and saying, Hey, what's a good time to release this?

[00:54:52] Alex Volkov: And, actually now, let's steal some thunder from Google and their 10 million thing that also not many people can use. And let's show whatever we have that not many people can use, which is an interesting thing to think about, because, again, the pressure is on a bunch of other labs, on Meta, to release something. We know Llama 3 is coming at some point, will it be multimodal, will it be able to generate some stuff every

[00:55:16] NA: Really, really quick, sorry to interrupt

[00:55:18] Alex Volkov: Go

[00:55:19] NA: the thing V-JEPA seems to be good at is understanding video instructions. I guess you could point the camera to something you're doing with your hands, arts and crafts things, or repairing something, and it understands what you're doing, so that's actually very

[00:55:36] NA: powerful for the datasets of skills that will come, because then you can generate actions. I think that will apply a lot to robotics, what they're doing.

[00:55:48] Alex Volkov: Oh, alright, yeah. And they also have the Ego4D datasets of robotics as well, and they've talked about this.

[00:55:55] Nvidia releases Chat with RTX

[00:55:55] Alex Volkov: So let's go to open source, like super quick. NVIDIA released Chat with RTX for local models. And it's actually very, very cool. So a few things about Chat with RTX. First of all, NVIDIA packed a few models for you. It's a 38 gigabyte or something download. And I think they have two models packed in there.

[00:56:16] Alex Volkov: I wasn't sure which ones. And this, this is basically a, a package you download. I don't know if a doc or not. That runs on any desktop PC with RTX 30 or 40 series with at least 8 gigabytes of RAM. And it gives you a chatbot that's fully local. And we love talking about open source and local stuff as well.

[00:56:33] Alex Volkov: And not only that, they give you RAG built in. So you can actually run this on some of the documents that you have. They also have something that runs through YouTube. You can give it a YouTube playlist or a video link, and it will let you talk to the YouTube video. So it has built-in RAG, built-in TensorRT-LLM, which runs on their stuff, RTX acceleration.

[00:56:56] Alex Volkov: I think it's pretty cool. It works only on very specific types of devices, only for gamers or folks who run these things, but I think it's pretty cool that NVIDIA is releasing this. They also have something for developers as well to be able to build on top of this.

[00:57:11] Alex Volkov: And I think the last thing I'll say about this is that it's a Gradio interface, which is really funny to me, that people are shipping Gradio interfaces in production. It's super cool.

[00:57:18] Cohere releases Aya 101 12.8B LLM with 101 language understanding

[00:57:18] Alex Volkov: Cohere releases an open source model called Aya 101, a 12.8 billion parameter multilingual model that understands 101 languages. It's honestly pretty cool, because Cohere has been doing a bunch of stuff. Aya outperforms BLOOMZ and mT0 on a wide variety of automatic evaluations despite covering double the number of languages.

[00:57:41] Alex Volkov: And what's interesting as well, they released a dataset together with Aya. And then what is interesting here? Yeah, just, oh, Apache 2 license, which is super cool as well. Apache 2 license for this model. Let me invite Yi as a co host, maybe this can, join. Far El, go ahead.

[00:57:58] Alex Volkov: Did you see, do you want to talk about Aya?

[00:58:00] Far El: Yeah, first off, I appreciate and commend Cohere for building a multilingual open source dataset and so on. That's awesome. We need more of that. But unfortunately, with the first few questions that I asked, in Arabic specifically, most of the answers were complete [00:58:20] nonsense on their trained model.

[00:58:23] Far El: Yeah. And to, to the point that it's it's laughable, right? For instance in Arabic, I asked who was the who was the first nation that

[00:58:32] NA: had astronauts on the moon. I

[00:58:38] Alex Volkov: Yes.

[00:58:39] NA: think, I think you cut out for a sec.

[00:58:43] Alex Volkov: I think he dropped. I don't see him anymore.

[00:58:45] NA: He might have

[00:58:46] NA: His phone might have

[00:58:47] Alex Volkov: yeah, we're gonna have to

[00:58:48] NA: I can briefly

[00:58:50] NA: comment on it. Yeah, we're pretty happy now that Cohere has also started contributing

[00:58:56] NA: to open source, because datasets are very important. And yeah, I think the reason it wasn't performing so well in other languages is just because for some languages there wasn't enough data for it to be trained on.

[00:59:12] NA: But the beautiful thing is that it is Apache 2.0. You can just add your own language's dataset and it will literally make the whole thing better. And yeah, those are my comments on it.

[00:59:22] Interview with Yi Tay and Max Bain from Reka AI

[00:59:22] Alex Volkov: Awesome. All right, folks. So now we're moving into the interview stage, and we have quite a few folks. As one of the most favorite things that I want to do in ThursdAI, and it's been an hour since we've been here, is to actually talk with the folks who released the stuff that we're talking about.

[00:59:35] Alex Volkov: So the next thing I'm going to announce, and then we're going to talk with Yi Tay and Max, and then after that, we're going to talk with Dom as well. Earlier this week, a company named Reka AI released two models, or at least released a demo of two models, right? I don't think API is still available.

[00:59:51] Alex Volkov: We're going to talk about this as well. Called Reka Flash and Reka Edge. And Reka Flash and Reka Edge are both multimodal models that understand text, understand video, understand audio as well, which is very surprising to me. And I had a thread where I just geeked out and my head was blown by the level of understanding of multimodality.

[01:00:09] Alex Volkov: And I think some of the folks here had talked about... Sorry, let me reset. Some of the folks here on stage have worked on these multimodal models. And so with this I want to introduce Yi Tay and Max Bain. Please feel free to unmute and introduce yourself briefly and then we're going to talk about some Reka stuff.

[01:00:25] Alex Volkov: Yi first maybe and then Max.

[01:00:27] Yi Tay: Yeah, thanks thanks Alex for inviting me here. Can people hear me actually?

[01:00:31] Alex Volkov: Yeah, we can hear you

[01:00:32] Yi Tay: Okay, great, great. Because this is the first, hey, this is my first time using Spaces, so yeah, trying to figure out how to use it. But thanks for the invite, Alex, and so I'll just introduce myself. I'm Yi Tay, and I'm one of the co-founders of Reka AI.

[01:00:45] Yi Tay: We're like a new startup in the LLM space. We train multimodal models. Previously I worked at Google Brain working on Flan, stuff like that. So yeah, that's just a short introduction about myself. And maybe Max, do you want to introduce yourself? Yeah,

[01:00:59] Alex Volkov: Yeah, Max, go ahead, please.

[01:01:00] Max Bain: thanks Yi. Yeah.

[01:01:01] Max Bain: Thanks Alex for having me. So yeah, as you said, I'm part of Reka. I joined more recently, like six months ago. I just finished my PhD, and that was all on video, audio, speech understanding. I've done a bit of work in open source. So if you use WhisperX, that was something I worked on, and yeah, now working more as part of Reka and really enjoying it.

[01:01:22] Max Bain: yeah, that's pretty much