Hey, it's Alex. OK, so my mind is officially blown. I was sure this week was going to be wild, but I didn't expect everyone besides OpenAI to pile on too, right on ThursdAI.

I'm coming back from Dev Day (number 2) and still processing it. I wanted to do an actual recap by humans, not just the NotebookLM one I posted during the keynote itself (which was awesome, and scary in a "will AI replace me as a podcaster" kind of way), and it was incredible to have Simon Willison, who was sitting just behind me for most of Dev Day, join me for the recap!

But then the news kept coming: OpenAI released Canvas, a whole new way of interacting with ChatGPT; BFL released a new Flux version that's 6x faster; Rev released a Whisper-killer ASR model that does diarization; and Google released Gemini 1.5 Flash 8B and said that with prompt caching (which OpenAI now also has, yay) it will cost a whopping $0.01 / Mtok. That's 1 cent per million tokens, for a multimodal model with a 1 million token context window. 🤯

This whole week was crazy: last ThursdAI, right after finishing the newsletter, I went to meet tons of folks at AI Tinkerers in Seattle and did a little EvalForge demo (which you can see here). I wanted to share EvalForge with you as well; it's early but very promising, so feedback and PRs are welcome!

WHAT A WEEK! Here's the TL;DR for those who want the links, and then let's dive in 👇

OpenAI - Dev Day Recap (Alex, Simon Willison)

Recap of Dev Day

RealTime API launched

Prompt Caching launched

Model Distillation is the new finetune

Finetuning 4o with images (Skalski guide)

Fireside chat Q&A with Sam

Open Source LLMs

NVIDIA finally releases NVLM (HF)

This Week's Buzz

Alex discussed his EvalForge demo at the AI Tinkerers event in Seattle in "This Week's Buzz" (Demo, EvalForge, AI Tinkerers).

Big Companies & APIs

Voice & Audio

AI Art & Diffusion & 3D

The day I met Sam Altman / Dev Day recap

Last Dev Day (my coverage here) was a "singular" day in AI for me, given it also had the "keep AI open source" event with Nous Research and Grimes. This Dev Day, I was delighted to find that the vibe was completely different: it focused less on bombastic announcements or models and more on practical, dev-focused things.

This meant that OpenAI cherry-picked folks who actively develop with their tools, and they didn't invite traditional media, only folks like yours truly, @swyx from Latent Space, Rowan from Rundown, Simon Willison and Dan Shipper: you know, newsletter and podcast folks who actually build!

This also meant that many, many OpenAI employees who work on the products and APIs we get to use were there to receive feedback, help folks with prompting, and generally interact with devs and build that community. I want to shout out my friends Ilan (who was in the keynote as the strawberry salesman interacting with the RealTime API agent); Will DePue from the Sora team, with whom I had an incredible conversation about the ethics and legality of projects; Christine McLeavey, who runs the Audio team, with whom I shared a video of my daughter crying when ChatGPT didn't understand her; Katia, Kevin and Romain on the incredible DevEx/DevRel team; and finally, my new buddy Jason, who does infra, was fighting bugs all day, and only joined the pub after shipping RealTime to all of us.

I've collected all these folks in a convenient and super high-signal X list here, so definitely give that list a follow if you'd like to tap into their streams.

For the actual announcements, I've already covered them in my Dev Day post here (which was for paid subscribers only but is now open to all), and Simon did an incredible summary on his Substack as well.

The highlights were definitely the new RealTime API, which lets developers build with Advanced Voice Mode; Prompt Caching, which happens automatically and cuts the price of cached input tokens in your long-context API calls by a whopping 50%; and finetuning, which they are rebranding as Distillation and adding new tools to make easier (including Vision Finetuning for the first time!).
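To make that caching discount concrete, here's a minimal Python sketch. It assumes caching reuses only an exact shared prefix of the prompt (which is why static content like a long system prompt should go first) and that cached input tokens are billed at half price. The function names, the word-level "tokens" and the per-million price are hypothetical placeholders, and it ignores extra rules a real service may apply (such as a minimum prompt length).

```python
def shared_prefix_len(previous: list[str], current: list[str]) -> int:
    """Count leading tokens two prompts share; only an exact prefix can be reused."""
    count = 0
    for prev_tok, cur_tok in zip(previous, current):
        if prev_tok != cur_tok:
            break
        count += 1
    return count


def input_cost_usd(total_tokens: int, cached_tokens: int,
                   price_per_mtok: float, cached_discount: float = 0.5) -> float:
    """Input cost when `cached_tokens` of the prompt are billed at a discount.

    The 0.5 default mirrors the 50% figure above; price_per_mtok is a hypothetical placeholder.
    """
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")
    uncached = total_tokens - cached_tokens
    billable = uncached + cached_tokens * (1 - cached_discount)
    return billable * price_per_mtok / 1_000_000


# Hypothetical example: a long static system prompt followed by a short user question.
system = ["rule"] * 5_000                    # stand-in for 5,000 system-prompt tokens
first = system + ["what", "is", "o1"]
second = system + ["what", "is", "canvas"]

cached = shared_prefix_len(first, second)    # system prompt plus "what", "is"
print(cached)  # 5002
print(input_cost_usd(len(second), 0, price_per_mtok=2.50))       # no cache hit
print(input_cost_usd(len(second), cached, price_per_mtok=2.50))  # prefix cached
```

Putting the question at the end, after everything that stays the same between calls, is what lets almost the whole prompt land in the cheaper bucket.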

Meeting Sam Altman

While I didn't get a "media" pass or anything like that, and didn't really get to sit down with OpenAI execs (see Swyx on Latent Space for those conversations), I did have a chance to ask Sam multiple things.

First, at the closing fireside chat between Sam and Kevin Weil (CPO at OpenAI), Kevin asked Sam a bunch of questions, then they handed the microphones out to the audience, and I asked the only question that got Sam to smile.

Sam and Kevin went on for a while, and that Q&A was actually very interesting, so much so that I recruited my favorite NotebookLM podcast hosts to go through it and give you an overview. So here's that NotebookLM episode, with the transcript of the whole Q&A (maybe I'll publish it as a standalone episode? LMK in the comments).

After the official day was over, there was a reception, at the same gorgeous Fort Mason location, with drinks and light food, and as you might imagine, this was great for networking.

But the real post-Dev Day event was hosted by OpenAI devs at a bar, Palm House, where both Sam and Greg Brockman simply showed up and hung out with folks. I'd missed Sam last time and was very eager to ask him follow-up questions this time, and there he was, just chilling at the bar and talking to devs, as though he hadn't "just" completed the largest funding round in VC history ($6.6B at a $157B valuation) and gone through a lot of drama/turmoil with the departure of much of OpenAI's senior leadership!

Sam was awesome to briefly chat with, though as you might imagine it was loud and tons of folks wanted selfies. Still, we did discuss how AI affects the real world (job replacement came up) and how developers are using OpenAI's products.

What we learned, thanks to Sigil, is that o1 was named partly as a "reset", as the main blog post claimed, and partly after "alien of extraordinary ability", which is the official designation of the O-1 visa, and that Sam came up with this joke himself.

Is anyone here smarter than o1? Do you think you still will by o2?

One of the highest-impact questions came from Sam himself, directed at the audience.

Who feels like they've spent a lot of time with O1, and they would say, like, I feel definitively smarter than that thing?

— Sam Altman

When Sam first asked this, a few hands hesitantly went up. He then followed up with:

Do you think you still will by O2? No one. No one taking the bet.

One of the challenges that we face is like, we know how to go do this thing that we think will be like, at least probably smarter than all of us in like a broad array of tasks

This was a very palpable moment: folks looked around and realized what OpenAI folks have probably internalized a long time ago. We're living in INSANE times, and even those of us at the frontier of research, AI use and development don't necessarily understand or internalize how WILD the next few months and years will be.

And then we all promptly forgot to have an existential crisis about it, and took our self-driving Waymos to meet Sam Altman at a bar 😂

This Week's Buzz from Weights & Biases

Hey so... after finishing ThursdAI last week, I went to the Seattle AI Tinkerers event and gave a demo (and sponsored the event with a raffle of Meta Ray-Bans). I demoed our project called EvalForge, whose frontend I built while my colleague Anish built the backend, as we tried to replicate the "Who Validates the Validators?" paper by Shreya Shankar. Here's that demo, and the EvalForge GitHub for the many of you who asked to see it.

Please let me know what you think! I love doing demos and would love feedback and ideas for the next one (coming up in October!)

OpenAI ChatGPT Canvas - a completely new way to interact with ChatGPT

Just 2 days after Dev Day, and as breaking news during the show, OpenAI also shipped a new way to interact with ChatGPT, called Canvas!

Get ready to say goodbye to simple chats and hello to a whole new era of AI collaboration! Meet Canvas, a groundbreaking interface that transforms ChatGPT into a true creative partner for writing and coding projects. Imagine having a tireless copy editor, a brilliant code reviewer, and an endless source of inspiration all rolled into one: that's Canvas!

Canvas moves beyond the limitations of a simple chat window, offering a dedicated space where you and ChatGPT can work side-by-side. Canvas opens in a separate window, allowing for a more visual and interactive workflow. You can directly edit text or code within Canvas, highlight sections for specific feedback, and even use a handy menu of shortcuts to request tasks like adjusting the length of your writing, debugging code, or adding final polish. And just like with your favorite design tools, you can easily restore previous versions using the back button.

Per Karina, OpenAI trained a special GPT-4o model specifically for Canvas, enabling it to understand the context of your project and provide more insightful assistance. They used synthetic data generated by o1, which led the new model to outperform the base GPT-4o by 30% in accuracy.

A general pattern is emerging: new frontiers in intelligence are also advancing older models (and humans as well).

Gemini Flash 8B makes intelligence essentially free

Google folks were not about to take this week lightly and decided to hit back with one of the most insane pricing upgrades I've seen. The newly announced Gemini 1.5 Flash 8B is going to cost just... $0.01 per million tokens 🤯 (when using caching; 3 cents when not cached).

This basically makes intelligence free. And while it's (almost) free, it's still a multimodal model (it supports images) with a HUGE context window of 1M tokens.
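For a sense of scale, here's a back-of-the-envelope Python sketch using the input prices quoted above ($0.01 per million tokens cached, 3 cents uncached) and the 1M-token context window. It counts input tokens only; output tokens and any cache storage fees are deliberately left out, and the helper name is hypothetical.

```python
CACHED_USD_PER_MTOK = 0.01     # input price quoted above, with caching
UNCACHED_USD_PER_MTOK = 0.03   # input price quoted above, without caching
CONTEXT_WINDOW = 1_000_000     # tokens


def gemini_input_cost_usd(tokens: int, cached: bool) -> float:
    """Input-only cost for `tokens` tokens; ignores output tokens and cache storage fees."""
    rate = CACHED_USD_PER_MTOK if cached else UNCACHED_USD_PER_MTOK
    return tokens / 1_000_000 * rate


# Ask 100 questions, each over a completely full 1M-token context.
questions = 100
total_tokens = questions * CONTEXT_WINDOW
print(f"cached:   ${gemini_input_cost_usd(total_tokens, cached=True):.2f}")   # cached:   $1.00
print(f"uncached: ${gemini_input_cost_usd(total_tokens, cached=False):.2f}")  # uncached: $3.00
```

In other words, at these input prices a hundred full-context passes cost about a dollar with caching.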

The evals look ridiculous as well: this 8B-parameter model now almost matches Flash from May of this year, less than 6 months ago, while giving developers 2x the rate limits and lower latency.

What will you do with free intelligence? What will you do with free intelligence of o1 quality in a year? What about o2 quality in 3 years?

Bye Bye Whisper? Rev open-sources Reverb and Reverb Diarize + turbo models (Blog, HF, GitHub)

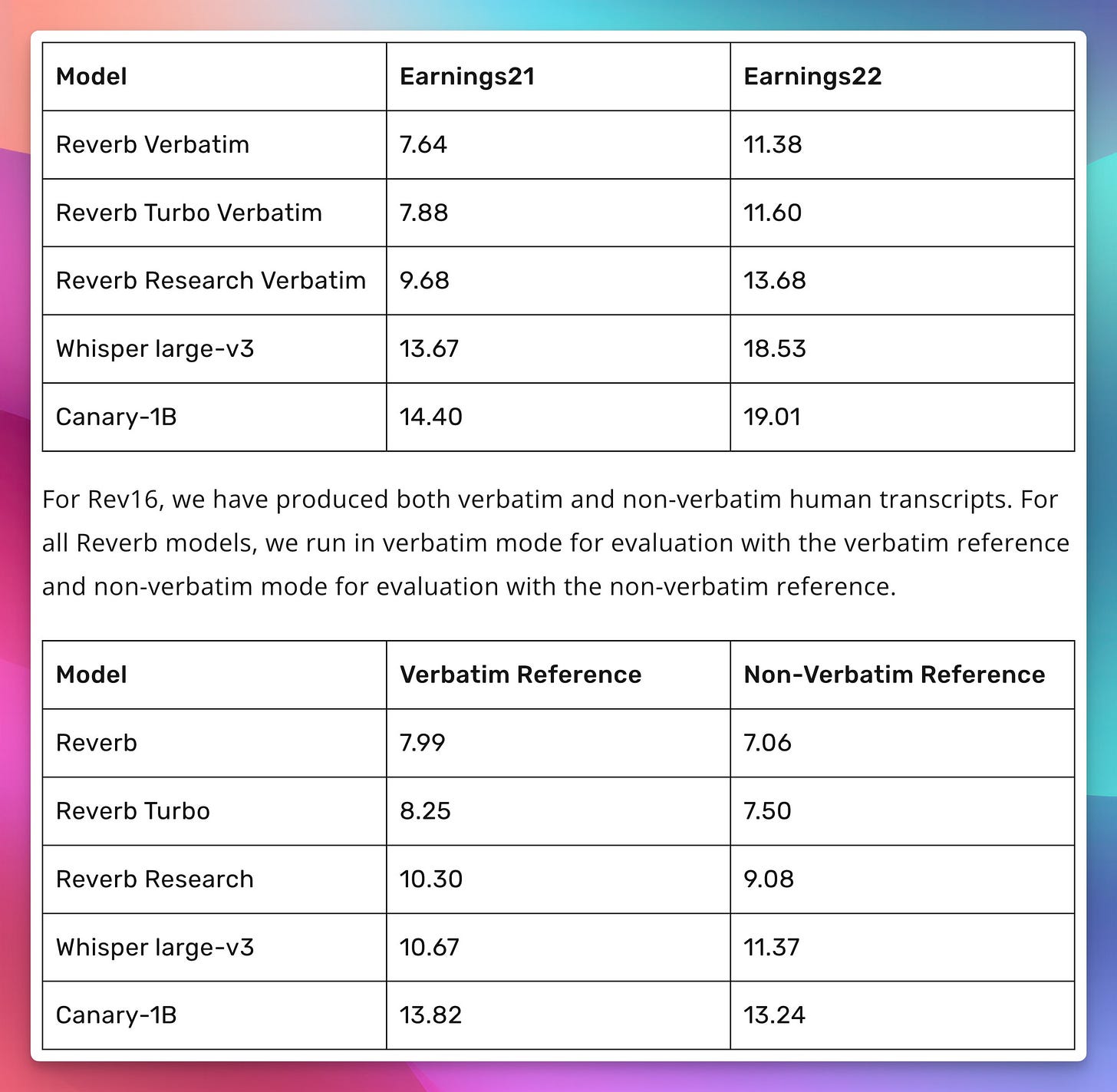

In a "WTF just happened" breaking-news moment, a company called Rev.com released what they consider a SOTA ASR model that obliterates Whisper (English only for now) on metrics like WER (word error rate), and includes a dedicated diarization model.

Trained on 200,000 hours of English speech expertly transcribed by humans (which, according to their claims, is the largest human-transcribed dataset any ASR model has been trained on), it achieves some incredible results that blow Whisper out of the water (lower WER is better).
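Since WER is the headline metric here, this is a minimal Python sketch of how it's usually computed: the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the model's output, divided by the number of reference words. It's the textbook definition, not Rev's evaluation code, and it skips the text normalization (casing, punctuation) that real benchmarks typically apply first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))  # about 0.33
```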

They also released a seemingly incredible diarization model, which figures out who speaks when (and is usually added on top of Whisper).

For diarization, Rev used the high-performance pyannote.audio library to fine-tune existing models on 26,000 hours of expertly labeled data, significantly improving their performance.
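Because diarization usually gets layered onto a transcript, here's a minimal Python sketch of one common way to combine the two: give each transcribed word the speaker whose diarization turn overlaps it most in time. The `Word`/`Turn` classes, speaker labels and timestamps are hypothetical; this is a generic approach, not how Rev or pyannote.audio actually merge their outputs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Word:   # hypothetical ASR output: one word with start/end times in seconds
    text: str
    start: float
    end: float


@dataclass
class Turn:   # hypothetical diarization output: one speaker turn in seconds
    speaker: str
    start: float
    end: float


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the time overlap between two intervals (0 if they don't overlap)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(words: list[Word], turns: list[Turn]) -> list[tuple[Optional[str], str]]:
    """Label each word with the speaker whose turn overlaps it most, or None if no turn does."""
    labeled = []
    for word in words:
        best_speaker, best_overlap = None, 0.0
        for turn in turns:
            o = overlap(word.start, word.end, turn.start, turn.end)
            if o > best_overlap:
                best_speaker, best_overlap = turn.speaker, o
        labeled.append((best_speaker, word.text))
    return labeled


turns = [Turn("SPEAKER_A", 0.0, 2.0), Turn("SPEAKER_B", 2.0, 4.0)]
words = [Word("hi", 0.1, 0.4), Word("there", 0.5, 0.9), Word("hello", 2.2, 2.6)]
print(assign_speakers(words, turns))
# [('SPEAKER_A', 'hi'), ('SPEAKER_A', 'there'), ('SPEAKER_B', 'hello')]
```

Words that fall in a gap between turns come back labeled `None`; a fuller pipeline might snap them to the nearest turn instead.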

While this is English only, getting a SOTA transcription model in the open is remarkable. Rev opened up this model on Hugging Face under a non-commercial license, so folks can play around with it (and distill it?), while also making it available very cheaply through their API, as well as a self-hosted solution in a Docker container.

Black Forest Labs serving up blueberries - new Flux 1.1 [pro] is here (Blog, Try It)

What is a ThursdAI without multiple SOTA advancements in all fields of AI? As if to prove the point, the folks behind FLUX revealed that the mysterious 🫐 model that was trending on some image comparison leaderboards is in fact a new version of Flux Pro, specifically 1.1 [pro].

FLUX1.1 [pro] provides six times faster generation than its predecessor FLUX.1 [pro] while also improving image quality, prompt adherence, and diversity

Just a bit over 2 months since the initial release, and proving that they are THE frontier lab for image diffusion models, the folks at BFL are dropping a model that outperforms their previous one on user votes and quality, while being much faster!

They have partnered with Fal, Together and Replicate to distribute this model (it's not on X quite yet), and are now also offering developers direct access through their own API at a competitive price of just 4 cents per image generation (while being faster AND cheaper AND higher quality than the previous Flux 😮). You can try it out on Fal here.

Phew! What a whirlwind! Even I need a moment to catch my breath after that AI news tsunami. But don’t worry, the conversation doesn't end here. I barely scratched the surface of these groundbreaking announcements, so dive into the podcast episode for the full scoop: Simon Willison’s insights on OpenAI’s moves are pure gold, and Maxime Labonne spills the tea on Liquid AI's audacious plan to dethrone transformers (yes, you read that right). And for those of you who prefer skimming, check out my Dev Day summary (open to all now). As always, hit me up in the comments with your thoughts. What are you most excited about? Are you building anything cool with these new tools? Let's keep the conversation going!

Alex