Hey there, Alex here with an end-of-summer edition of our show, which did not disappoint. Today is the official anniversary of Stable Diffusion 1.4, can you believe it?

It's the second week in a row that we have an exclusive LLM launch on the show (after Emozilla announced Hermes 3 on last week's show), and spoiler alert, we may have something cooking for next week as well!

This edition of ThursdAI is brought to you by W&B Weave, our LLM observability toolkit, which lets you easily evaluate LLMs for your own use case.

Also this week, we covered both ends of AI progress: a doomerist CEO saying "Fck Gen AI" vs. an 8yo coder. And I continued to geek out on putting myself into memes (I promised I'll stop... at some point). So buckle up, let's take a look at another crazy week:

TL;DR

Open Source LLMs

AI Art & Diffusion & 3D

Big CO LLMs + APIs

This week's Buzz

Weights & Biases Judgement Day SF Hackathon on September 21-22 (Sign up to hack)

Video

Hotshot - new video model - trained by 4 guys (try it, technical deep dive)

Tools & Others

The Best of the Best: Open Source Wins with Jamba, Phi 3.5, and Surprise Function Calling Heroes

We kick things off this week by focusing on what we love the most on ThursdAI, open-source models! We had a ton of incredible releases this week, starting off with something we were super lucky to have live, the official announcement of AI21's latest LLM: Jamba.

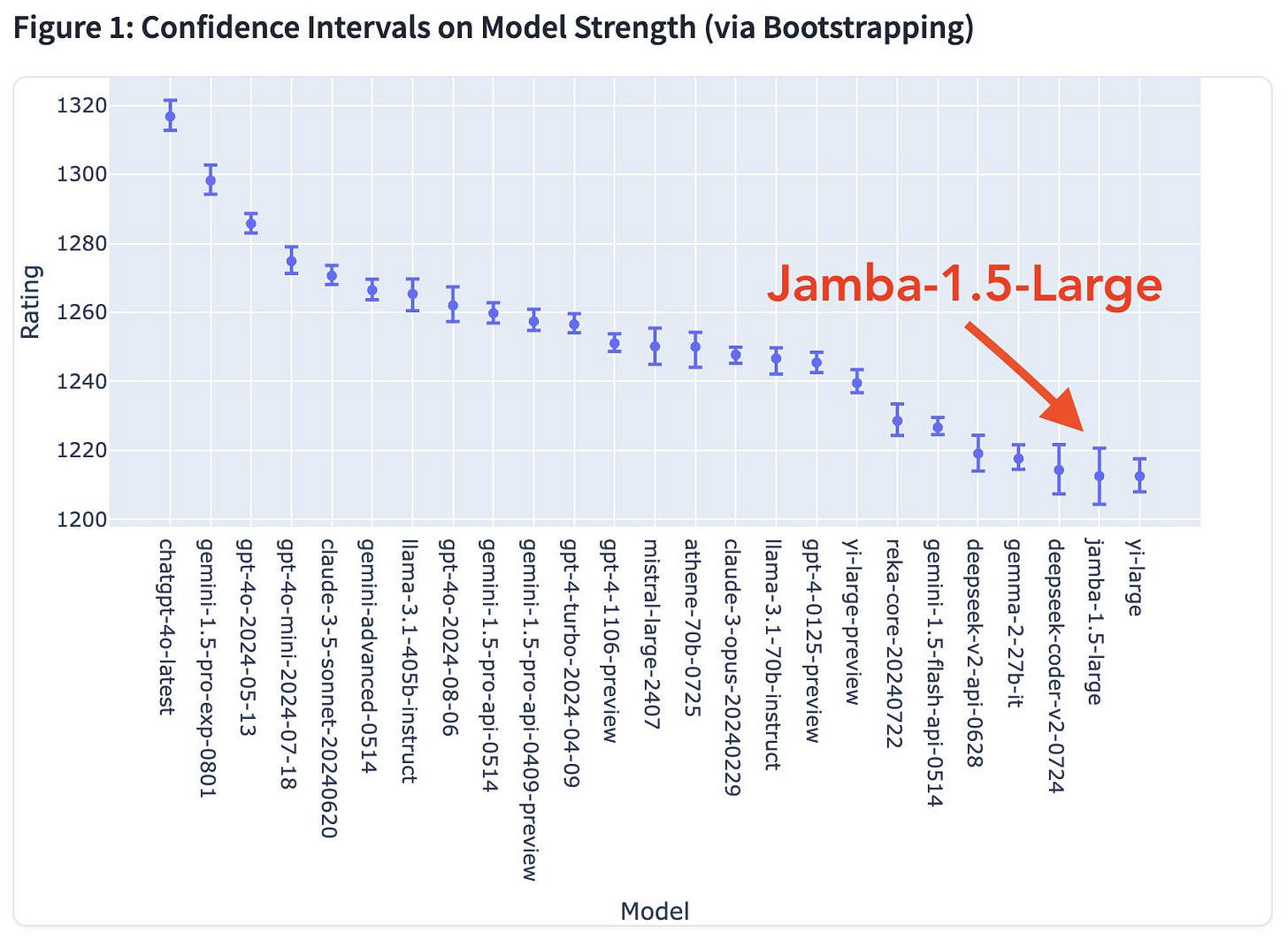

AI21 Officially Announces Jamba 1.5 Large/Mini – The Powerhouse Architecture Combines Transformer and Mamba

While we covered the original Jamba release on the show back in April, Jamba 1.5 is an updated powerhouse. It comes as 2 models, Large and Mini. Both are MoE, and both are still a hybrid architecture of Transformers + Mamba that tries to get the best of both worlds.

Itay Dalmedigos, technical lead at AI21, joined us on the ThursdAI stage for an exclusive first look, giving us the full rundown on this developer-ready model with an awesome 256K context window, but it's not just the size – it’s about using that size effectively.

AI21 measured the effective context use of their models on the new RULER benchmark released by NVIDIA, an iteration on the needle-in-a-haystack test, and showed that their models fully utilize their context, as opposed to many other models.

“As you mentioned, we’re able to pack many, many tokens on a single GPU. Uh, this is mostly due to the fact that we are able to quantize most of our parameters,” Itay explained, diving into their secret sauce: ExpertsInt8, a novel quantization technique specifically designed for MoE models.

Oh, and did we mention Jamba is multilingual (eight languages and counting) and natively supports structured JSON, function calling, document digestion… basically everything developers dream of. They even chucked in citation generation. Since its long context can contain full documents, your RAG app may not even need to chunk anything, and the citations can point to full documents!

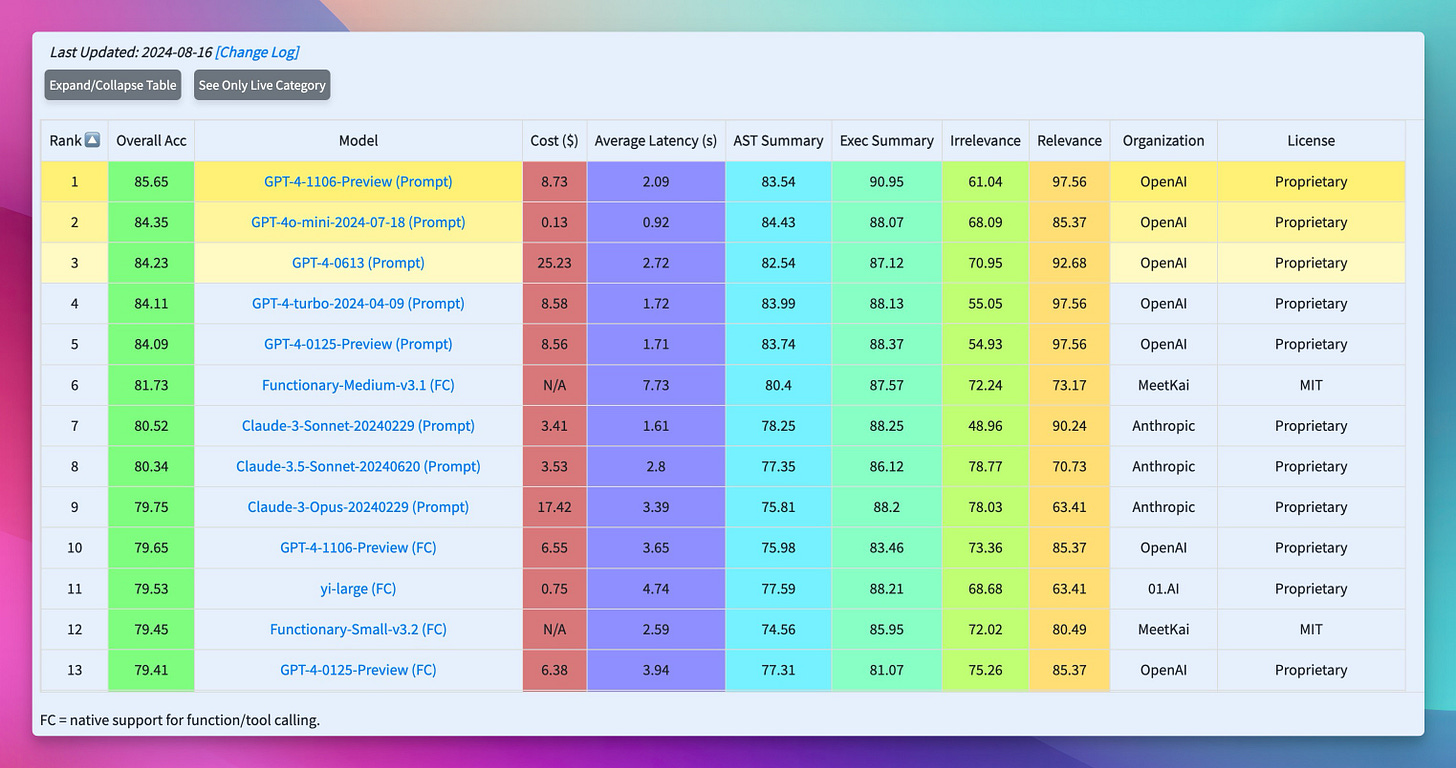

Berkeley Function Calling Leaderboard V2: Updated + Live (link)

Ever wondered how to measure the real-world magic of those models boasting "I can call functions! I can do tool use! Look how cool I am!" 😎? Enter the Berkeley Function Calling Leaderboard (BFCL) 2, a battleground where models clash to prove their function calling prowess.

Version 2 just dropped, and this ain't your average benchmark, folks. It's armed with a "Live Dataset", a dynamic, user-contributed treasure trove of real-world queries, rare function documentation, and specialized use cases spanning multiple languages. Translation: NO more biased, contaminated datasets. BFCL 2 is as close to the real world as it gets.

So, who’s sitting on the Function Calling throne this week? Our old friend Claude 3.5 Sonnet, with an impressive score of 73.61. But breathing down its neck is GPT-4-0613 (the OG Function Calling master) with 73.5. That's right, the one released a year ago, the first one with function calling, in fact the first LLM with function calling as a concept, IIRC!

Now, prepare for the REAL plot twist. The top-performing open-source model isn’t some big-name, resource-heavy behemoth. It’s a tiny little underdog called Functionary Medium 3.1, a finetuned version of Llama 3.1 that blew everyone away. It even outscored both versions of Claude 3 Opus AND GPT-4, leaving folks scrambling to figure out WHO created this masterpiece.

“I’ve never heard of this model. It's MIT licensed from an organization called MeetKai. Have you guys heard about Functionary Medium?” I asked, echoing the collective bafflement in the space. Yep, turns out there’s gold hidden in the vast landscape of open source models, just waiting to be unearthed ⛏️.
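If you haven't played with function calling yourself, here is a minimal sketch of the idea these leaderboards are testing: the model emits a structured request (a function name plus arguments), and your app runs the matching function. Everything here is an assumption made up for illustration: the `get_weather` tool, the JSON shape, and the hard-coded "model output" string. Real providers each have their own request and response formats, and this is not how BFCL itself scores models.

```python
import json

# Hypothetical tool the app exposes to the model (name and fields are invented).
def get_weather(city: str, unit: str = "celsius") -> str:
    return f"22 degrees {unit} in {city}"

# Registry mapping the function names a model may request to local callables.
TOOLS = {"get_weather": get_weather}

def dispatch(model_output: str) -> str:
    """Parse a function-call JSON string and run the matching local function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"model asked for unknown function: {name}")
    return TOOLS[name](**call["arguments"])

# Stand-in for what a function-calling model might emit (assumed format).
fake_model_output = '{"name": "get_weather", "arguments": {"city": "Denver"}}'

if __name__ == "__main__":
    print(dispatch(fake_model_output))
```

The hard part a benchmark has to measure is whether the model picks the right function and fills in valid arguments from a messy real-world query. The dispatch side, as you can see, is the easy bit.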

Microsoft updates Phi 3.5 - 3 new models including an MoE + MIT license

Three new Phi models dropped this week, including an MoE one and a newly revamped vision one. They look very decent on benchmarks yet again, with the mini version (3.8B) seemingly beating Llama 3.1 8B on a few of them.

However, as with previous releases, the excitement is met with caution: Phi models tend to look great on benchmarks, but folks are usually less impressed once they actually talk with them.

Terry from BigCodeBench also saw a significant decrease in coding ability for Phi 3.5 vs 3.1.

Of course, we're not complaining: the models were released with 128K context and an MIT license.

The thing I'm most excited about is the vision model. It has been updated with "multi-frame image understanding and reasoning", which is a big deal! This means understanding videos more natively across scenes.

This week's Buzz

Hey, if you're reading this while sitting in the Bay Area, and you don't have plans for exactly a month from now, why don't you come and hack with me? (Register Free)

Announcing the first W&B hackathon, Judgement Day, which is going to be focused on LLM as a judge! Come hack on innovative LLM-as-a-judge ideas, UIs, evals and more, meet other like-minded hackers and AI engineers, and win great prizes!

🎨 AI Art: Ideogram Crowns Itself King, Midjourney Joins the Internet & FLUX everywhere

While there was little news from the big LLM labs this week, there is a LOT of AI art news, which is a fitting way to celebrate the 2-year anniversary of Stable Diffusion 1.4!

👑 Ideogram v2: Text Wizardry and API Access (But No Loras… Yet?)

With significantly improved realism, and likely the best text generation across all models out there, Ideogram v2 just took over the AI image generation game! Just look at that text sharpness!

They now offer a selection of styles (Realistic, Design, 3D, Anime) and any aspect ratio you'd like. Brands can also now provide color palettes to control the outputs!

Adding to this is a new API offering (.8c per image for the main model, .5c for the new turbo model of v2!) and a new iOS app. They also added the option (for premium users only) to search through a billion generations and their prompts, which is a great offering as well, as sometimes you don't even know what to prompt.

They claim a significant improvement over Flux[pro] and DALL-E 3 in text, alignment and overall quality. Interesting that MJ was not compared!

Meanwhile, Midjourney finally launched a website and a free tier, so no longer do you have to learn to use Discord to even try Midjourney.

Meanwhile Flux enjoys the fruits of Open Source

While Ideogram and MJ fight it out for the closed-source crown, Black Forest Labs enjoys the fruits of having released their weights in the open.

Fal just released an update that makes LORAs run 2.5x faster and 2.5x cheaper, and CivitAI already has LORAs for pretty much every character and celebrity ported to FLUX. Different techniques like ControlNet Unions, IPAdapters and more are being trained as we speak, and tutorials upon tutorials are being released on how to customize these models, for free (shoutout to my friend Matt Wolfe for this one).

You can now train your own face on fal.ai, replicate.com and astria.ai. Thanks to Astria, I was able to find some old generations of my LORAs from the 1.5 days (not quite 1.4, but still, enough to show the difference between then and now) and whoa.

🤔 Is This AI Tool Necessary, Bro?

Let’s end with a topic that stirred up a hornet's nest of opinions this week: Procreate, a beloved iPad design app, publicly declared their “fing hate” for Generative AI.

Yeah, you read that right. Hate. The CEO, in a public statement, went FULL scorched earth, proclaiming that AI-powered features would never sully the pristine code of their precious app.

“Instead of trying to bridge the gap, he’s creating more walls", Wolfram commented, echoing the general “dude… what?” vibe in the space. “It feels marketeerial”, I added, pointing out the obvious PR play (while simultaneously acknowledging the very REAL, very LOUD segment of the Procreate community that cheered this decision).

Here’s the thing: you can hate the tech. You can lament the potential demise of the human creative spark. You can rail against the looming AI overlords. But one thing’s undeniable: this tech isn't going anywhere.

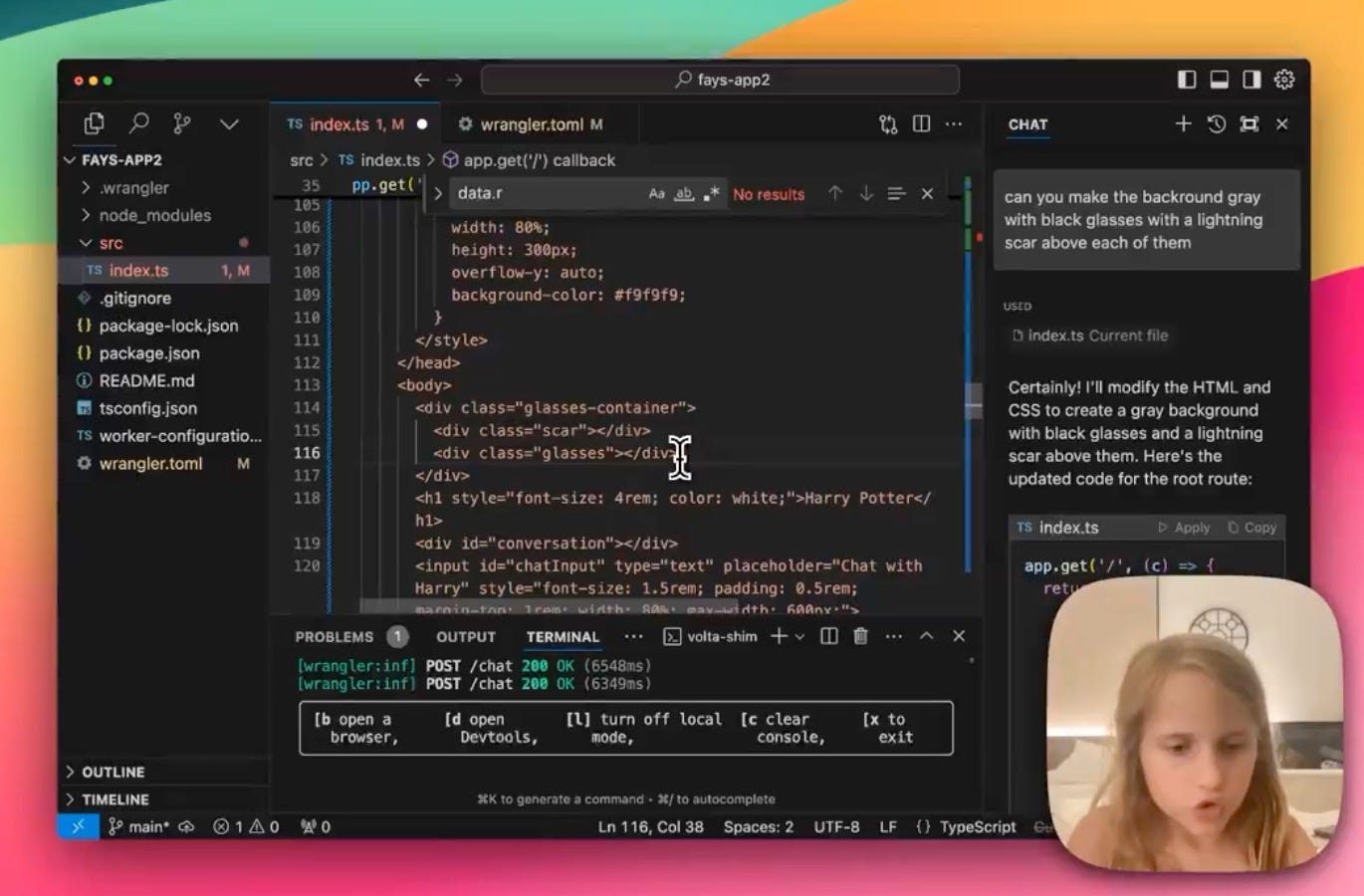

Meanwhile, 8yo coders lean fully into AI

As a contrast to this doomerist take, just watch this video of Ricky Robinette's eight-year-old daughter building a Harry Potter website in 45 minutes, using nothing but a chat interface in Cursor. No coding knowledge. No prior experience. Just prompts and the power of AI ✨.

THAT’s where we’re headed, folks. It might be terrifying. It might be inspiring. But it’s DEFINITELY happening. Better to understand it, engage with it, and maybe try to nudge it in a positive direction than to bury your head in the sand and mutter “I bleeping hate this progress” like a cranky, Luddite hermit. Just sayin' 🤷♀️.

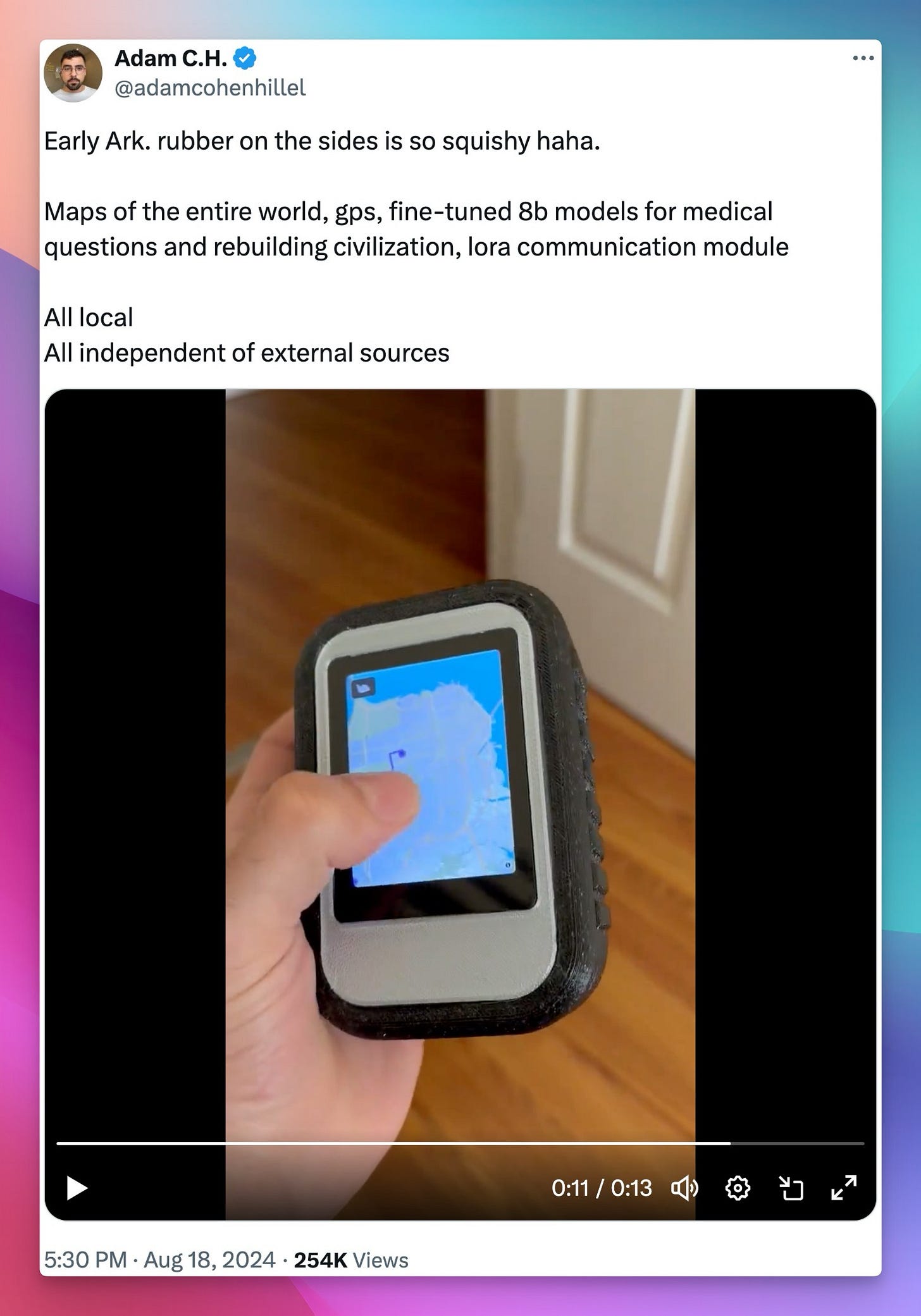

AI Device to reboot civilization (if needed)

I was scrolling through my feed (as I do VERY often, to bring you this every week) when I saw this, and I very quickly decided to invite the author to the show to talk about it.

Adam Cohen Hillel has prototyped an AI hardware device, but this one isn't trying to record you or be your friend. No, this one comes with offline LLMs finetuned on health and bio information, survival tactics, and all of the world's maps, and it works completely offline!

This to me was a very exciting use for an LLM: a distilled version of all human knowledge, buried in a Faraday cage, with replaceable batteries, that runs on solar and can help you survive in case something bad happens, like really bad (think a solar flare that takes out the electrical grid, or an EMP device). While that's improbable, I thought this was a great idea and had a nice chat with the creator. You should definitely give this one a listen, and if you want to buy one, he is going to sell them soon here

This is it for this week. There have been a few updates from the big labs: OpenAI has opened fine-tuning for GPT-4o (and you can use your WandB API key in there to track those runs, which is cool), the Gemini API now accepts incredibly large PDF files (up to 1000 pages), and Grok 2 is finally on X (the full model, not the mini from last week).

See you next week (we will have another deep dive!)