Hey everyone, Alex here 👋

This week is a very exciting one in the world of AI news: we got 3 SOTA models (one in overall LLM rankings, one in OSS coding and one in OSS voice), plus a bunch of breaking news during the show, which we reacted to live on the pod. And since we're now doing video, you can watch us freak out in real time at 59:32!

This week there was also a debate online about whether deep learning (and "scale is all you need") has hit a wall, with publications citing folks like Ilya Sutskever as claiming it has, and folks like Yann LeCun calling "I told you so".

TL;DR? Multiple huge breakthroughs later, both Oriol from DeepMind and Sam Altman are saying "what wall?", Heiner from X.ai is saying "skill issue", and there are no walls in sight, despite how much some tech journalists love to pretend there are. Also, what happened to Yann? 😵‍💫

Ok, back to our regularly scheduled programming: here's a breakdown of the most important things from today's update, and as always, I encourage you to watch / listen to the show, as we cover way more than I summarize here 🙂

All the links and the TL;DR are at the bottom of the post (in a shameless effort to get you to read/watch), and a reminder that this whole ThursdAI is sponsored by Weights & Biases Weave, which now has JS/TS support!

🔥 Open Source - Qwen 2.5 Coder new SOTA coder king (HF, Blog, Tech Report)

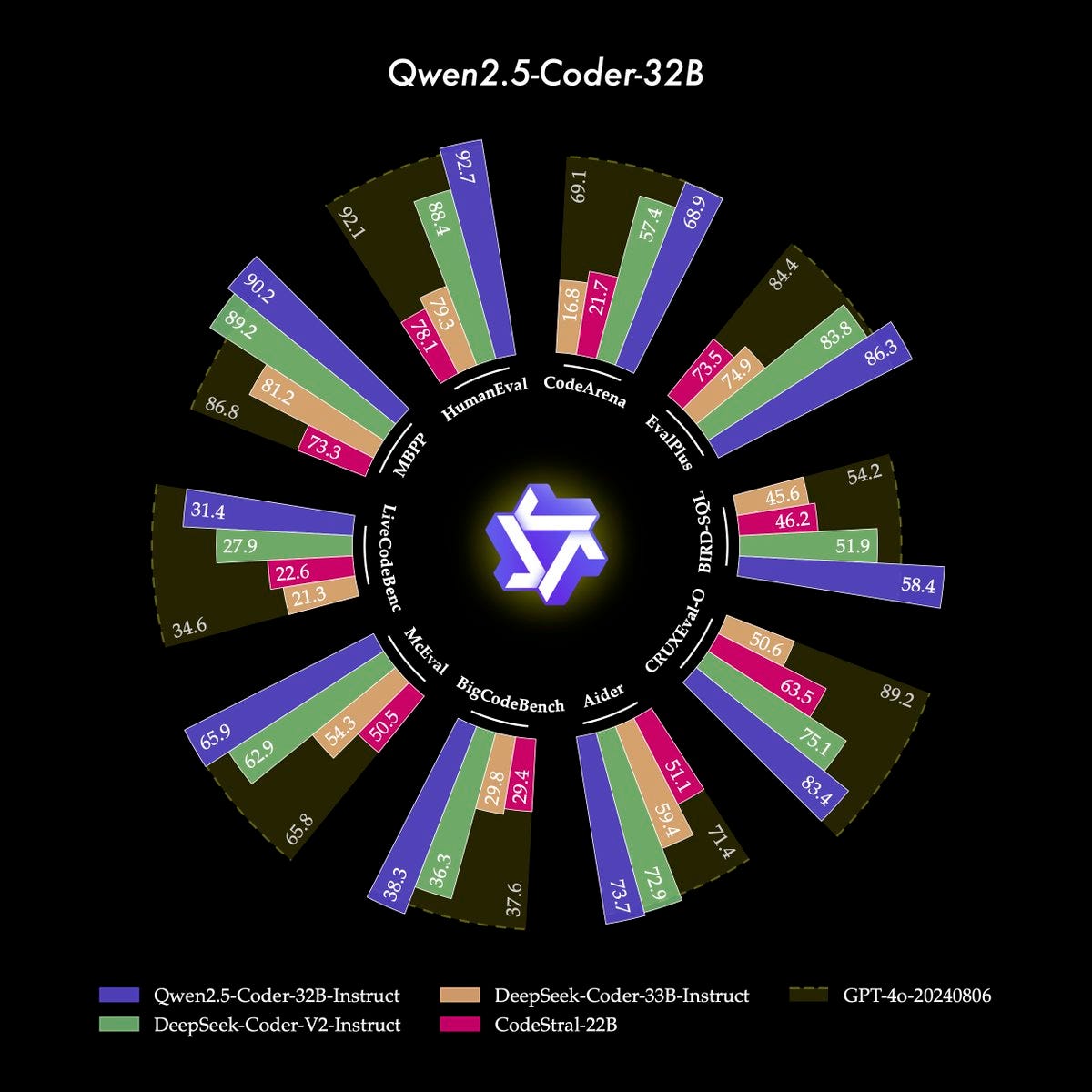

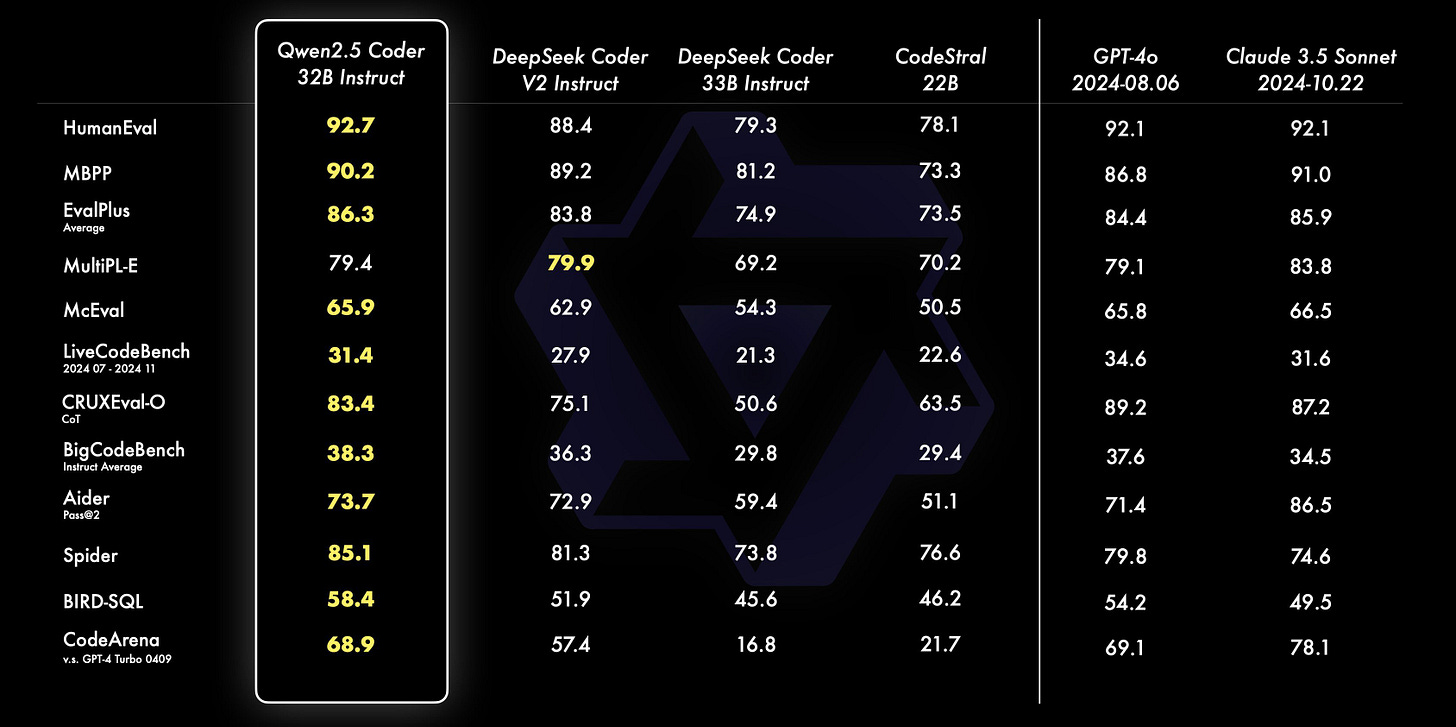

Our friends at Alibaba Qwen have done it again: they dropped a series of 6 models, from 0.5B all the way to 32B, most of them under the Apache 2.0 license, and the evals on the 32B are really, really mind-blowing.

Think about it this way: it's a 22GB file (if you download a quantized version) with fully open weights, comparable to Claude 3.5 Sonnet, that you can run on your own machine.
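Where does a number like 22GB come from? A rough rule of thumb is: weight-file size ≈ parameters × bits per weight ÷ 8. The sketch below assumes about 5.5 bits per weight on average, an assumption picked to roughly match the 22GB figure; real quantized formats mix precisions across tensors and add some metadata, so actual files will differ a bit.

```python
def weights_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in decimal GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * bits_per_weight bits / 8 bits-per-byte / 1e9 bytes-per-GB
    simplifies to params_billion * bits_per_weight / 8.
    """
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    # Nominal 32B parameters; the bit widths below are illustrative assumptions.
    for label, bits in [("fp16/bf16", 16), ("~8-bit", 8), ("~5.5-bit quant", 5.5), ("~4-bit quant", 4)]:
        print(f"{label:>15}: ~{weights_size_gb(32, bits):.0f} GB")
```

At 16 bits the same model would be around 64GB, which is why the quantized downloads are what make running it locally practical for most people.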

With a 92.7 score on HumanEval and 73.7 on Aider (which is becoming the standard benchmark for code editing), Qwen 2.5 Coder is not only the best open source coding model, it's now taking a stab at being one of the best coding models, period! Comparable to GPT-4o and Claude Sonnet, it's incredible to see how far the Qwen team has pushed this!

Supporting over 40 programming languages and coming in 6 sizes (0.5B, 1.5B, 3B, 7B, 14B, and 32B), these models are a very good fit for speeding up inference with speculative decoding, where a small model drafts tokens for a large one to verify.
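For the curious, here's roughly why having a family of sizes helps: in speculative decoding a small model (say the 0.5B) cheaply drafts a few tokens and the big one (say the 32B) verifies them, keeping only what it agrees with. Below is a minimal, greedy-only sketch in Python. The "models" are hypothetical toy functions, not Qwen; real implementations score all drafted tokens in one batched forward pass and use a rejection-sampling rule when sampling with temperature. It also assumes draft and target share a tokenizer, which is what makes same-family pairs convenient.

```python
from typing import Callable, List

# Toy stand-in for a language model: maps a token sequence to its greedy next token.
NextTokenFn = Callable[[List[int]], int]


def greedy_decode(model: NextTokenFn, prompt: List[int], max_new: int) -> List[int]:
    """Baseline: one (expensive) model call per generated token."""
    seq = list(prompt)
    for _ in range(max_new):
        seq.append(model(seq))
    return seq


def speculative_decode(target: NextTokenFn, draft: NextTokenFn,
                       prompt: List[int], max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: the draft proposes k tokens, the target verifies them.

    The output is identical to greedy_decode(target, ...); only the amount of
    expensive work per accepted token changes.
    """
    seq = list(prompt)
    while len(seq) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target checks every proposed position. A real implementation scores
        #    all k positions in ONE batched forward pass; we loop here for clarity.
        for i in range(k):
            expected = target(seq + proposal[:i])
            if expected != proposal[i]:
                # First disagreement: keep the accepted prefix plus the target's own token.
                seq.extend(proposal[:i])
                seq.append(expected)
                break
        else:
            # Every draft token was accepted; verification also yields one bonus token.
            seq.extend(proposal)
            seq.append(target(seq))
    # The last round may overshoot, so trim to exactly max_new new tokens.
    return seq[: len(prompt) + max_new]


def toy_target(seq: List[int]) -> int:
    """Hypothetical 'big' model: a deterministic rule over digits."""
    return (seq[-1] * 3 + 1) % 10


def toy_draft(seq: List[int]) -> int:
    """Hypothetical 'small' model: agrees with the target except after a 4."""
    return 9 if seq[-1] == 4 else toy_target(seq)


if __name__ == "__main__":
    print(speculative_decode(toy_target, toy_draft, [1], 12) == greedy_decode(toy_target, [1], 12))
```

Because the target only ever keeps tokens it would have produced itself, the output matches plain greedy decoding exactly; the speedup comes from how many draft tokens get accepted per expensive verification pass.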

Other supported features include in-place code editing, which enables things like... artifacts (similar to how Claude builds apps on the fly) and using the model inside Cursor, two features the Qwen team showcased with the release.

Congrats to the Qwen team on this momentous release with an incredibly permissive license!

P.S. In honor of this SOTA release, I used the upcoming Suno v4 to generate a new jingle for whenever we get a new SOTA model on ThursdAI 👏🎶

Athene V2 from NexusFlow beating GPT-4o?

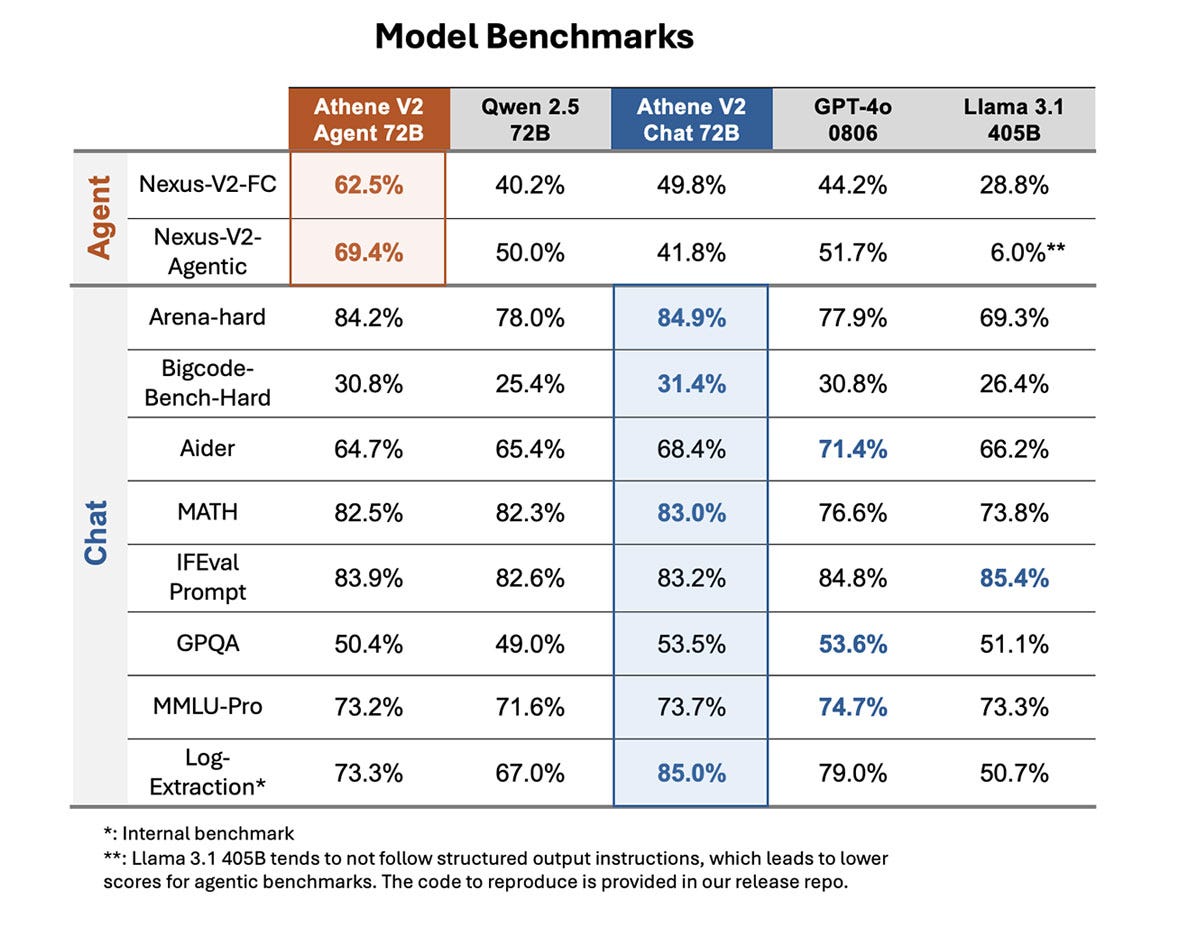

Not only did Qwen take the SOTA coding crown this week, Qwen 2.5 also served as the basis for 2 incredible new models from NexusFlow called Athene v2, which show impressive performance! (X, Blog, HF)

These models are finetunes of Qwen 2.5 72B, and they beat GPT-4o on the Arena-Hard and MATH benchmarks by a significant margin.

They released two models, one of which is specifically focused on agentic tasks and claims SOTA agentic capabilities (tho those benchmarks seem custom to Nexus).

Give them a try! The code is open source, but the models are under a research-only license.

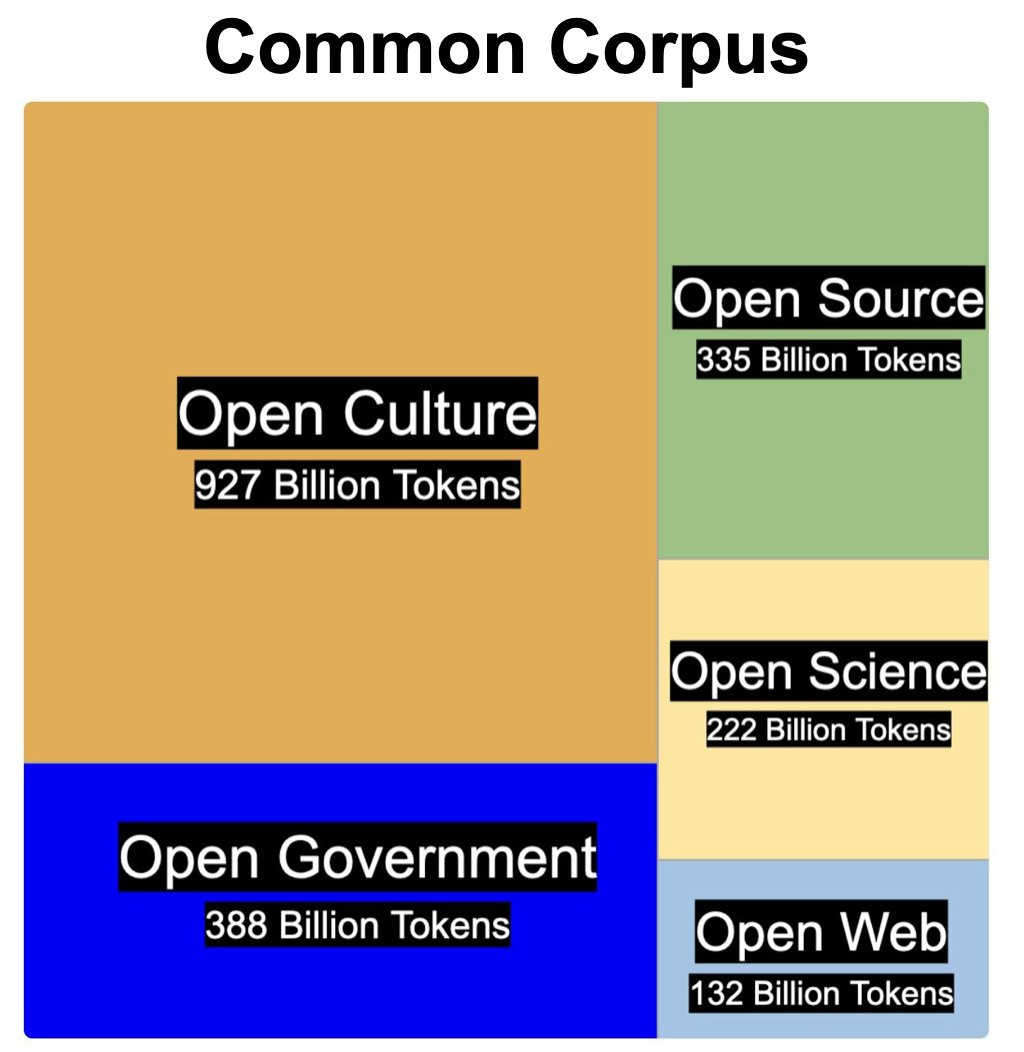

Honorable mention for the OSS chapter: Common Corpus

Common Corpus is a MASSIVE 2T-token dataset (blog) and the largest multilingual open pretraining dataset ever released! Massive, massive kudos to these folks! 🔥

Big Companies & APIs

👑 Google DeepMind Gemini 1114 - New #1 King of LLMs on LMArena

Out of the blue today, Logan Kilpatrick, PM for Google AI Studio (who likes dropping stuff right in the middle of ThursdAI), announced an updated experimental version of Google Gemini called 1114, together with an LMArena (previously LMSys) update showing that it's now the #1 overall LLM in the world. It beats o1-preview (with reasoning) at MATH 😮 and is #1 on Hard Prompts.

This is massive news! (Tho for now, 1114 comes with only a 32K context window, vs the 2M context window we've come to expect from Google.)

We all got super excited! Though when I started testing it on some very hard prompts, it didn't do VERY well; to be fair, those prompts are designed to trick language models!

I'll be playing around with this model some more as they open up the context window and let you know what I think!

In other Google news, Google AI Studio has shipped OpenAI SDK compatibility, which means that, as with other frontier labs, switching between Gemini, OpenAI, Grok and other models now takes just 2 lines of code. IMO Anthropic is the last major one that doesn't support this API, and I hope they will soon. 🤞
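To make the "2 lines of code" concrete, here's a minimal Python sketch using the OpenAI Python SDK (`pip install openai`): only `base_url` and `api_key` change per provider, plus the model name you pass. The base URLs are my assumptions of each provider's OpenAI-compatible endpoint (double-check their docs), and the environment variable names are hypothetical conventions.

```python
import os
from typing import Dict

# Assumed OpenAI-compatible base URLs (verify against each provider's docs).
# The env var names are hypothetical conventions, not requirements.
PROVIDERS: Dict[str, Dict[str, str]] = {
    "openai": {"base_url": "https://api.openai.com/v1", "key_env": "OPENAI_API_KEY"},
    "gemini": {"base_url": "https://generativelanguage.googleapis.com/v1beta/openai/",
               "key_env": "GEMINI_API_KEY"},
    "grok": {"base_url": "https://api.x.ai/v1", "key_env": "XAI_API_KEY"},
}


def client_kwargs(provider: str) -> Dict[str, str]:
    """Keyword arguments for openai.OpenAI(...): only base_url and api_key differ per provider."""
    cfg = PROVIDERS[provider]
    return {"base_url": cfg["base_url"], "api_key": os.environ.get(cfg["key_env"], "")}


def ask(provider: str, model: str, prompt: str) -> str:
    """Send one chat message through the OpenAI Python SDK."""
    from openai import OpenAI  # imported lazily so the config helper works without the SDK

    client = OpenAI(**client_kwargs(provider))
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content or ""
```

Usage would look like `ask("gemini", "gemini-exp-1114", "What wall?")`; the model id there is my assumption of how the experimental model is exposed in AI Studio, so check the model list in your account.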

This week's buzz - Weights & Biases corner

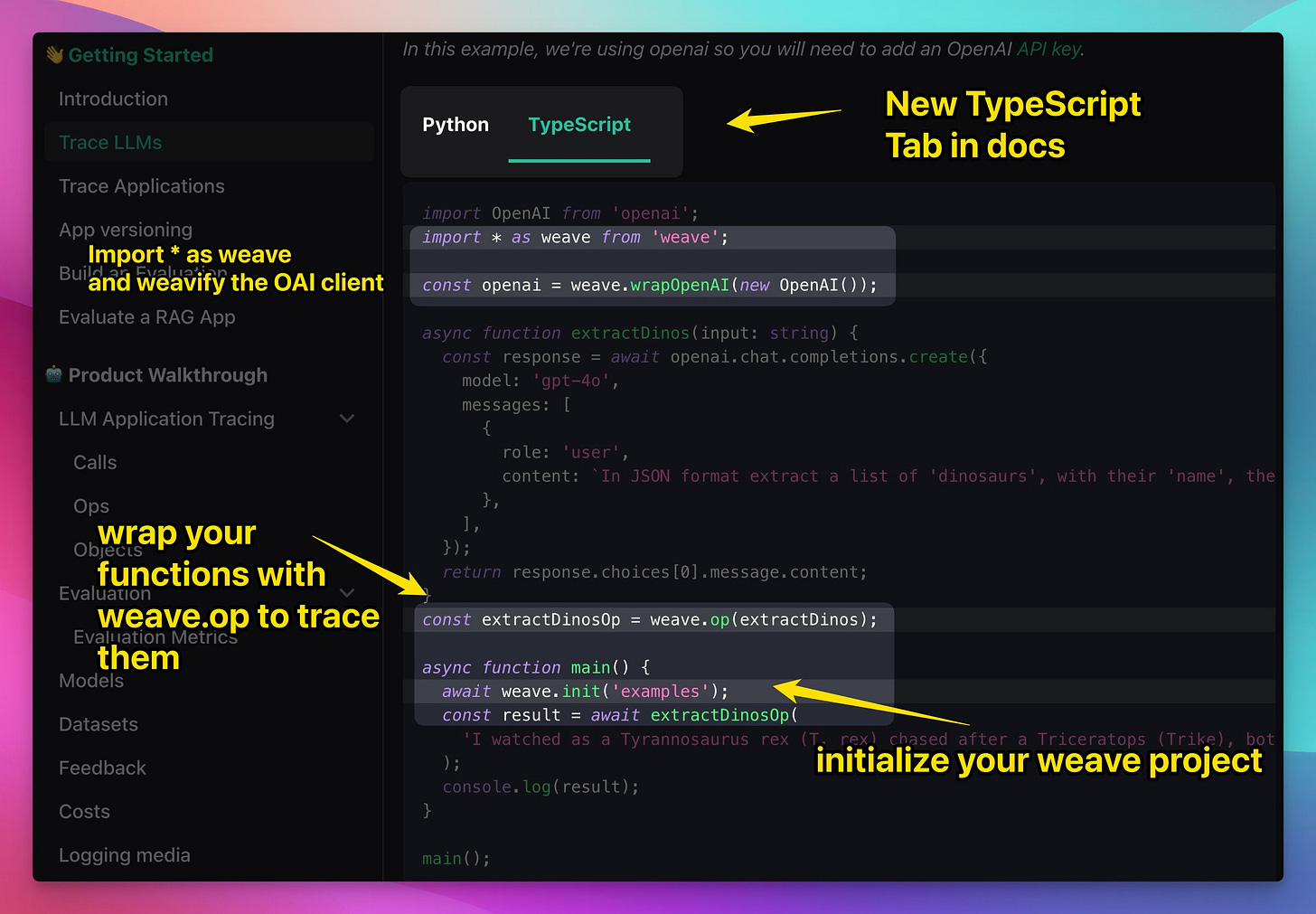

We're about to make some important announcements about Weave (coming in December, so stay tuned; more on this next week), but meanwhile, gearing up for that, the team shipped maybe the #1 most requested feature this week!

`npm i weave`

Weave JS/TS support, for those folks who don't use Python! We've also landed new docs for it, which I wanted to call out, as I've been asked about this many times and promised folks I'd tell them when it lands. It's super simple to get started; the real quick TL;DR is the one-liner above.

Please give this a try and let me know how it goes 🙏 (DM on X is great)

OpenAI integrates with macOS native apps (and releases a Windows app) + Greg is back!

In a very traditional ThursdAI drop (and a very traditional... "we're OpenAI, we can't let Google outship us" drop), OpenAI shipped two features for their desktop apps. The first: the native Windows app has been released to all users.

The second one, tho, is way cooler, and I got to test it live on the stream (which I LOVED doing btw; this is the second time that's happened, and it really adds to the excitement).

OpenAI has shipped the ability to integrate the native ChatGPT Mac app with apps like Visual Studio Code (not Cursor, mind you), Terminal, iTerm2, and Xcode! Once you grant the relevant permissions, ChatGPT gets context on what you see in those apps, so it can read and analyze what's written there.

You may ask, "So what? I could have copy-pasted it into the window myself, and also, Cursor already lets me do this," but I still find this very exciting: for many Xcode developers the process was very cumbersome, and this streamlines it (and you get a very clear indication that it's working with your code).

And no, so far there's no "Apply" functionality like in Cursor or Copilot; you have to copy the code back yourself, but I'm sure that will come at some point. Also, AVM (Advanced Voice Mode) doesn't support this yet (Kevin Weil, CPO of OpenAI, confirmed to me that it will soon), so soon you'll be able to "chat" about the code on your screen with an AI sidekick in real-time voice!

Voice & Audio

Speaking of AI sidekicks with realtime voice 👇

Fixie UltraVox - A fast multimodal LLM for real-time voice (Demo, HF, API)

This release kind of blew me away, as I didn't expect open models to get here so soon. Now, bear in mind, this isn't quite an Omni model like GPT-4o, so it doesn't "speak" like Advanced Voice Mode, but the folks at Fixie have found a way to train multimodality into the model, so there's no ASR (automatic speech recognition) step, and because of that, the conversation feels very natural and fast!

I've played with tons of these open source ones, from Moshi to Ichigo to playcat to the Meta AI ones, and this one sounds by far one of the most natural, and the closest to AVM from OAI.

The folks at Fixie claim that full duplex (having the model also speak while it listens) is on the roadmap as well.

Oh, and the kicker: it's based on Llama, and if you don't want to run it yourself, their API is 3x cheaper than OAI's Advanced Voice Mode!

Suno V4 is coming soon-o

I got invited to play around with the upcoming release of Suno, the AI music generation tool, and honestly I've been floored by some of the generations I've been getting. They have long since passed the uncanny valley, and some of the tracks are so, so good.

I took some videos on Monday and decided to test Suno out by just telling it: hey, Colorado, snow, bison, deer, sunny. It was amazing how well it fit the mood! Content creation will never be the same!

Tho you still have to sift through a few generations, good and bad, the good ones are... SO good!

TL;DR and Show Notes:

Open Source LLMs

Qwen 2.5 Coder 32B (+5 others) - Sonnet @ home (HF, Blog, Tech Report)

The End of Quantization? (X, Original Thread)

Epoch: FrontierMath, a new benchmark for advanced math reasoning in AI (Blog)

Common Corpus: Largest multilingual 2T token dataset (blog)

Big CO LLMs + APIs

This Week's Buzz

Weave JS/TS support is here 🙌

Voice & Audio

Suno v4 is coming and it's bonkers amazing (Alex Song, SOTA Jingle)

Tools demoed

Qwen artifacts - HF Demo

Tilde Galaxy - Interp Tool